What Should Be Checked at the First Phase of SEO Projects?

At Zeo, our SEO operation team works in different teams. As the "Volunteer Team", we wanted to prepare a guide on what to check first in an SEO project. In this article, you can find all our opinions on the subject.

Crawlability and Crawl Budget Optimization

The most important thing to do before starting a new SEO project is to analyze whether the site is crawlable by bots. Otherwise, the improvements we make are of no importance, unfortunately.

Let's see what we can do to check whether the sites are crawlable by search engine bots.

Robots.txt Check

The robots.txt file is the command center of a website. Creating a robots.txt file helps us make sure we are managing search engine requests correctly and optimizing the crawl budget well.

If you do not have a robots.txt file on your website, you can find out how to create one in the "What is Robots.txt File, Why is it Important?" content.

If you already have a robots.txt file, you need to make sure that search engine bots have access to the site (except for the URL paths, PDF files or parameters that you have deliberately closed to optimize the crawl budget).

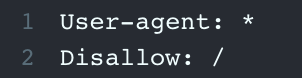

If your robots.txt file has the following commands, then no bots are crawling your site.

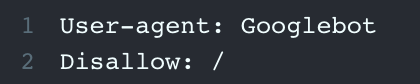

If your robots.txt file looks like the one below, it simply means that Googlebot cannot crawl your site.

In this case, you need to open the website to crawl requests from search engine bots with the "Allow" command.

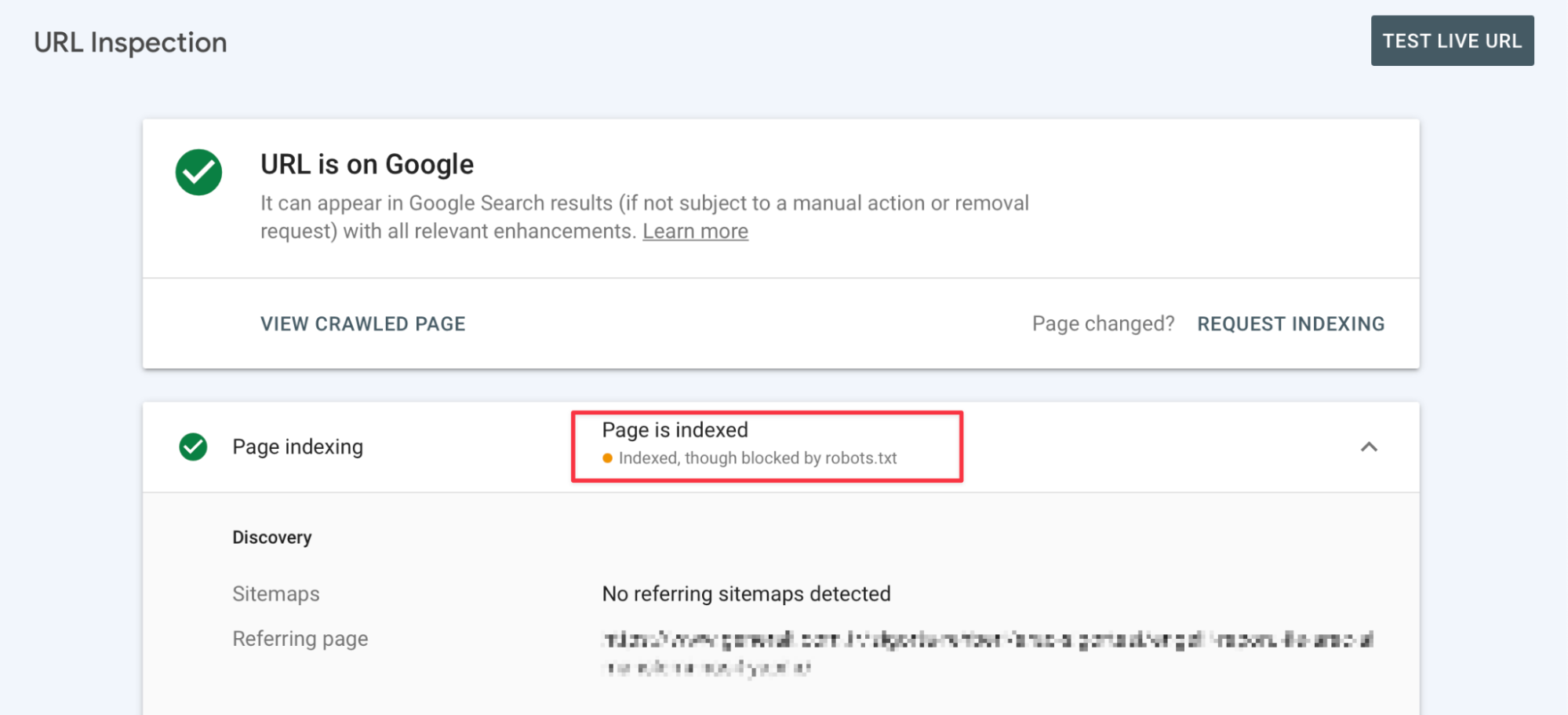
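The two cases above can also be checked with a short script. The following is a minimal sketch, assuming you paste the contents of the robots.txt file in as text; the two sample files and the `can_crawl` helper are hypothetical. It uses Python's standard `urllib.robotparser`, which does not reproduce every detail of how Google interprets robots.txt (for example, wildcards in paths), so treat it as a quick sanity check only.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical file that closes the whole site to every bot.
BLOCK_ALL = "User-agent: *\nDisallow: /"
# Hypothetical file that closes the whole site to Googlebot only.
BLOCK_GOOGLEBOT = "User-agent: Googlebot\nDisallow: /"

for name, rules in (("block all", BLOCK_ALL), ("block Googlebot", BLOCK_GOOGLEBOT)):
    for bot in ("Googlebot", "Bingbot"):
        allowed = can_crawl(rules, bot, "https://www.example.com/page")
        print(f"{name}: {bot} allowed = {allowed}")
```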

Google Search Console URL Inspection and Crawl Stats

Apart from the Robots.txt file, you can also check whether a specific URL is crawlable with the URL Inspection module of the Search Console tool.

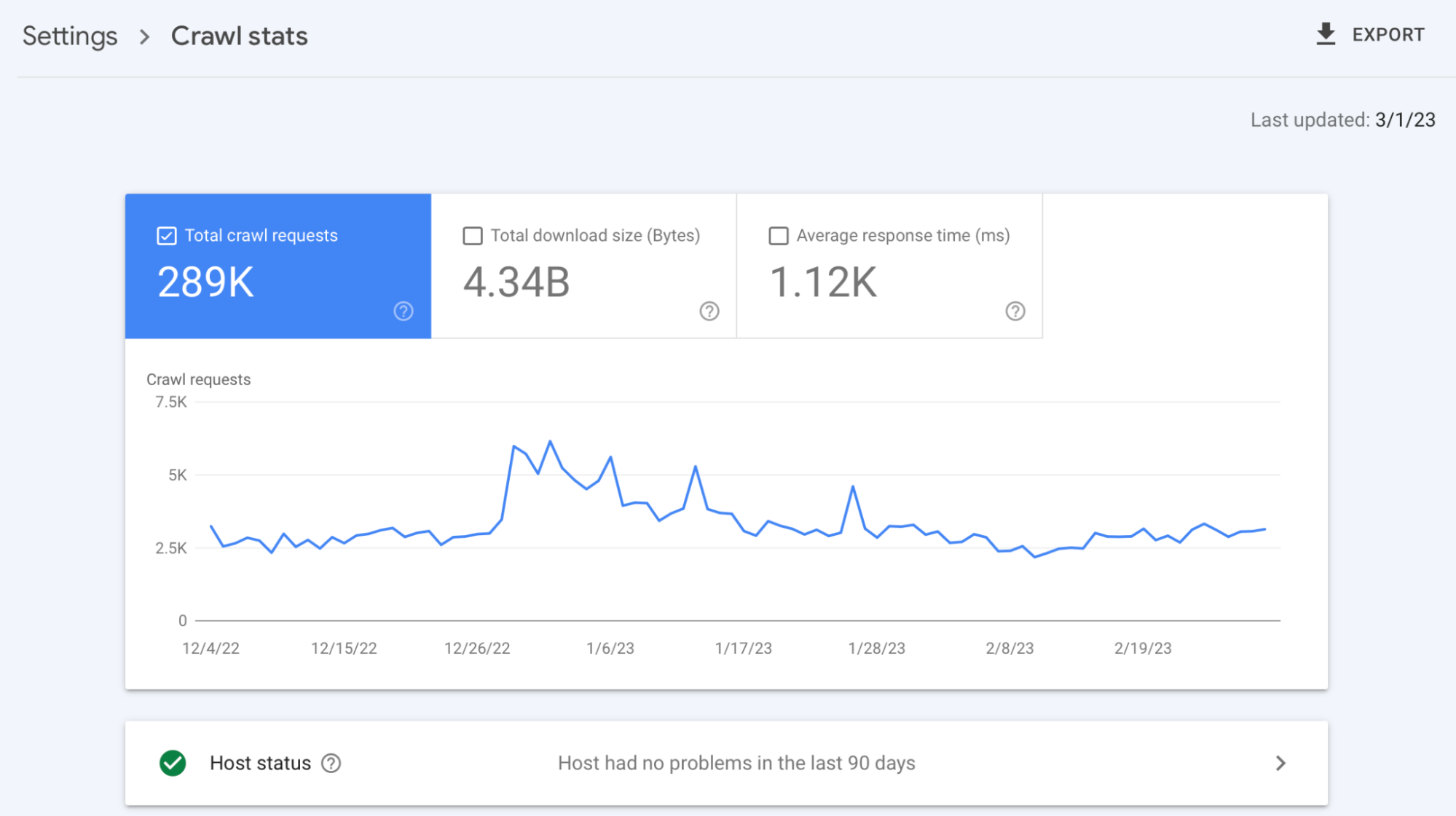

You can also check whether your website is crawlable by checking the crawl requests to your website from the "Crawl Stats" section in the Search Console tool.

Once you are sure that the site is not blocking requests from search engine bots, you need to make sure that a sitemap exists and that the pages are linked internally, because search engine bots need to discover a page before they can crawl it.

If you have a page that is not included in the sitemap or internal linking setup, you need to add it to the sitemap and include it in the internal link setup. Otherwise, it is close to impossible for this page to be discovered and crawled.

It is also necessary to check whether the internal links within the site carry the rel="nofollow" attribute. Search engine bots usually do not follow nofollow links and therefore may not crawl the pages they point to.
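As a rough illustration of this check, the sketch below scans a page's HTML for internal links marked nofollow. The `NofollowInternalLinks` class and the sample HTML are hypothetical; in a real project you would feed it the HTML of your own pages or rely on a crawler's report.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class NofollowInternalLinks(HTMLParser):
    """Collect internal <a> links whose rel attribute contains "nofollow"."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        rel_values = (attr.get("rel") or "").lower().split()
        if not href or "nofollow" not in rel_values:
            return
        target = urljoin(self.page_url, href)
        # Keep only links that stay on the same host (internal links).
        if urlparse(target).netloc == self.host:
            self.found.append(target)


SAMPLE_HTML = """
<a href="/category/shoes" rel="nofollow">Shoes</a>
<a href="/about">About</a>
<a href="https://other.example.org/" rel="nofollow">Partner</a>
"""

finder = NofollowInternalLinks("https://www.example.com/")
finder.feed(SAMPLE_HTML)
print(finder.found)
```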

If we are sure that our site is crawlable, it is time to make sure that it is "properly crawlable"!

Crawlability, of course, does not mean and does not require that all pages are crawled. We have a problem known as crawl budget, which means something different from the word "budget" we all think of first.

I think optimizing the crawl budget is very important for any site, large, medium or small. Why would Googlebot crawl a useless page like a search page instead of crawling the page that matters to me (unless of course the page that matters to me is a search page)?

Optimizing crawl budget is all about your site or strategy.

For example, if tag pages are actually category pages of your website and you get a lot of traffic from them, then there is no negative impact on your crawl budget.

To optimize the crawl budget, it is important to first know which pages search engine bots can crawl. For this process, you can examine the "Disallow" commands in the robots.txt file, perform manual reviews on the site, or use the Crawl Stats tool.

In manual reviews, you can detect parameterized URLs that are not closed to crawling in the robots.txt file, unnecessary URL paths, or pages such as internal search pages if you do not use them for organic traffic. Then, if the pages are not indexed, you can optimize your crawl budget by closing these pages, parameters, etc. to crawling with robots.txt.

But if these pages are in the index, you need to wait for them to fall out of the index by adding noindex to the pages first. Then you can close these pages to crawling with robots.txt.

By the way, if you want the pages you noindexed to fall off the index quickly, you can create a sitemap where these URLs are found so that search engine bots can reach these pages.
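A temporary sitemap like this can be generated with a few lines of code. This is only a sketch under the assumption that you already have the list of noindexed URLs; the URLs and the `build_sitemap` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical URLs that were just given a noindex tag.
noindexed_urls = [
    "https://www.example.com/search?q=shoes",
    "https://www.example.com/tag/old-campaign",
]
sitemap_xml = build_sitemap(noindexed_urls)
print(sitemap_xml)
```

Once the pages have dropped out of the index, the temporary sitemap can be removed and the URLs closed to crawling.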

Note: Not investment advice!

Let's say you didn't get the results you wanted from manual reviews or it wasn't enough, and let's examine how we can detect unnecessary URLs that are requested with Crawl Stats.

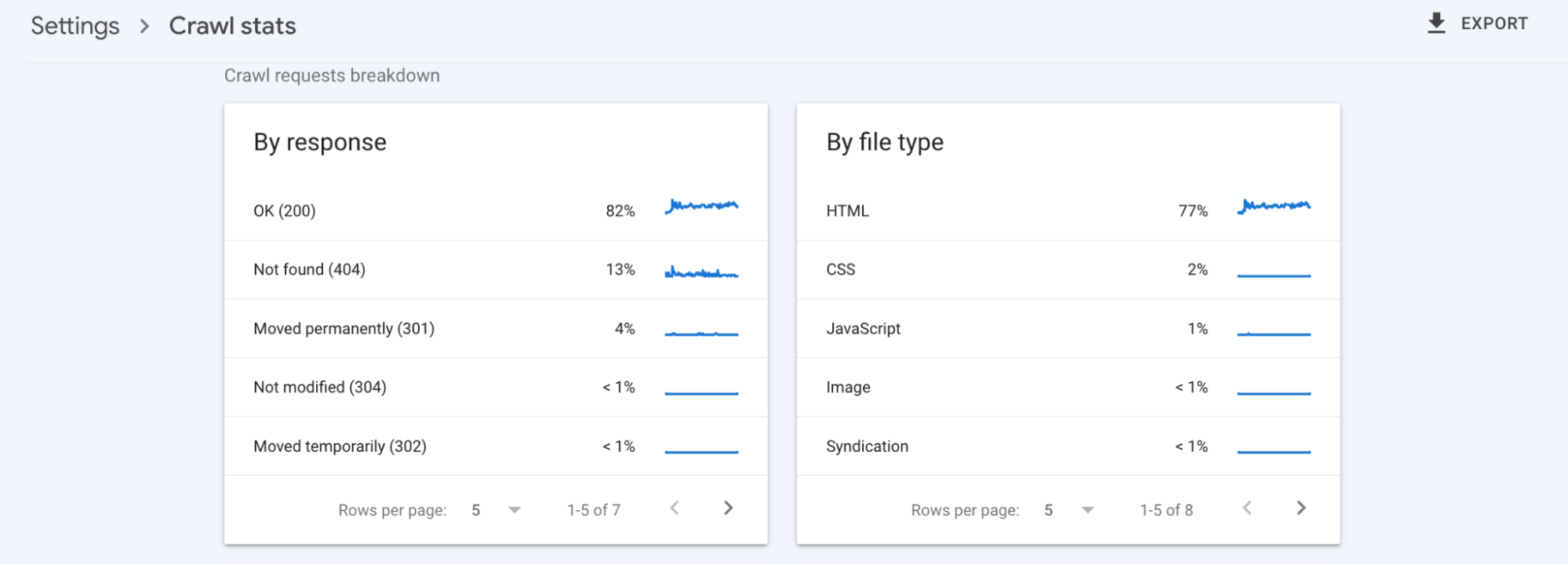

Crawl Stats is a tremendous tool that shows us the total number of requests Google bots made to our site, the file size downloaded in these requests, and the average response time of our site to bots as a result of these requests.

And that's not all! Here are its other tricks:

- Status codes returned for the requests,

- Which Google bot sent how many requests and to which pages,

- Which URLs were newly discovered and which were re-crawled,

- The file type of the requests (HTML, JS, CSS, Image, etc.).

The only downside is that it only shares 1K URLs.

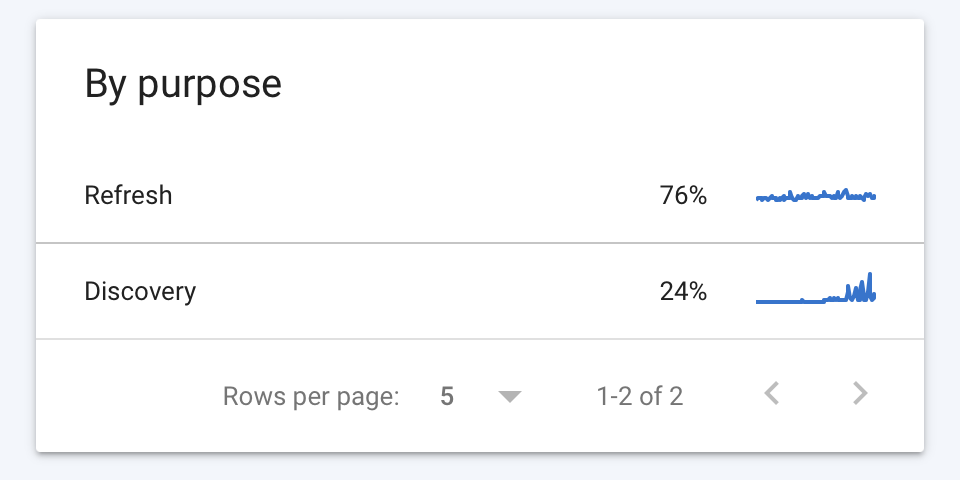

To optimize the crawl budget, let's first look at the "By purpose" field of the Crawl Stats tool.

Under the "By purpose" heading, we can see the URLs that Google bots have re-requested or discovered for the first time.

Under Refresh and Discovery, we can analyze newly discovered or re-requested URLs that negatively affect our crawl budget.

For example, if Googlebots frequently make requests to a page path with low added value, we can consider closing these pages to crawling.

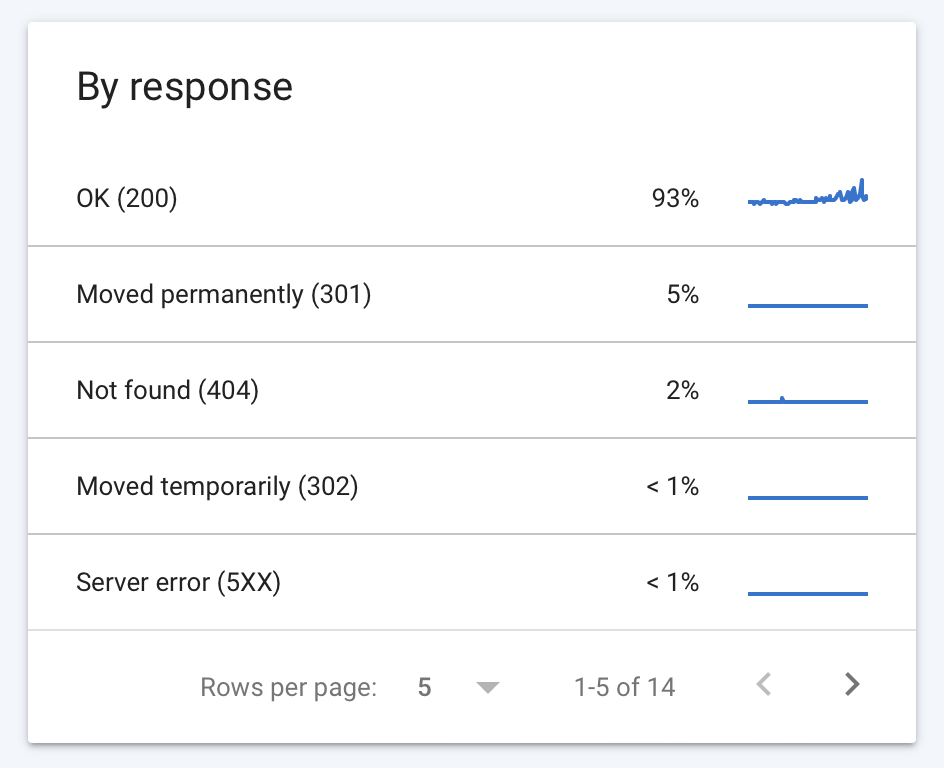

Under the "By response" heading, we can see the status codes returned by the URLs in response to the requests made by Google bots.

Remember that it's not just unnecessary pages that negatively affect the crawl budget.

Search engine bots requesting too many URLs with status codes such as 301, 404 and 500 also negatively affects your crawl budget. In fact, if the number of pages returning status codes other than 200 increases too much, it can sometimes negatively affect your rankings.
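If you export the requested URLs (from Crawl Stats or from server logs), the share of non-200 responses can be calculated quickly. The sketch below assumes the export has already been reduced to (URL, status code) pairs; the sample rows and the `status_summary` helper are hypothetical.

```python
from collections import Counter


def status_summary(crawl_rows):
    """Count status codes and return (counts, share of non-200 responses)."""
    counts = Counter(status for _url, status in crawl_rows)
    total = sum(counts.values())
    non_200 = total - counts.get(200, 0)
    share = non_200 / total if total else 0.0
    return counts, share


# Hypothetical sample of bot requests: (URL, status code).
rows = [
    ("/category/shoes", 200),
    ("/category/bags", 200),
    ("/old-campaign", 301),
    ("/deleted-product", 404),
]
counts, non_200_share = status_summary(rows)
print(dict(counts), f"non-200 share: {non_200_share:.0%}")
```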

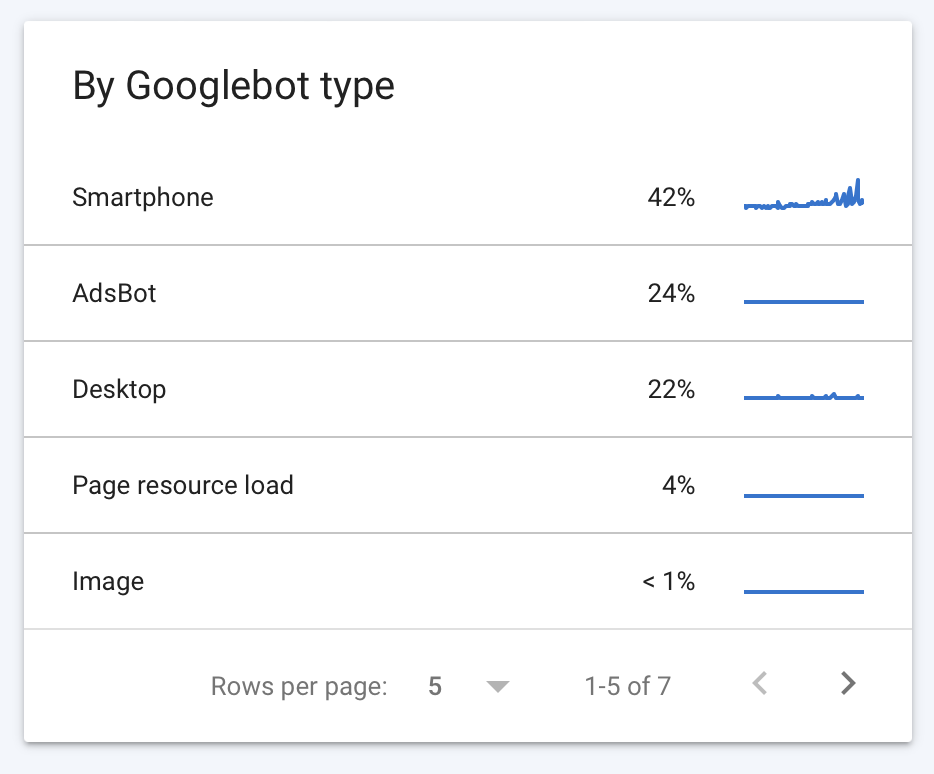

Under the "By Googlebot type" heading, we can see which Google bots have how many requests and what kind of pages they request.

For example, if there are URLs created only for Ads on our site; by detecting them, we can close this URL path to search engine bots with a robots.txt file and open it only for Google Adsbot to crawl.

Let's see how we can do this with a small example.

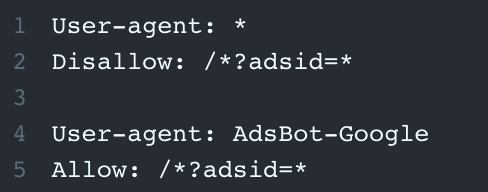

Let's assume that we have URLs with the parameter ?adsid= created for ads and that they are open to crawling by all bots.

With the following robots.txt commands, we can close this parameter to all search engine bots and open it only to Adsbot.

Bots do not simply apply the robots.txt file from top to bottom: each bot follows the group that most specifically matches its user agent, so Adsbot obeys the "Allow" command in its own group instead of the general Disallow command.
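To make the logic concrete, here is a simplified model of how a bot could resolve these rules: pick the group that best matches the user agent, then let the longest matching path pattern decide. This is a sketch written for illustration, not Google's actual parser; the rule set, the `adsbot-google` token matching and the helper names are assumptions.

```python
import re

# Hypothetical rule groups: user-agent token -> list of (directive, path pattern).
ROBOTS_GROUPS = {
    "*": [("disallow", "/*?adsid=")],
    "adsbot-google": [("allow", "/*?adsid=")],
}


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, optional '$' end anchor)."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile("^" + regex + ("$" if anchored else ""))


def is_allowed(user_agent: str, path: str, groups=ROBOTS_GROUPS) -> bool:
    # 1) Use the most specific named group that matches, else fall back to '*'.
    ua = user_agent.lower()
    named = [token for token in groups if token != "*" and token in ua]
    rules = groups[max(named, key=len)] if named else groups.get("*", [])
    # 2) The longest matching pattern wins; on a tie, allow wins.
    best = (-1, True)  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if pattern and pattern_to_regex(pattern).match(path):
            best = max(best, (len(pattern), directive == "allow"))
    return best[1]


print(is_allowed("Googlebot", "/product?adsid=42"))
print(is_allowed("AdsBot-Google", "/product?adsid=42"))
```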

I hope we were able to solve the crawlability problem and crawl budget optimization together in the first phase :)

Analyzing Search Console to Master Project History

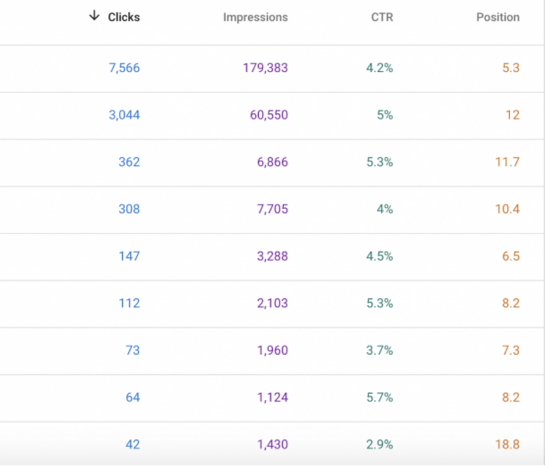

When a new SEO project arrives, the project's history is very important for steering its future. Once you have access to the project's Search Console and Analytics accounts, a quick look at the site's history will allow you to better understand the project and see whether there is or has been any negative situation.

First of all, as we always do in a Search Console account, it will be useful to look at the click, impression, CTR and position values of the site for the last 16 months in the Search Results section. Check whether the site has experienced a sharp decline or increase in this 16-month period, compare recent months with the preceding months and with the same months of the previous year, and look for anything unusual. At this point, taking the recent Google Core Updates into account and laying out in a table how the site came out of each update can help you better understand the status of the site along with the content of each Core Update.

Of course, it may be useful to check the Google Analytics account for organic traffic and other source data. It is also useful to analyze the site's history in more detail with 3rd party tools such as Ahrefs, Semrush and SearchMetrics.

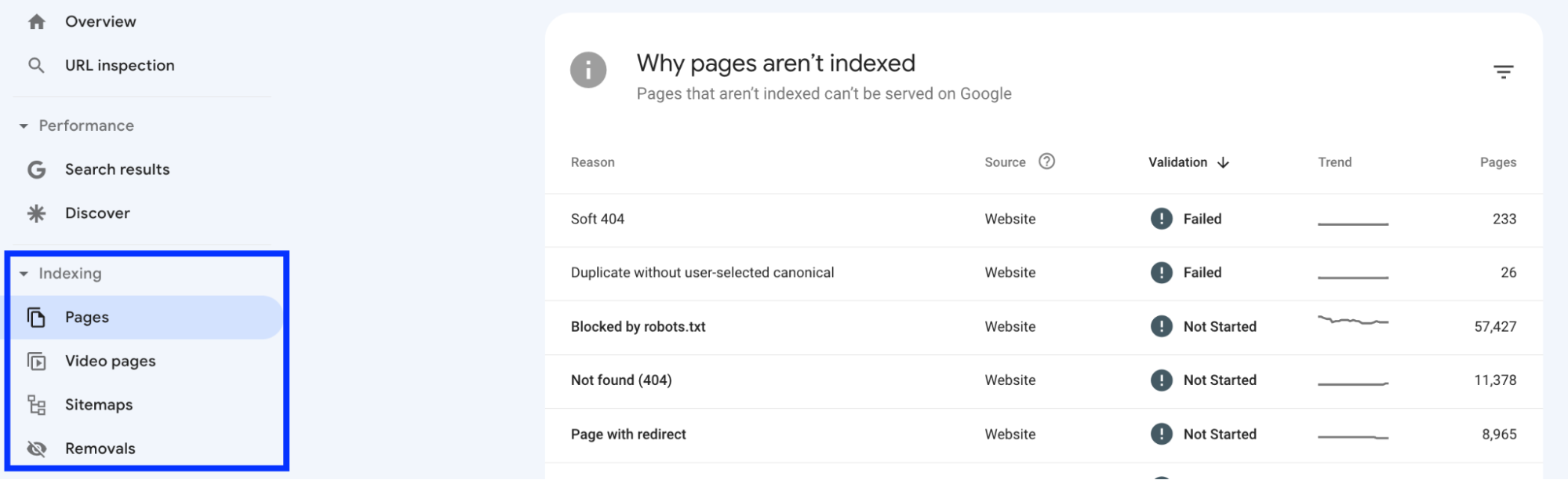

In addition to traffic data, the Search Console account gives us a lot of other information. Examining the warnings that pages have recently received in the Pages tab of the Indexing section, and looking at the reasons why pages are not indexed, will also help to catch major problems in the project, if any.

From the Sitemaps tab, it is also necessary to check if there are previously uploaded sitemaps and to check whether they are consistent with the sitemap pointed to by robots.txt, whether there are different sitemaps, etc.

In the Video pages section under Indexing, you can get information about the status of the videos on the site. In the Removals section, you can check whether pages on the site have been temporarily removed, whether there are outdated content requests and whether any pages have been reported under SafeSearch filtering.

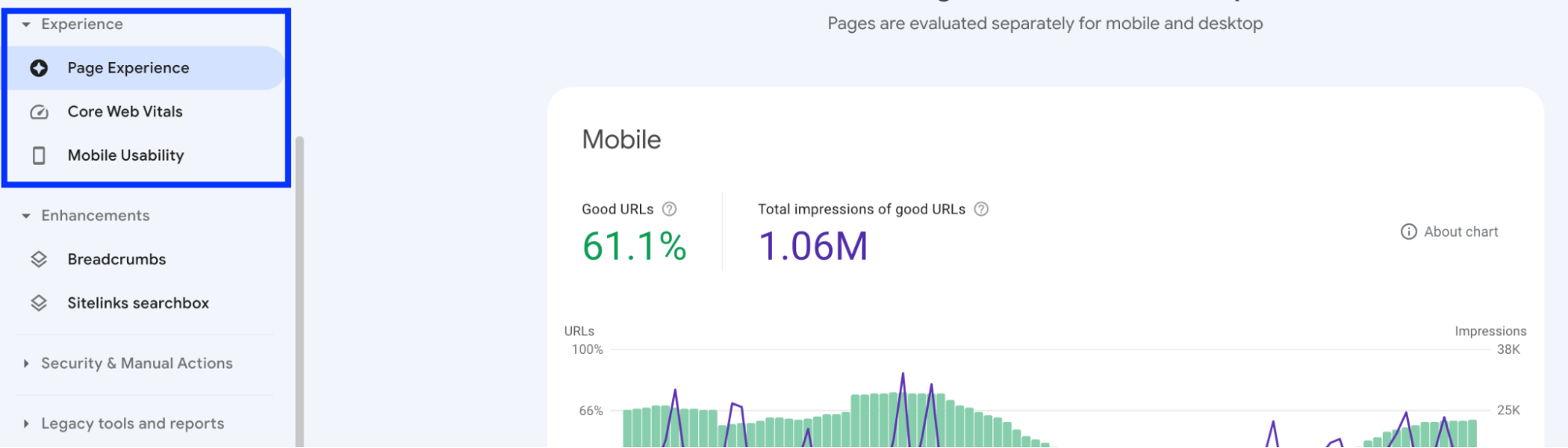

From the Experience tab, you can review the page experience report of the site for the last 3 months. Here you can look at the change in Core Web Vitals metrics over the last 3 months and check if there has been a negative situation. In addition, in the Mobile Usability tab, if there are pages with a bad mobile experience, you can find them and quickly check the reasons. You can also check the HTTPS report from this tab. If there are pages without HTTPS, you can easily see from this tab.

Another tab is the Security & Manual Actions section, which is perhaps one of the most important. Questions such as whether the site is currently under a manual action, whether it has been under one in previous years, and whether it has a security problem are very important. If it has been under a manual action in previous years, it is necessary to examine in detail the reasons for this, how it was resolved, what time interval it covered and how organic traffic was affected in that interval.

If there is a violation for any reason in the Security issues tab, it is necessary to talk about what is the reason for this and how we can quickly resolve it at the very beginning of the project (Hacked content, malware, etc.).

In one of my projects last year, while checking Search Console, I saw that the site was under a manual action. When I examined the reason, I saw that many unnatural backlinks were pointing to the site. When I examined the linking sites, I found that the brand's test site in particular was not closed to crawling and that many links came from it. In addition, many links were coming from nearly 500 different spam sites with the same content and the same anchor word. At this point, we quickly closed the test site to crawling and disavowed all the harmful links after a link analysis. Then we reported this situation to Google and the manual action was quickly lifted.

In the Links tab, we can see both internal links and external links. At this point, we can quickly analyze the sites that link to us, both here and with the help of 3rd party tools.

Finally, in the Settings section, we can access the site's Crawl stats and see whether it is crawled with mobile-first indexing. The Crawl stats tab can give us an insight, especially in terms of crawl budget. We can quickly check which type of Google bot mostly visits our site, and which status codes and file types the bots encounter the most. In addition, we can also look at the total number of crawl requests and the average server response time for the last 3 months. Quick checks here will actually show us the existing and past problems of the site.

Mobile-first indexing, mentioned above, is actually a critical issue for the site.

It will also be important to quickly check our site with Zeo's MFI tool and see if there are differences between the mobile and desktop versions of the site in terms of content, meta tags, images and number of links.

Internal Links

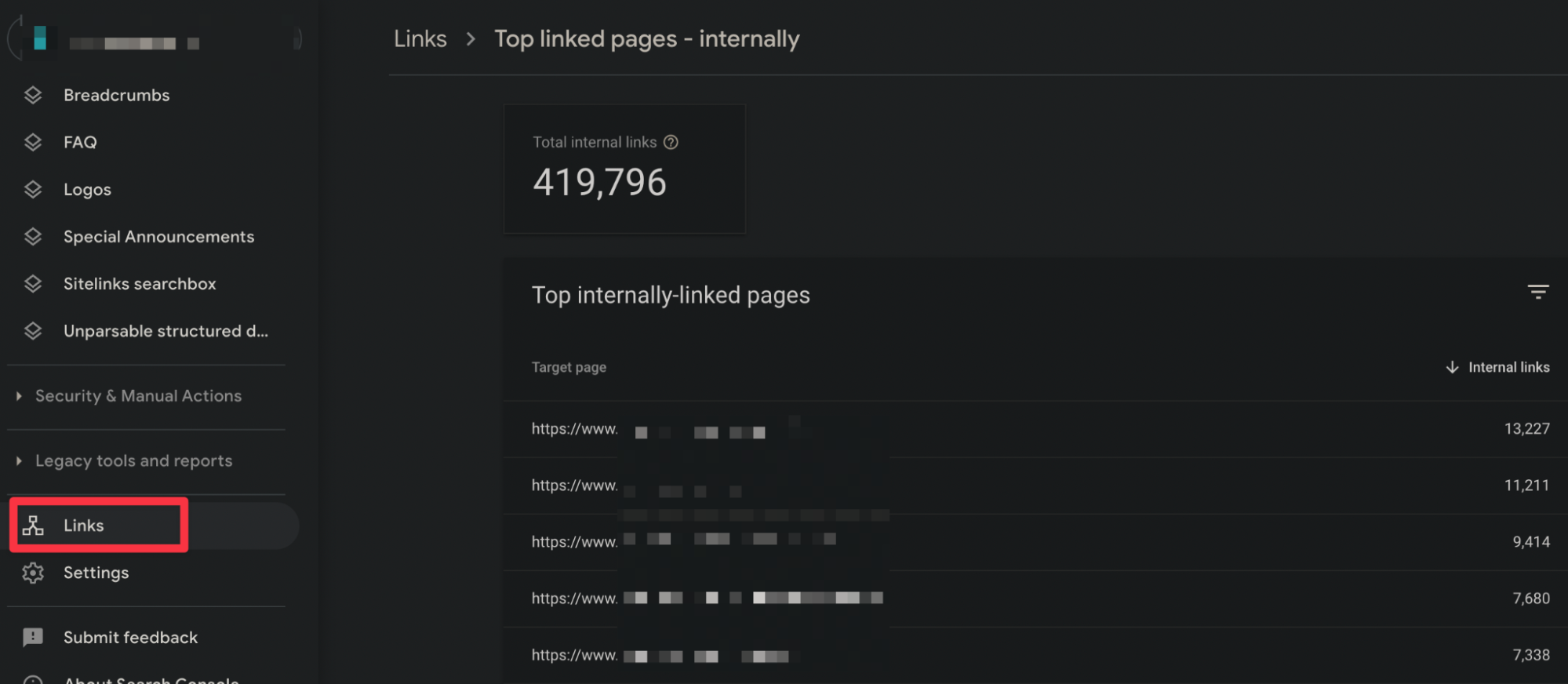

By checking the internal link structure when the SEO project first starts, you can earn more revenue from your very important pages. Since our topic is the control phase, I tried to convey some simple but effective suggestions. Of course, you can also pay attention to topics such as Topic Clusters in the future. After verifying your site with Google Search Console, you can learn the most linked pages on your site according to Google from the section below:

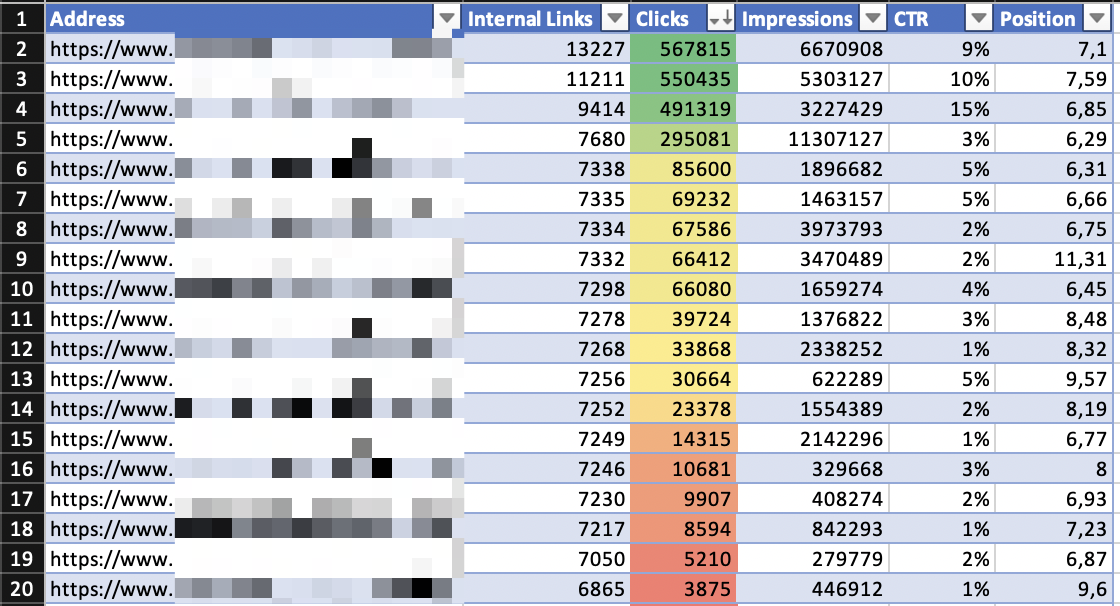

You can download these pages with export and find out how much organic traffic they receive using the Search Console API. In this way, you can identify pages that receive many internal links but get too few clicks or impressions, and take action by adjusting the internal linking. If there are pages that need to be blocked, you can block them from crawling with the help of robots.txt:

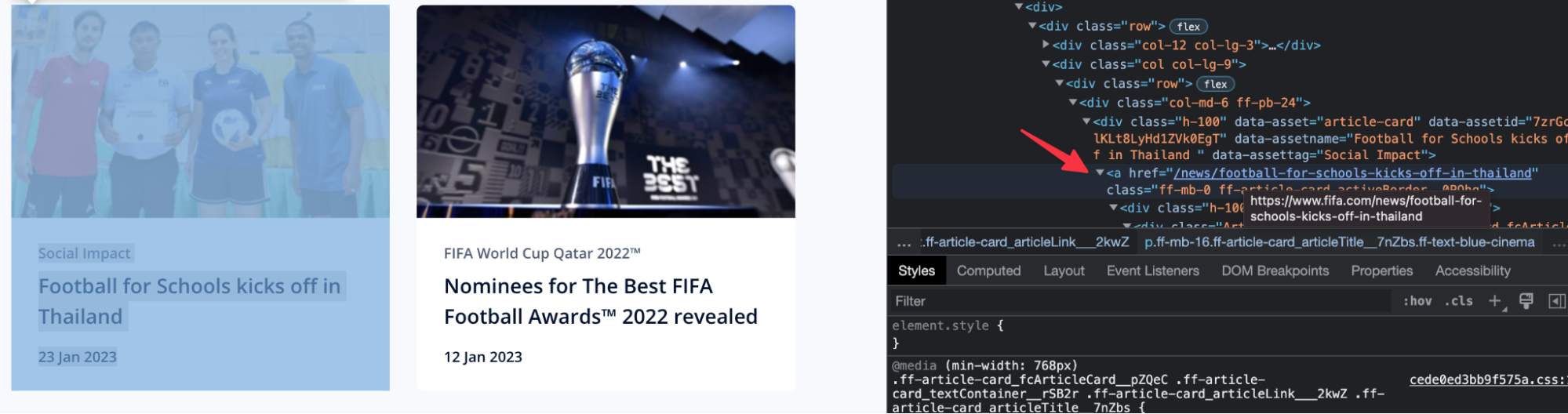
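Comparing the two exports can be done with a few lines of code. The sketch below assumes the internal link counts and the click data have already been loaded into dictionaries keyed by URL; the thresholds (`min_links`, `max_clicks`) and the sample data are hypothetical and should be adapted to the size of your site.

```python
def overlinked_underperformers(internal_links, clicks, min_links=100, max_clicks=10):
    """Return URLs with many internal links but few organic clicks.

    internal_links: {url: number of internal links pointing to it}
    clicks: {url: organic clicks in the selected period}
    """
    return sorted(
        url
        for url, links in internal_links.items()
        if links >= min_links and clicks.get(url, 0) <= max_clicks
    )


# Hypothetical exports.
internal_links = {"/a": 500, "/b": 300, "/c": 20}
clicks = {"/a": 1200, "/b": 3}

candidates = overlinked_underperformers(internal_links, clicks)
print(candidates)
```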

One of the most common shortcomings I see is the use of "onclick" in internal links, especially on JavaScript-based sites. Google recommends that these links be given with "a href", so I strongly recommend that you test this kind of usage in the internal link structure:

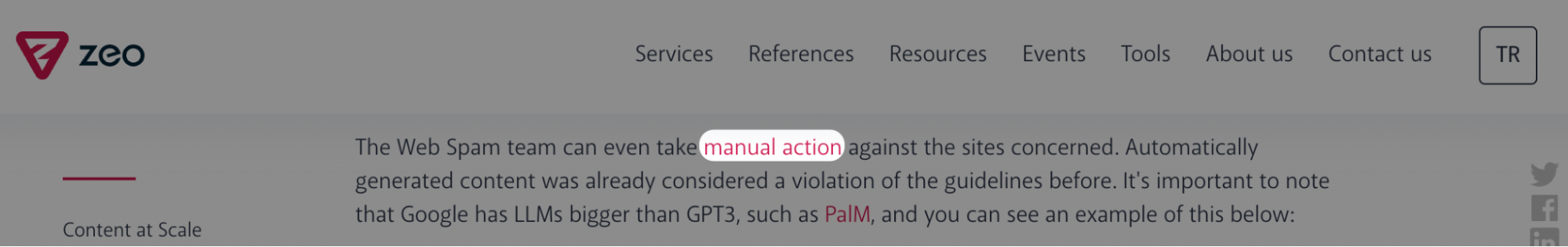
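A quick way to spot this pattern is to look for elements that carry an onclick handler but are not real links with an href. The following is a minimal sketch on static HTML; the `OnclickLinkFinder` class and the sample markup are hypothetical, and the results need a manual review because not every onclick handler is used for navigation.

```python
from html.parser import HTMLParser


class OnclickLinkFinder(HTMLParser):
    """Collect elements that have onclick but are not <a> tags with an href."""

    def __init__(self):
        super().__init__()
        self.suspects = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        has_real_link = tag == "a" and bool(attr.get("href"))
        if "onclick" in attr and not has_real_link:
            self.suspects.append((tag, attr["onclick"]))


SAMPLE_MENU = """
<span onclick="go('/shoes')">Shoes</span>
<a href="/bags" onclick="track()">Bags</a>
<a onclick="go('/hats')">Hats</a>
"""

link_finder = OnclickLinkFinder()
link_finder.feed(SAMPLE_MENU)
print(link_finder.suspects)
```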

Anchor texts used in in-site links should tell users very clearly which page they will go to. For example, if you are referring to a topic related to manual actions, a phrase such as "you can visit our Google penalties page on the subject" will be very healthy. You can also pay attention to these uses in the first phase of SEO projects.

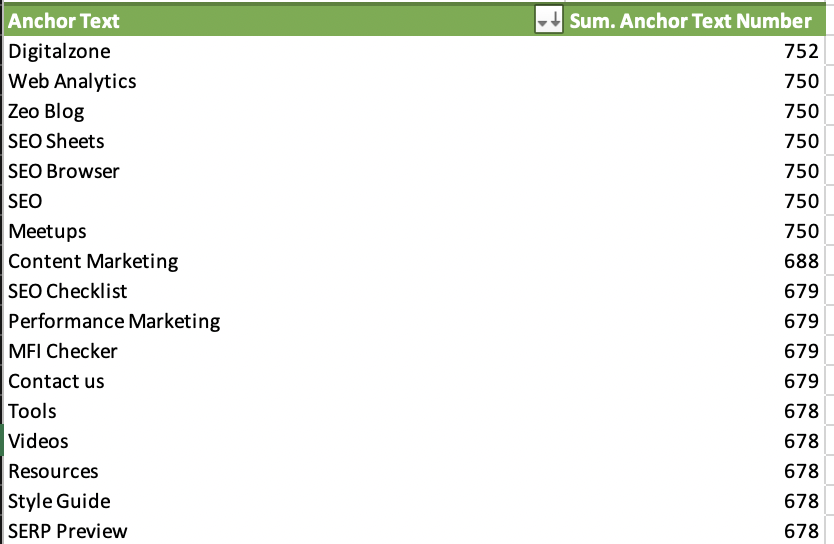

You can analyze the anchor texts of internal links and check how many times each anchor text is used within the site. It is normal for anchor texts such as home page, site name or site name logo to have high counts. Here, you can optimize by taking a look at anchor texts that do not provide information about the page, such as "from here". Below you can see the anchor text distribution for Zeo's pages, crawled up to a certain number of URLs:

Avoiding mistakes such as linking to the "camping chair" page with the word "ballet shoes" will also be beneficial for the performance of the pages.

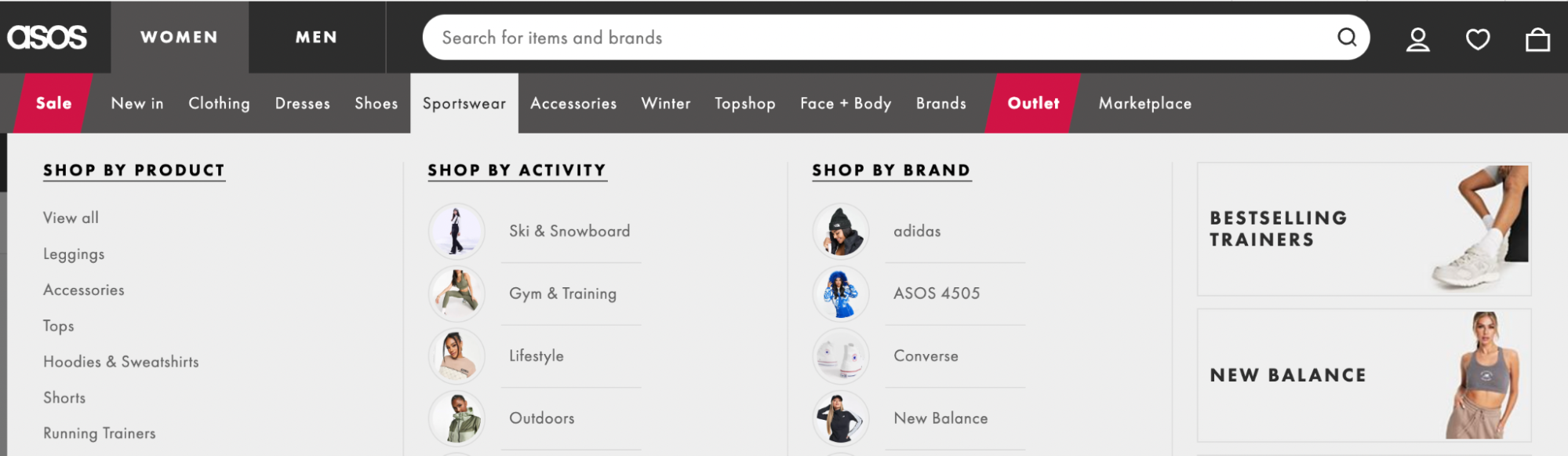
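If your crawler exports the anchor texts of internal links, a distribution like this can be produced with a simple count. The sketch below assumes a plain list of anchor texts; the list of generic anchors and the sample data are hypothetical.

```python
from collections import Counter

# Hypothetical list of anchors that say nothing about the target page.
GENERIC_ANCHORS = {"from here", "click here", "here", "read more"}


def anchor_report(anchors):
    """Return (counts per anchor text, counts of generic anchors only)."""
    counts = Counter(text.strip().lower() for text in anchors)
    generic = {text: n for text, n in counts.items() if text in GENERIC_ANCHORS}
    return counts, generic


# Hypothetical anchor texts taken from a crawl export.
anchors = ["Home page", "from here", "From here ", "camping chair"]
anchor_counts, generic_anchors = anchor_report(anchors)
print(anchor_counts.most_common())
print(generic_anchors)
```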

Since areas such as the header or footer are repeated on almost every page within the site, bulk optimization of the links here should be a much higher priority in terms of impact on SEO. For example, let's say you have an e-commerce site and the categories in your menu consist of URLs that redirect with 301. Naturally, it will be more difficult for Google to reach the final URLs of the pages you redirect to. Therefore, you should confirm that the internal links to your important pages (especially those in the top 100 according to Search Console) point directly to their final URLs:

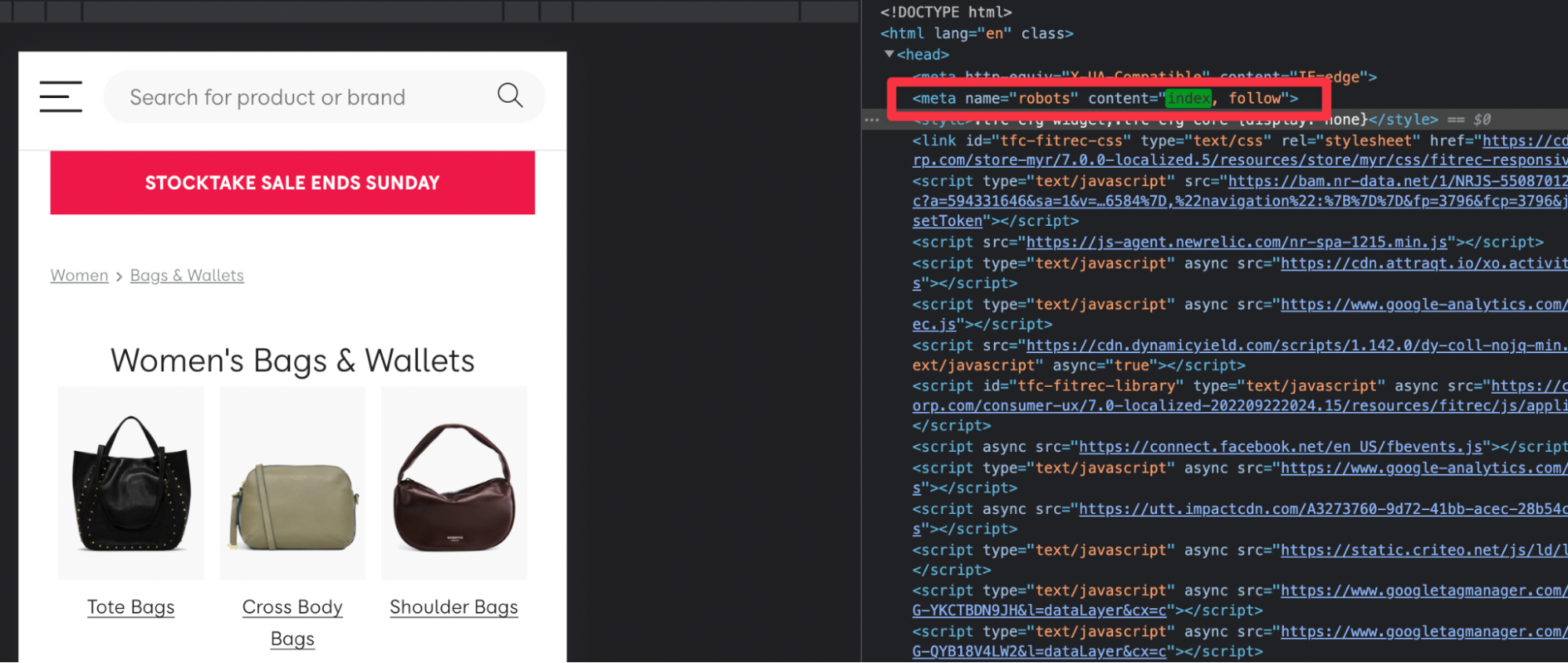
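Once you have a redirect map from a crawl, finding the menu links that should be updated is straightforward. This is a sketch that works on an already collected map and makes no network requests; the paths, the `final_url` and `links_to_update` helpers and the hop limit are hypothetical.

```python
def final_url(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map and return the final URL."""
    seen = set()
    # Stop on unknown URLs, on loops, or after max_hops redirects.
    while url in redirects and url not in seen and len(seen) < max_hops:
        seen.add(url)
        url = redirects[url]
    return url


def links_to_update(menu_links, redirects):
    """Map each redirecting menu link to the final URL it should point to."""
    return {link: final_url(link, redirects) for link in menu_links if link in redirects}


# Hypothetical 301 map collected from a crawl.
redirects = {"/women": "/women-clothing", "/women-clothing": "/women-clothing/"}
menu_links = ["/women", "/men"]

updates = links_to_update(menu_links, redirects)
print(updates)
```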

In addition to making sure your valuable pages are crawled, you should also check that they are not excluded from the index with the "noindex" tag. Even if you use in-site link building efficiently, important pages that are not indexed will not bring visitors to your site from the organic channel. Therefore, be sure to reconfirm that your important pages can be indexed:
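As one way to run this check in bulk, the sketch below looks for a noindex directive in the robots meta tag and in the X-Robots-Tag response header. It assumes you already have the page HTML and headers at hand (for example from a crawl); the `is_noindexed` helper and sample inputs are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Detect <meta name="robots" ...> or <meta name="googlebot" ...> with noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        content = (attr.get("content") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def is_noindexed(html, headers=None):
    """Return True if the HTML or the X-Robots-Tag header contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    x_robots = (headers or {}).get("X-Robots-Tag", "")
    return parser.noindex or "noindex" in x_robots.lower()


print(is_noindexed('<meta name="robots" content="noindex, follow">'))
print(is_noindexed('<meta name="robots" content="index, follow">'))
```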

Content Optimization

We always say how important it is to produce content. But there is another issue at least as important as producing content: Optimization of Existing Content.

Especially when you are about to start a new project, it is very important to review the status of your existing content. So, what do we actually mean by "reviewing the current situation"? Let's take a closer look together.

Optimization of Existing Content

Once you produce a piece of content, you cannot forget it. Because if you forget your content, your content will forget you. It will never contribute enough to you again. Remember that as important as the production phase is, it is just as important to track the performance of your content and optimize accordingly!

In particular, you may have a lot of content that doesn't bring any traffic to your website, doesn't get any clicks, doesn't accompany your target audience at any stage in the buying journey, doesn't bring backlinks, doesn't rank high in search engines or doesn't do anything to create brand awareness. Your priority should be to identify this "inefficient" content and optimize it.

Diagnosis

First of all, you can use two important tools, Google Search Console and Google Analytics, to identify content that is not performing well. For example, in Search Console, you can take a look at the data provided by the performance page, and by selecting the relevant metrics and time intervals from there, you can easily identify your content that is not getting traffic, experiencing a ranking drop, or experiencing a click drop.

You can also select the time interval to see how long these changes have been happening. For example, let's say a piece of your content has lost significant traffic in the last 3 months and has fallen far behind in the rankings. At this stage, you can also use tools such as Ahrefs and SEMrush to see your competitors who experienced a rise during the period when you experienced a decline, and you can easily identify what you are missing from them.

So, what should we do after identifying such inefficient content? If you didn't get the conversion you wanted, it means that your content is doing something wrong. Therefore, you should diagnose the missing points and apply the appropriate treatment.

Without wasting any more time, let's move on to the treatment phase, that is, how to optimize this content step by step.

1. Meta Title and Description Optimization

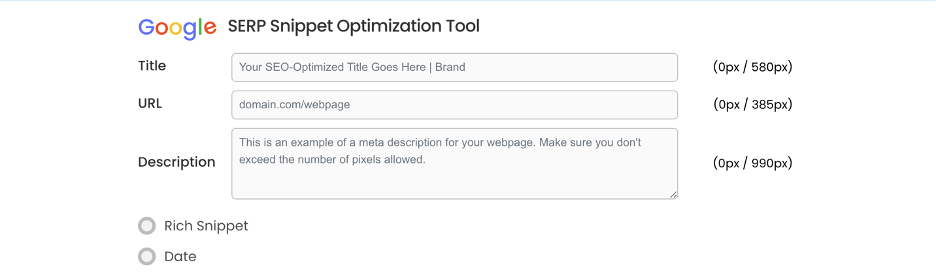

Meta title is a ranking criterion, so our title edits are very important. You can get more clicks with title optimization alone. While meta description is not a ranking criterion, it is very useful to encourage potential visitors to your content. With a title and description that is long enough and includes an engaging CTA, you can attract visitors to your page and get much better conversion rates than before. You can use the Serpsim tool for title and description adjustments.
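Title and description lengths can also be checked in bulk. The sketch below is only an illustration: the character ranges (30 to 60 for titles, 70 to 155 for descriptions) are assumptions for the example, not official limits, since search engines truncate by pixel width.

```python
def check_snippet(title, description, title_range=(30, 60), desc_range=(70, 155)):
    """Return a list of length issues for a meta title and description.

    The default ranges are hypothetical rules of thumb, not official limits.
    """
    issues = []
    checks = (("title", title, title_range), ("description", description, desc_range))
    for label, text, (low, high) in checks:
        length = len(text.strip())
        if length < low:
            issues.append(f"{label} too short ({length} chars)")
        elif length > high:
            issues.append(f"{label} too long ({length} chars)")
    return issues


print(check_snippet("SEO", "x" * 100))
```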

2. URL Optimization

We always recommend using a search engine friendly URL structure. If a page or piece of content that is underperforming does not use a correct URL structure, it will be useful to correct this. If possible, you should prefer a short URL structure that contains your keyword and does not contain Turkish characters. You can detect your pages with the wrong URL structure using a tool such as Screaming Frog or Lumar and take action accordingly.
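These three criteria (short, contains the keyword, no Turkish or other non-ASCII characters) can be expressed as a small check. This is a sketch; the `url_issues` helper, the 75-character path limit and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def url_issues(url, keyword, max_path_length=75):
    """Return a list of problems found in the path of the given URL."""
    path = urlparse(url).path
    issues = []
    if not path.isascii():
        issues.append("non-ASCII characters in path")
    if len(path) > max_path_length:
        issues.append(f"path longer than {max_path_length} characters")
    # Keywords are assumed to appear hyphen-separated in the path.
    if keyword.lower().replace(" ", "-") not in path.lower():
        issues.append("keyword missing from path")
    return issues


print(url_issues("https://www.example.com/blog/seo-nedir-öğren", "seo"))
print(url_issues("https://www.example.com/blog/what-is-seo", "what is seo"))
```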

3. Keyword Optimization

Many people are still building their keyword strategy as if it were still 2010. But those years are long gone. You should keep up with the change and update yourself. Just like you need to update your content. Otherwise, no matter how hard you try, you may not get the necessary benefit from your content. For example, did you know that some of your content can fall behind just because of excessive keyword usage?

So when reviewing your existing content, pay attention to how you use keywords. You may have used too many keywords and lost the naturalness of the content. Search engine bots have now become so smart that a word does not even need to be mentioned directly in your content for you to rank for it. Since the bots can establish relevance very well, they can associate words with each other and rank your content for related words.

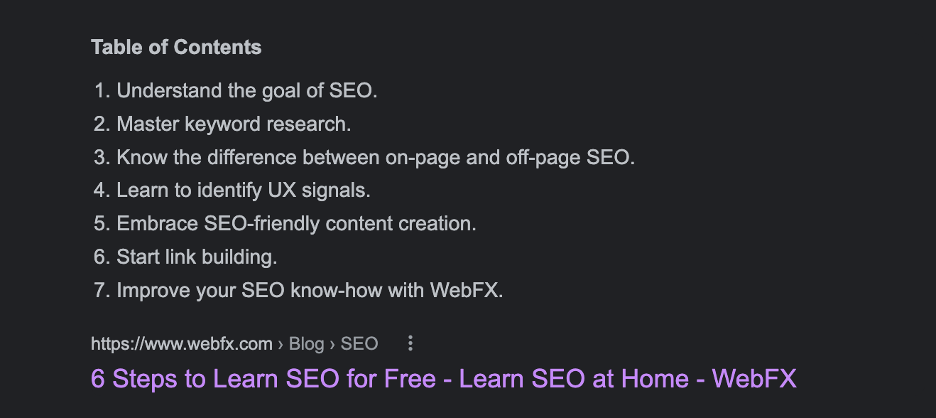

For example, the keyword we are targeting is "how to learn seo". Let's do such a search on Google. I came across a site in Featured snippet.

The title does not directly say "How to Learn SEO?". We click on the content and pay attention to how many times the relevant keyword appears in our content.

I searched for "how to learn seo" in the content and got zero results. So there was no "how to learn seo" in the content. But when I did a Google search for "how to learn seo", this site came up immediately. This is exactly what I'm talking about. There are no longer situations like "you should use the keyword at this rate, you should use it this many times, you should fill the content with plenty of keywords". Content that is written with a natural flow and really gives the user the necessary information stands out. Since bots can already understand the level of relevance very well, they can highlight quality content in similar searches.

Therefore, take a look at the content you have already produced that is not performing well enough. Is there keyword stuffing? Are keywords used too much? Is your content really better quality than your competitors'? Your answers to questions like these will form the basis of better quality content.

4. Search Intent

It is extremely important to consider users' search intent when optimizing your content. Therefore, you should always consider whether your existing content is created with search intent in mind. For example, if a user searches for "calculate calorie needs" and is presented with a text-only blog post, you're making a mistake.

Because the user's main intention here is to access a calculation tool where they can calculate calories. Therefore, having a calculation tool on your content page will give you a leg up. Especially if your "bounce rate" is high, it is useful to question whether you are producing content suitable for search intent. Because even if the user clicks on your page, they will leave your site immediately because they cannot find what they are looking for.

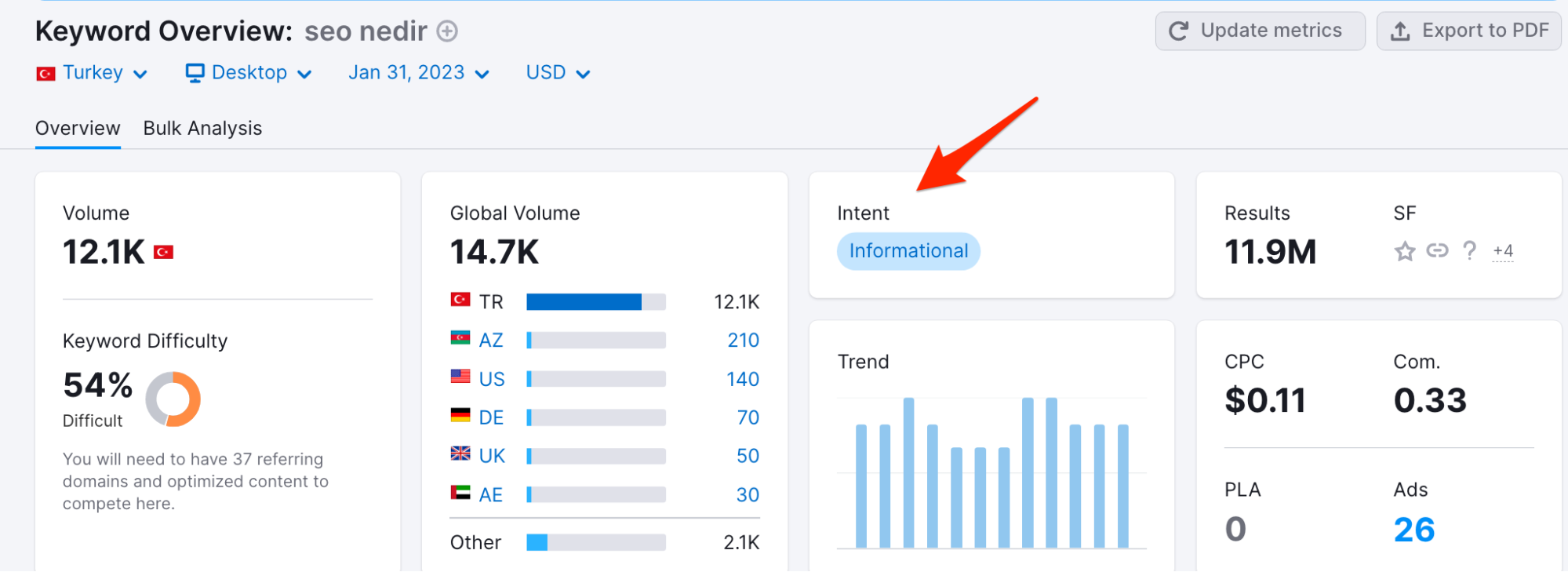

If you want to learn the search intent of any keyword, you can get help from SEMrush. For example, you can easily learn that the keyword "what is seo" is an Informational query.

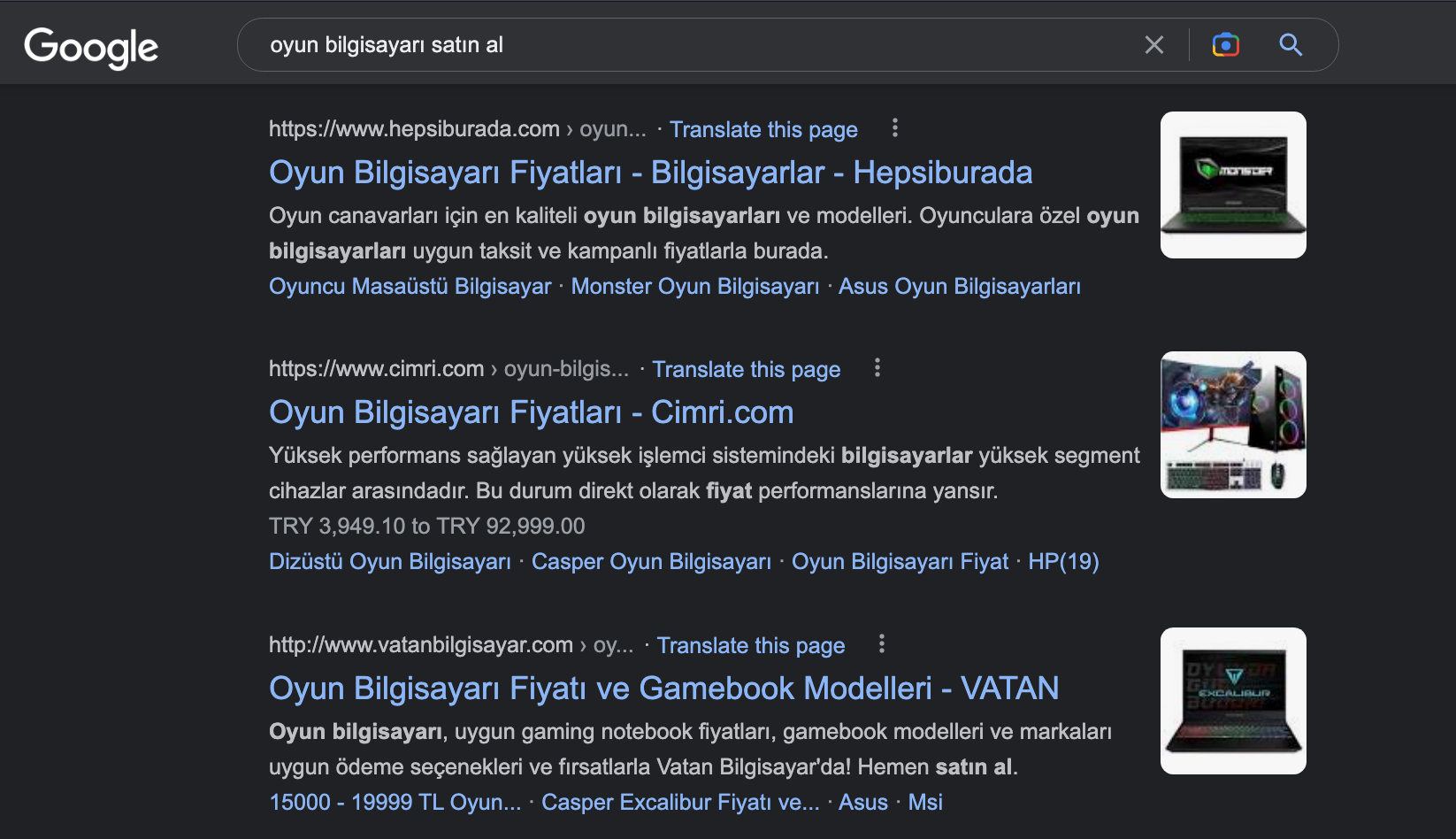

If you do not have a SEMrush account, you can alternatively use Google manual searches and take action according to the status of the pages you see here. For example, when we do a search like "buy a gaming computer", we come across the product pages of e-commerce sites.

Considering the status of these pages in the SERP, we can say that the search intent of the word "buy gaming computer" is Transactional. Thanks to this inference, we can make our relevant page suitable for search intent by applying the necessary optimizations.

5. Heading Tag Setup

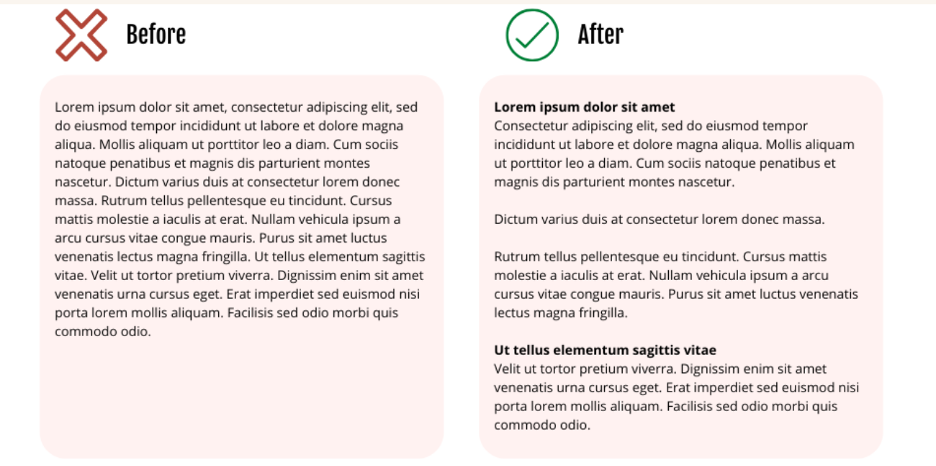

Creating a correct heading structure in your content will benefit both bots and users in terms of showing the content hierarchy. For example, you may have more than one H1 tag in your content. Or you may not have included subheadings such as H2 and H3 at all. So, what should the correct heading setup look like? Let's make the subject clearer with an example from health content.

First, let's imagine that you are going to write a piece of content about heart attacks. Within the framework of this topic, the correct heading hierarchy can be as follows:

Do not forget that presenting the heading tags in a correct hierarchy will make the content easier to understand by both search engine bots and users.
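The two mistakes mentioned above (more than one H1, or heading levels that are skipped) can be detected automatically. The sketch below is a minimal example on static HTML; the `heading_issues` helper and the sample headings are hypothetical.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Record the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of hierarchy problems found in the HTML."""
    outline = HeadingOutline()
    outline.feed(html)
    levels = outline.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected one H1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"H{previous} is followed by H{current}")
    return issues


SAMPLE_PAGE = "<h1>Heart Attack</h1><h3>Symptoms</h3><h1>Treatment</h1>"
print(heading_issues(SAMPLE_PAGE))
```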

6. Visual & Media Optimization

If you only use text in your content, you may not be able to attract users sufficiently. Enriching your underperforming content with videos and visuals in particular will benefit you a lot.

7. General Optimization

This stage is actually the process of reading your content from a user perspective and optimizing it accordingly. Unfortunately, there are many people who think that SEO-compatible content is just writing content for search engine bots and produce content accordingly. Here is a great opportunity to differentiate from your competitors and stand out! You can also follow these steps and give your content a general check:

- Always produce your content with users in mind.

- Produce content in a communication tone suitable for your target audience.

- Take care to make short and clear sentences.

- Use tenses consistently in your content. One sentence should not be in the past tense, the next in the present tense, the next in the future tense, and the one after that in the present tense again.

- Always keep your introductory sentences interesting. If the introductory paragraph is not engaging enough, users may bounce from your site.

- Break up paragraphs in a way that is not intimidating. I would like to share a screenshot that will explain the importance of this:

I'm sure you can imagine how much of a difference just this one item can make.

8. Internal Linking

There are many benefits of internal linking in your content. With internal linking, we can direct users coming to your site to your similar content, and at the same time, we can tell the bots that these pages are related to each other. If you are not using internal linking in your content, you should definitely use it.

9. E-E-A-T Update

As you know, Google has updated its E-A-T guidelines to E-E-A-T by adding "Experience". Now, it has become much more important that an expert produces the content. If you are not getting enough efficiency from your content, check whether it was really produced by an expert. Make sure that your new content is always produced in this context. Don't forget to use author bios in your content and develop author pages as much as possible.

In the meantime, you can learn more about the E-E-A-T Update in the comprehensive content prepared by our teammate Samet Özsüleyman.

10. Competitor Analysis

There is no need to say over and over again how important it is to analyze your opponent. If our competitor has already surpassed us, it means that they have done something better than us. What you need to do at this point is to identify what your competitors are doing better and optimize your content accordingly to beat them. For example, if your competitor's content is on the rise while your content is losing rankings, we recommend that you thoroughly observe the content produced by the competitor and the backlinks they receive. You can think of these items we have written as a checklist and compare your competitor's content with your own content in this context, and you can see the difference much more easily.

Duplicate Content

Duplicate content is content that is identical or very similar to other content, either on our own site or on another site. So, if you had to make a decision, how would you rank similar content on your site on Google? Yes, it's hard to decide. It's hard for search engine bots too.

When search engine bots see pages with identical or similar content, they may not be able to decide which one to rank first, and all pages with similar content may be ranked low. Sometimes they may even lower the ranking of pages with duplicate content in favor of pages with quality and original content. In other words, when there is duplicate content on our site, our pages may not be listed effectively. Even if they are listed, the performance of the pages may not be what we want, as the duplicate content will compete with the original page.

Presenting the same content on a website with different pages will send mixed signals to search engine bots and will negatively affect the crawl budget. We do not want bots to crawl pages we would rather not have crawled while leaving important and recently added pages uncrawled. In order to use the crawl budget efficiently, it is useful to check for duplicate content regularly throughout the entire process as well as at the beginning of SEO projects.

Duplicate content problems can be detected with manual checks or with technical crawls using tools such as Screaming Frog and Lumar (formerly Deepcrawl).

Different Domain Versions

When the same content is available on different protocols or hostnames, search engine bots treat these as different pages. Two different pages with the same content mean a duplicate content problem. A website can have HTTP and HTTPS versions, as well as WWW and non-WWW versions. If the same content can be accessed from both www.example.com and example.com, or from http://www.example.com and https://www.example.com, you may have a duplicate content issue.

Let's say we have WWW and non-WWW versions of our site. We will have external links coming to the WWW version and external links coming to the non-WWW version. In this case, we can say that our SEO performance will be divided and the authority of the pages will be negatively affected by competing with each other.

The solution for the WWW problem is 301 permanent redirection to the preferred version. For the HTTP problem, the preferred solution is to 301 redirect the relevant pages to the HTTPS version.
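The redirect target for each variant can be computed with a small helper. The following is a sketch that assumes HTTPS with WWW is the preferred version; the `preferred_url` function is hypothetical and ignores details such as ports. In production, the 301 redirects themselves would be configured on the server.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url, use_www=True):
    """Return the HTTPS version of url on the preferred (www or non-www) host."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare_host = host[4:] if host.startswith("www.") else host
    host = "www." + bare_host if use_www else bare_host
    return urlunsplit(("https", host, parts.path or "/", parts.query, parts.fragment))


variants = [
    "http://example.com/shoes",
    "http://www.example.com/shoes",
    "https://example.com/shoes",
    "https://www.example.com/shoes",
]
# All four variants should 301 redirect to the same preferred URL.
targets = {preferred_url(variant) for variant in variants}
print(targets)
```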

Using Slash in URL

Pages with and without a trailing slash can be seen as duplicates. If the relevant pages can be reached both with / at the end of the URL and without it, we have a duplicate content problem. In this case, we should do a 301 permanent redirect to whichever version we prefer as the original.

URL Variations: Parameterized URLs

Parameters used for in-site filtering, pagination, sorting or session IDs can cause duplicate content problems.

For example; a URL with parameters that we can encounter on e-commerce sites can be as follows:

www.example.com/?q=women-shoes&color=red

In order to prevent our parameterized URLs from creating duplicate content problems, we can use canonical tags to tell search engine bots our standard URL that we want to be listed.
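As an illustration, the canonical URL for a parameterized page can be derived by dropping the parameters that only filter or track. This is a sketch: the list of ignored parameters and the helper names are hypothetical and depend entirely on how your site uses its parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change the main content of the page.
IGNORED_PARAMS = {"color", "sort", "sessionid"}


def canonical_url(url):
    """Return the URL without the ignored parameters and without a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the canonical link element to place in the page's <head>."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


print(canonical_tag("https://www.example.com/?q=women-shoes&color=red"))
```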

Although there is no penalty for duplicate content, we must have unique page structures to increase organic traffic and strengthen authority.

Keyword Cannibalization

When we first start the project, we examine the performance of the pages we target with the keywords that are most important to us. Sometimes the situation may not be very bright. One reason for this may be that we try to be listed in the SERP with different pages for the same keyword.

One of the reasons that reduce page performances is keyword cannibalization. One of the most common misconceptions on the SEO side is that if you rank on different pages with a keyword, you will get more traffic. For this reason, many people expect to be listed in a good position by creating multiple content on the same topic targeting the same keyword. However, we can say that the situation is the opposite. When we target different pages with the same keywords, we actually compete the pages among themselves.

Therefore, the pages reduce each other's SEO performance. In queries related to the same or very similar keywords, you may see that sometimes your page A is listed and sometimes your page B. In these cases, we are our own competitors.

However, it is not correct to say that there is a keyword cannibalization problem for all cases where different pages are ranked for the same keyword. User intent and the purpose for which the pages are created are very important. For example; it is very likely that multiple pages are ranked with the same keyword on e-commerce sites. A page that provides information to the user in an informational query and purchase pages created for transactional queries will probably not cause keyword cannibalization problems.

Search engine bots will understand user intent and present the most relevant results in the SERP.

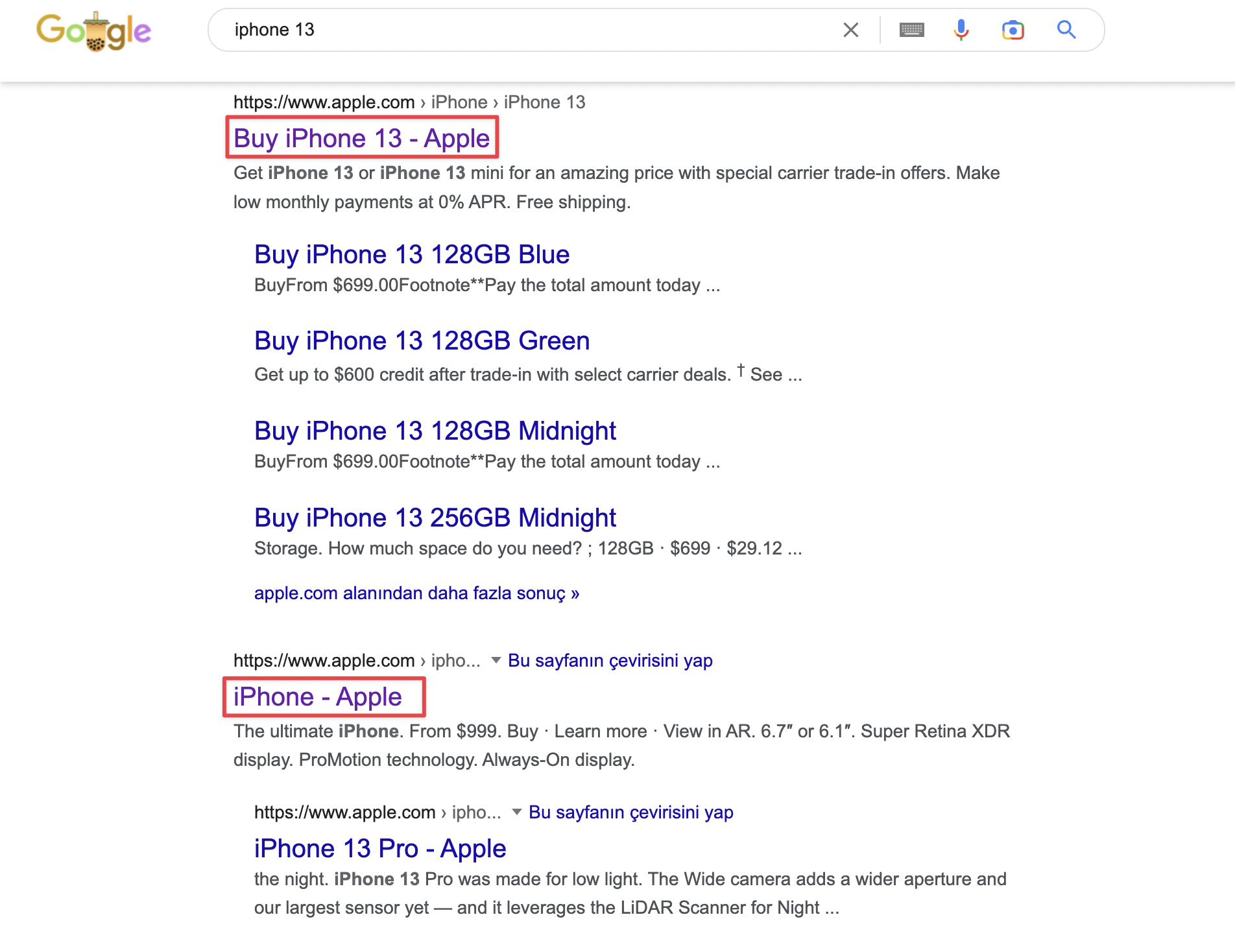

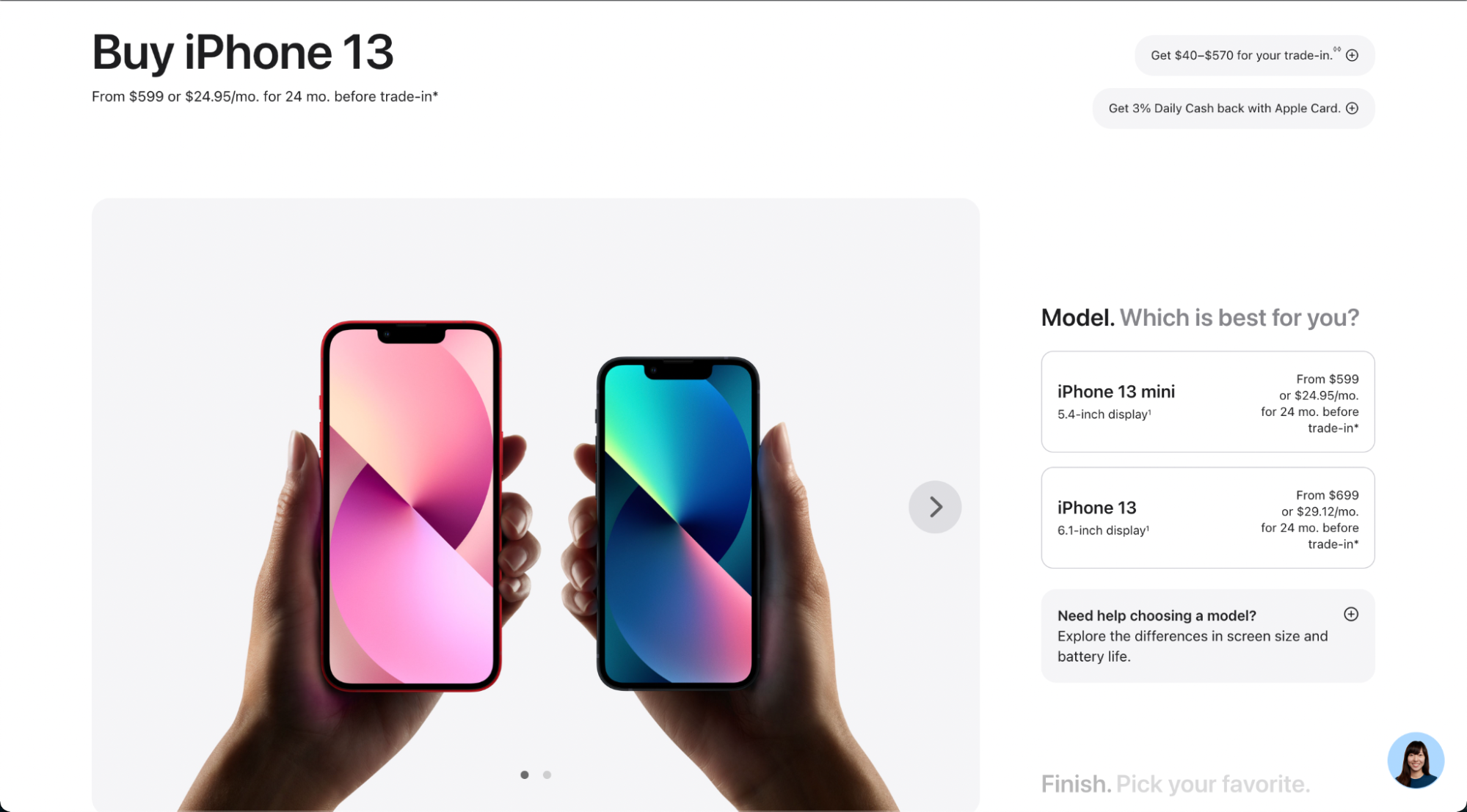

For example; when we search with the keyword iphone 13, we encounter 2 pages of Apple in the SERP. One is the iPhone 13 purchase page and the other is the page with information about all iPhone models.

"Buy iPhone 13" page listed first for the query "iphone 13":

"iPhone" page listed in 2nd place for the query "iphone 13":

Although these pages are listed for the same keyword, we see that they are created with different user intent. Therefore, it may not cause a cannibalization problem.

Why is keyword cannibalization control important?

- It keeps page authority from being divided between pages and losing its value.

- It helps the most relevant page for a query rank in a good position and get good organic traffic.

- It helps the crawl budget be used efficiently.

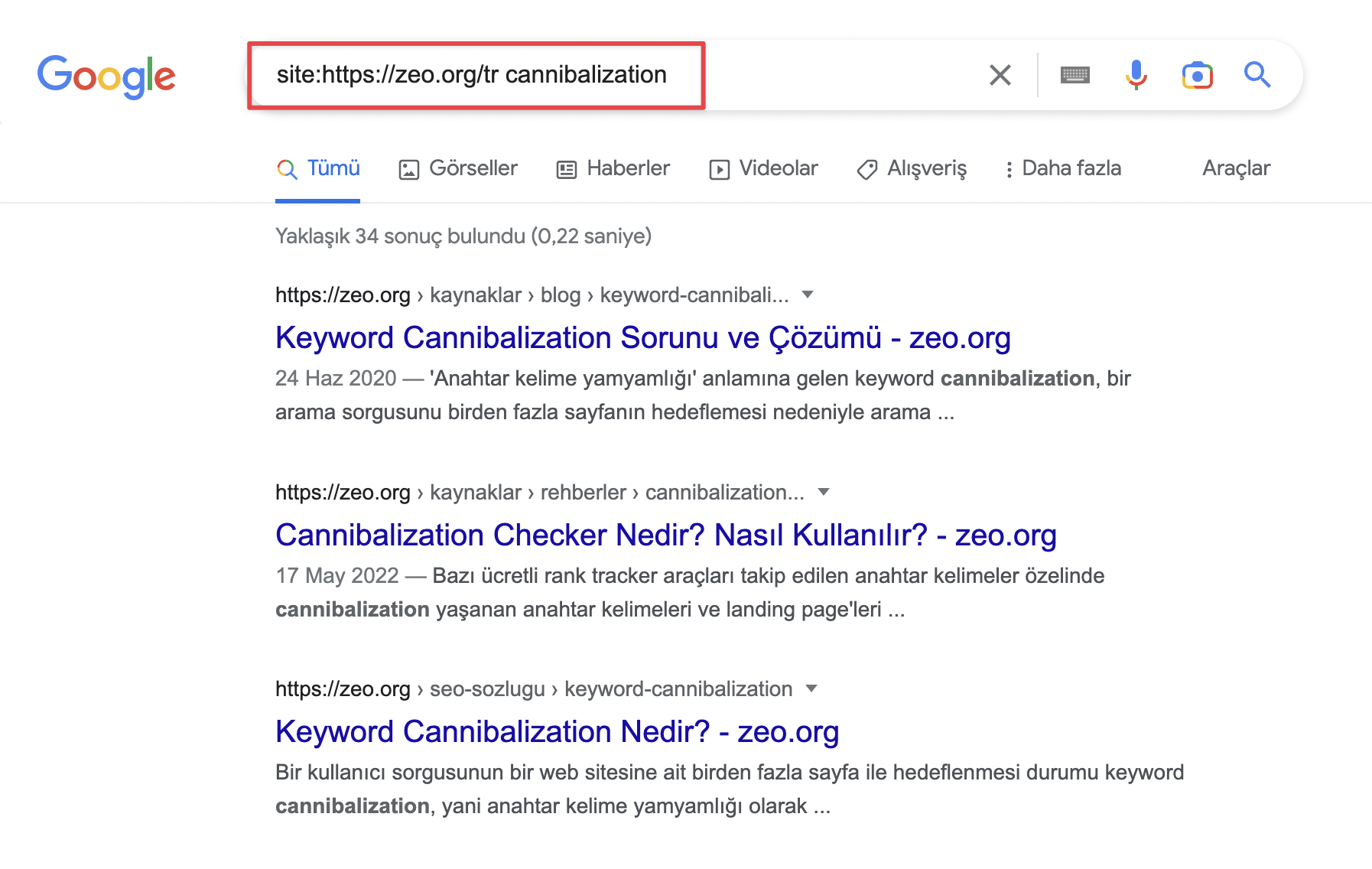

The fastest and most practical way to check for keyword cannibalization is to search for "site:example.com keyword" and examine all your pages ranking for the relevant query.

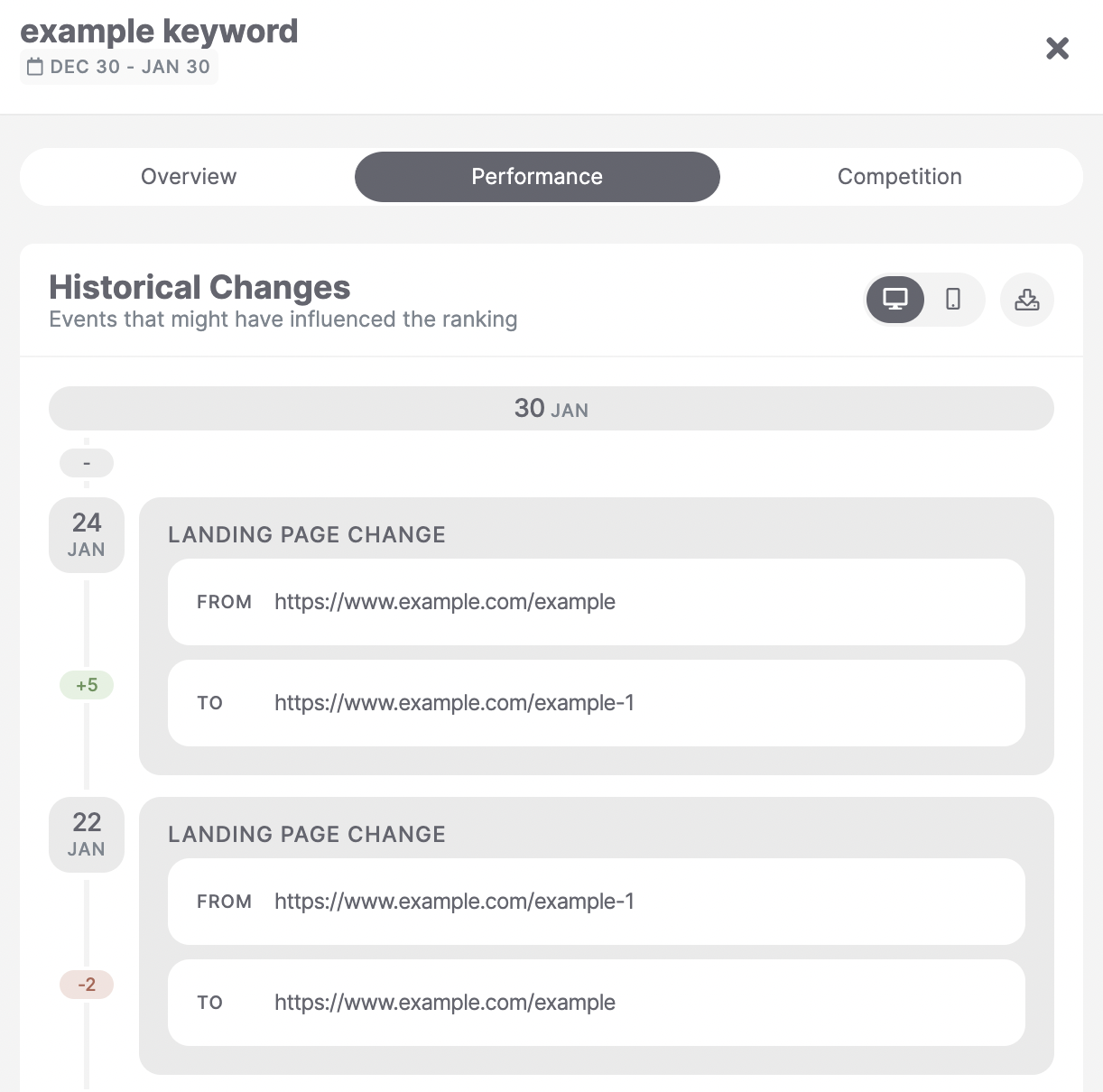

In addition to manual checks, we use a tool to track the keywords we set in each project. One of the tools we use most often to track keyword performance is SEOmonitor. SEOmonitor checks keywords on a daily basis, detects keywords with cannibalization problems and gives warnings as follows.

In SEOmonitor, you can examine which pages rank for your keywords and how they change over time from the "Performance" field. It is also very useful to examine the pages manually. After the manual review, you can decide on the most suitable solution for the pages competing with each other.

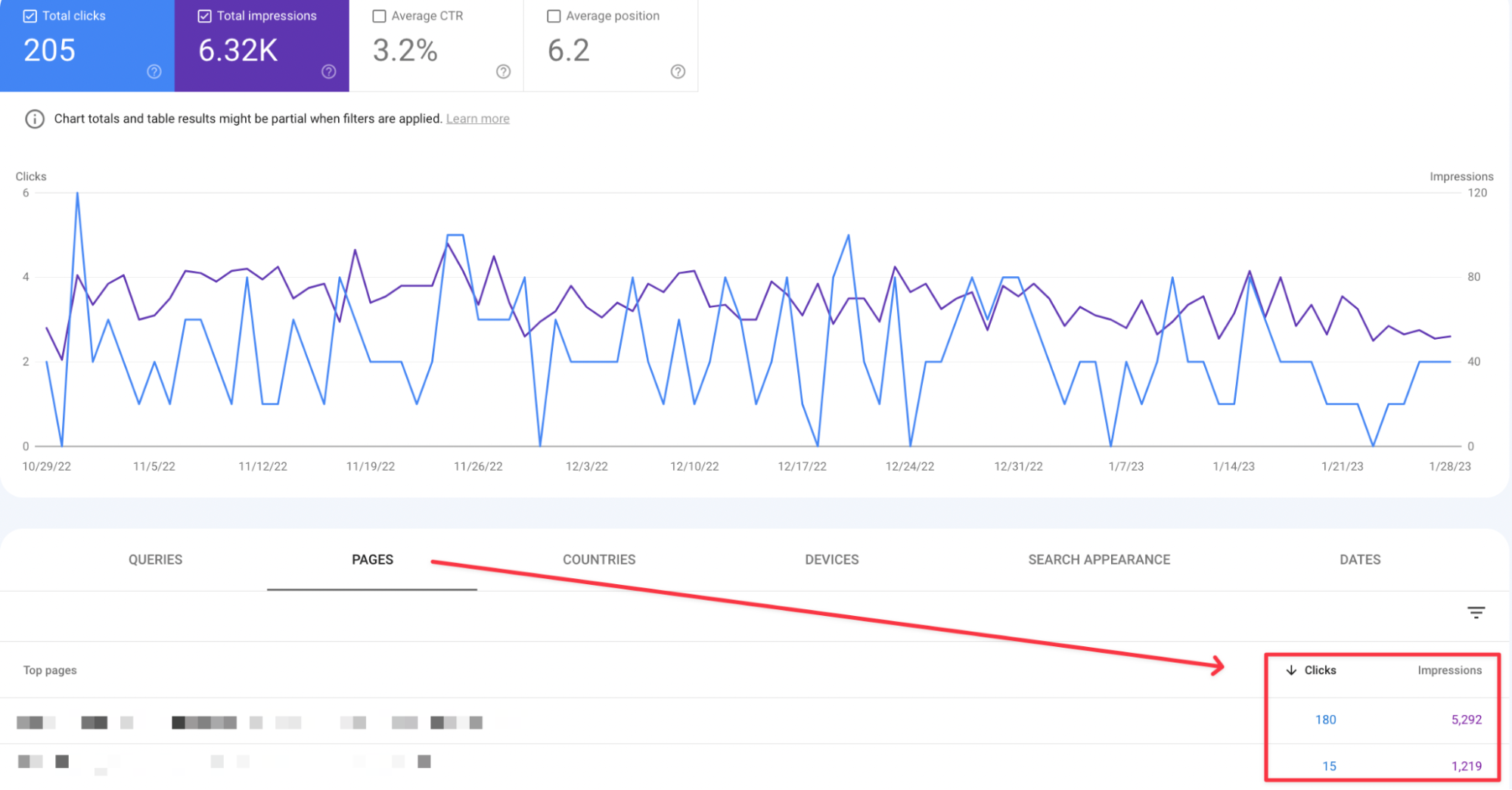

If we have just started the project and have not yet set up tools for keyword performance tracking, the tool we will use most often is of course Search Console. Even if we track keywords with a different tool, we can perform many of our checks free of charge with Search Console.

To check which pages rank for a keyword, you can type the query into the "Query" filter of your Search Console account and examine the pages that receive traffic for that query in the "Pages" field. Or, if you are interested in the performance of specific pages, you can do your research with page comparisons.
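If you export the query and page data from Search Console, the same check can be sketched in a few lines of Python. This is only a minimal sketch: the field names `query`, `page` and `impressions`, the sample rows and the 20% share threshold are assumptions for illustration, not part of any Search Console export format. It lists queries where at least two pages each hold a meaningful share of impressions.

```python
from collections import defaultdict


def find_cannibalization_candidates(rows, min_share=0.2):
    """Return {query: [pages]} for queries where 2+ pages each hold
    at least `min_share` of that query's impressions."""
    impressions_by_query = defaultdict(dict)
    for row in rows:
        pages = impressions_by_query[row["query"]]
        pages[row["page"]] = pages.get(row["page"], 0) + row["impressions"]

    candidates = {}
    for query, pages in impressions_by_query.items():
        total = sum(pages.values())
        if total == 0:
            continue
        # Keep only pages with a meaningful share of the query's impressions.
        significant = sorted(
            page for page, count in pages.items() if count / total >= min_share
        )
        if len(significant) >= 2:
            candidates[query] = significant
    return candidates


# Hypothetical rows, shaped like a manual export of query/page pairs.
rows = [
    {"query": "running shoes", "page": "/running-shoes", "impressions": 700},
    {"query": "running shoes", "page": "/blog/best-running-shoes", "impressions": 300},
    {"query": "trail shoes", "page": "/trail-shoes", "impressions": 500},
    {"query": "trail shoes", "page": "/running-shoes", "impressions": 20},
]

candidates = find_cannibalization_candidates(rows)
print(candidates)
```

A query that appears in the result is only a candidate. As noted above, pages created for different user intents may rank for the same keyword without harming each other, so each one still needs a manual review.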

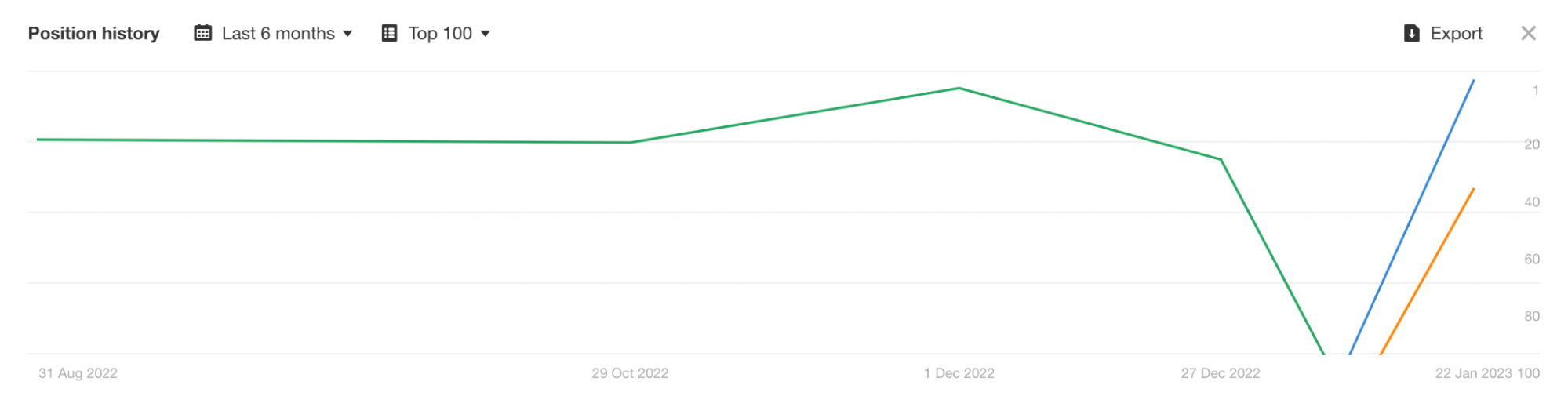

You can access your pages ranked for a specific keyword in the Ahrefs "Position history" field for any period of time. In the example below, we see that three different pages are ranked for a keyword.

You can collect data in many ways. In the "What is Can's Cannibalization Checker? How to Use it?" blog post, you can learn how to use Cannibalization Checker, which ZEO offers free of charge, and use it in your own work.

Keyword Cannibalization Solution

Editing Internal Link Structure

One of the solutions to the cannibalization problem is to create a correct internal linking structure. When the structure targets the right pages with the right keywords, relevant anchor texts tell search engine bots and users what each page is about and direct them to it.

Title & Meta Description Edits

If we have pages competing to be listed for the same keyword, we can try to solve the cannibalization problem by editing their title and meta description tags. The edits should give each page a different target.

301 Permanent Redirection

When pages with duplicate content cause a cannibalization problem, it can be solved by 301 (permanent) redirecting them to the page that performs well.

Noindex Usage

Sometimes, for certain reasons, the pages causing the problem cannot be deleted or redirected. Pages that get no organic traffic and can be considered weak content can also cause a keyword cannibalization problem. In such cases, it may be preferable to keep the unwanted page out of the index by using the meta noindex tag.

On a page where we apply the noindex tag, we need to pay attention to the use of rel=canonical. John Mueller from Google has stated that using the canonical and noindex tags together sends conflicting signals to Google. Based on this, removing the rel=canonical tag from pages where we add the noindex tag should be one of our priorities.
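A check for this combination can be sketched with Python's standard `html.parser` module. This is a minimal sketch under simple assumptions: it only looks at the `<meta name="robots">` tag and `<link rel="canonical">` in the HTML itself, it does not read the `X-Robots-Tag` HTTP header or bot-specific meta tags, and the function name and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class RobotsCanonicalParser(HTMLParser):
    """Collects the noindex directive and canonical URL found in a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = (attributes.get("content") or "").lower()
            if "noindex" in content:
                self.noindex = True
        elif tag == "link":
            rel_values = (attributes.get("rel") or "").lower().split()
            if "canonical" in rel_values:
                self.canonical = attributes.get("href")


def has_noindex_and_canonical(html):
    """True when the page carries both a noindex directive and a canonical tag."""
    parser = RobotsCanonicalParser()
    parser.feed(html)
    return parser.noindex and parser.canonical is not None


# Hypothetical page markup for illustration.
sample_page = """
<html><head>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/category/shoes">
</head><body></body></html>
"""

print(has_noindex_and_canonical(sample_page))
```

Running the function over the HTML of the pages you plan to noindex gives a quick list of pages where the rel=canonical tag should be reviewed.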

Competitor Analysis

Competitor analysis plays a big role in determining the SEO strategy. When starting an SEO project, apart from the general check of the site, seeing the potential and the gaps through competitor analysis is helpful when creating the strategy. Determining which keywords competitors rank for, what type of content they focus on, and their strengths and weaknesses makes it easier to decide on the actions to take. We should also underline that this analysis, although carried out at the start, is an ongoing process throughout the SEO work.

Through competitor analysis, we get an idea of:

- which words to target,

- what type of content to produce,

- how to optimize this content,

- what kind of sites can link to this content.

Let's take a step-by-step look at how to perform this analysis.

Identifying Competitors

The brands or sites you see as competitors on the offline side may not be your strongest competitors on the SEO side, or you may need to consider different competitors for different areas of your site.

Tools such as Ahrefs and Semrush will help you in this regard. You can perform all the steps we discuss within the scope of competitor analysis with such tools. You can see who you compete with organically for the keywords your site ranks for and examine these competitors. You can also shape your strategy by identifying different organic competitors for each of the groups you create after your keyword analysis.

Focusing only on high-volume words when identifying competitors can cause you to miss hundreds of potential queries. Examining low-volume but action-oriented queries such as direct purchase or form filling will contribute to the conversion rate you want on your site.

When identifying competitors, it is also important to determine which sites you will not compete with. For example, making an effort to leave resources such as Wikipedia behind may not be the right strategy.

Detecting Keywords Competitors Rank for but Our Site Does Not (Keyword Gap Analysis)

We use the term "Keyword Gap" for keywords where your competitors are listed but you are not. With Keyword Gap analysis, you can close the gap with your competitors and add new ideas to your strategy. When identifying these keywords, the analysis needs to answer the following questions:

- Why am I not listed for these keywords? Do I offer products or services related to them?

- What type of content do my competitors rank with for these keywords? A service or product page, or informative blog content?

- Have off-site SEO techniques been used for the pages that rank for these keywords? Has link building work been done?

Let's go through an example to elaborate. Suppose we have a website that provides psychological counseling. With informative content such as "What is Anxiety? Causes, Symptoms and Types of Anxiety", we can attract users searching for this topic and then direct them to our counseling service.
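At its core, a keyword gap is a set difference between the keywords your competitors rank for and the ones you rank for. The sketch below assumes you have already exported keyword lists from a tool such as Ahrefs or Semrush. The function name, the domains and the keywords are hypothetical and only illustrate the idea.

```python
def keyword_gap(our_keywords, competitor_keywords):
    """Return {keyword: [competitors]} for keywords that competitors
    rank for and we do not, most widely shared keywords first."""
    ours = {keyword.strip().lower() for keyword in our_keywords}
    gap = {}
    for competitor, keywords in competitor_keywords.items():
        # Normalize and de-duplicate each competitor's keyword list.
        for keyword in {k.strip().lower() for k in keywords}:
            if keyword not in ours:
                gap.setdefault(keyword, []).append(competitor)
    # Sort by number of competitors (descending), then alphabetically.
    return dict(sorted(gap.items(), key=lambda item: (-len(item[1]), item[0])))


# Hypothetical keyword exports for a psychological counseling site.
our_keywords = ["psychological counseling", "online therapy"]
competitor_keywords = {
    "competitor-a.example": ["what is anxiety", "online therapy"],
    "competitor-b.example": ["What is Anxiety", "panic attack symptoms"],
}

gap = keyword_gap(our_keywords, competitor_keywords)
for keyword, competitors in gap.items():
    print(keyword, competitors)
```

Keywords shared by several competitors come first, which is a reasonable place to start answering the questions above.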

Identifying Pages with Good Performance

Identifying the pages that bring the most traffic to your competitors should be part of competitor analysis. Let's say that, looking at the overall picture, you have a competitor that gets high traffic. When you look at the pages that get this traffic, you see that the competitor gets 90% of it from its homepage and brand queries. In this case, it may not make much sense to examine this competitor's SEO strategy. On the other hand, suppose there is a competitor that produces content and drives traffic to its product or service pages. Examining the traffic-attracting pages of this competitor and getting ideas from them would be the right strategy.
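The homepage and brand share mentioned above can be estimated from a top pages export. The following is a rough sketch: the fields `url_path`, `top_query` and `traffic`, the brand term "acme" and the figures are all made-up assumptions, and real tools report estimated rather than actual traffic.

```python
def brand_traffic_share(pages, brand_terms):
    """Share of estimated traffic that comes from the homepage
    or from pages whose top query contains a brand term."""
    total = sum(page["traffic"] for page in pages)
    if total == 0:
        return 0.0
    branded = sum(
        page["traffic"]
        for page in pages
        if page["url_path"] == "/"
        or any(term in page["top_query"].lower() for term in brand_terms)
    )
    return branded / total


# Hypothetical top pages export for a competitor called "acme".
competitor_pages = [
    {"url_path": "/", "top_query": "acme", "traffic": 8000},
    {"url_path": "/careers", "top_query": "acme careers", "traffic": 1000},
    {"url_path": "/blog/how-to-clean-shoes", "top_query": "how to clean shoes", "traffic": 1000},
]

share = brand_traffic_share(competitor_pages, brand_terms=["acme"])
print(f"{share:.0%} of estimated traffic is homepage or brand traffic")
```

A competitor with a very high share probably has less to teach you about content strategy than one whose traffic is spread across content and product pages.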

Another important detail to be examined while performing this analysis will be to determine the types of content. For example, an e-commerce site may be driving traffic with "how-to" guides, or your competitor in a niche industry may be producing detailed content guides and getting traffic this way.

Examining the Backlink Profile

Links from authoritative websites still have an impact on rankings today. Examining the backlink profiles of your competitors will help you determine whether they allocate resources and time to this area. While doing this, it is important to conduct a page-based review. Tools such as Ahrefs and Semrush will help you in this regard.

By examining the sites that link to your competitors, you can add reaching out to them, so that they include your related content or service, to your strategy. Another tip is that a piece of content that links to two or more of your competitors is also likely to link to you. You can perform outreach work by identifying this type of content through tools.
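The "links to two or more competitors" tip can be sketched as a simple intersection over referring domain lists. This assumes you have exported referring domains per competitor from a backlink tool. The function name and all domains below are hypothetical.

```python
from collections import defaultdict


def link_intersect(competitor_backlinks, our_referring_domains, min_competitors=2):
    """Return {domain: [competitors]} for domains that link to at least
    `min_competitors` competitors but not yet to us."""
    linked_competitors = defaultdict(set)
    for competitor, domains in competitor_backlinks.items():
        for domain in domains:
            linked_competitors[domain.lower()].add(competitor)

    ours = {domain.lower() for domain in our_referring_domains}
    prospects = {
        domain: sorted(competitors)
        for domain, competitors in linked_competitors.items()
        if len(competitors) >= min_competitors and domain not in ours
    }
    # Domains linking to the most competitors come first.
    return dict(sorted(prospects.items(), key=lambda item: (-len(item[1]), item[0])))


# Hypothetical referring domain exports.
competitor_backlinks = {
    "competitor-a.example": ["news.example", "blog.example", "forum.example"],
    "competitor-b.example": ["news.example", "blog.example"],
    "competitor-c.example": ["news.example", "partner.example"],
}
our_referring_domains = ["blog.example"]

prospects = link_intersect(competitor_backlinks, our_referring_domains)
print(prospects)
```

The resulting domains are outreach candidates only. Each one still needs a manual look at the linking page to see whether your content or service is a natural fit.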

Closing Remarks

Let's end our article with the hope that you will achieve high organic traffic by planning prioritized and high-impact jobs at the very beginning of the SEO project with the controls and action suggestions we have mentioned. :)

Resources that can help: