How to Recover SEO of Hacked Sites?

From time to time, websites are attacked or hacked. In these cases, Google can impose penalties on websites to both protect users and provide a safer web experience. As a result, your site's SEO can suffer. In this article, I will share some suggestions on how to restore the SEO of websites that have been hacked or attacked.

You can find many threads in forums showing that traffic from Google drops after an attack or "hack":

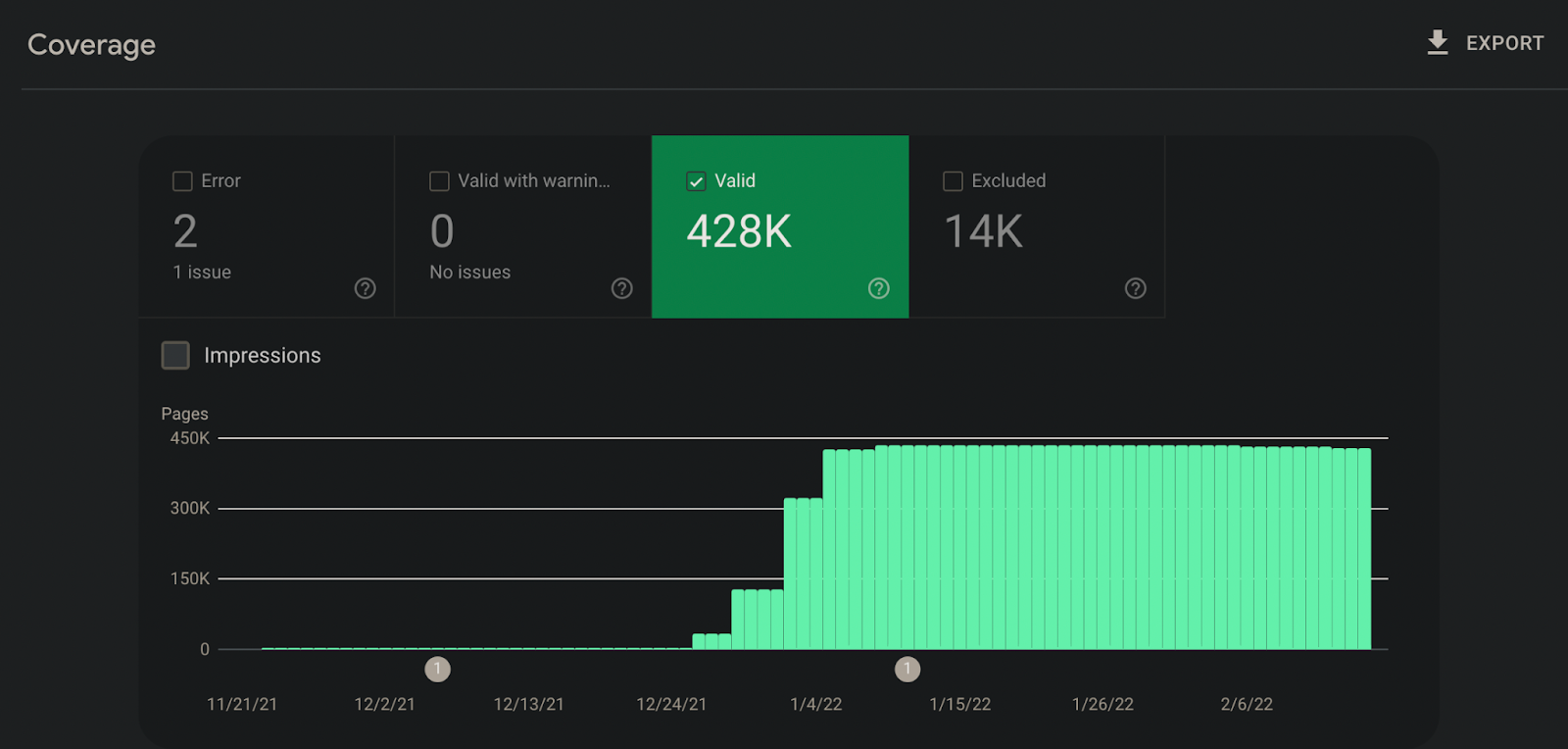

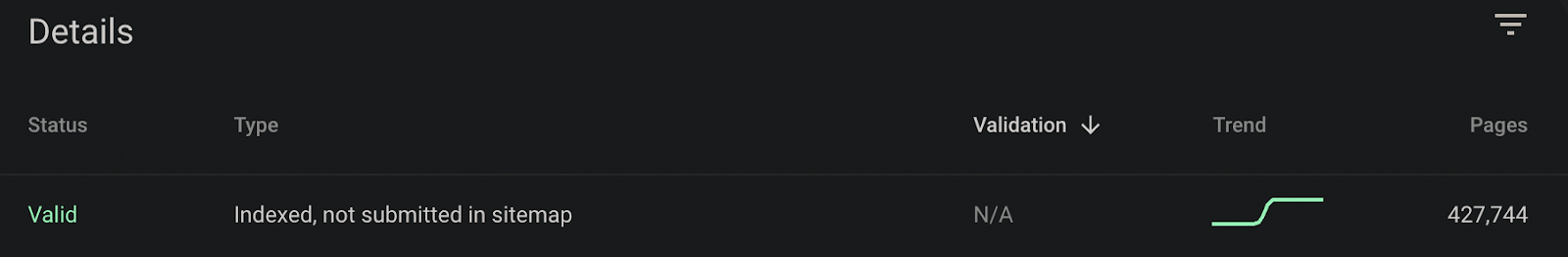

Hundreds of thousands of pages can suddenly enter the Google index when pages that you have no control over are created on your site. These pages may return a 200 status code at first and later redirect your visitors to the attacker's target site with a 301. If you do not prevent this, many of these pages may remain in Google's index, as in the example image below:

Even if you don't want these pages, Google can find them more easily because they are automatically added to sitemaps in CMSs like WordPress. Even if you remove them from the sitemap, they can still be included in the index:

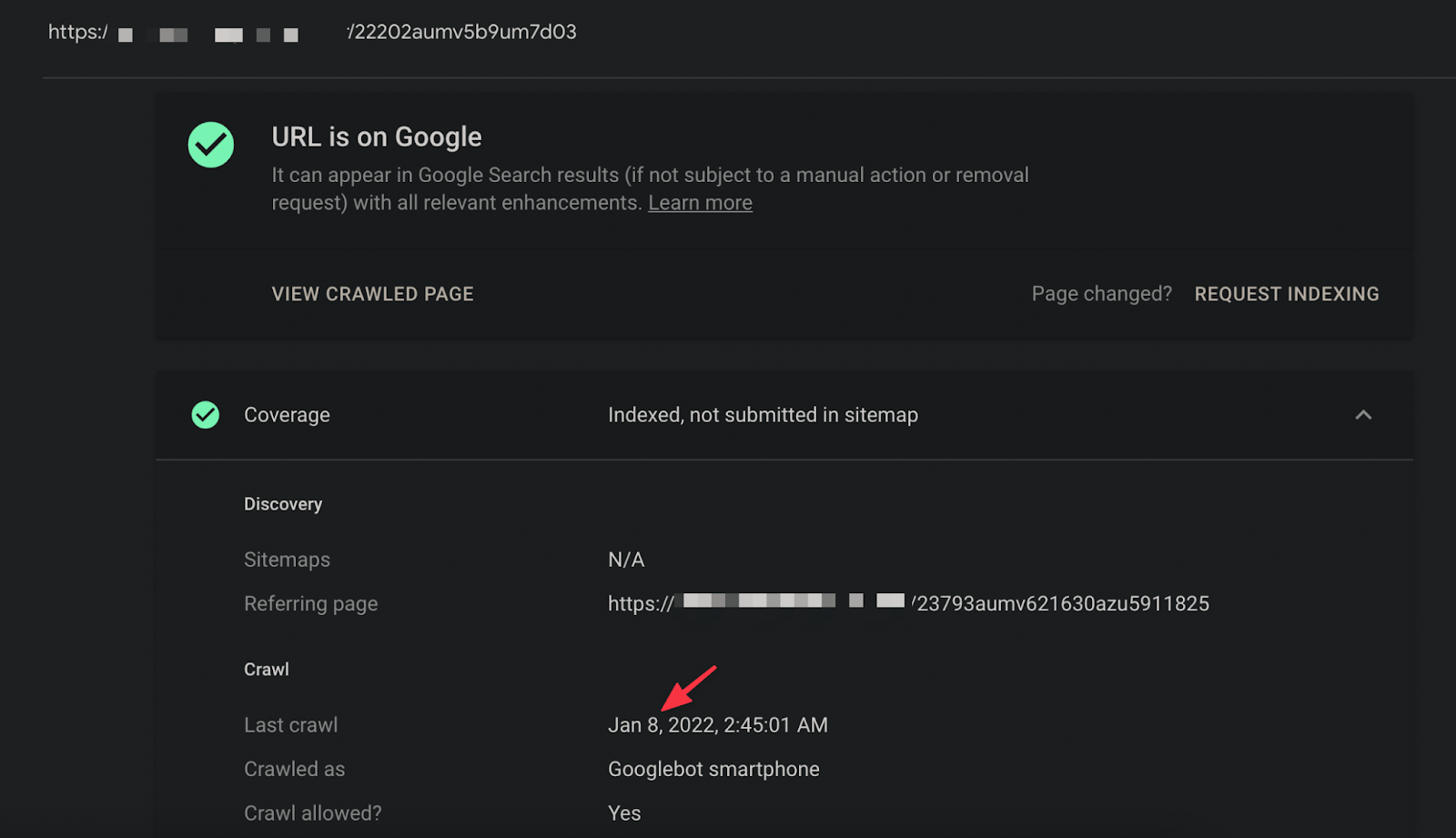

Googlebot needs to crawl the pages again before the number of indexed URLs goes down. Even after you clean the viruses, you may have problems with index optimization because Googlebot may not revisit the relevant pages after the first time it sees them:

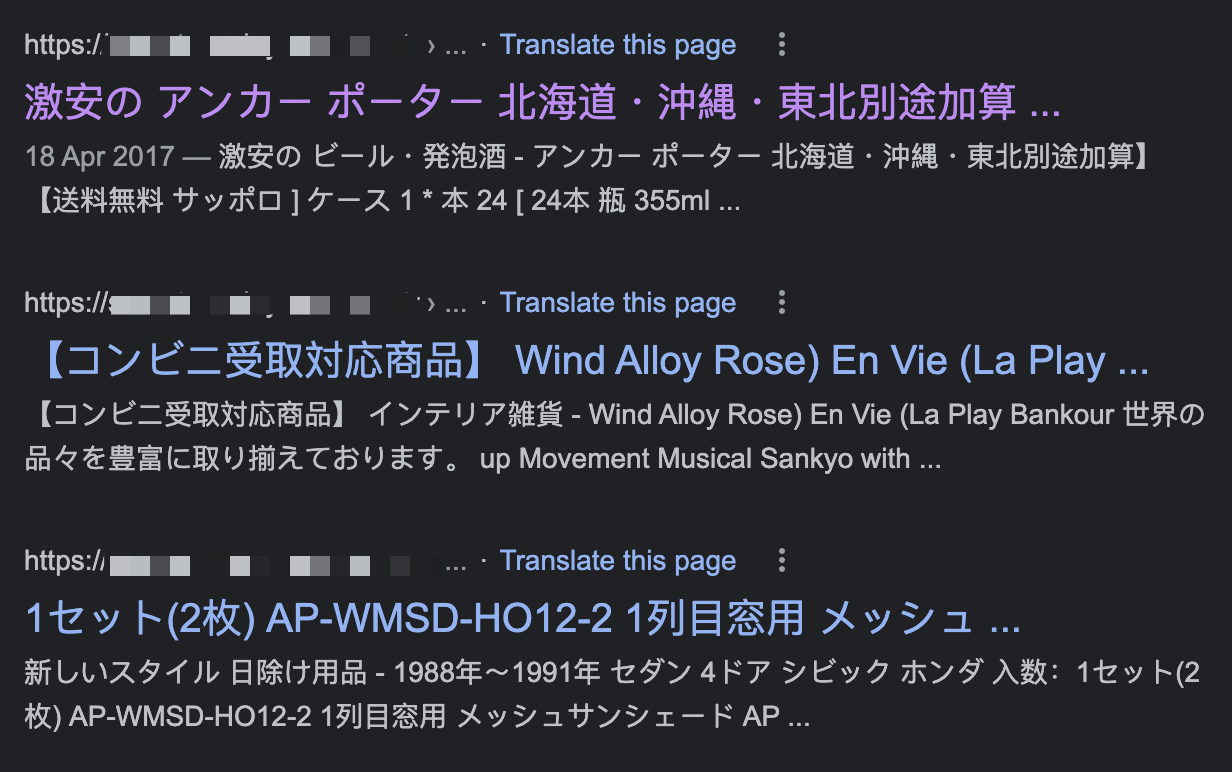

As you can see in the example below, pages resulting from Chinese or Japanese hacking attacks, which are well-known in the SEO community, can appear in the SERP:

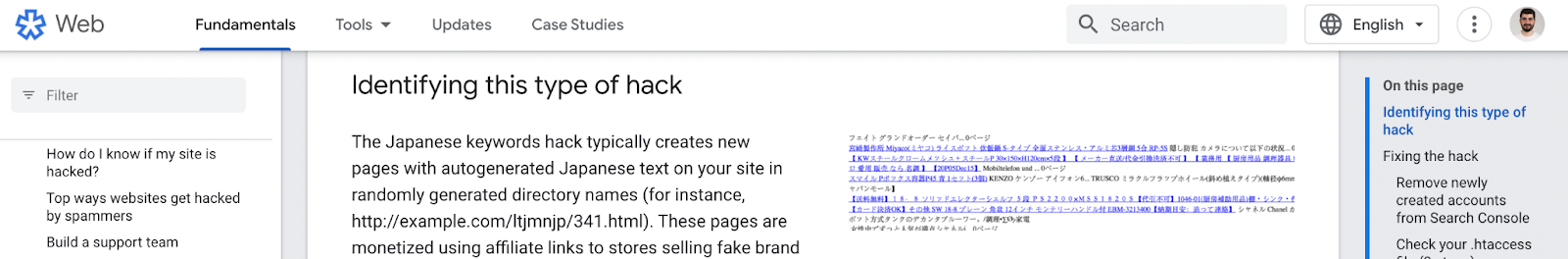

Google itself has also made specific statements just for this type of attack:

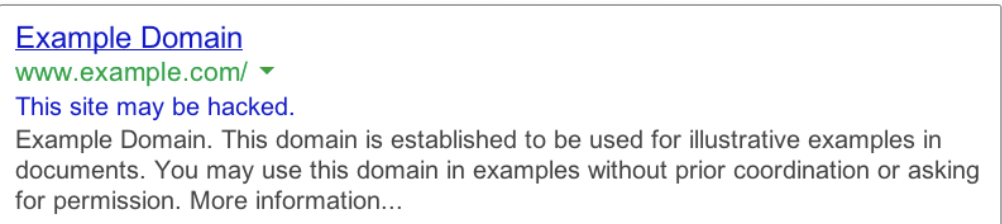
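If your site does not normally publish Chinese or Japanese content, a quick way to spot this kind of injected page is to look for those scripts in page titles. The sketch below is only an illustration under that assumption: the `pages` list of `(url, title)` pairs is hypothetical and would come from your own crawl or export, and the Unicode ranges cover only the most common Hiragana, Katakana, and Han characters.

```python
def contains_cjk(text):
    """Return True if text contains Hiragana, Katakana, or common Han characters."""
    for ch in text:
        cp = ord(ch)
        if 0x3040 <= cp <= 0x30FF:   # Hiragana and Katakana blocks
            return True
        if 0x4E00 <= cp <= 0x9FFF:   # CJK Unified Ideographs block
            return True
    return False


def flag_cjk_titles(pages):
    """pages: iterable of (url, title). Return URLs whose title contains CJK text."""
    return [url for url, title in pages if contains_cjk(title)]


# Hypothetical sample data for illustration only.
pages = [
    ("https://example.com/blog/seo-tips", "SEO tips for small sites"),
    ("https://example.com/xk29/item-551", "激安 ブランド 通販"),
]
flagged = flag_cjk_titles(pages)
```

A site that legitimately serves these languages would need a different signal, such as unexpected URL patterns.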

In addition, a warning such as "this site has been hacked" or "this site may harm your computer" may appear in the SERP and in browsers such as Google Chrome:

If you believe a site is harmful but Google has not discovered it yet, you can report it at https://safebrowsing.google.com/safebrowsing/report_badware/?hl=tr.

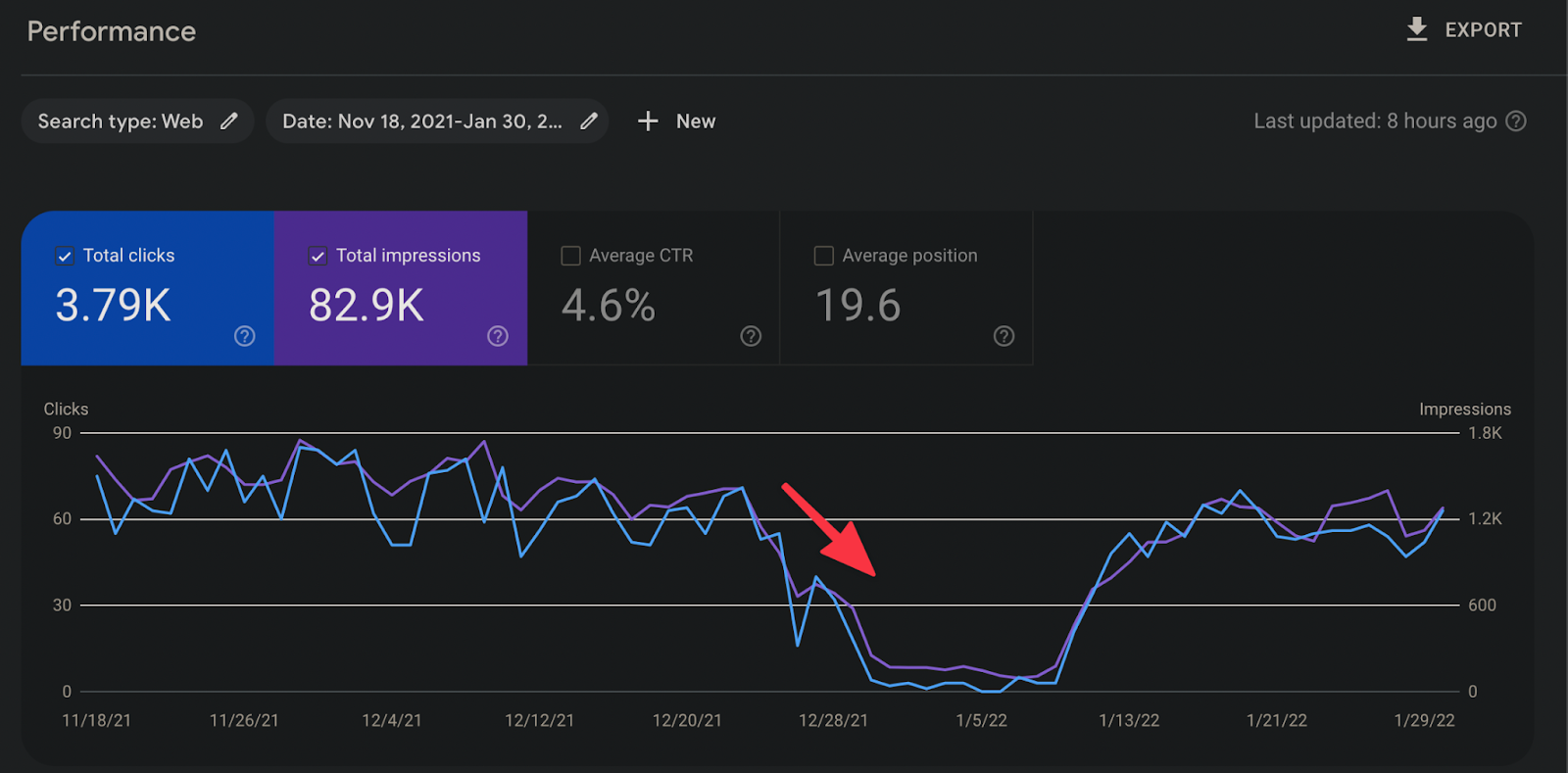

Google may not see hacked or malicious pages or may not take manual action on your site. In these cases, it is important to do the checks yourself. In the example below, you can see how the SEO performance of a hacked or attacked site drops. It is also normal to see decreases in the Google News and Google Discover reports.

It is also useful to check that the hacked pages are not in your sitemaps. Check not only the sitemaps created for articles but also sitemaps such as images-sitemap.xml.

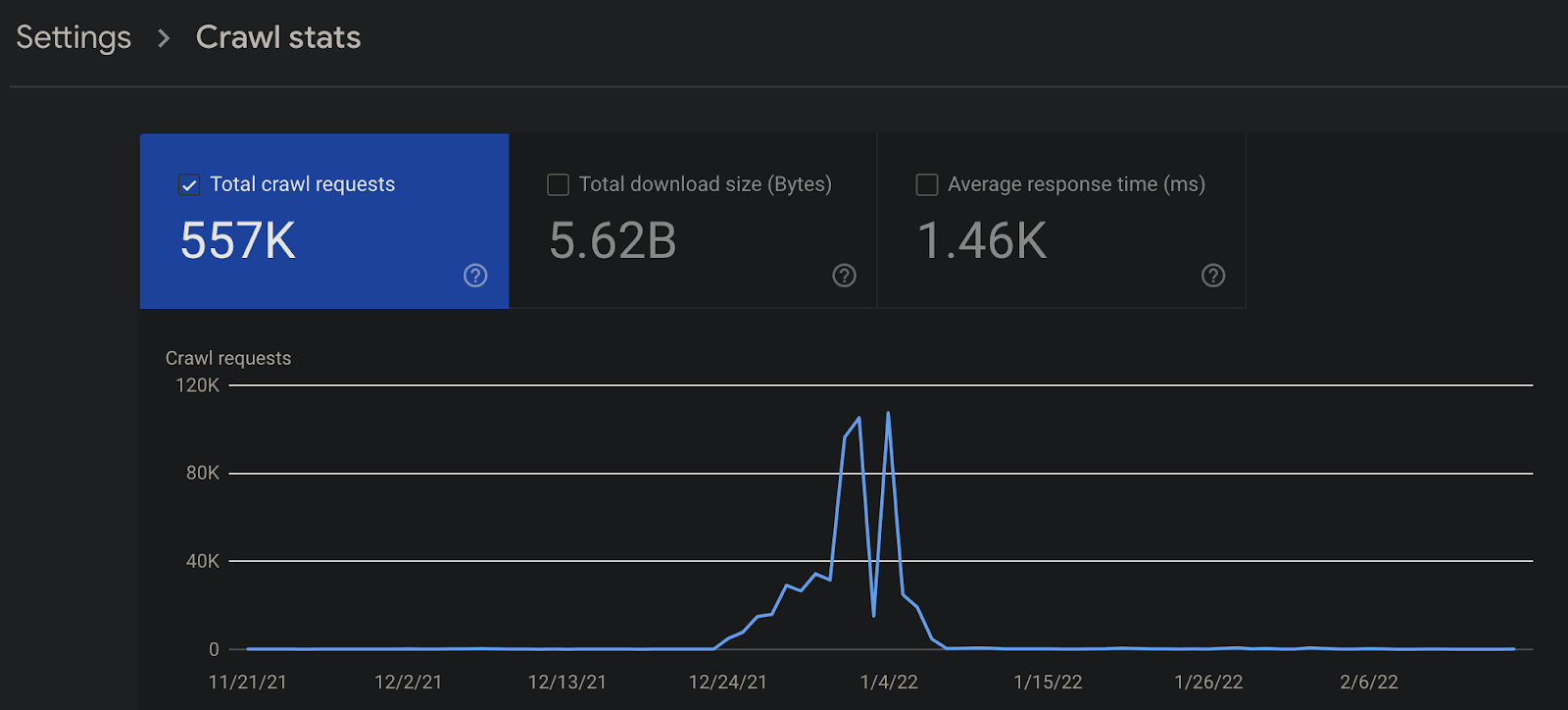
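The sitemap check can be partly automated. The following is a minimal sketch, assuming you have already downloaded the sitemap XML as text and that you know some URL patterns used by the hacked pages (the `shop=cheap` pattern and the example.com URLs are made up for illustration). It reads every `loc` element regardless of namespace, so image sitemap entries are covered too.

```python
import re
import xml.etree.ElementTree as ET


def sitemap_urls(xml_text):
    """Return the text of every <loc> element, including namespaced image locs."""
    root = ET.fromstring(xml_text)
    urls = []
    for element in root.iter():
        local_name = element.tag.rsplit("}", 1)[-1]  # strip the XML namespace
        if local_name == "loc" and element.text:
            urls.append(element.text.strip())
    return urls


def suspicious_urls(urls, patterns):
    """Return the URLs that match at least one of the regex patterns."""
    compiled = [re.compile(p) for p in patterns]
    return [u for u in urls if any(c.search(u) for c in compiled)]


# Hypothetical sitemap content for illustration only.
sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/blog/post</loc></url>"
    "<url><loc>https://example.com/?p=9931&amp;shop=cheap</loc></url>"
    "</urlset>"
)
hits = suspicious_urls(sitemap_urls(sample), [r"shop=cheap"])
```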

In crawl statistics reports, you can also determine how much crawl requests have increased since the pages entered the Google index and how much the requests have decreased after the pages have been deleted. If you have a similar situation on your site, you should definitely review these important reports.

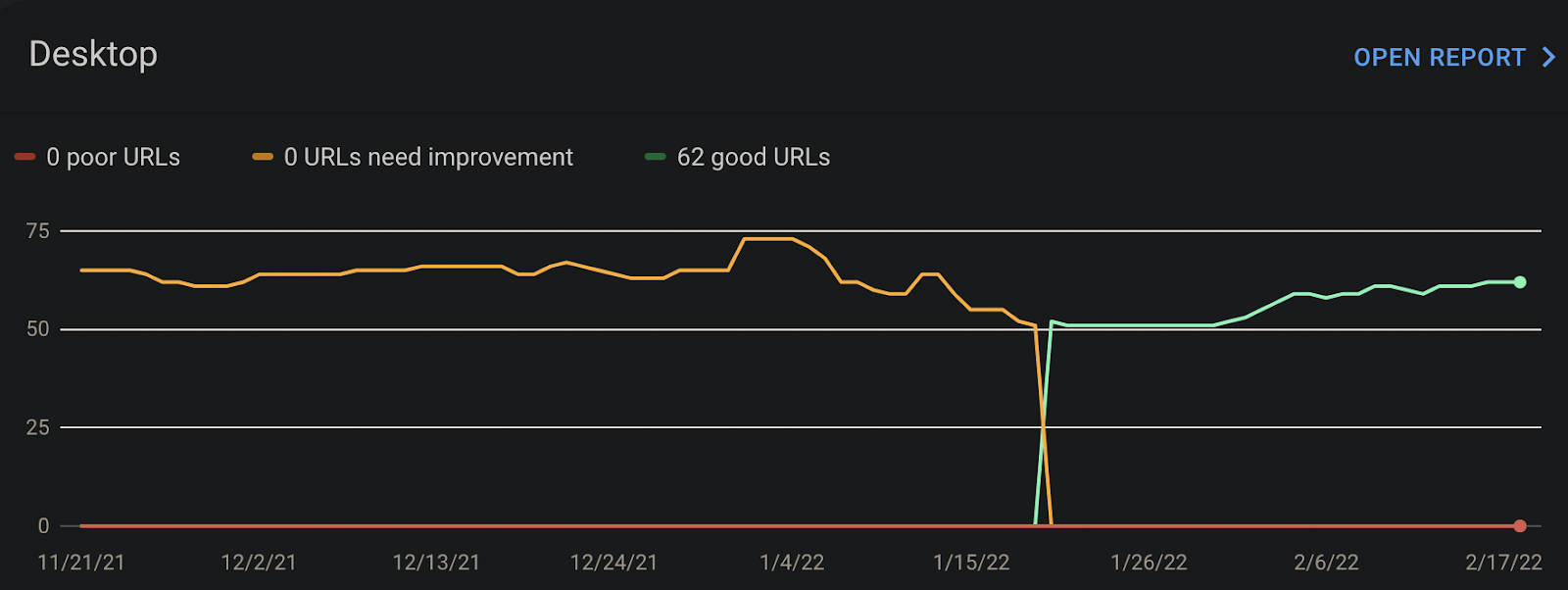

Your Core Web Vitals data may not be affected in this case. Since the data comes from the Chrome UX Report, however, the indexed hacked pages may also be reflected here.

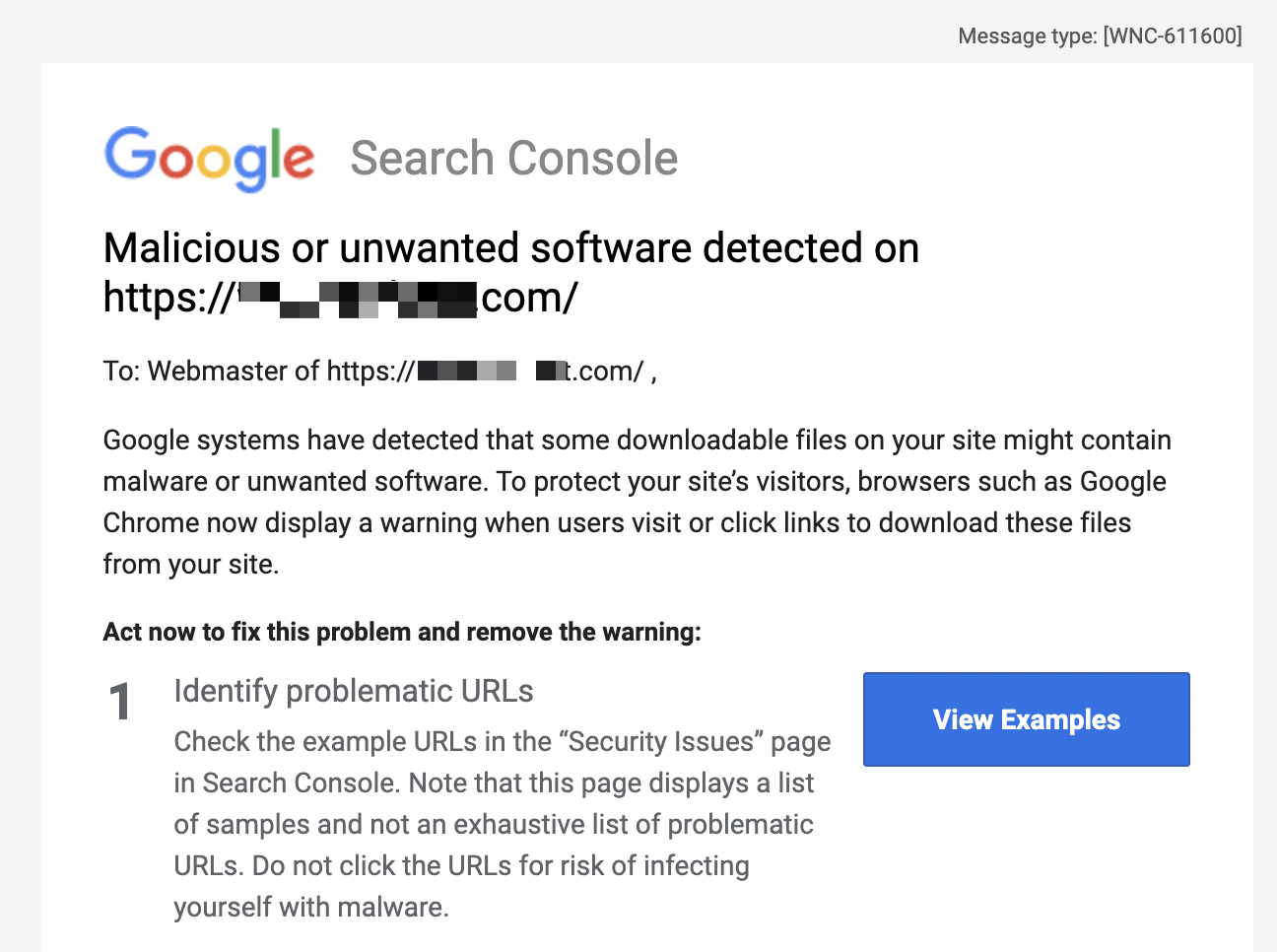

As I mentioned above, Google may not always send you an e-mail or notification. When it does, you can receive a notification similar to the example below:

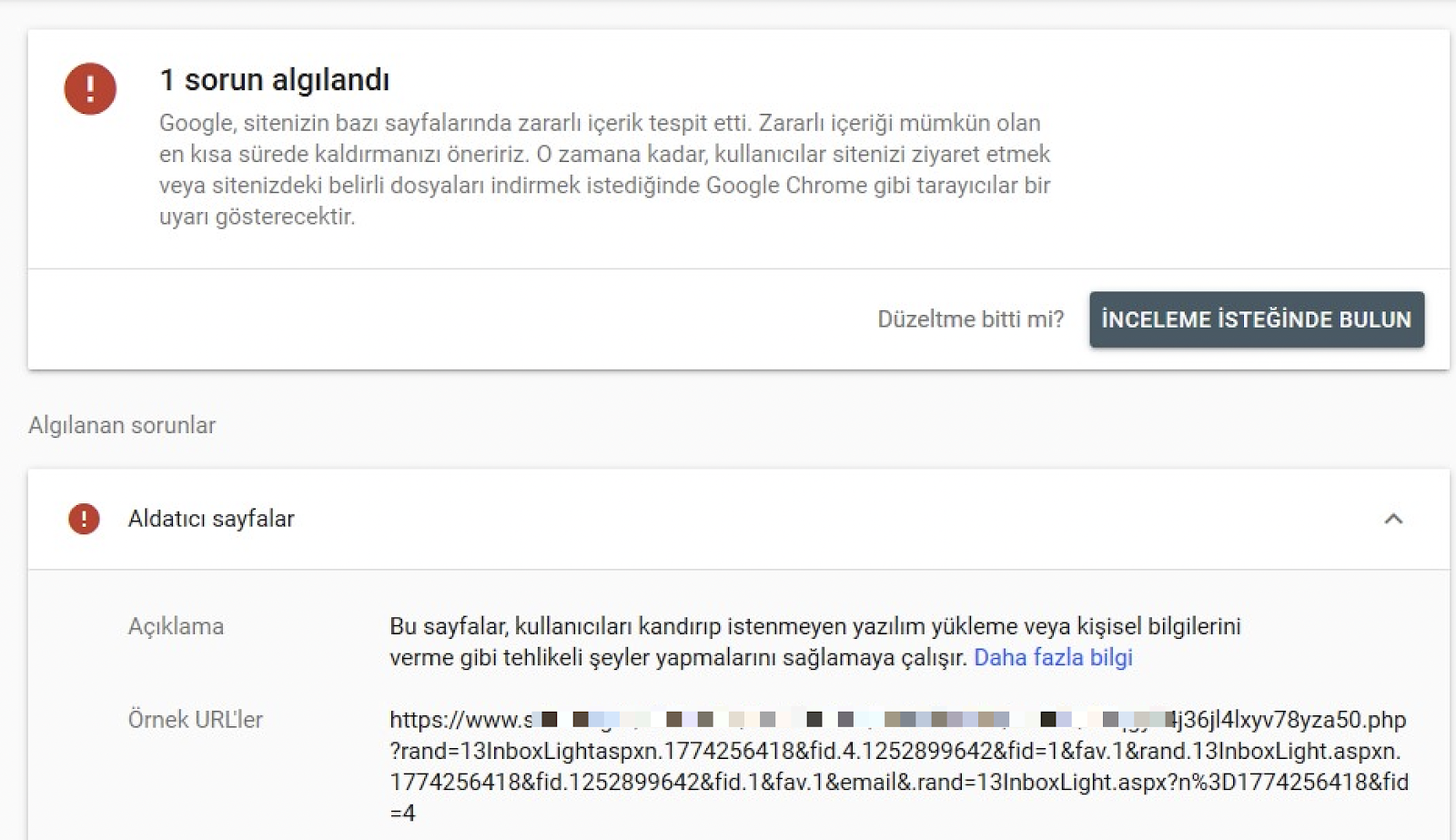

An example that can be found in Search Console is given below:

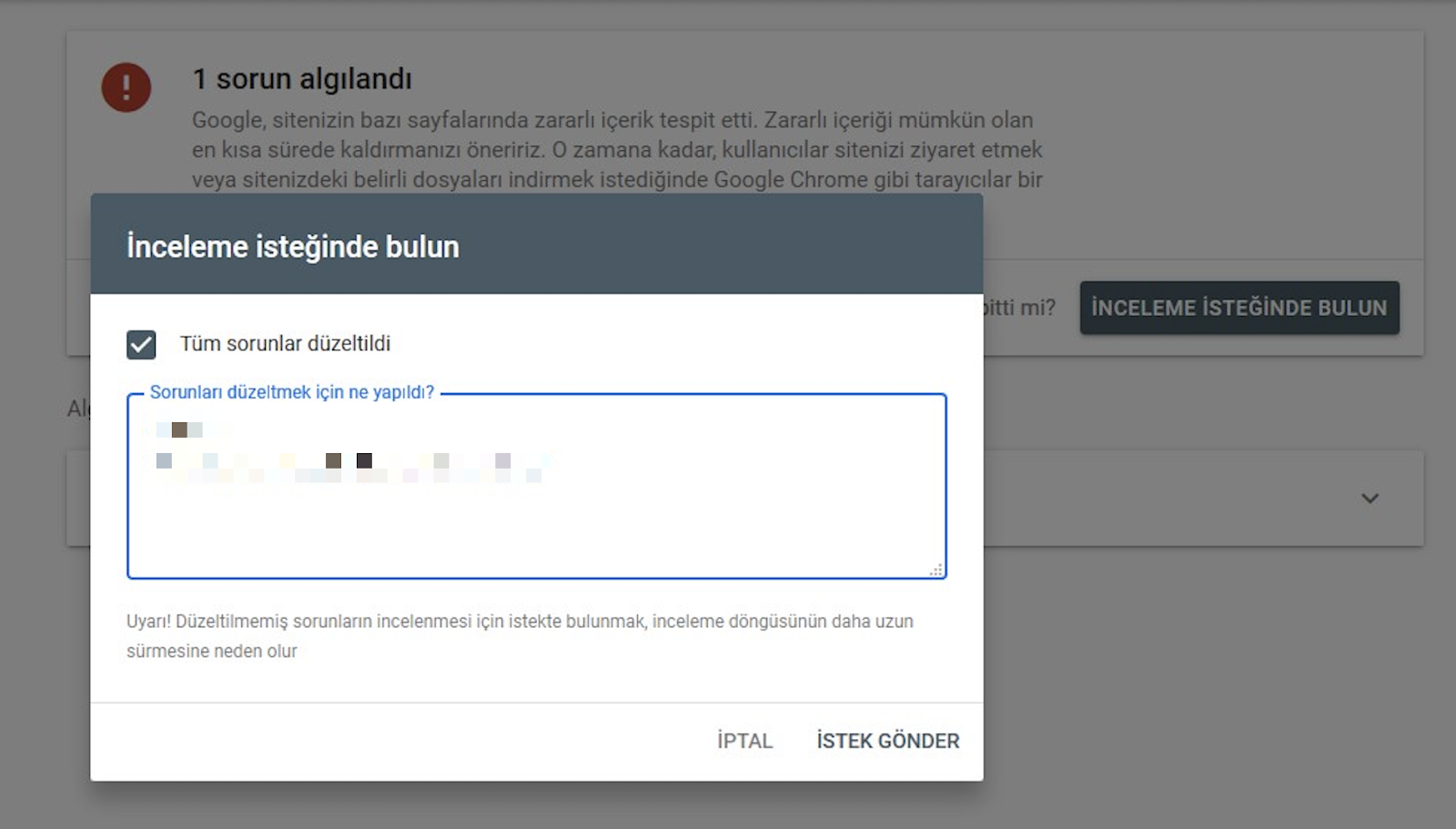

Once you have cleaned your site of viruses and deceptive pages, you should definitely request a review and describe in detail what you did in the form that opens. If the notification dates from the previous site owner, you can also explain this in detail in the form. The manual action will be removed in a short time:

If I Secure My Site, Will I Regain Old SEO Performance?

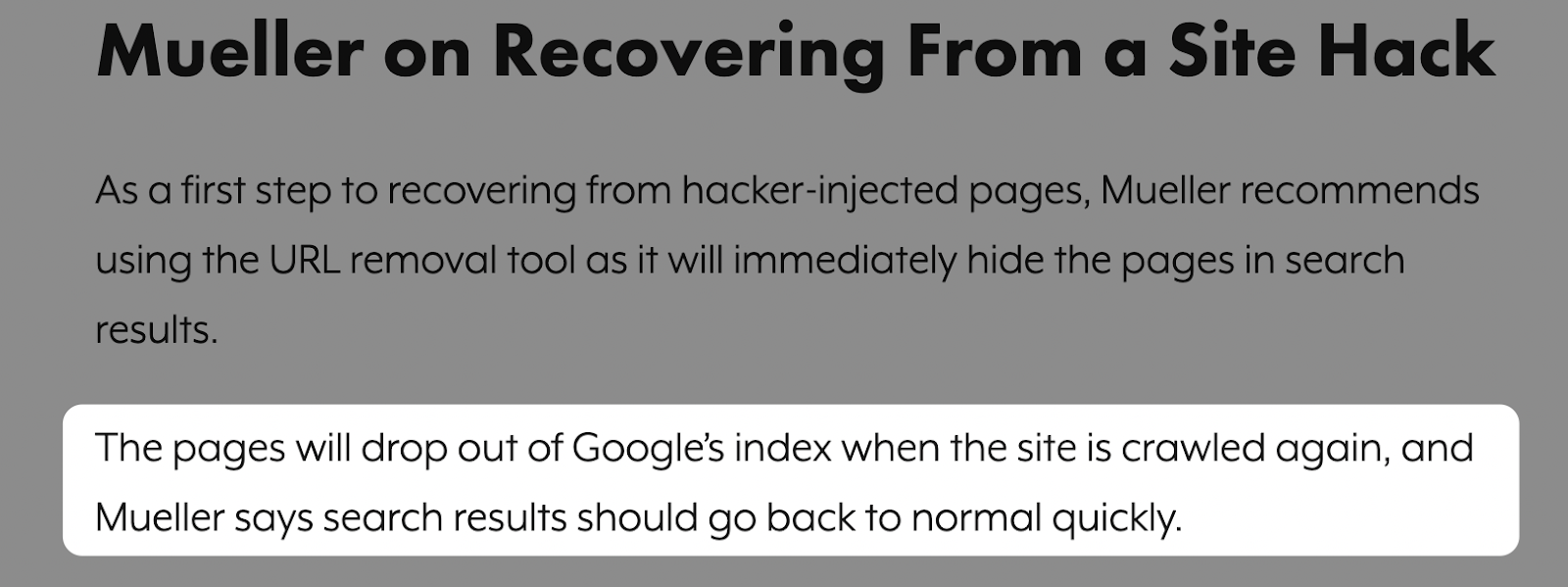

Yes, once you make your site more secure and Googlebot re-crawls your relevant pages, you can regain your performance over time. I would also like to note John Mueller's statement:

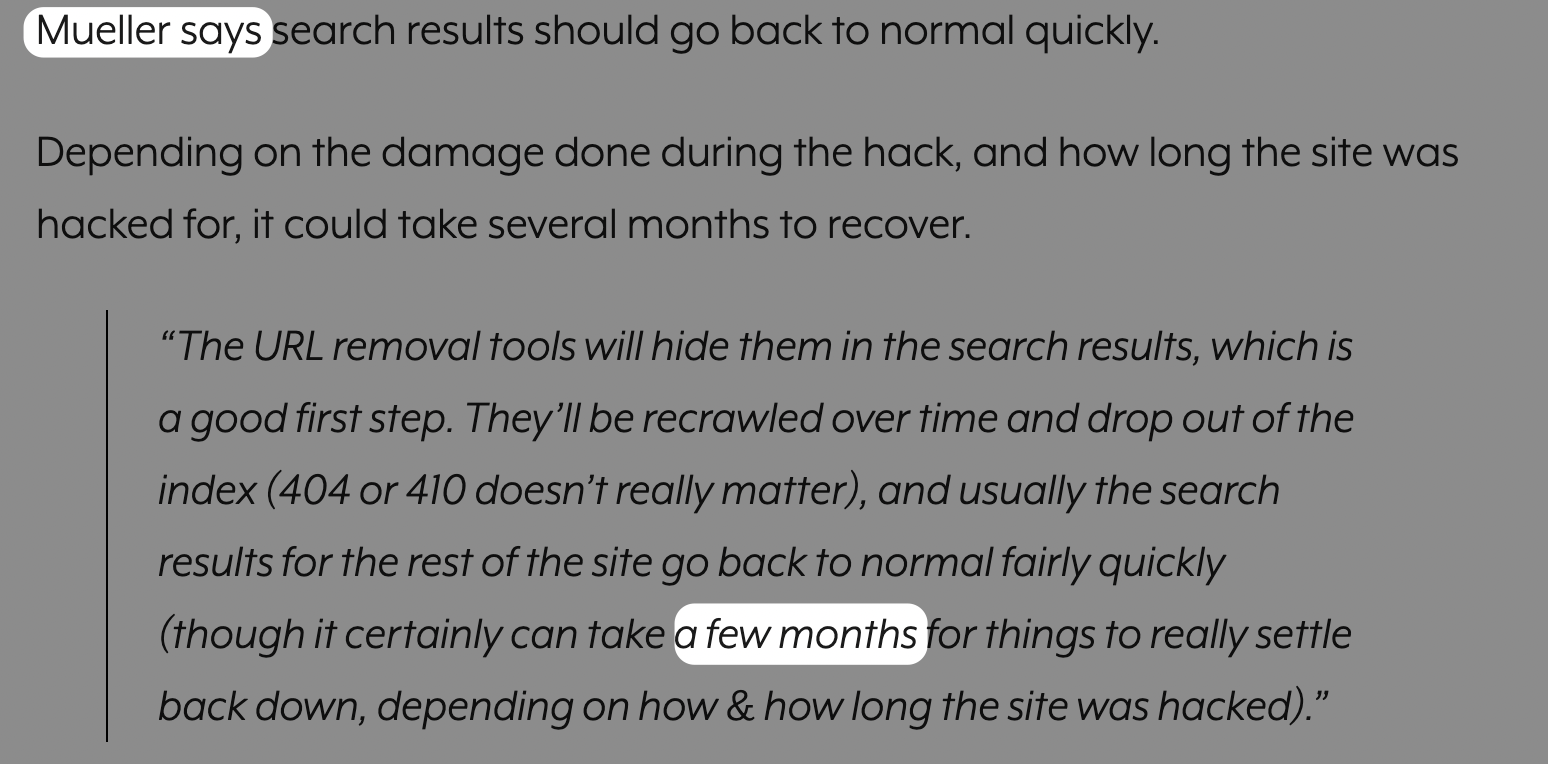

After How Many Months Will My Site's SEO Performance Come Back?

Depending on the size of your site, it may take a few months. If only a small part of your URL structure was hacked, you can expect a shorter period of time.

What Can Be Done to Clean Viruses and Recover Pages?

Finding and deleting viruses or malicious pages requires expertise. I wanted to bring together the details that came to my mind on this subject under one subheading. Once you complete these steps, you will at least have started to restore your SEO performance.

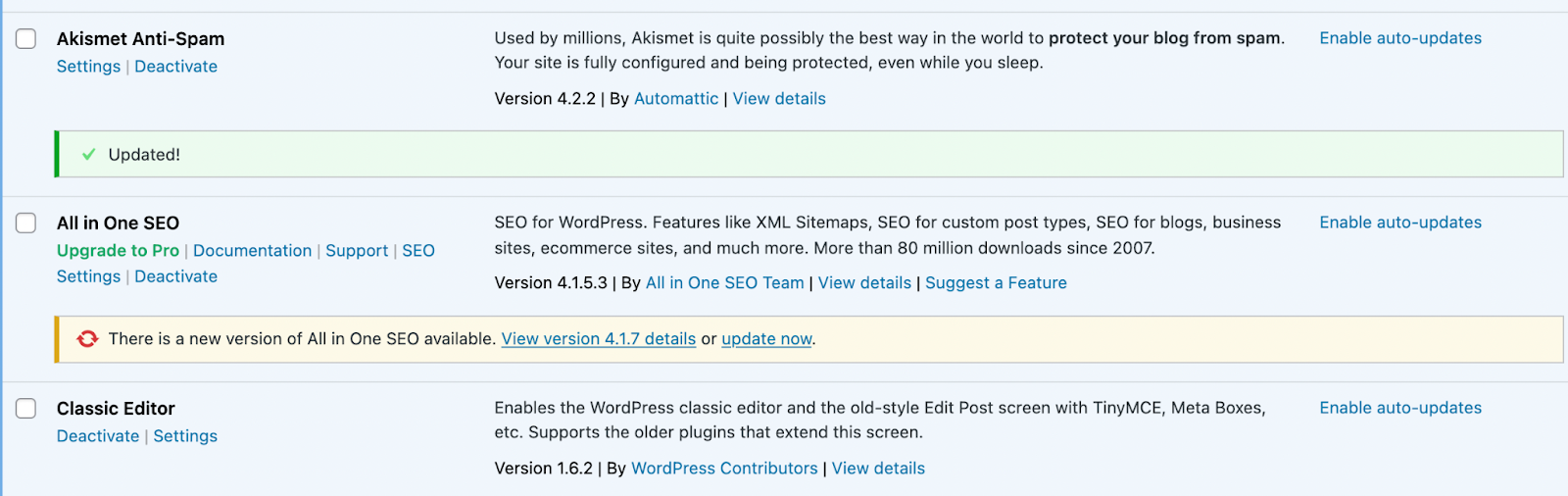

If you have a WordPress site, confirm that your plugins are up-to-date and reliable. You can get rid of outdated and unnecessary plugins:

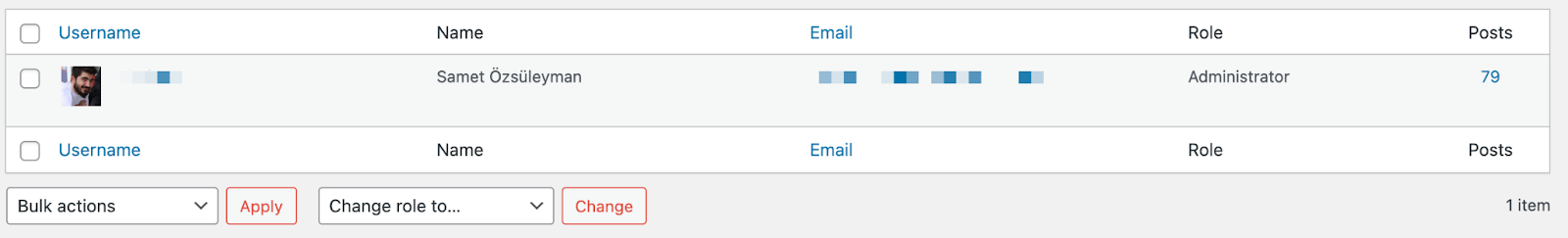

You can check if there are any users added to the dashboard other than you or your team. You should definitely change your password. In addition, you can set a custom administration panel path instead of the classic /wp-admin panel path:

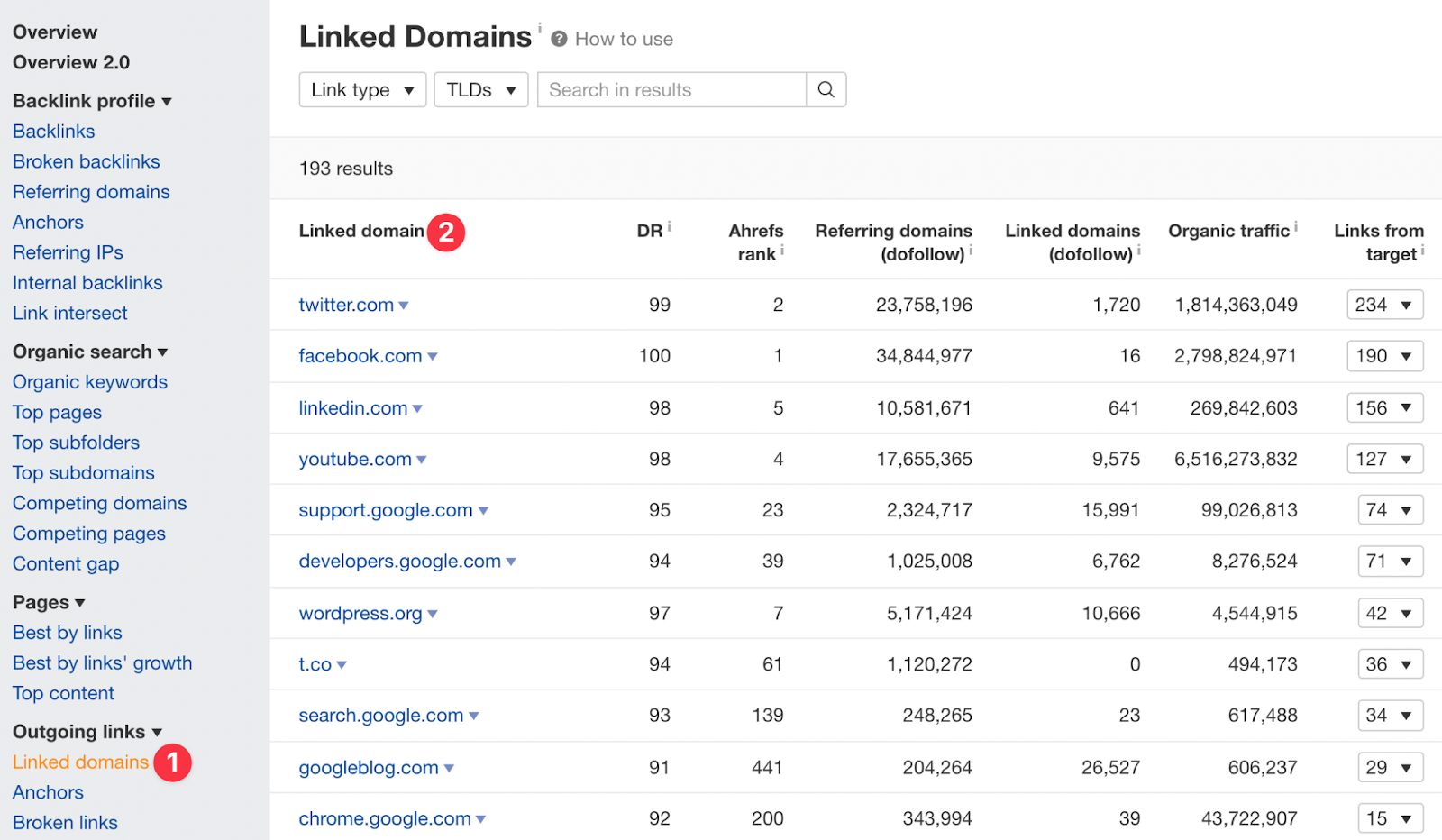

With the help of Ahrefs or a similar tool, you can detect links from your site to other sites. Since these links are often not in articles or pages but are added to files such as footer.php, these tools can give you a bulk overview. If there are outbound links you did not add, open and clean the relevant files:

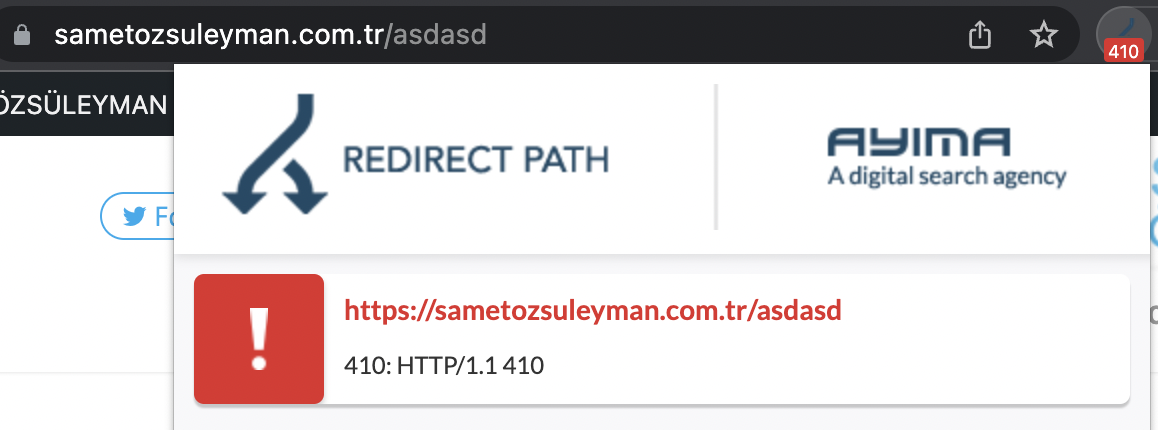
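If you prefer to inspect a template file directly, a small script can list the links that point away from your own domain. This is only a sketch: `own_hosts` and the sample markup are assumptions, and it finds plain `<a href>` links only, so links hidden behind obfuscated PHP would not show up.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def external_links(markup, own_hosts):
    """Return hrefs whose host is not one of your own hostnames."""
    parser = LinkCollector()
    parser.feed(markup)
    found = []
    for href in parser.hrefs:
        host = urlparse(href).hostname  # None for relative links
        if host and host not in own_hosts:
            found.append(href)
    return found


# Hypothetical footer.php content for illustration only.
footer = (
    '<footer><?php wp_footer(); ?>'
    '<a href="/about">About</a>'
    '<a href="https://spam.example.net/pills">.</a></footer>'
)
outbound = external_links(footer, {"example.com", "www.example.com"})
```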

Delete the redirecting pages that have nothing to do with your site and make sure they return a 404 or 410 status code:

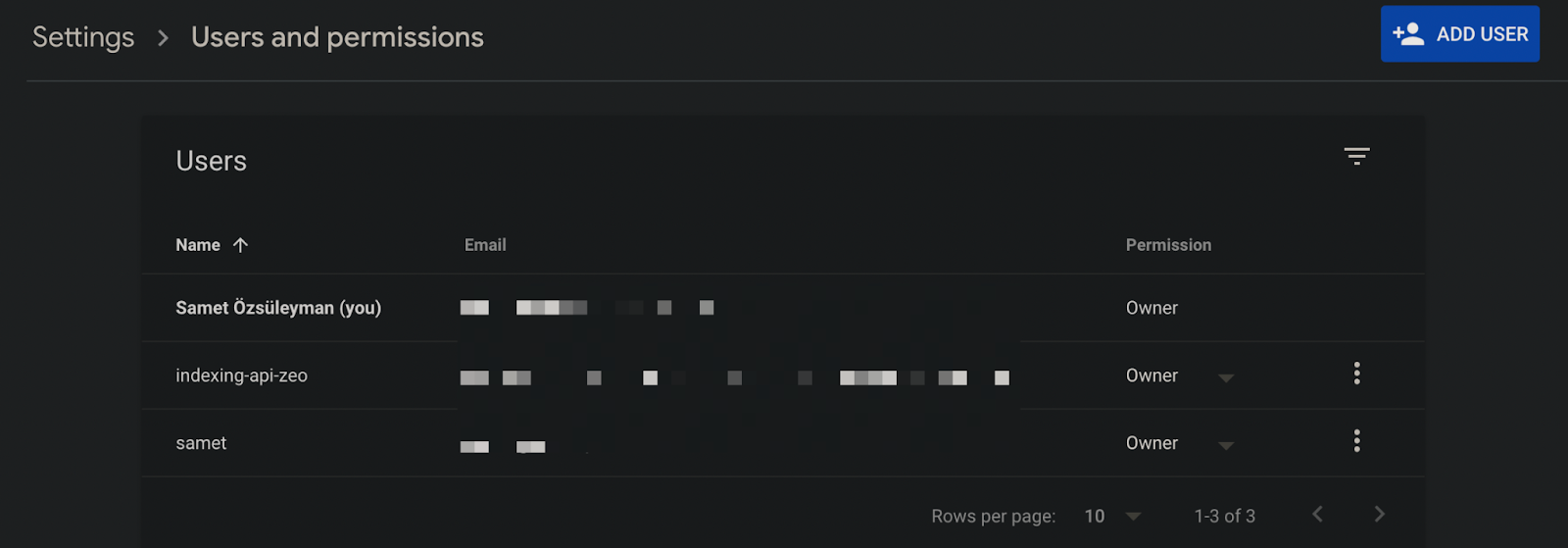
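After deleting the pages, it is worth confirming what they actually return. The sketch below uses only the Python standard library and does not follow redirects, so a remaining 301 is reported as a 301 instead of the status of its destination. The function names are my own, and `fetch_status` sends a live HEAD request, which some servers answer differently from GET.

```python
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Refuse to follow redirects so that 3xx responses surface as HTTPError."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def fetch_status(url, timeout=10):
    """Return (status_code, location_header) for url without following redirects."""
    opener = urllib.request.build_opener(NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=timeout) as response:
            return response.status, None
    except urllib.error.HTTPError as err:
        # 3xx, 4xx, and 5xx responses all arrive here.
        return err.code, err.headers.get("Location")


def is_cleaned_up(status):
    """A removed hacked page should answer with 404 or 410."""
    return status in (404, 410)
```

You could loop over the hacked URLs from your own list, call `fetch_status` on each, and review every URL for which `is_cleaned_up` is false.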

Check whether other users have been added to your Google Search Console account. With a simple meta verification code added to the site, someone else can gain access to all of your site's performance data:

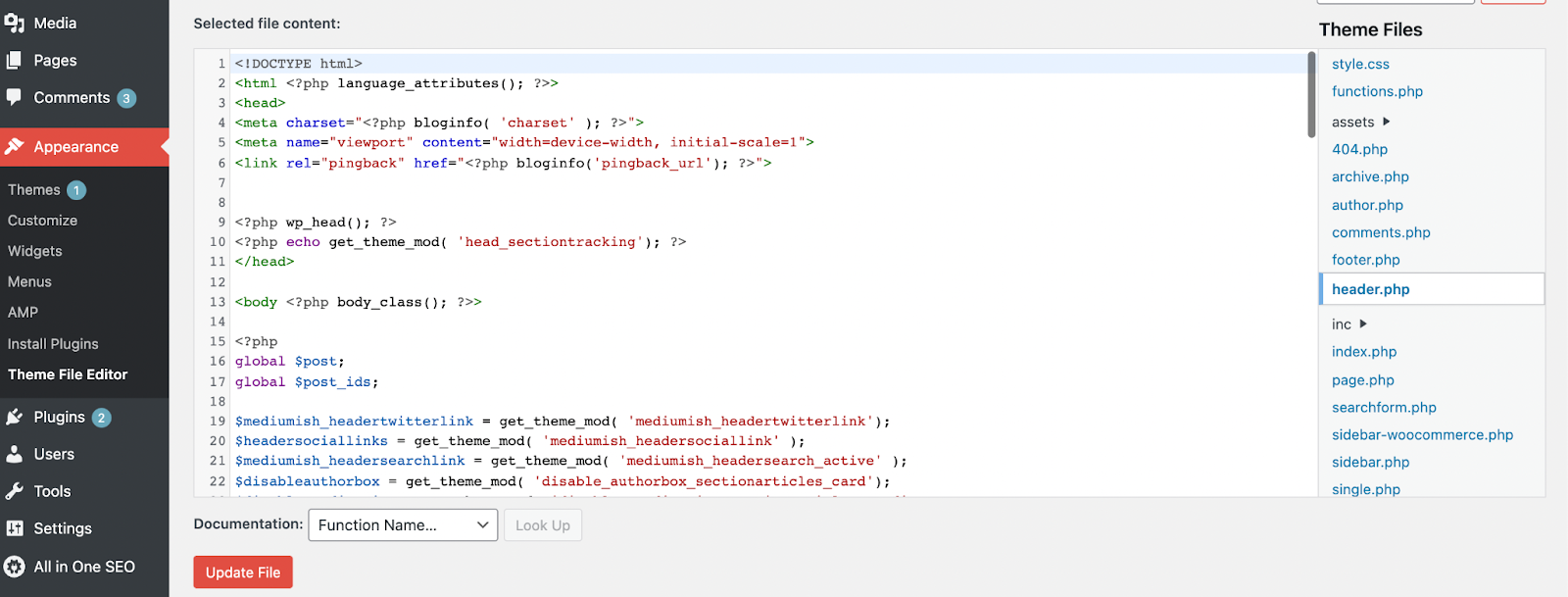

Update your themes and check whether their files have been modified. If there are any code blocks you did not add, delete them. Of course, do not forget to take a backup before these operations:

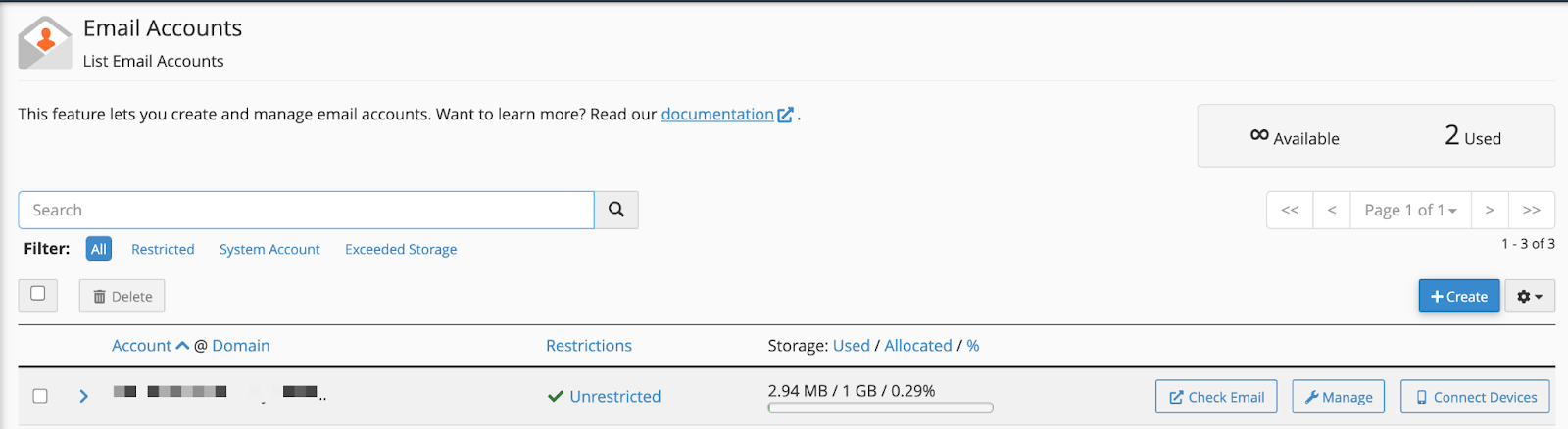
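One way to check whether theme files have been modified is to compare them with a clean copy of the same theme version. The sketch below assumes you have such a clean copy unpacked locally; the directory arguments are placeholders, and the comparison is a simple SHA-256 hash per file.

```python
import hashlib
from pathlib import Path


def file_hashes(root):
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    hashes = {}
    for path in root.rglob("*"):
        if path.is_file():
            key = path.relative_to(root).as_posix()
            hashes[key] = hashlib.sha256(path.read_bytes()).hexdigest()
    return hashes


def compare_theme(clean_dir, live_dir):
    """Return (modified, added): files that differ from, or are absent in, the clean copy."""
    clean = file_hashes(clean_dir)
    live = file_hashes(live_dir)
    modified = sorted(p for p in clean.keys() & live.keys() if clean[p] != live[p])
    added = sorted(live.keys() - clean.keys())
    return modified, added
```

Files in `added` are not necessarily malicious (child themes and caches add files too), so treat the result as a list to review.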

If you are using a panel like cPanel, check for e-mail accounts opened without your knowledge. With these e-mail addresses, attackers can use all the services of your site without your knowledge and cause your site to return a 5xx status code. After all, your site has a certain disk and bandwidth limit:

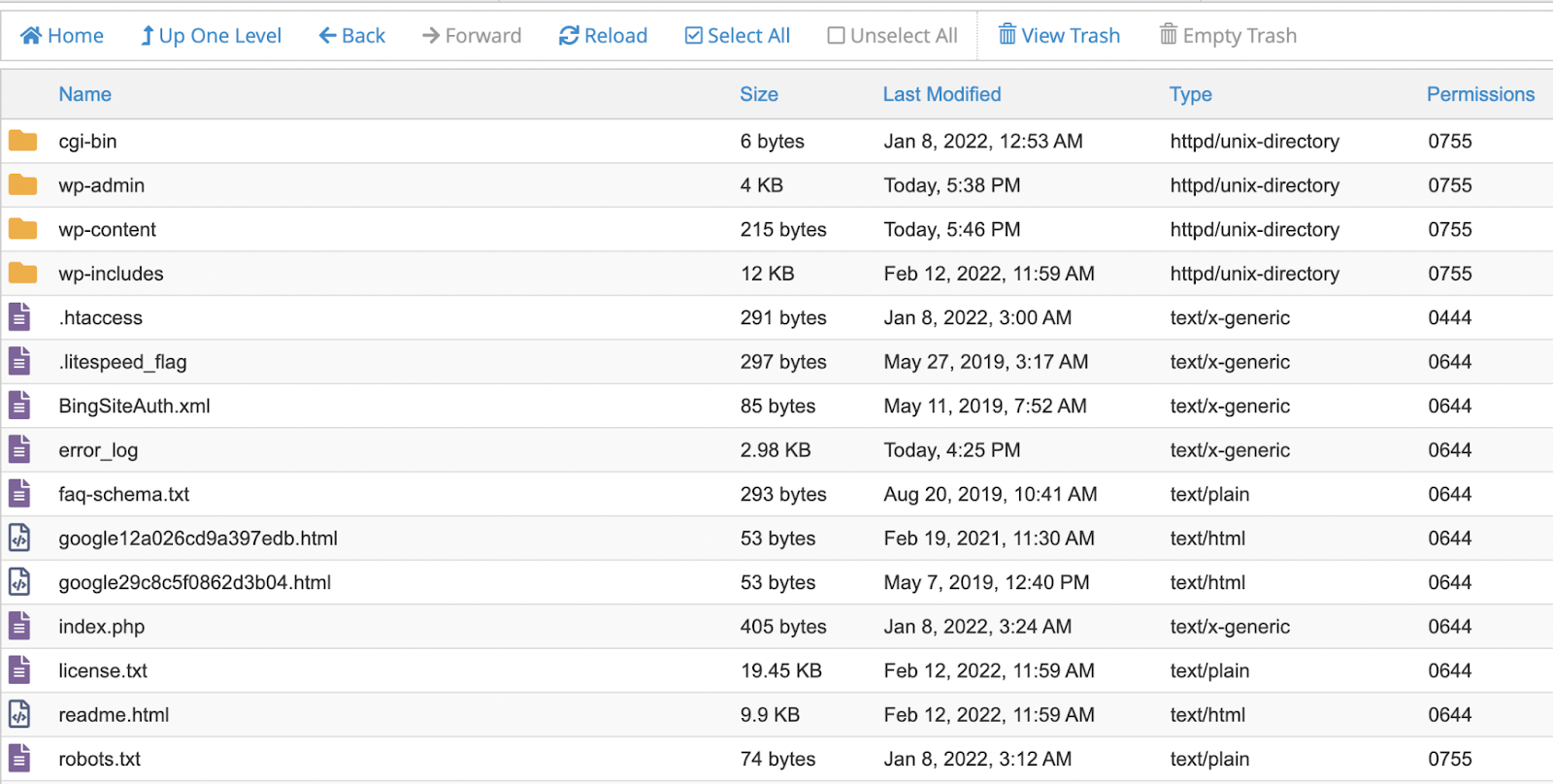

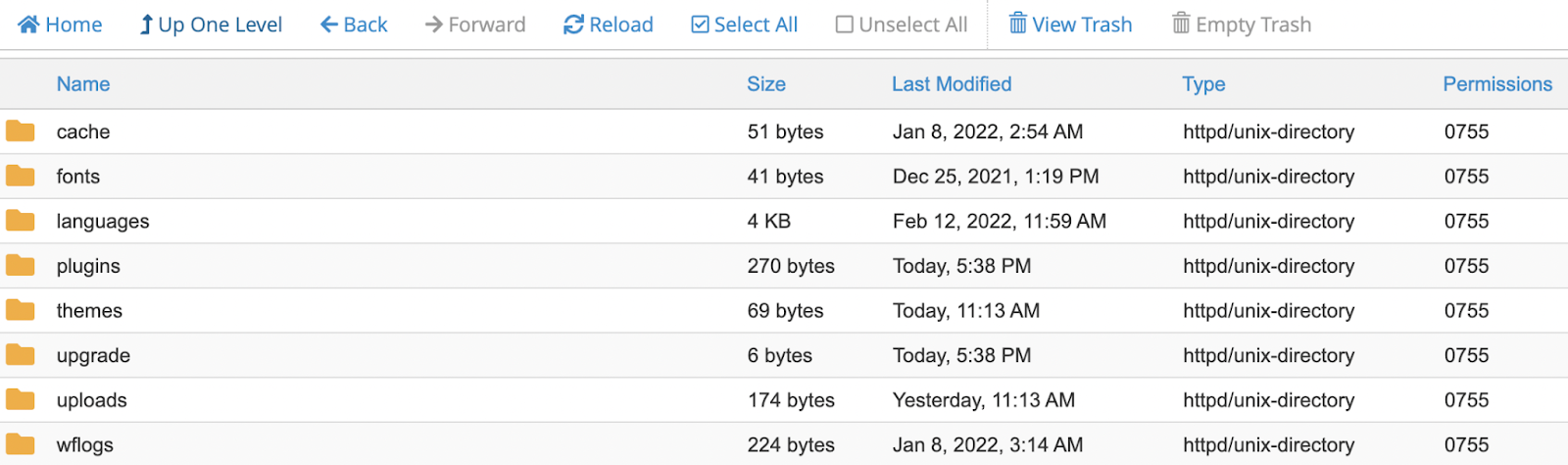

Access all your site files via an FTP program or the cPanel File Manager. Here you can re-examine recently modified files by checking the "Last modified" column. Clean up files that were added under unfamiliar names or created with names very similar to legitimate ones:
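If you have shell access or a local copy of the files, the "Last modified" check can be scripted. This is a rough sketch with a hypothetical seven-day window; keep in mind that attackers can forge modification times, so an old date does not prove a file is clean.

```python
import os
import time


def recently_modified(root, days=7, now=None):
    """Return paths under root modified within the last `days` days, newest first."""
    now = time.time() if now is None else now
    cutoff = now - days * 86400  # 86400 seconds per day
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            mtime = os.path.getmtime(path)
            if mtime >= cutoff:
                hits.append((mtime, path))
    return [path for _mtime, path in sorted(hits, reverse=True)]
```

For example, `recently_modified("/path/to/site", days=14)` would list everything touched in the last two weeks, where the path is whatever directory holds your copy of the site.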

Check your .htaccess file. Since many redirects are kept here, review the rules in it and remove any that redirect to addresses you do not recognize. After editing, check that the www, non-www, HTTP, and HTTPS versions of your site all still work as expected:
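To make the .htaccess review less error-prone, you can list only the redirect rules that point to a foreign host. The sketch below is a simple line-based scan, not a real Apache parser: it looks at `Redirect`, `RedirectMatch`, `RedirectPermanent`, `RedirectTemp`, and `RewriteRule` lines, skips targets built from server variables such as `%{HTTP_HOST}`, and the host names in the sample are made up.

```python
import re
from urllib.parse import urlparse

DIRECTIVE = re.compile(
    r"^\s*(Redirect(?:Match|Permanent|Temp)?|RewriteRule)\b", re.IGNORECASE
)
ABSOLUTE_URL = re.compile(r"https?://[^\s\"']+", re.IGNORECASE)


def external_redirects(htaccess_text, own_hosts):
    """Return (line_number, line) for redirect rules that target a foreign host."""
    findings = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        if not DIRECTIVE.match(line):
            continue  # comments and other directives are ignored
        for url in ABSOLUTE_URL.findall(line):
            host = urlparse(url).hostname
            # Hosts built from server variables (e.g. %{HTTP_HOST}) are not foreign.
            if host and "%{" not in host and host not in own_hosts:
                findings.append((number, line.strip()))
                break
    return findings


# Hypothetical .htaccess content for illustration only.
sample_htaccess = """RewriteEngine On
RewriteCond %{HTTPS} off
RewriteRule ^(.*)$ https://%{HTTP_HOST}/$1 [R=301,L]
RewriteRule ^shop/(.*)$ http://spam.example.net/$1 [R=301,L]
# Redirect 301 /old https://evil.example.org/
Redirect 301 /old https://www.example.com/new
"""
foreign = external_redirects(sample_htaccess, {"example.com", "www.example.com"})
```

Rules that only use `RewriteCond` tricks (for example, redirecting only visitors who arrive from Google) still need a manual read, since this scan checks targets, not conditions.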

Always manually inspect the contents of subfolders. Consider examining different file types as well as files that look suspicious to you:

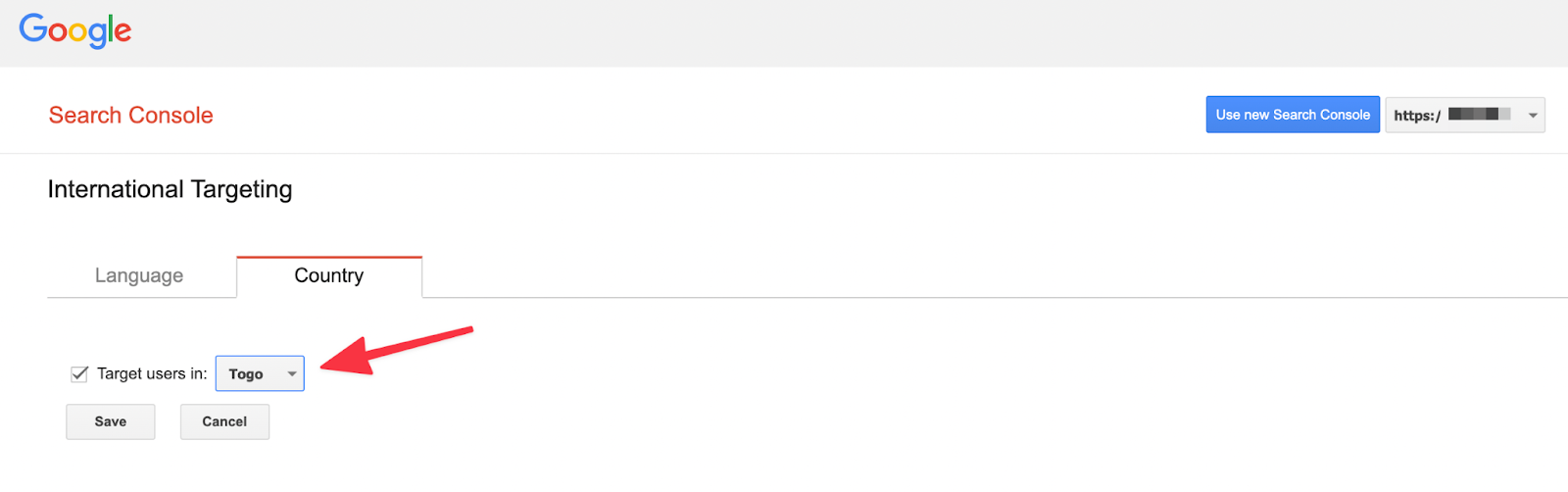
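A manual inspection of subfolders can be supported by a scan for code patterns that often appear in injected files. The patterns and file extensions below are my own assumptions, not a complete signature list; legitimate plugins sometimes use the same functions, so every hit still needs a human look.

```python
import os
import re

# Assumed patterns: common PHP obfuscation calls, matched on raw bytes.
SUSPICIOUS = re.compile(
    rb"eval\s*\(\s*base64_decode|gzinflate\s*\(|str_rot13\s*\(", re.IGNORECASE
)


def suspicious_files(root, extensions=(".php", ".js", ".ico", ".txt")):
    """Return sorted paths under root whose content matches a suspicious pattern."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.lower().endswith(extensions):
                continue
            path = os.path.join(dirpath, name)
            with open(path, "rb") as handle:
                if SUSPICIOUS.search(handle.read()):
                    hits.append(path)
    return sorted(hits)
```

Including extensions such as `.ico` is deliberate here, because the point of the step is to look at file types that would not normally contain code.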

As a final check, review the International Targeting report. In some cases, this setting is changed so that your site targets a different country:

As Google states in its own documentation, you can quarantine your site while carrying out these operations. You can redirect your site to a different page that returns a 503 response code. While the site is offline, you do less harm to your users and prevent your visitors from being affected. Blocking these pages with robots.txt alone is not sufficient, because your visitors can still reach them.
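To show what a 503 quarantine response looks like, here is a minimal WSGI sketch using only the Python standard library. It is not how a WordPress or cPanel site would normally be put into maintenance mode; it only illustrates the response itself. The `Retry-After` value of one hour is my own assumption.

```python
def maintenance_app(environ, start_response):
    """Answer every request with 503 so crawlers treat the outage as temporary."""
    body = b"Site temporarily unavailable for maintenance.\n"
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
        ("Retry-After", "3600"),  # assumed value: ask clients to retry in an hour
    ]
    start_response("503 Service Unavailable", headers)
    return [body]


# To try it locally (not executed here):
#   from wsgiref.simple_server import make_server
#   make_server("", 8000, maintenance_app).serve_forever()
```

The important part is the status line: a page that says "under maintenance" but returns 200 could be indexed as regular content.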

As I conclude this post, I would like to emphasize that this situation is quite serious and that fixing the problems it causes takes time. After these actions, which I can summarize under the name "hack", your SEO performance may suffer, your turnover may decrease, and your competitors may get ahead of you. Recovering from the declines can be expensive.

I recommend that you make sure your site is secure and take precautions at every point where you think you may be attacked. I wish you virus-free and clean SEO projects :)