How to Use Google Sheets as a Browser

Technical SEO analysis of websites is a critical step to optimize a website's performance and rank high in search engines. However, this process often requires expensive tools and sophisticated software. So, is it possible to easily perform the same processes for free with the help of artificial intelligence? The answer is yes! By adding AI-powered Apps Script code blocks to Google Sheets, you can get a powerful, flexible, and free solution for pulling and analyzing technical SEO data on websites!

Google Sheets' user-friendly interface combines with Apps Script's automation and customization capabilities. This combination addresses the needs of both individual SEO professionals and small businesses that have just created a website. It's an ideal alternative for teams that don't have access to expensive tools, as well as a quick analysis and prototyping environment for professionals.

In this blog post, we will show you step-by-step how to perform a crawl of a website with Google Sheets. From status codes to canonical tags, from hreflang data to meta tags, you will learn how to collect SEO-critical data of a website! You will also find a sample Google Sheets file that will make this process easier.

Apps Script Solutions with AI for Users without Coding Skills

Apps Script uses a JavaScript-based language and integrates with Google services. However, not everyone is fluent in the language or has a basic understanding of it. This is where artificial intelligence comes in, making it easier to write complex code in a meaningful way:

- Code Recommendations: AI-powered tools can generate Apps Script code for you just by telling you what you want to do. For example, if you say, “Create an Apps Script function on Google Sheets so that I can pull the meta robots tag from the source code of a URL,” AI can write an accurate script based on that command.

- Fast Error Resolution: You can get AI guidance to solve or optimize bugs that arise during coding. This saves time and speeds up the learning process.

How Can You Determine Which Code You Need to Print?

Artificial intelligence provides a huge advantage when it comes to creating scripts that fit your personal or project-based needs. For example:

- If you want to pull specific meta tags such as meta title or meta description, you can ask AI to write a custom code on how to get this data.

- For more complex operations, such as checking canonical relationships between URLs, AI-based solutions can help even beginners achieve a professional result.

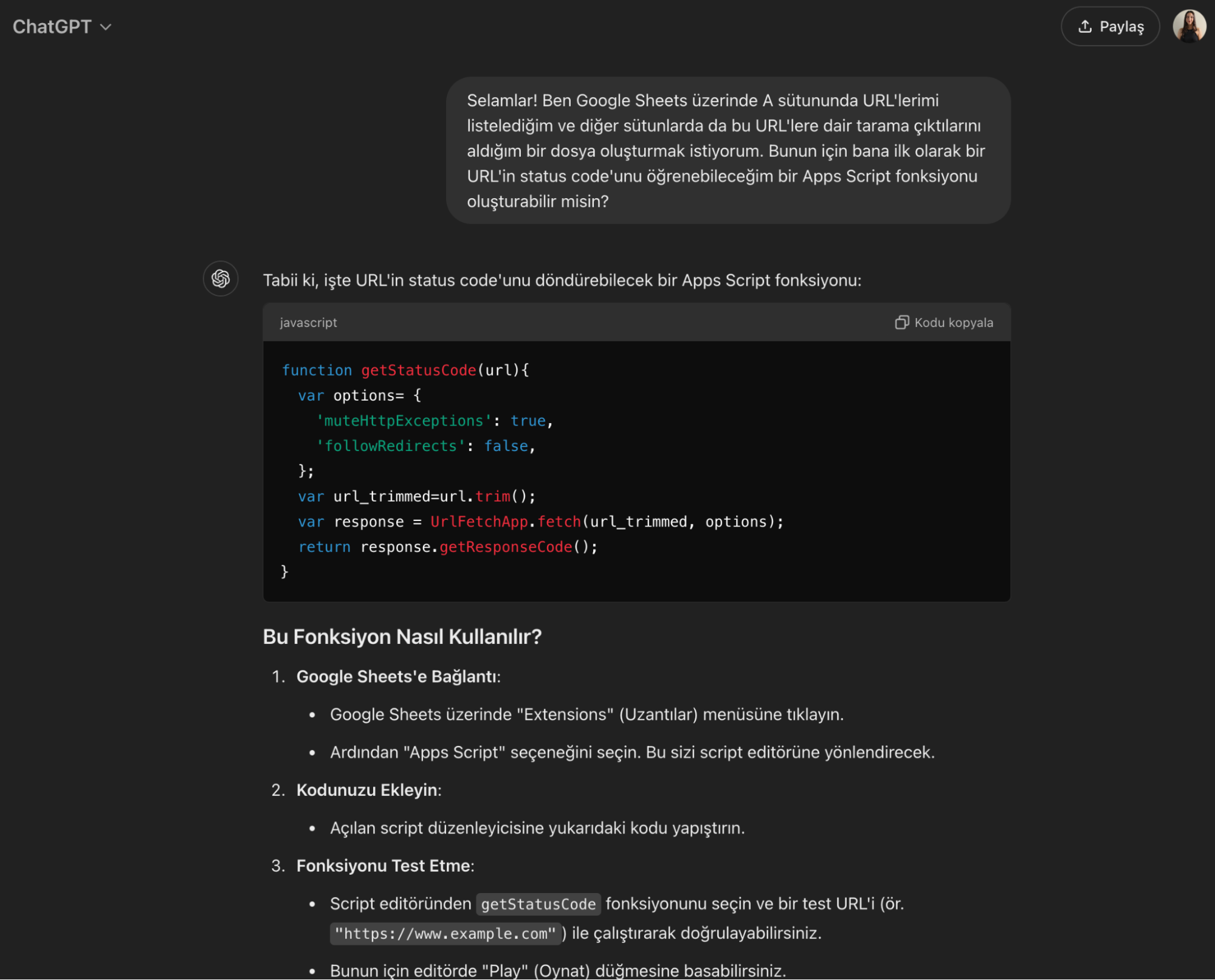

For example, I first print a block of code to ChatGPT using the following prompt to check the status code of URLs:

After testing the working of this code in the following steps, I had a sample to print the other functions I wanted. As you know, writing prompts for artificial intelligence always brings us one step closer to the result we want. You can also see the details in our article titled How to Simplify Your Work Using Chat GPT!

My prompt for printing other functions by instantiating a running code block was as follows:

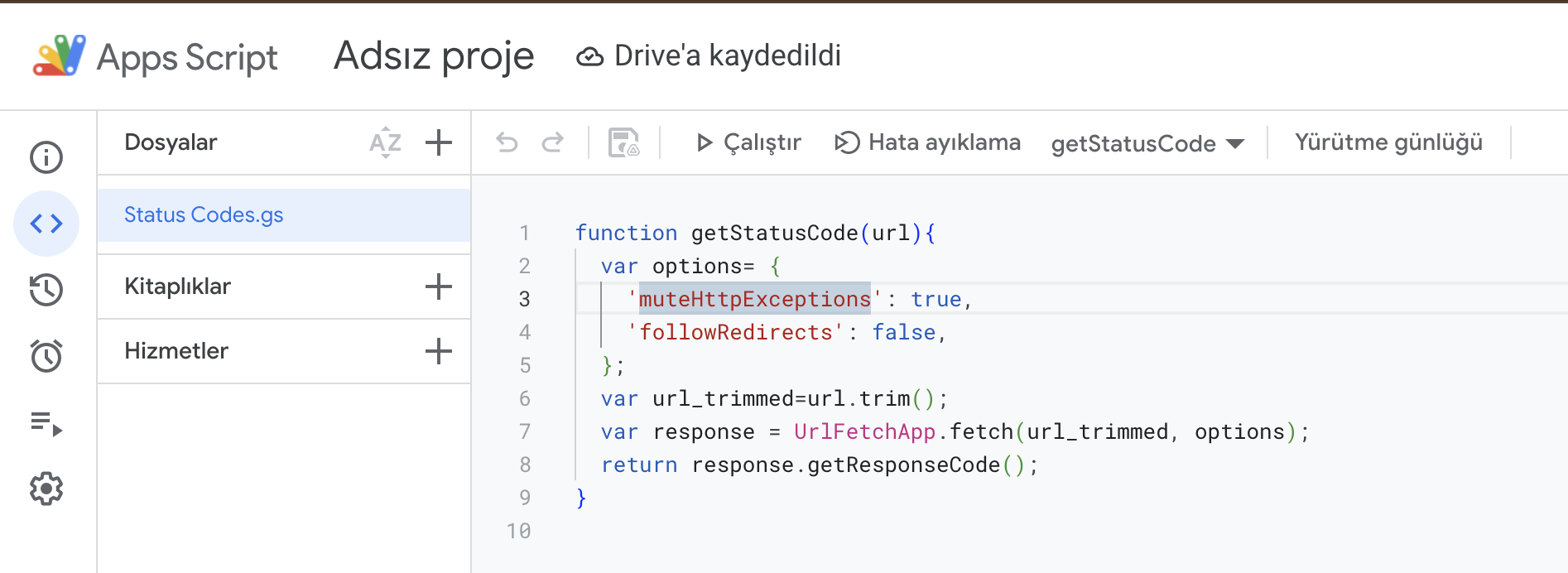

“Hi, I want to create a Google Sheets Apps Script. I am an SEO expert and I work with a lot of URLs at the same time. On the Google Sheets screen where I list my URLs, I want to be able to see the status codes, canonical tags, and the final URL to which these URLs are redirected with 301 or 302. I can see the status codes with an Apps Script that I have already written, and with a similar script, I want to see the final URL where the URLs I have are redirected with 301 or 302. For example, this is my apps script where I can pull the status codes of these URLs:function getStatusCode(url){

var options= {

'muteHttpExceptions': true,

'followRedirects': false,

};

var url_trimmed=url.trim();

var response = UrlFetchApp.fetch(url_trimmed, options);

return response.getResponseCode();

}

In parallel with this, create an apps script that will pull the pages to which the URLs I have are redirected, if any, and if not, it will write “No Redirect”.”

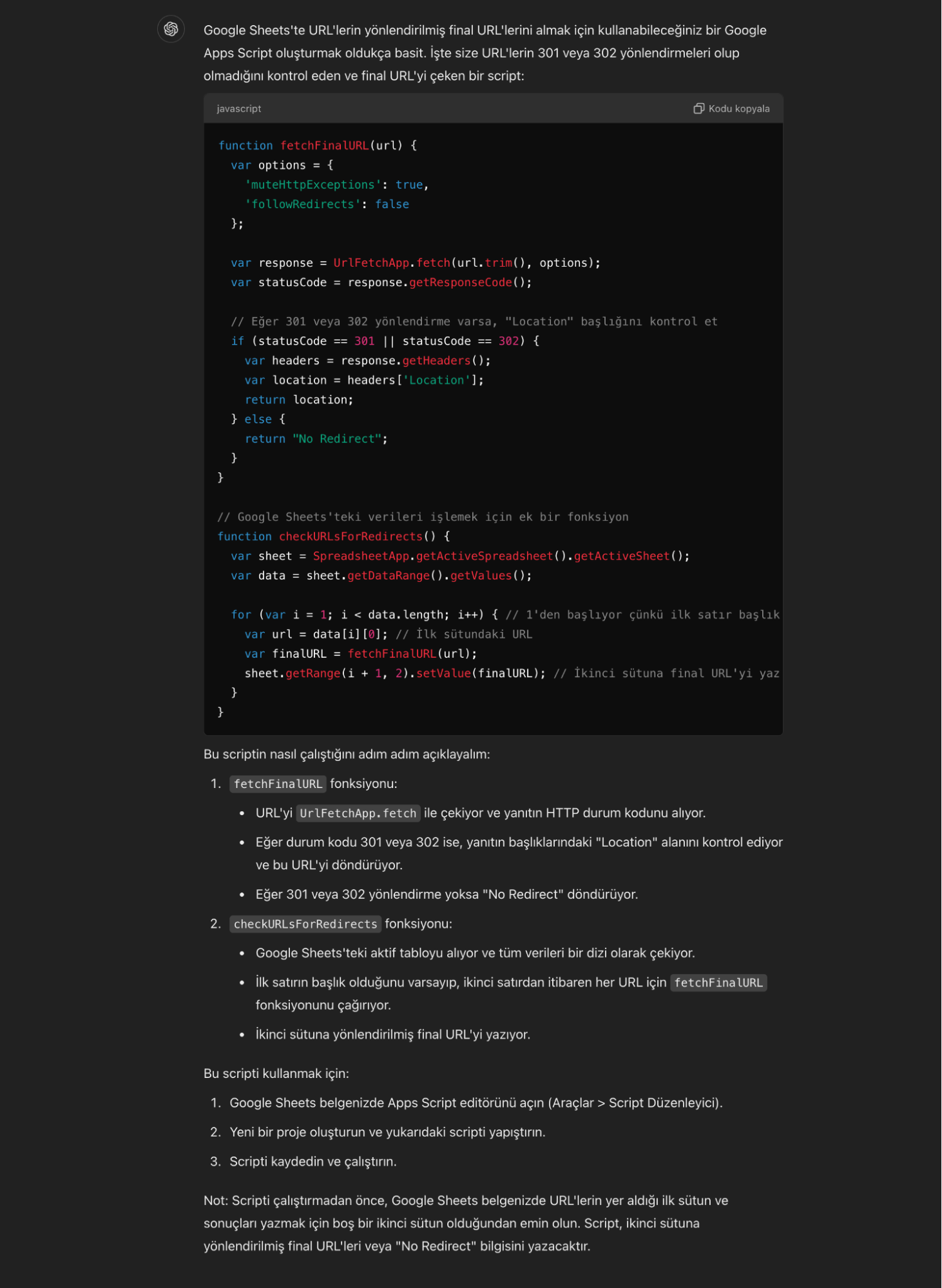

The result I got from ChatGPT in response to this prompt and it worked was as follows:

Voila! It's that easy to print a block of JS-based code that works with AI. Whatever crawl results you want to see for a URL, you can print a function that works with AI and enjoy your free web crawler.

Preparations for Web Crawl with Google Sheets

Before you start web crawling with Google Sheets, you need to complete a few basic preparatory steps. These steps will enable you to use Google Sheets' Apps Script feature effectively and prepare the environment for the crawl process. Here is a step-by-step guide:

Prepare Google Sheets

- Create a New Google Sheets File: First, create a new spreadsheet on the Google Sheets platform. This file will be a basic workspace for your crawl.

- Table Layout: Specify column headings for the data you want to pull (e.g. status code, canonical tag, meta descriptions). For example:

Column A: URL Listesi

Column B: Meta Robots

Column C: Status Code

Column D: Redirected URLs

Column E: Canonical Tag

Column F: H1

Column G: Title

Column H: Description

Column I: Hreflang

Column J: Pagination

Access Google Apps Script

Once your Google Sheets file is ready, you can start working with Apps Script:

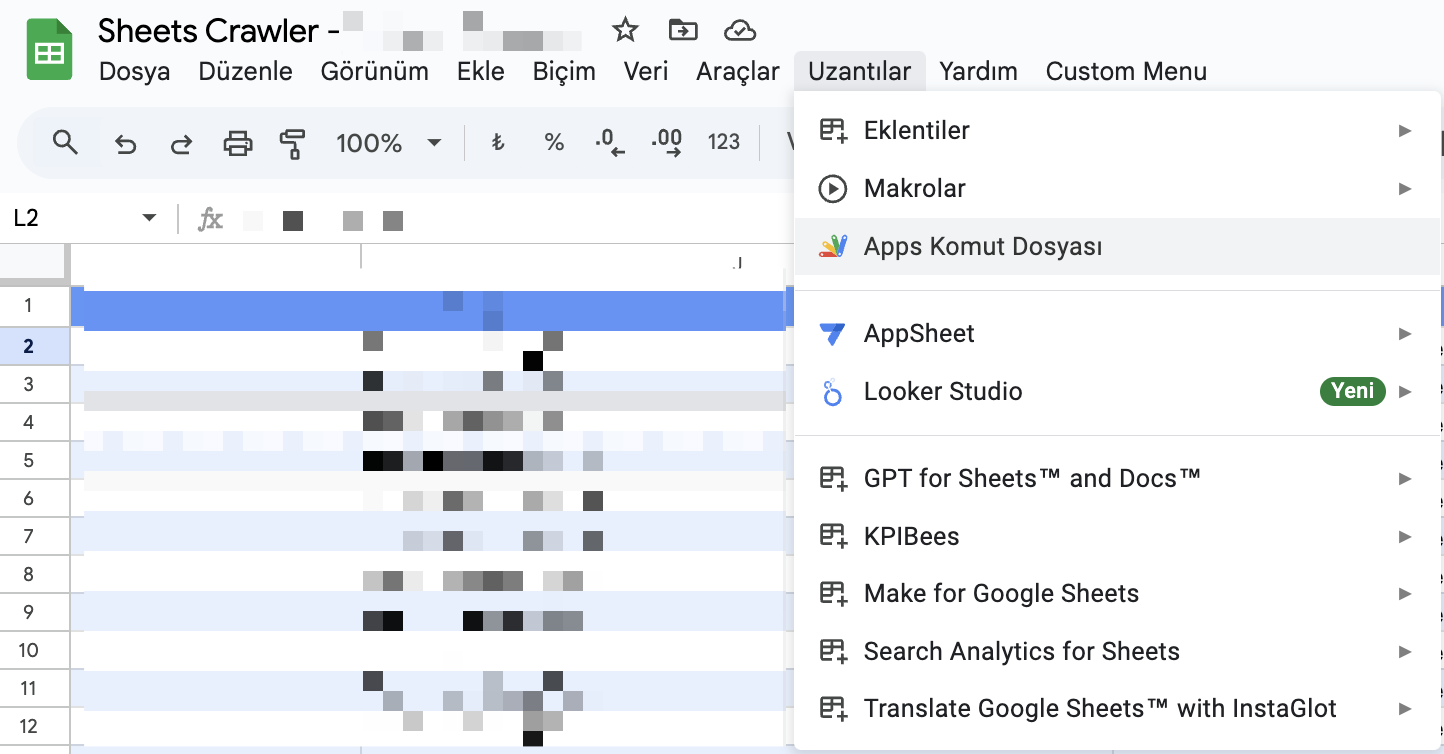

1. Go to Menu: From the top menu of Sheets, click on the “Extensions” tab.

2. Open Apps Script: Click “Apps Script” from the drop-down menu. This will redirect you to the Apps Script editor.

Create Your First Script

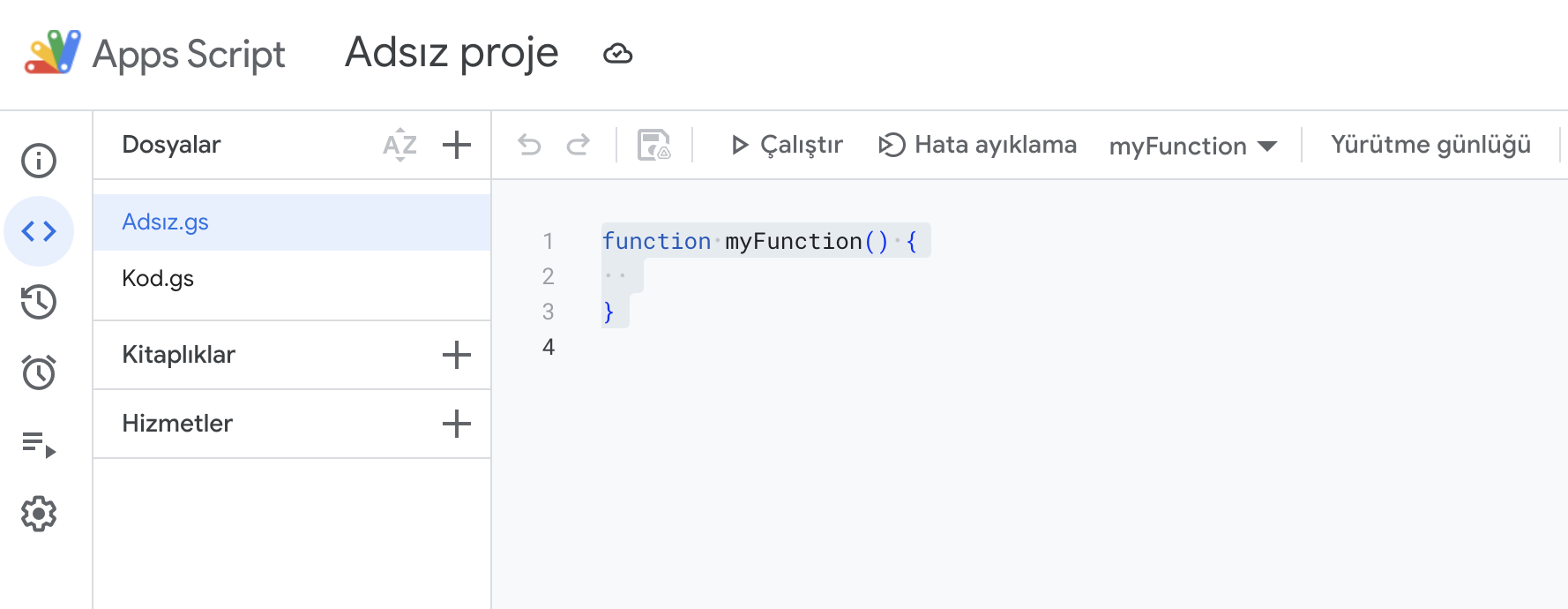

When the Apps Script editor opens, follow the steps below:

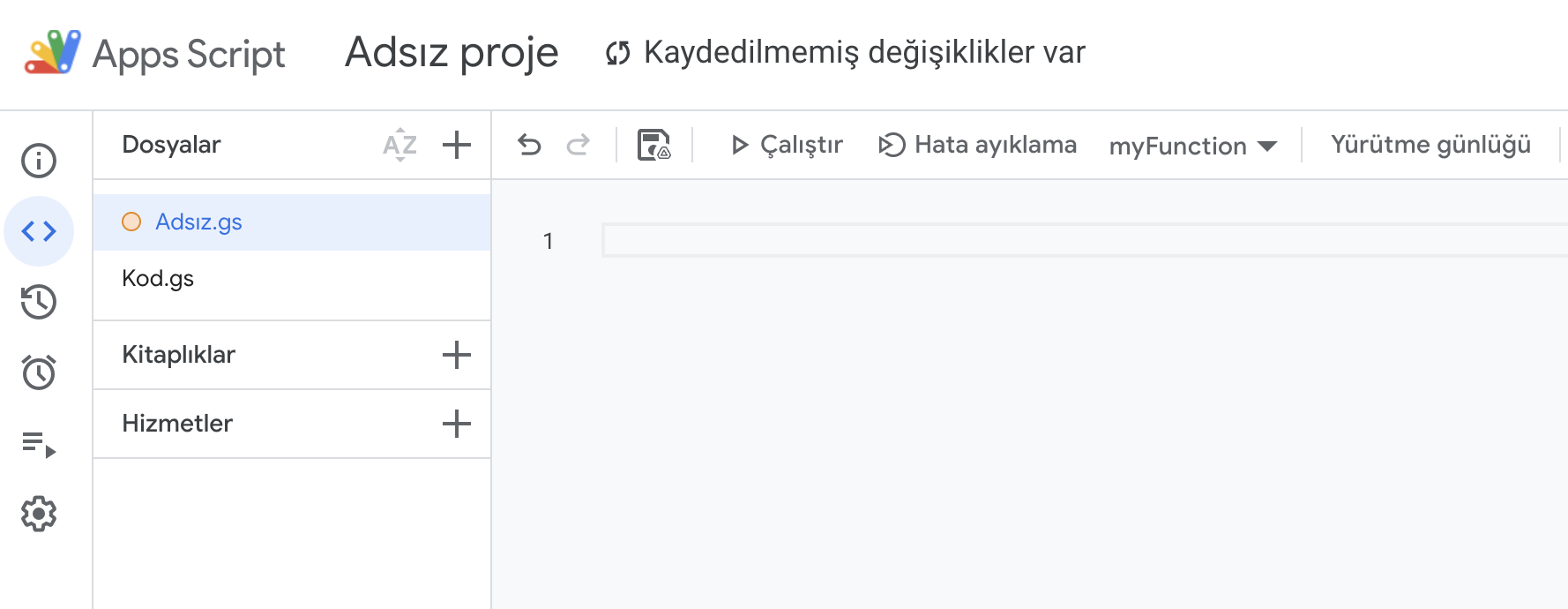

1. Delete Default Code: You can delete the default codes in the opened editor:

2. Paste Your Script: Paste here the script you created with the help of ChatGPT, which is required for the crawl process. For example, a basic script that provides the status code of a URL as an output, like the one above:

function getStatusCode(url){

var options= {

'muteHttpExceptions': true,

'followRedirects': false,

};

var url_trimmed=url.trim();

var response = UrlFetchApp.fetch(url_trimmed, options);

return response.getResponseCode();

}

3. Save and Name it: Give your script file a meaningful name, for example, “Status Codes”.

4. Confirm Authorization Requirements: When running the script, Google will ask you to authorize your account. Give the necessary permissions by following the steps on the screen.

Integrate Apps Script with Google Sheets

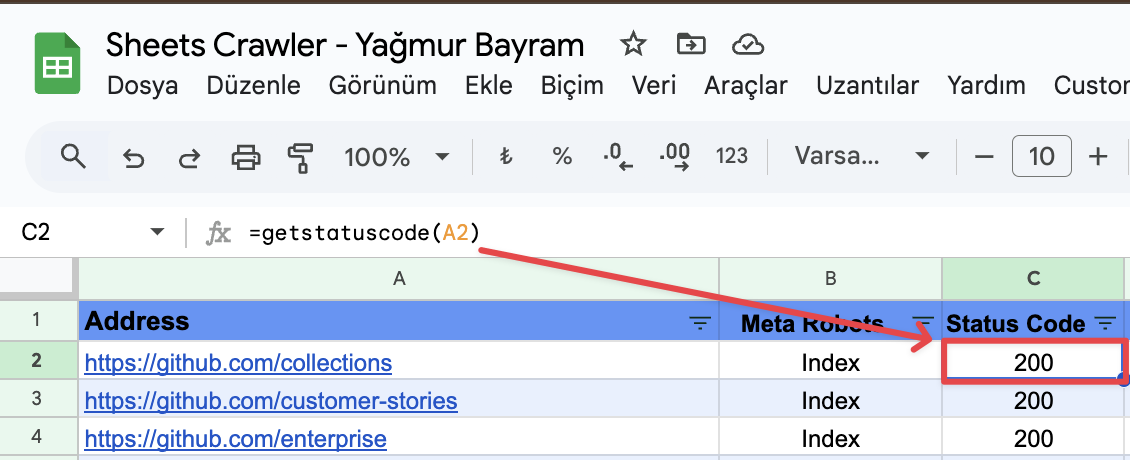

Custom Functions:

The scripts you write can be used as custom formulas in Google Sheets. For example, to check the existing meta robots tags of a URL, you can type the following formula in a cell:

=getStatusCode(A2)

- This formula will return the HTTP status code for the URL in cell A2.

Test and Optimize

- Test URLs: It is useful to add a few test URLs to see if your crawl is working correctly. For example, try a 404 error page and a correct redirect URL.

- Error Checks: How does your script react to errors? You can perform revisions by checking the messages returned when the URL is unreachable or incorrect.

Conclusion: You are Now Ready!

After completing these preparatory steps, you can now write more advanced scripts for SEO analytics or extend existing code.

You can access the sample Google Sheets Crawler file we have created for you here, and you can use the working codes in your cases by examining the Apps Script area.