The Complete List of Google Penalties - Manual Actions Guide

Websites that violate Google's guidelines can receive various penalties. When webmasters say "Google has banned my website" or "I am banned by Google", what they usually mean is a Google penalty, or more specifically a manual action. By covering Google's penalties together with Google's official explanations about manual actions, I want this article to serve as a handbook.

The purpose of the penalties is to "clean up" search results by removing spammy content, so that the results become more useful to users. The release of the Penguin algorithm in April 2012 was a critical step towards understanding web spam techniques.

Web Spam Overview

Over the past years, Google has published statistics showing how it deals with spam, and it has occasionally reminded webmasters of its warnings on its blogs.

To summarize the 2019 spam statistics:

- More than 25 billion spammy pages.

- Google received 230,000 spam reports in 2019 and was able to take action on 82% of them. In 2018, it received 180,000 reports and took action on 64% of them.

- 4.3 million manual action messages were generated to website owners.

- While the amount of spammy content produced by users increased in 2018, it did not increase in 2019.

- Backlink spam has become the most popular spam type.

- The impact of spammy content on users in 2019 was 60% lower than in 2018.

- 90% of backlink violations were detected by algorithms.

- Google announced changes to how nofollow is used and introduced the rel="sponsored" and rel="ugc" attributes.

- Spam generated through hacked websites remained stable compared to 2018.

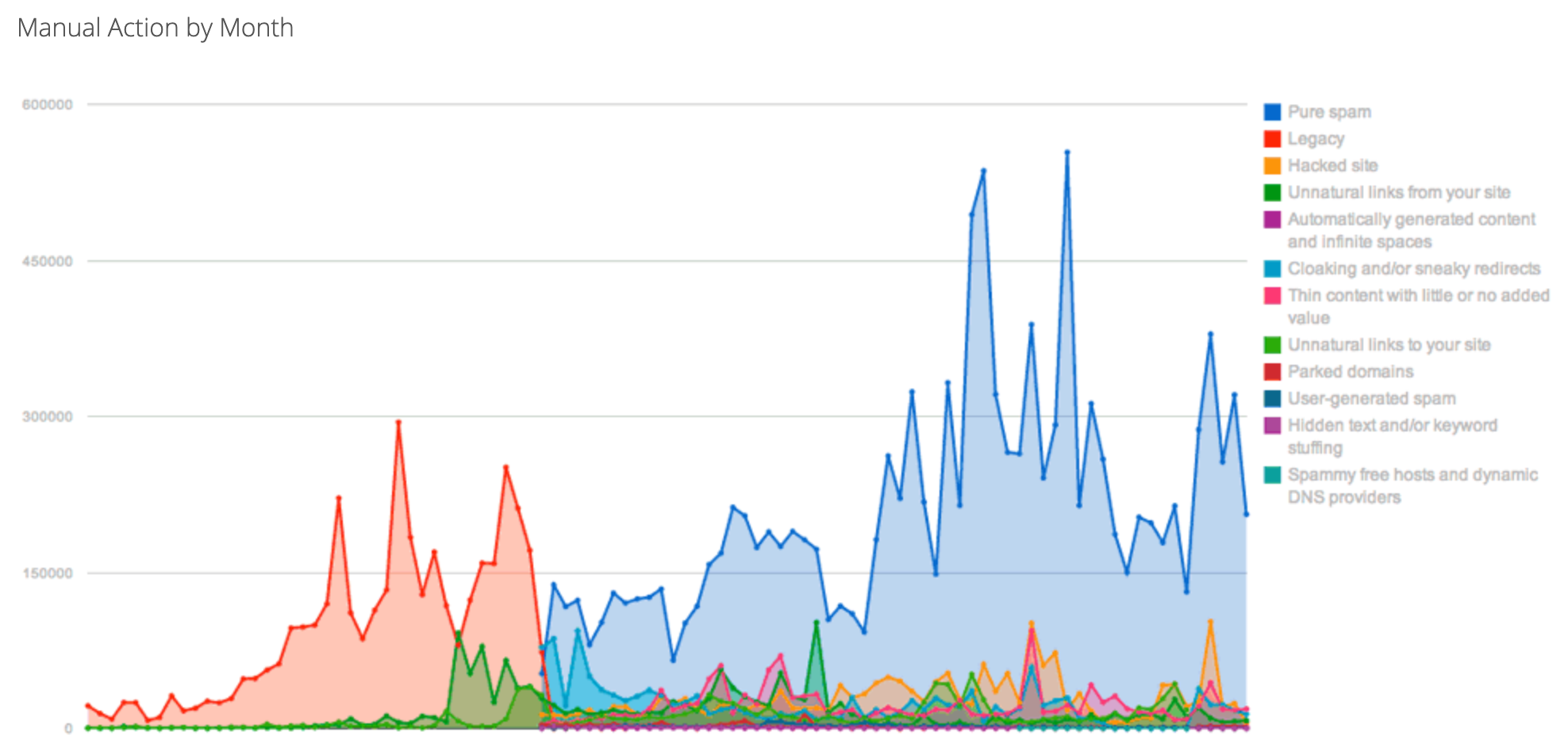

Since Google's spam-fighting statistics page now redirects elsewhere, I took a quick look at the latest data in the web archive. Here is a diagram from 2018 showing that 0.22% of sites in the index were given a manual action:

Pure spam is one of the most common types of spam. You can find out more about it later in this article.

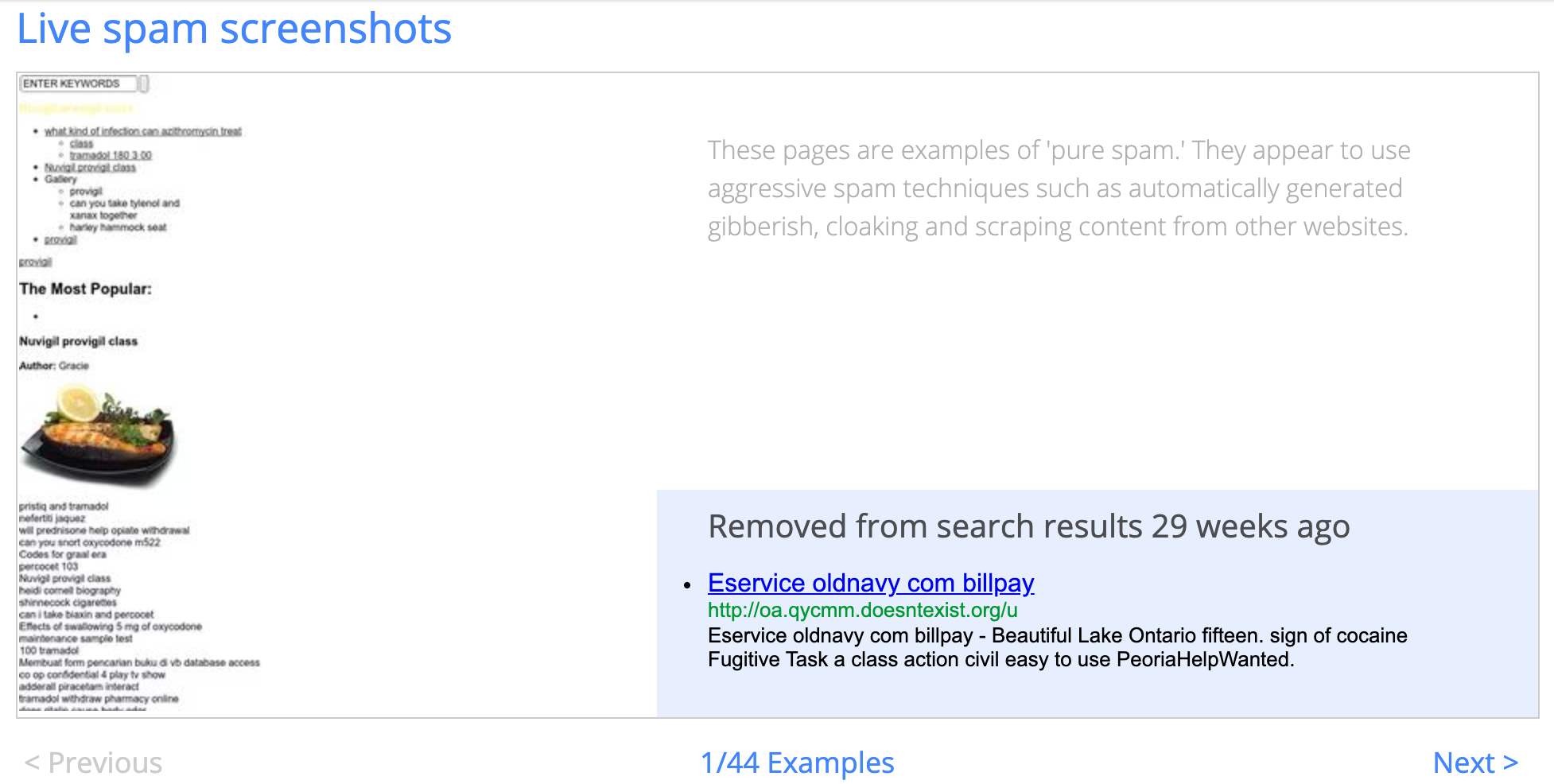

In previous years, Google shared some examples of websites that were given manual actions:

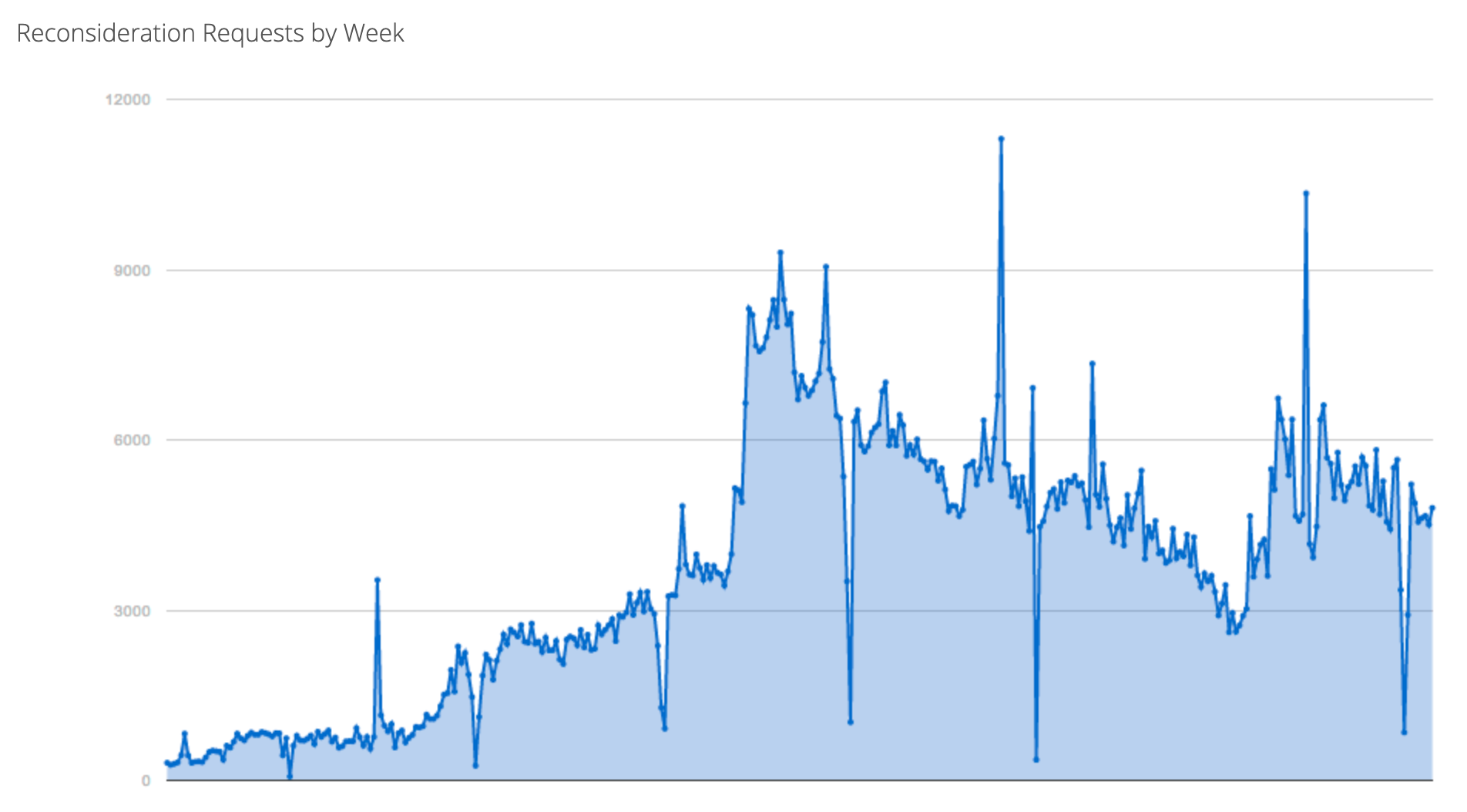

The screenshot I took in February 2018 shows the reconsideration requests by week from 2006 to that date:

With machine learning, Google has been able to detect spam types more quickly, both through manual actions and through algorithms. However, Google's explanations indicate that algorithms can't catch all spammy behavior and that the system is not perfect:

Another blog post explains that the systems used to detect spam are not flawless and remain open to improvement. You can access the 2018 spam statistics here. We will see how Google fights spam in 2021.

Google uses spam reports to improve its spam detection algorithms.

You can report webspam by filling out the spam report form, although it is almost impossible to tell whether a given report has been acted on. Manual actions can apply either to specific URLs or to the entire website.

What is a Google Manual Action Penalty?

As the name indicates, manual action is the penalty given out to some pages or websites by a human reviewer.

From the outside, it is almost impossible to tell whether Google has penalized a site. Only the site's owners and users in Search Console can see the manual actions issued against it. For this reason, I advise you not to rely on third-party "Google ban checker" tools.

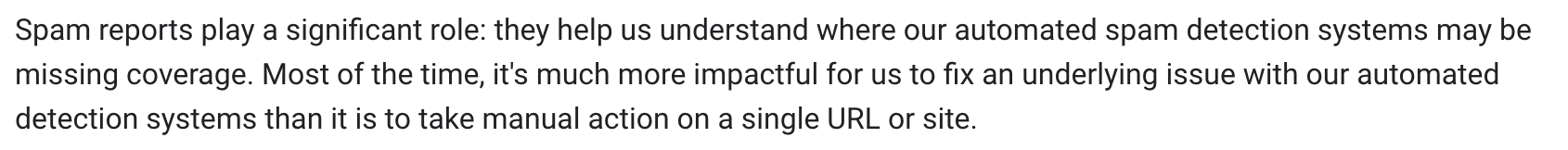

John Mueller stated that most manual actions are now handled algorithmically and are probably no longer needed:

What Happens When a Penalty is Received from Google?

When Google applies a manual action to your site:

- Your site's ranking in search results may drop,

- Your rich results may no longer be displayed in the SERPs,

- Your site may be removed from the Google index,

- Your site's organic conversion rate may drop,

- Your site's organic traffic may decrease,

- If you're on platforms such as Google News, your traffic there may flatline.

Your site's traffic may drop suddenly after a manual action. Noting the dates on which a manual action was issued and removed will help with future analyses.
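If you export your daily clicks, a small script can make this before/after comparison for you. Below is a minimal sketch in JavaScript (Node.js), assuming an array of `{ date, clicks }` rows, for example exported from the Performance report in Search Console. The `compareTraffic` name and the default 14-day window are my own illustrative choices, not anything Google prescribes.

```javascript
// Minimal sketch: compare average daily clicks before and after a date
// (e.g. the day a manual action was issued or removed).
// Assumes rows like { date: "YYYY-MM-DD", clicks: number }; names are illustrative.
function compareTraffic(rows, actionDate, windowDays = 14) {
  const dayMs = 24 * 60 * 60 * 1000;
  const pivot = Date.parse(actionDate); // date-only strings parse as UTC midnight
  const before = [];
  const after = [];
  for (const { date, clicks } of rows) {
    const diffDays = Math.round((Date.parse(date) - pivot) / dayMs);
    if (diffDays < 0 && diffDays >= -windowDays) before.push(clicks);
    else if (diffDays >= 0 && diffDays < windowDays) after.push(clicks);
  }
  const avg = (xs) => (xs.length ? xs.reduce((a, b) => a + b, 0) / xs.length : NaN);
  const avgBefore = avg(before);
  const avgAfter = avg(after);
  // Percentage change; a negative value means traffic dropped.
  return { avgBefore, avgAfter, changePct: ((avgAfter - avgBefore) / avgBefore) * 100 };
}

// Usage: a manual action issued on 2021-03-10, compared over a 2-day window.
const rows = [
  { date: "2021-03-08", clicks: 100 },
  { date: "2021-03-09", clicks: 120 },
  { date: "2021-03-10", clicks: 40 },
  { date: "2021-03-11", clicks: 50 },
];
console.log(compareTraffic(rows, "2021-03-10", 2));
```

Keep in mind that seasonality and algorithm updates also move traffic, so a drop around the date is a clue, not proof.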

What is the Duration of a Manual Action Penalty?

There is no set duration for manual actions. Some are removed within a few days, while others can last 1 or 2 years. Fixing the problems and submitting a reconsideration request will shorten this period.

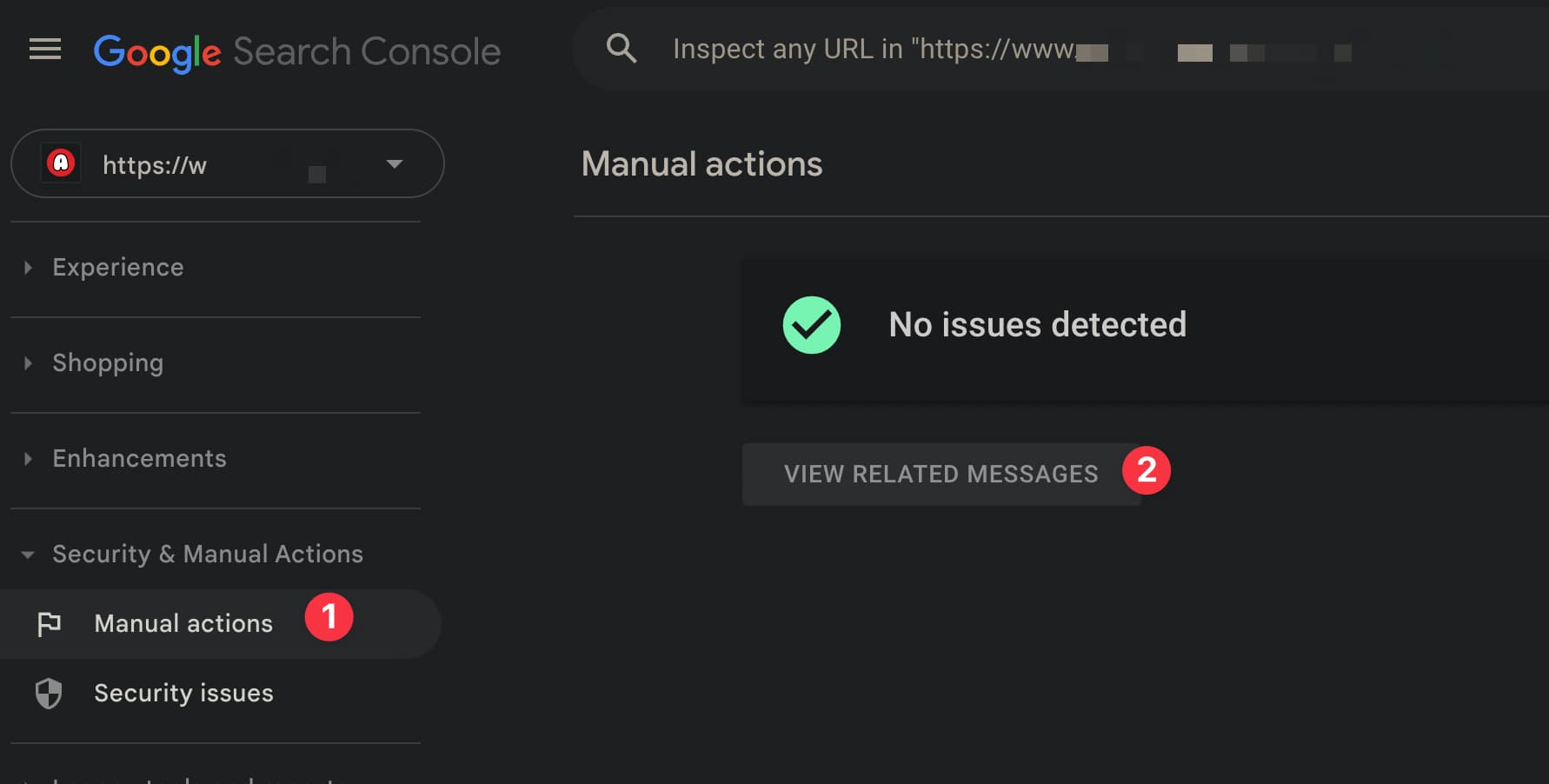

You can log into Search Console to find out whether your site was penalized in previous years:

Manual Action Types (Google Penalties)

There are several types of manual actions, and I would like to discuss each of them in detail. Unlike with algorithmic penalties, website owners receive an e-mail when a manual action is applied.

Keyword stuffing

Keyword stuffing, the practice of loading a page with keywords to attract traffic rather than to inform users, can lead to a manual action.

Examples of keyword stuffing:

- Pages that list phone numbers without any valuable content,

- Pages created for spam purposes with random content such as lists of city or district names,

- Repeating the same keywords unnaturally on the page and in meta description tags, as in: "We sell custom coffee tables. Our coffee tables are handmade. If you're thinking of buying a custom coffee table, please contact our custom coffee table specialist at custom.coffee.tables@thewebsitename.com."

Website owners should work the keywords they want to rank for into their content naturally, without spamming. Keyword stuffing is a black-hat SEO technique, and pages should avoid it so as not to be penalized. However, avoiding keyword stuffing alone does not mean that a site complies with the guidelines.

In some cases, Googlebot may skip keyword-stuffed pages and crawl other pages of the site instead. Also, if the site offers value in other ways, Google may simply ignore the keyword stuffing.

Even without a manual action, keyword-stuffed pages may rank low or underperform because Google may ignore them.

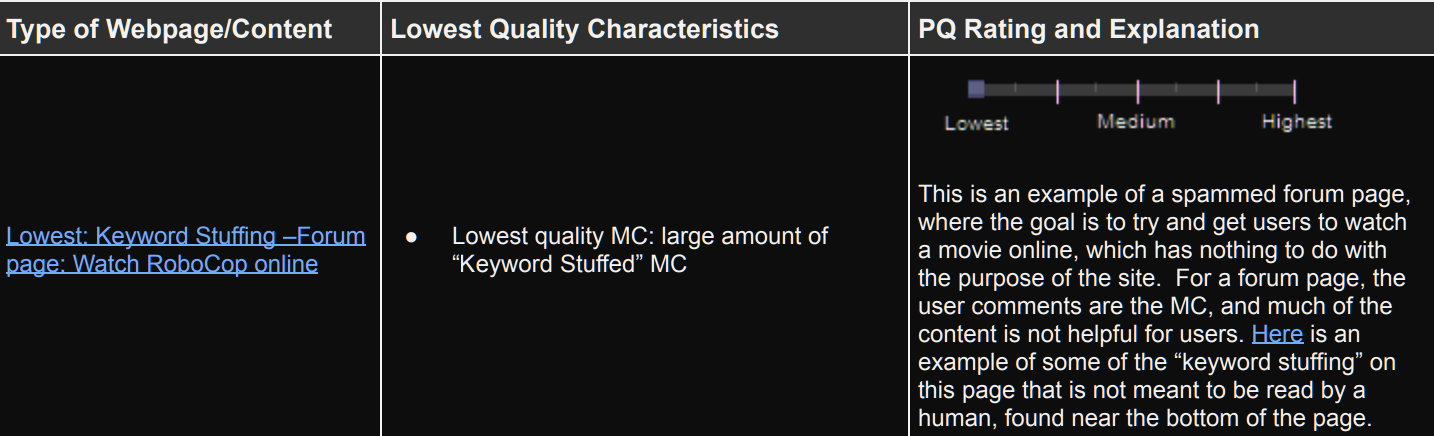

This type of spam is especially relevant to E-A-T. The guidelines include a "Keyword Stuffed" example:

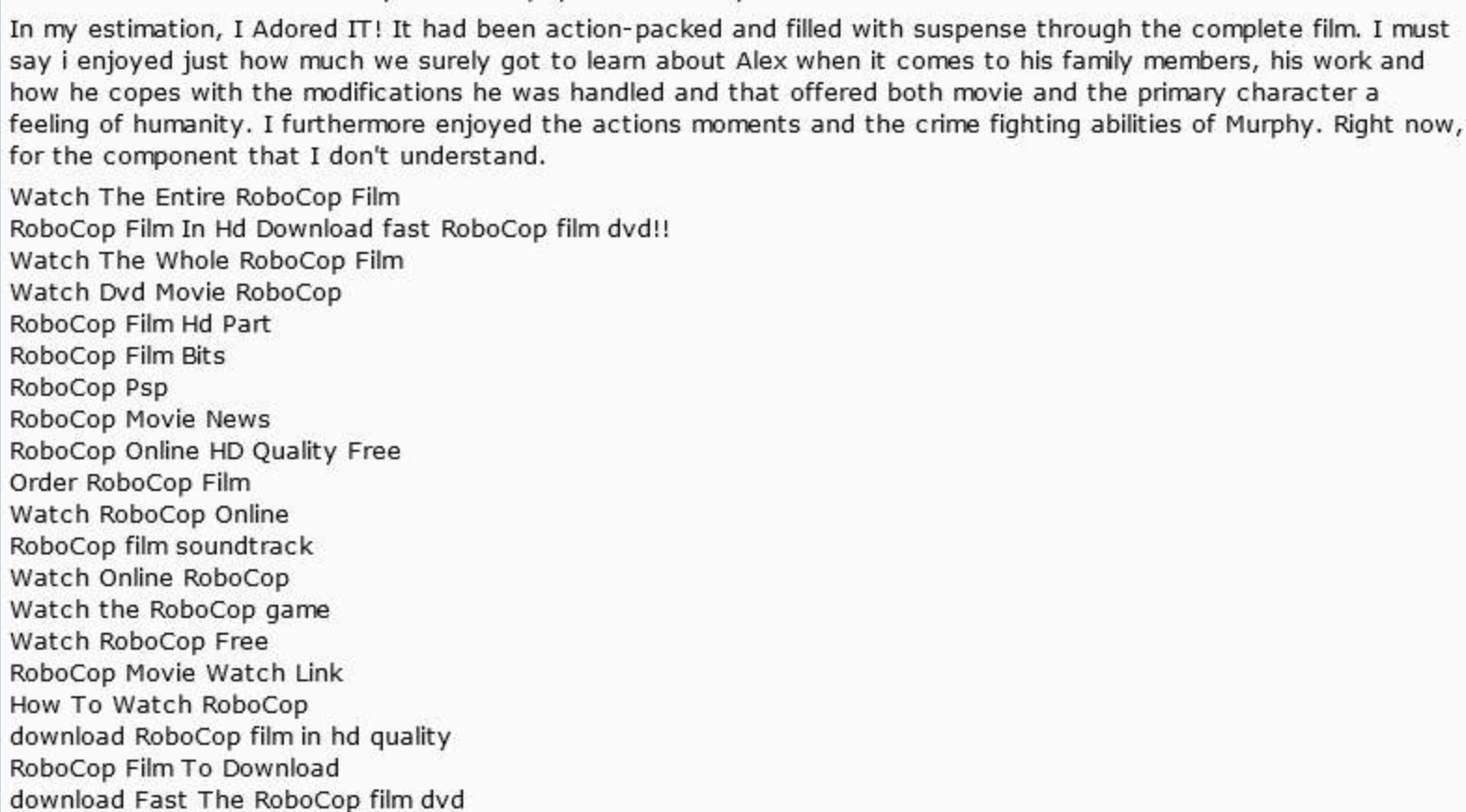

You can see an example of keyword stuffing below:

Keep in mind that there is no ideal keyword density.
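Since there is no ideal density, a number alone won't tell you whether a page is stuffed. Still, counting how often a phrase repeats can help you notice unnatural repetition, like in the coffee table example above, before your readers do. Here is a minimal sketch in JavaScript; `countPhrase` is a hypothetical helper, and nothing in it reflects a threshold used by Google.

```javascript
// Minimal sketch: count how many times a phrase occurs (as consecutive words)
// and the total word count. No "safe" ratio is implied; read the page and judge.
function countPhrase(text, phrase) {
  const tokenize = (t) => t.toLowerCase().match(/[a-z0-9']+/g) || [];
  const words = tokenize(text);
  const target = tokenize(phrase);
  let count = 0;
  if (target.length === 0) return { count, totalWords: words.length };
  for (let i = 0; i + target.length <= words.length; i++) {
    if (target.every((w, j) => words[i + j] === w)) count++;
  }
  return { count, totalWords: words.length };
}

const sample =
  "We sell custom coffee tables. Our coffee tables are handmade. " +
  "If you're thinking of buying a custom coffee table, please contact " +
  "our custom coffee table specialist.";
console.log(countPhrase(sample, "custom coffee")); // { count: 3, totalWords: 26 }
```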

When asked why some keyword-stuffed websites still rank at the top, John Mueller explained:

Usually we're just ranking them because of other factors - getting one thing wrong doesn't mean you'll never show up in search (lots of sites get things wrong, but they're still useful).

— 🍌 John 🍌 (@JohnMu) November 23, 2018

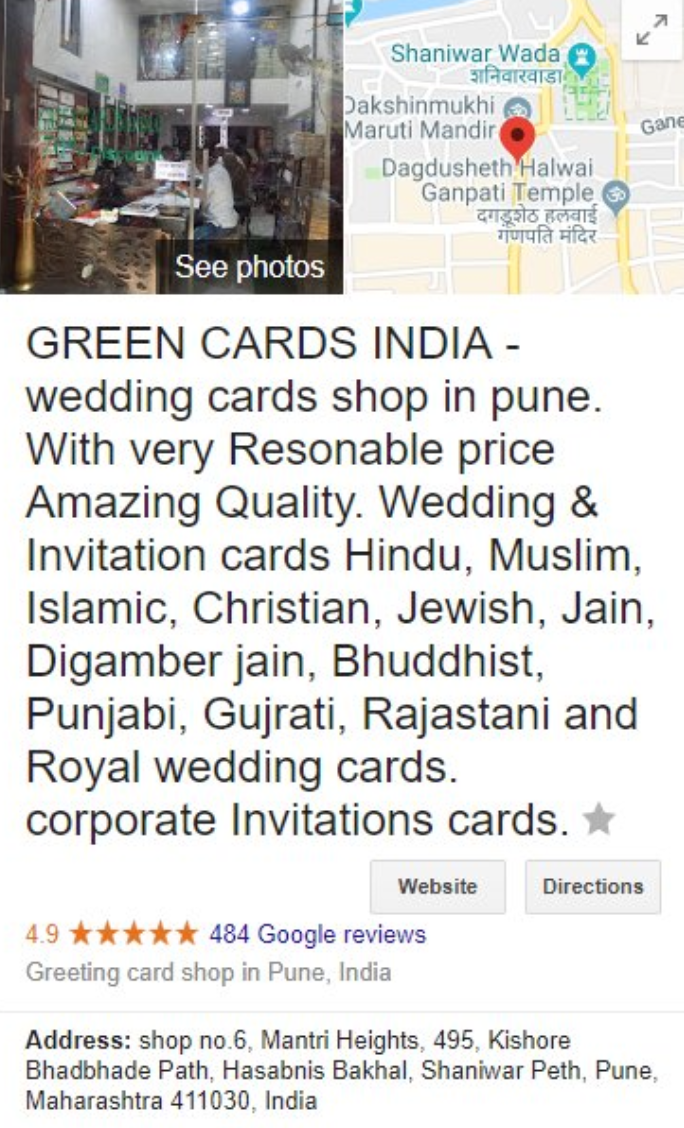

Avoid spammy behavior such as keyword stuffing in Google My Business (GMB) names. This manipulative practice is frequently seen in local SEO and can get your business listing suspended:

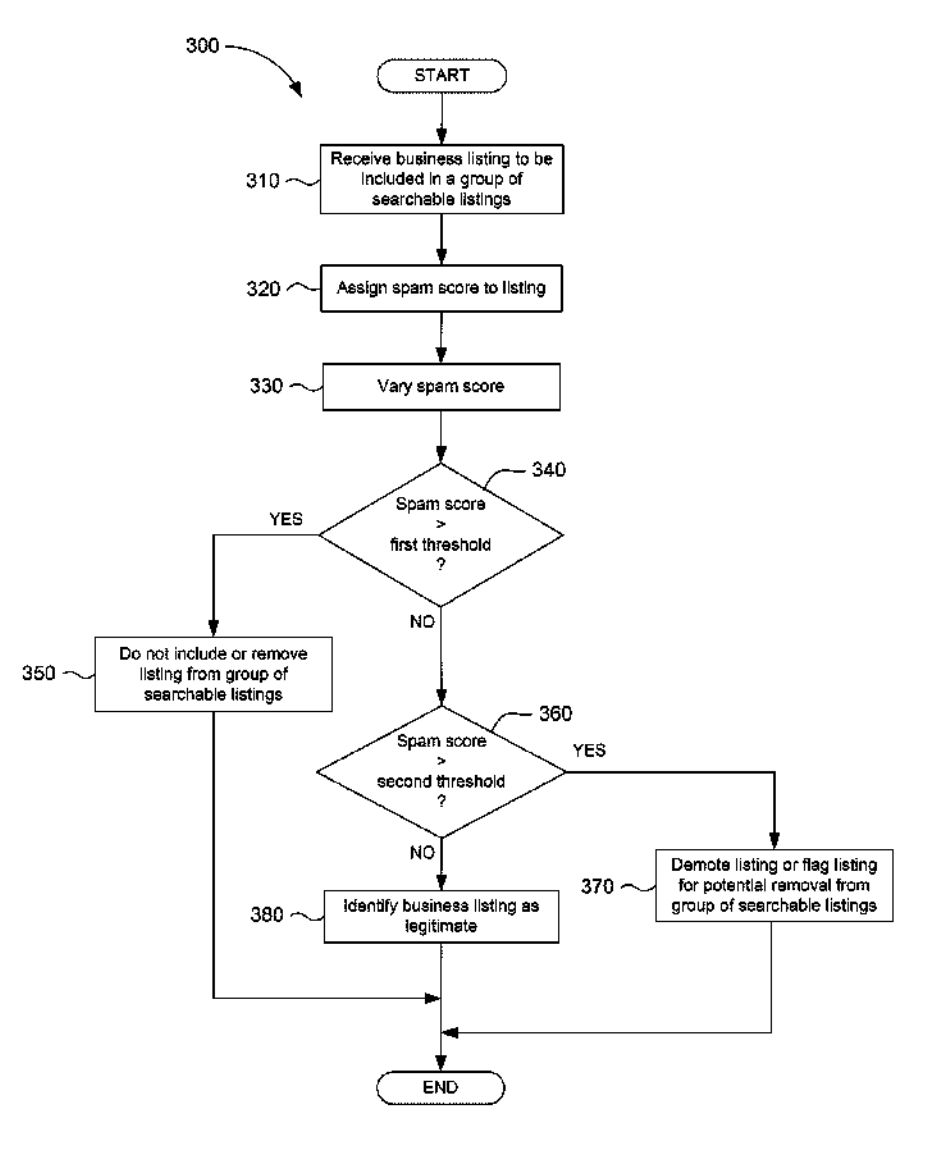

In a patent issued in 2016, Google explains how it detects fraudulent business profiles:

Comment Spam

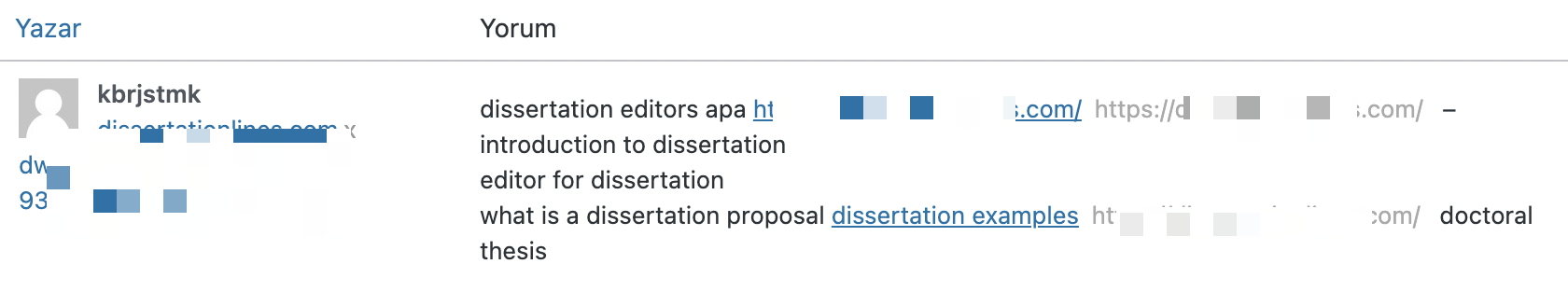

Some commenters are ill-intentioned. Google started publishing guidance about this type of spam in 2015. Such users can spam your content by adding malicious links to their usernames or comments, which is against Google's guidelines.

If spammy comments appear across your website's pages, Google will see them and may conclude that you encourage spammy content. If you have been approving all comments without checking them, I recommend going back to the relevant pages and reading each comment. Not only blogs but also websites with a guestbook should be careful about this.

If you allow spammy comments on your site without moderation and receive a manual action notification:

- Your pages might rank lower,

- You may send your loyal users to irrelevant or malicious websites,

- Pages with spammy comments may be removed from the index.

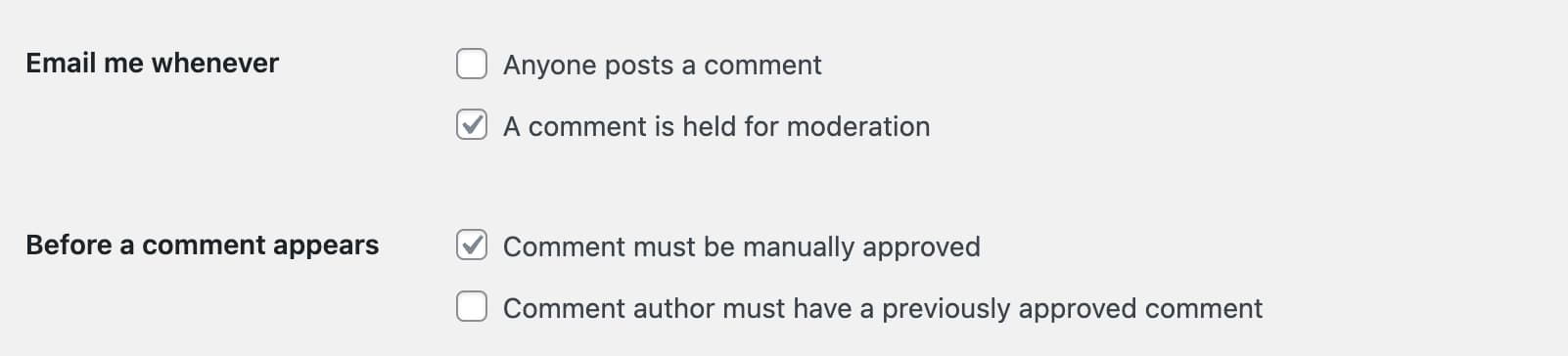

CMSs such as WordPress let you review comments and delete spammy ones before they are published on your website. If your site is being attacked by spam comments, you may consider disabling comments temporarily:

You can take additional safety measures for comments in WordPress, and you may also consider using reCAPTCHA:

You can add the nofollow attribute (or the more specific ugc attribute) to outbound links in comments, so that spammy links that go unnoticed no longer pass PageRank and spammers have less reason to comment. Systems such as WordPress do this by default:
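If your comment system does not do this for you, the idea can be sketched as follows: rewrite every `<a>` tag in a comment so that it carries `rel="ugc nofollow"`. This is a minimal regex-based sketch that assumes simple, already-sanitized comment HTML; for real-world input, an HTML sanitizer library is the safer choice. The function name `markCommentLinks` is hypothetical.

```javascript
// Minimal sketch: make sure every <a> tag in comment HTML has rel="ugc nofollow".
// Regex-based, so only suitable for simple, already-sanitized markup.
function markCommentLinks(html) {
  return html.replace(/<a\b([^>]*)>/gi, (match, attrs) => {
    // Drop any existing rel attribute (double- or single-quoted), then add ours.
    const cleaned = attrs.replace(/\s+rel\s*=\s*("[^"]*"|'[^']*')/gi, "");
    return `<a${cleaned} rel="ugc nofollow">`;
  });
}

console.log(markCommentLinks('Nice post! <a href="https://example.com">cheap pills</a>'));
// -> Nice post! <a href="https://example.com" rel="ugc nofollow">cheap pills</a>
```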

Forums may also benefit from blocking the crawling of pages such as user profiles with robots.txt. As software solutions improve, this type of spam is becoming less prevalent:

For further information regarding this type of manual action, go to this page: Ways to Prevent Comment Spam.

User-Generated Spam

If the necessary precautions are not taken, spammy pages generated by users can lead to a manual action against your site.

What is user-generated spam?

This is the type of spam that happens on forums, blogs, or basically any page on which users are allowed to leave a comment or a link.

Examples of user-generated content spam:

- Malicious sites generated within free hosting sites,

- Unmoderated forum messages,

- Signatures in forum profiles,

- Malicious links in Q&A sites.

Example:

To Prevent UGC Spam:

- If the forums on your site are very old, outdated, or not adding much value for your users, consider turning them off.

- If a certain type of spam keeps appearing, add related words such as "watch movie" to a blocklist.

- Strengthen your moderation team.

- Limit the number of links allowed in messages.

- Periodically review the messages of your most active users.

- You can also add the nofollow (or ugc) attribute to links shared by users.
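Two of these precautions, the blocklist and the link limit, are easy to automate. The sketch below is a minimal illustration in JavaScript: apart from "watch movie", the blocklisted phrases and the limit of 2 links are hypothetical values, and `reviewMessage` is a hypothetical name. Flagged messages are meant to go to a human moderator, not to be deleted automatically.

```javascript
// Minimal sketch of a pre-publication check for forum or comment messages.
// BLOCKLIST (except "watch movie") and MAX_LINKS are hypothetical values.
const BLOCKLIST = ["watch movie", "free download", "casino bonus"];
const MAX_LINKS = 2;

function reviewMessage(text) {
  const lower = text.toLowerCase();
  const reasons = [];
  const hits = BLOCKLIST.filter((phrase) => lower.includes(phrase));
  if (hits.length > 0) reasons.push(`blocklisted: ${hits.join(", ")}`);
  const links = (text.match(/https?:\/\/\S+/gi) || []).length;
  if (links > MAX_LINKS) reasons.push(`too many links: ${links}`);
  // Any reason means the message waits for a human moderator.
  return { publish: reasons.length === 0, reasons };
}

console.log(reviewMessage("Watch movie online free at http://a.example http://b.example http://c.example"));
```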

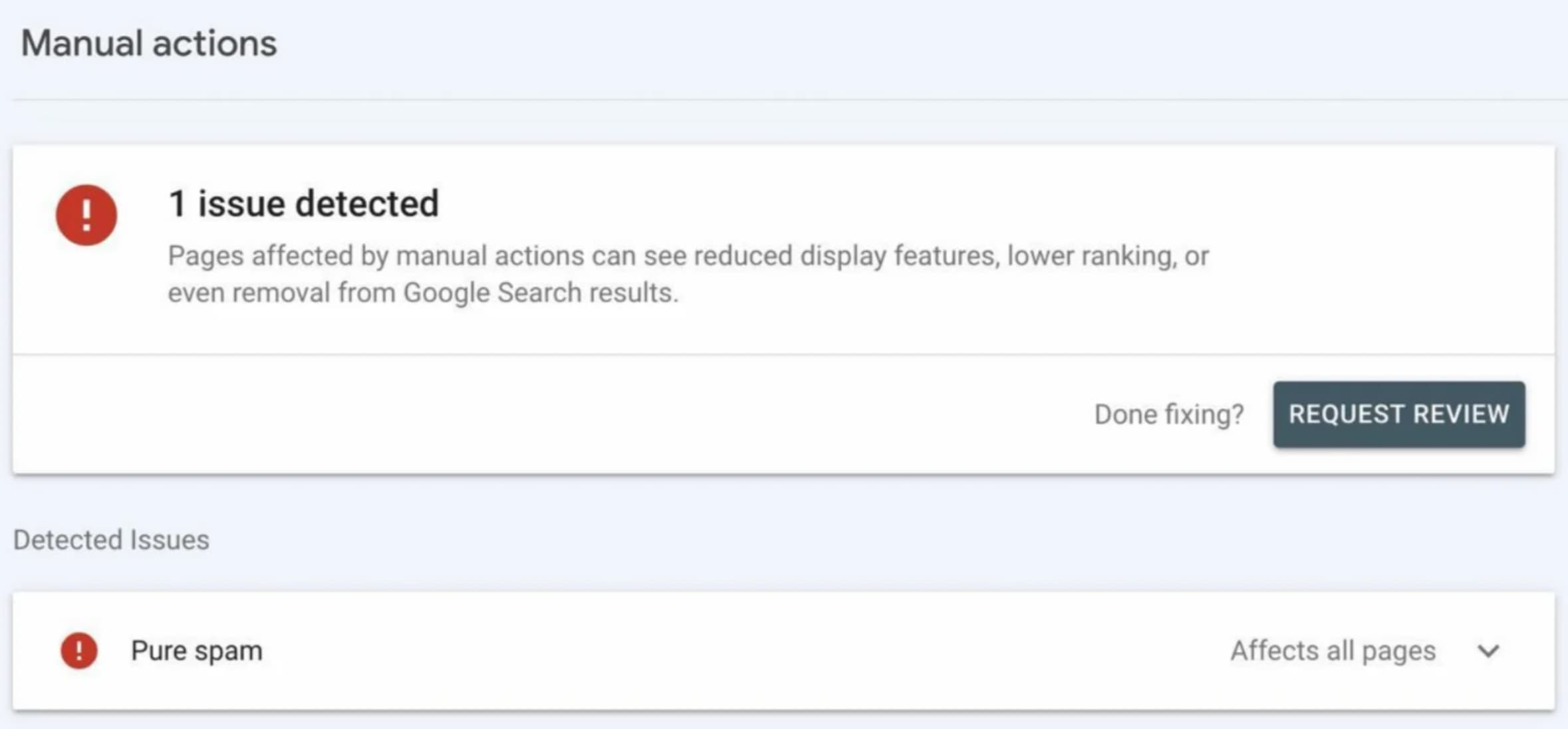

Pure Spam Notification on Google Search Console:

You should identify the spam types your users could generate and take precautions. Websites that fail to do so may be given a manual action.

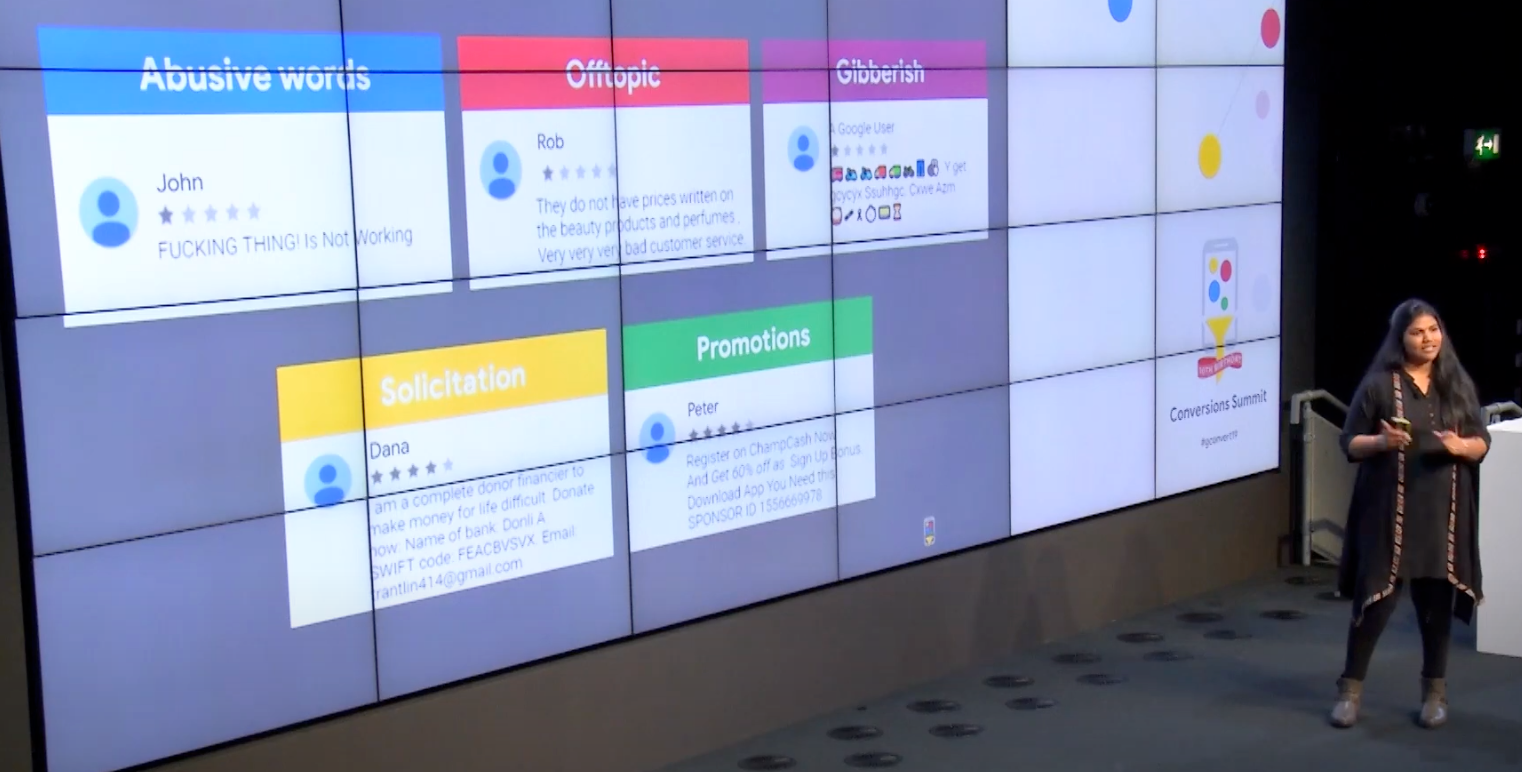

Google has published a study on how user-generated content (UGC) spam affects the user experience. The study was conducted through Google Play with 3,300 participants from India, South Korea, and the USA, 1,100 from each country. Without going into detail, the study covered these 5 UGC spam types:

- Gibberish: "asdsad jksjfs sdhd"

- Irrelevant: a review of a movie app posted on a gaming app

- Social media: "Follow me on social media @sss"

- Abusive language: e.g. "idiot"

- Promotions: "Instant cash discount, register now"

You can watch the video here:

Reducing this type of spam from 5% to 0% takes far more effort than reducing it from 10% to 5%. I am sure Google will keep investing considerable effort in fighting UGC spam.

Automatically Generated Content

Automatically generated content, as the name suggests, is content generated by programs or systems without adding any value.

These include but are not limited to:

- Text translated by an automated tool without review, resulting in gibberish,

- Text that contains keywords but makes no sense in context,

- Text generated through automated processes such as Markov chains,

- Meaningless content generated by substituting synonyms,

- Pages generated by pulling data from RSS feeds,

- Content generated by combining content from different websites without adding any value or moderation.

Google's examples of automatically generated content are not exhaustive, and a manual action may also be taken for behavior that is not listed. Note that Google has not updated the documentation page for this manual action type for many years. Sending automated queries to Google is a separate violation as well.

Sneaky Redirects

A sneaky redirect sends users to a different page from the one they saw in the search results. The underlying aim is to show different content to users and to Googlebot. The first announcement regarding such behavior was made on April 30, 2014.

For example, if a search result shows a page about "structured data" but users who click it land on a page about "x movie", the webmaster has intentionally sent users to different content.

Redirecting mobile users to different pages than desktop users can cause the same problem. To avoid this type of manual action, make sure that every redirect (JavaScript or 301) takes users to the content they expect.

Client-side redirects like the one below, which sends every visitor to another URL with JavaScript, are worth looking for when you check your site for sneaky redirects:

```html
<script type="text/javascript">location.replace("https://domain.com");</script>
```

In sneaky redirects, not only the visitor's device but also the source the user comes from (a search engine or a social media platform, for example) matters: such redirects detect the source first and then redirect only certain visitors. Note that, in the past, unreliable advertising companies have caused such problems for publisher websites.

Apart from being given manual action, you might lose your users' trust in your site because of this.
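To audit your own templates and third-party tags, you can scan page source for common client-side redirect patterns, including checks on the referrer or user agent that conditional redirects rely on. This is a minimal sketch with an illustrative, non-exhaustive pattern list; `findRedirectSignals` is a hypothetical name, and server-side (301/302) redirects need a separate check of HTTP responses. A match means "review this", not "this is a violation".

```javascript
// Minimal sketch: flag common client-side redirect patterns in HTML source.
// The pattern list is illustrative, not exhaustive.
const REDIRECT_PATTERNS = [
  { name: "location.replace", re: /location\.replace\s*\(/i },
  { name: "location assignment", re: /location(\.href)?\s*=(?!=)/i },
  { name: "meta refresh", re: /<meta[^>]+http-equiv\s*=\s*["']?refresh/i },
  // Conditions on the referrer or user agent are typical of sneaky redirects.
  { name: "referrer check", re: /document\.referrer/i },
  { name: "user agent check", re: /navigator\.userAgent/i },
];

function findRedirectSignals(html) {
  return REDIRECT_PATTERNS.filter(({ re }) => re.test(html)).map(({ name }) => name);
}

const page = 'if (document.referrer.includes("google")) { window.location.href = "https://x.example"; }';
console.log(findRedirectSignals(page)); // [ 'location assignment', 'referrer check' ]
```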

Affiliate Programs

Affiliate links are a natural part of the web. However, website owners in the affiliate marketing business should be more careful.

If affiliate websites create pages without adding value for users, Google may consider those pages low-value. Poor content with no added value in the SERPs leads to a negative user experience.

Examples:

- Pages with affiliate product links where the product descriptions and reviews are copied directly from the merchant without any added value,

- Affiliate websites with little original content.

I recommend doing your best to make your site more useful than the original merchant's website. Instead of copying content from the merchant, you can produce original content with high added value to make more sales. Click here for further information.

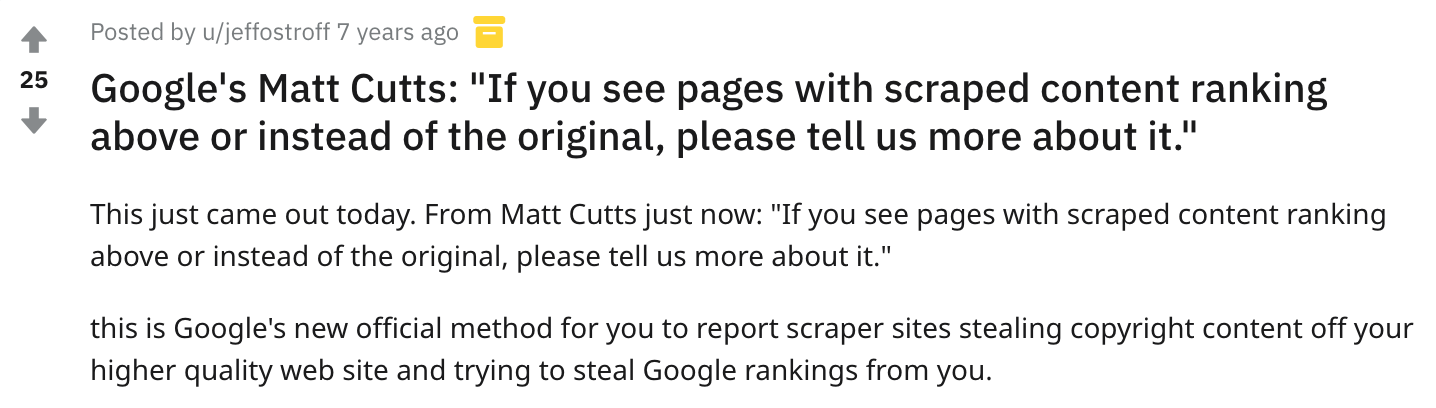

Scraped Content

Websites that consist mainly of content copied from other sites, with little or no original content and no added value, may be given a manual action for "scraped content".

Content copied directly, even from high-quality sources such as Wikipedia, is still low-value. Scraping can also cause copyright problems.

Examples:

- Sites that copy content through bots or manually without adding any value,

- Sites that copy content from different sites and modify it slightly, for example by substituting synonyms,

- Sites that embed content such as images or videos from other sites without adding anything of their own.

Google's Matt Cutts commented on this on Reddit 7 years ago:

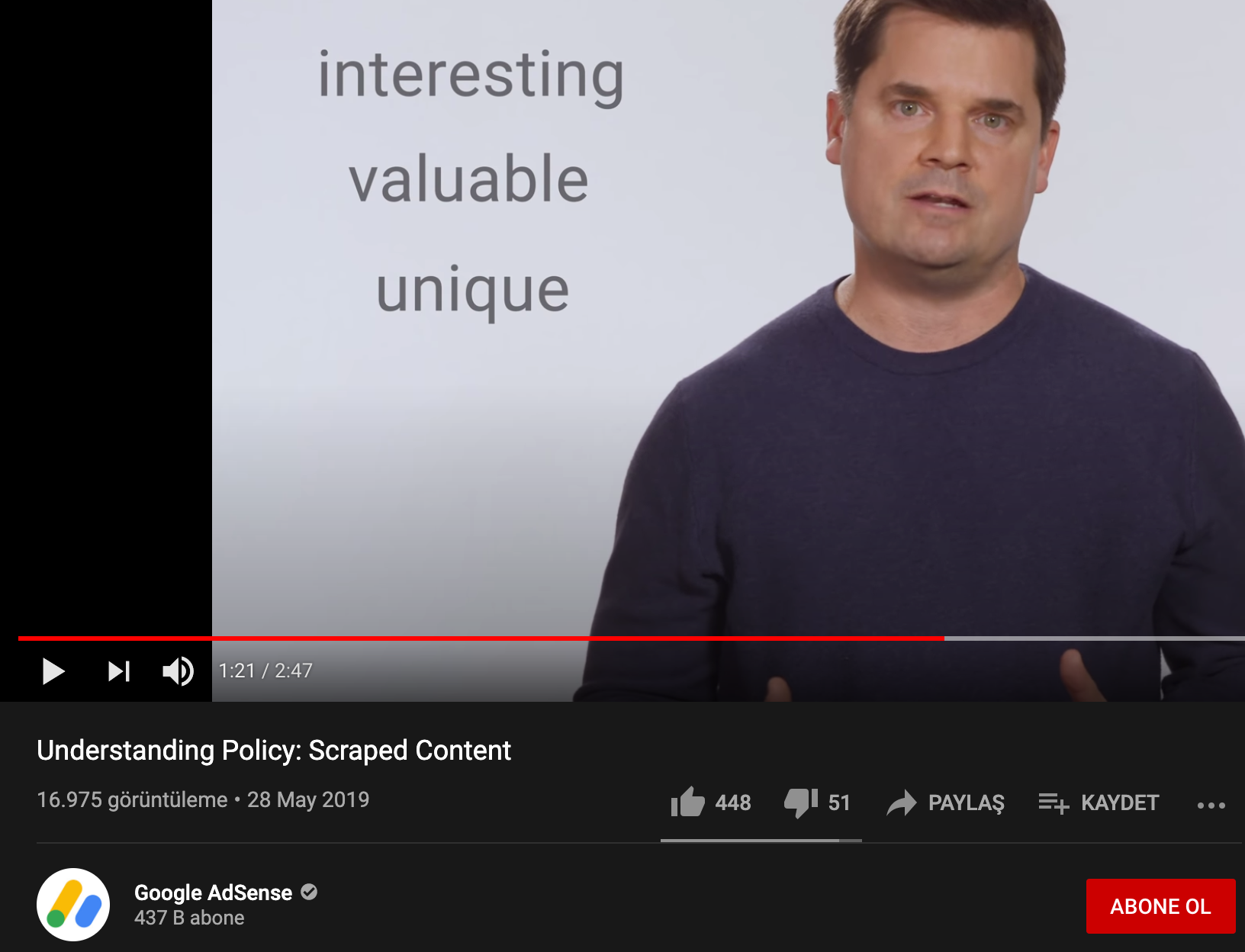

This also violates Google AdSense policy (Valuable inventory: Scraped content):

Doorway Pages

Doorway pages can be best defined as poor intermediate pages created to rank highly for specific search queries, which violates quality guidelines.

The New York Times has published news about poor search results for 10 years. The doorway pages manual action was first used on March 16, 2015.

Doorway pages are manipulative pages that target only certain queries and are generated, for instance, with district names, as in "Chelsea SEO Agency" or "Westminster SEO Agency". In practice, all of these pages lead users to the same destination. The main problem with doorway pages is that they add no value for users, which usually happens because the same page is generated over and over with slightly changed keywords.

That sounds like doorway pages, not something I'd recommend.

— 🍌 John 🍌 (@JohnMu) December 10, 2019

Doorway pages can appear as multiple pages on a single site or be spread across different domains. To judge them, it is important to understand what users are looking for and what content is presented to them. Click here for further details.
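One way to spot this pattern on your own site is to mask the keyword that changes between pages (the district name, for example) and measure how much text the pages still share. Below is a minimal sketch using word 3-gram "shingles" and Jaccard similarity, a common near-duplicate technique; it is not necessarily what Google uses, and the function names and shingle size are my own assumptions.

```javascript
// Minimal sketch: Jaccard similarity of word 3-grams after masking the
// location name, to spot near-identical doorway-style pages.
function shingles(text, size = 3) {
  const words = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  const set = new Set();
  for (let i = 0; i + size <= words.length; i++) {
    set.add(words.slice(i, i + size).join(" "));
  }
  return set;
}

function jaccard(a, b) {
  let shared = 0;
  for (const s of a) if (b.has(s)) shared++;
  const union = a.size + b.size - shared;
  return union === 0 ? 0 : shared / union;
}

// Replace the location name with a placeholder before comparing.
function maskedSimilarity(textA, placeA, textB, placeB) {
  const mask = (text, place) => text.split(place).join("PLACE");
  return jaccard(shingles(mask(textA, placeA)), shingles(mask(textB, placeB)));
}

const chelsea = "Looking for the best Chelsea SEO Agency? Our Chelsea SEO Agency grows your traffic.";
const westminster = "Looking for the best Westminster SEO Agency? Our Westminster SEO Agency grows your traffic.";
console.log(maskedSimilarity(chelsea, "Chelsea", westminster, "Westminster")); // 1
```

A score close to 1 across many location pages suggests they differ only by the swapped keyword.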

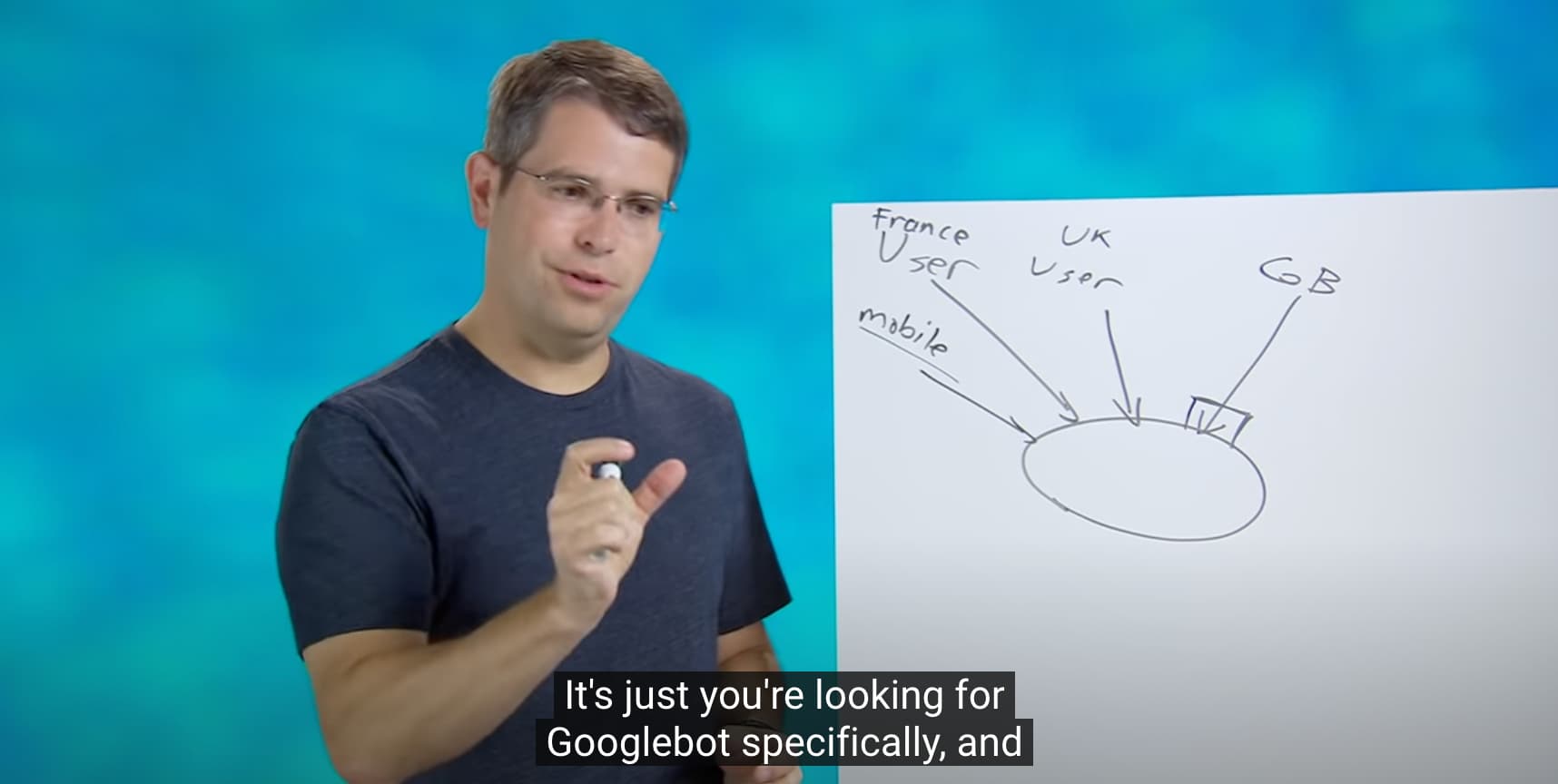

Cloaking / Hidden Texts and Links

Cloaking is the act of presenting different content to the users and search engines, which violates Google's guidelines.

Google publishes these two violations separately but I would like to explain them together. Examples of cloaking:

- Serving a page of HTML text to Googlebot while users see only images and videos,

- Inserting keywords only when the user agent is detected as Googlebot, so that human visitors never see the keywords used for ranking,

- Showing users the text they expect while showing Googlebot different content stuffed with keywords in the HTML,

- Intentionally hiding text behind an image,

- Using white text on a white background so that visitors won't notice it,

- Setting the font size to 0.
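The last two techniques leave visible traces in the markup, so you can scan your own pages for them. This minimal sketch only looks at double-quoted inline `style` attributes; text hidden through external CSS, and legitimately hidden interface elements such as menus or tabs, still need a human look. `findHiddenTextSignals` is a hypothetical name.

```javascript
// Minimal sketch: flag inline styles that commonly hide text
// (font-size 0, or identical text and background colors).
// Only checks double-quoted style="" attributes.
function findHiddenTextSignals(html) {
  const signals = [];
  const styleRe = /style\s*=\s*"([^"]*)"/gi;
  let m;
  while ((m = styleRe.exec(html)) !== null) {
    // Normalize: lowercase and remove all whitespace.
    const style = m[1].toLowerCase().replace(/\s+/g, "");
    if (/font-size:0(px|em|rem|%)?(;|$)/.test(style)) signals.push("font-size 0");
    // "(?:^|;)color:" avoids matching the "color:" inside "background-color:".
    const color = style.match(/(?:^|;)color:([^;]+)/);
    const bg = style.match(/background(?:-color)?:([^;]+)/);
    if (color && bg && color[1] === bg[1]) {
      signals.push(`same text and background color: ${color[1]}`);
    }
  }
  return signals;
}

console.log(findHiddenTextSignals('<p style="color:#fff; background-color:#fff">cheap loans</p>'));
```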

You can watch the video prepared by Matt Cutts here.

If you serve content based on IP address (for example, redirecting visitors by country), do not give Googlebot a different version. Since Googlebot crawls from IP addresses based in the USA, this is not a problem as long as Googlebot receives the same content as users in the USA. Hidden keywords may also harm your site.

Not every difference between what users and Googlebot see is a violation. For example, JavaScript-based sites sometimes provide extra HTML text describing their content, which may not be considered cloaking. To judge whether something is a cloaking violation, look for manipulative intent.
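A rough self-check is to fetch a page once with a browser user agent and once with a Googlebot user agent, then list the words served only to the "bot". Below is a minimal sketch assuming Node.js 18+ for the built-in `fetch`; `fetchAs` and `wordsOnlyIn` are hypothetical helper names. Note the limitation: setups that identify Googlebot by IP address will not be fooled by a spoofed user agent, so the URL Inspection tool in Search Console remains the more reliable way to see what Google actually receives.

```javascript
// Minimal sketch, assuming Node.js 18+ (global fetch).
// Fetch a URL with a given User-Agent and return its rough visible text.
async function fetchAs(url, userAgent) {
  const res = await fetch(url, { headers: { "User-Agent": userAgent } });
  const html = await res.text();
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // drop scripts
    .replace(/<style[\s\S]*?<\/style>/gi, " ") // drop styles
    .replace(/<[^>]+>/g, " "); // drop remaining tags
}

// Words that appear in `a` but never in `b` (case-insensitive).
function wordsOnlyIn(a, b) {
  const tokenize = (t) => new Set(t.toLowerCase().match(/[a-z0-9]+/g) || []);
  const inB = tokenize(b);
  return [...tokenize(a)].filter((w) => !inB.has(w));
}

// Usage (requires network access, e.g. inside an async function):
// const bot = await fetchAs("https://www.example.com/",
//   "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)");
// const user = await fetchAs("https://www.example.com/", "Mozilla/5.0");
// console.log(wordsOnlyIn(bot, user)); // words served only to the "Googlebot" request
```

Dynamic elements such as dates or rotating ads will also show up as differences, so read the output rather than treating it as a verdict.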

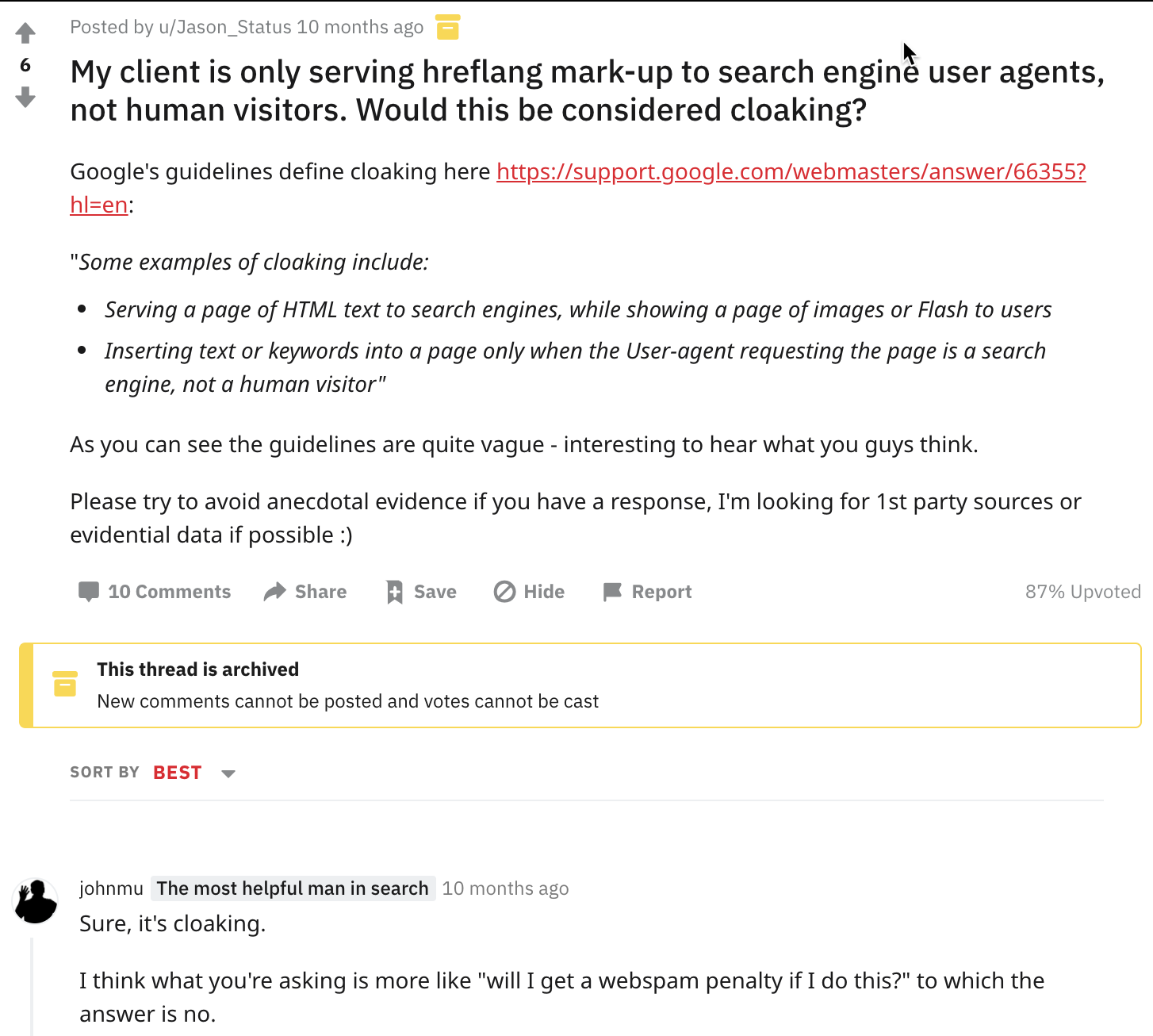

When asked on Reddit whether using hreflang counts as cloaking, Mueller answered "no":

Be careful with A/B tests as well. Google emphasizes that testing is not a problem as long as you don't treat Googlebot differently, and that A/B tests should run only for a limited time:

Websites that offer subscription-based access to some of their content can use the relevant (paywalled content) structured data so that their setup is not mistaken for cloaking.
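For reference, Google's documentation on subscription and paywalled content describes marking the article with `isAccessibleForFree` and pointing `hasPart` at the paywalled section through a CSS selector. Below is a minimal sketch that builds such a JSON-LD block; the headline and the `.paywall` class name are placeholders, `paywallJsonLd` is a hypothetical helper, and you should check the current documentation for the full set of properties.

```javascript
// Minimal sketch: JSON-LD for an article whose body is partly paywalled.
// ".paywall" is a hypothetical class name wrapping the subscriber-only part.
function paywallJsonLd(headline, paywallSelector) {
  const data = {
    "@context": "https://schema.org",
    "@type": "NewsArticle",
    headline,
    isAccessibleForFree: false,
    hasPart: {
      "@type": "WebPageElement",
      isAccessibleForFree: false,
      cssSelector: paywallSelector,
    },
  };
  // Escape "<" so values such as "</script>" cannot close the tag early.
  const json = JSON.stringify(data, null, 2).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">\n${json}\n</script>`;
}

console.log(paywallJsonLd("Example headline", ".paywall"));
```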

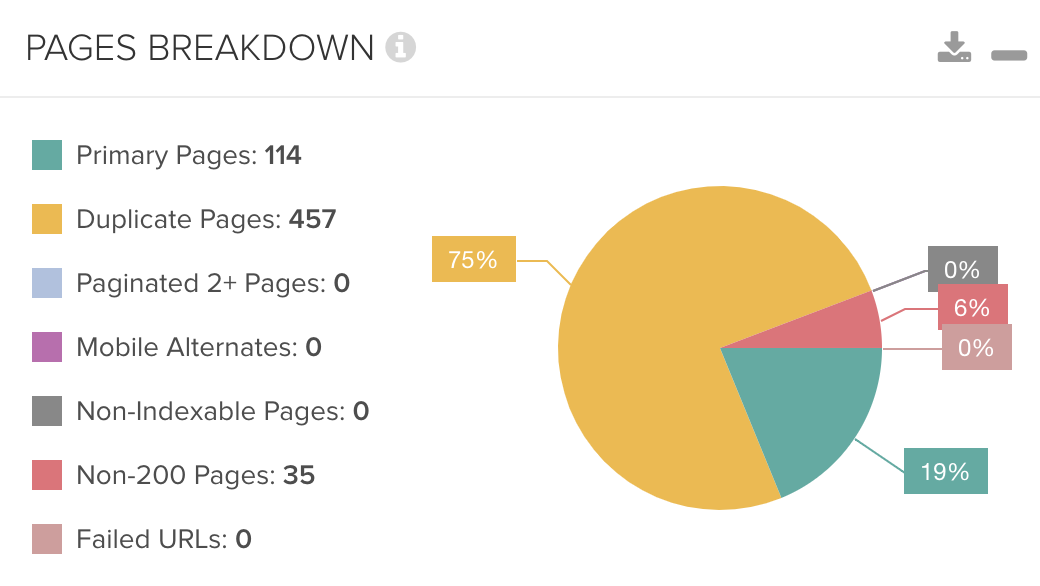

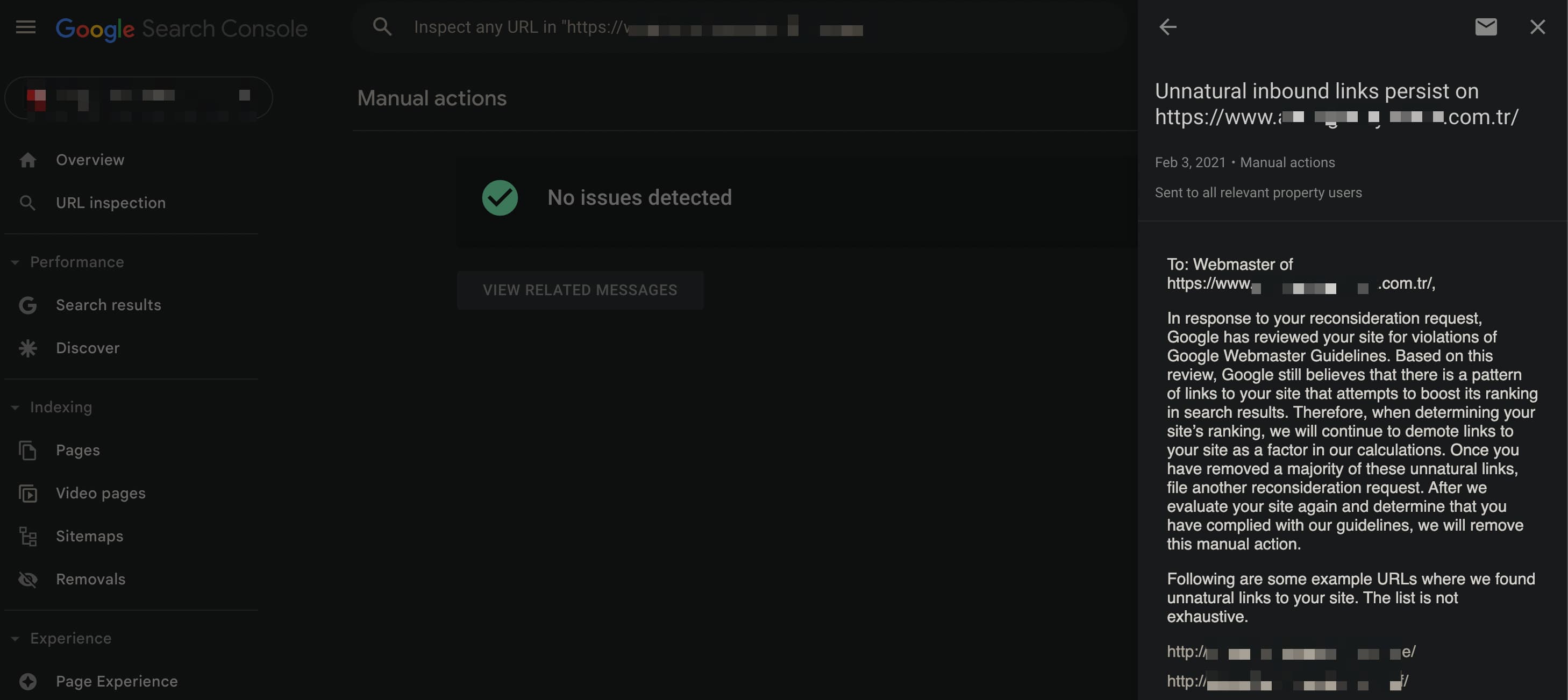

Backlink Violations

Paid backlinks and the link schemes violating the guidelines might cause your website to be penalized.

Google considers paying for advertising on websites perfectly normal. However, it recommends marking such paid links with rel="sponsored" (rel="nofollow" is also acceptable).

Examples of link schemes that may be considered violation:

- Exchanging links,

- Paid links without the nofollow or sponsored attribute,

- Aggressive use of keyword-rich anchor text,

- Failing to disclose that links are sponsored,

- Backlinks generated by programs designed to create links automatically,

- Links from low-quality web directories,

- Unnatural links in footers.
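To get a feel for how aggressive your anchor text is, you can tally the anchors in a backlink export. Below is a minimal sketch, assuming an array of anchor strings taken, for example, from the "Top linking text" data in Search Console or from a third-party tool; `anchorShares` is a hypothetical name, and Google does not publish any "safe" percentage.

```javascript
// Minimal sketch: share of each anchor text among all backlinks,
// sorted from most to least frequent. Shares are fractions of 1.
function anchorShares(anchors) {
  const counts = new Map();
  for (const raw of anchors) {
    const a = raw.trim().toLowerCase();
    counts.set(a, (counts.get(a) || 0) + 1);
  }
  return [...counts.entries()]
    .map(([anchor, n]) => ({ anchor, share: n / anchors.length }))
    .sort((x, y) => y.share - x.share);
}

const anchors = ["cheap flights", "Cheap Flights", "cheap flights", "example.com", "click here"];
console.log(anchorShares(anchors));
```

In this made-up list, a commercial keyword accounts for 60% of all anchors, which is the kind of pattern worth reviewing; brand names, URLs, and generic anchors usually make up a natural profile.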

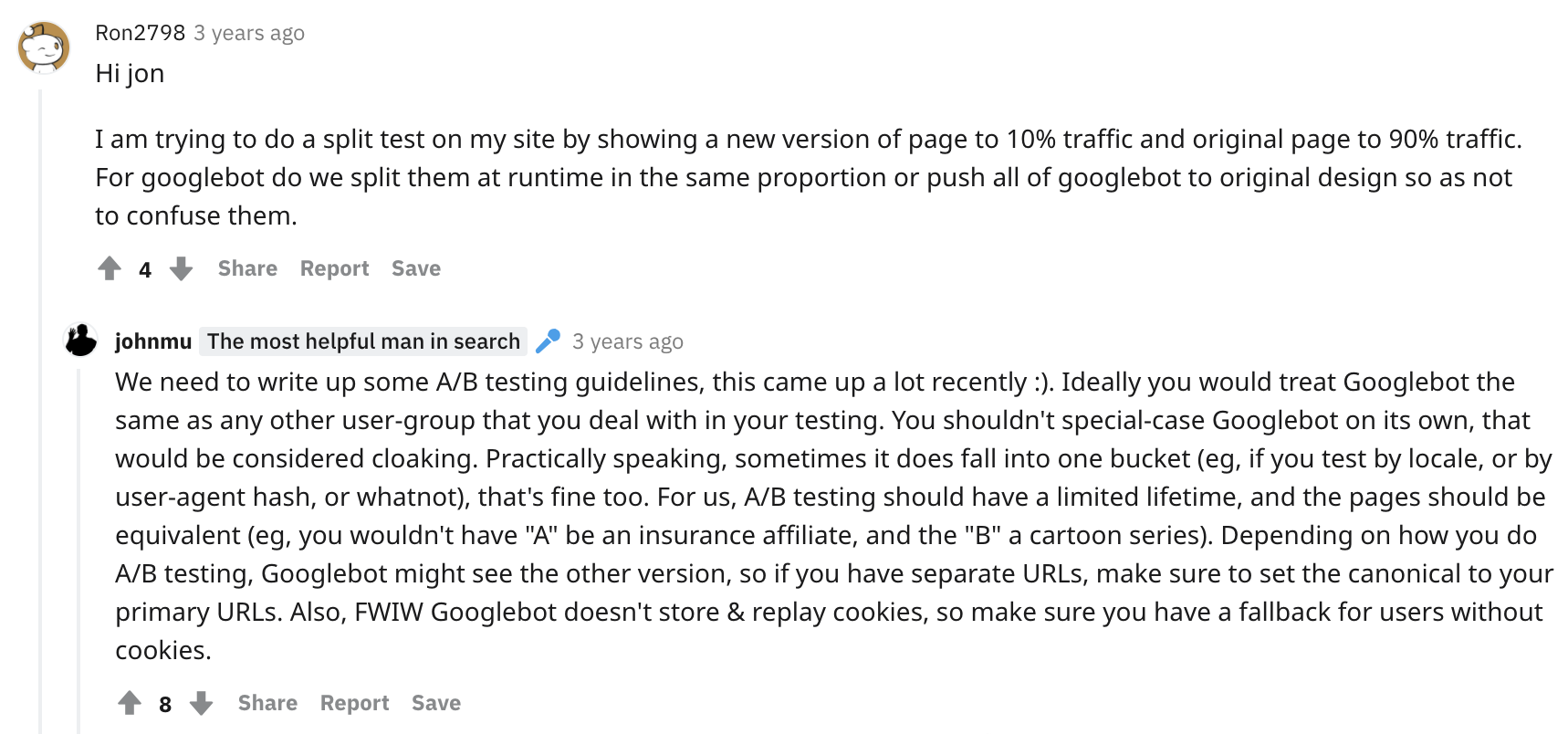

SEO tools with strong backlink data, such as CognitiveSEO, can help you distinguish natural links from unnatural ones:

Such tools show where your backlinks come from, and by reviewing the sites they flag as "unnatural links", you can build a healthier link profile:

Consider adding nofollow to links in content such as press releases:

Google may consider unnatural links, such as paid links without the nofollow attribute, a violation. In some cases, it takes manual action; in others, its algorithms simply do not use the signals from these links in ranking.

Google explains that random backlinks to your site may be ignored:

Random links collected over the years aren't necessarily harmful, we've seen them for a long time too and can ignore all of those weird pieces of web-graffiti from long ago. Disavow links that were really paid for (or otherwise actively unnaturally placed), don't fret the cruft.

— 🍌 John 🍌 (@JohnMu) January 25, 2019

So, what does it mean to get natural backlinks? To explain it, I would like to refer to Google's definition:

There are two related manual action types: unnatural links to your site and unnatural links from your site. In short, spammy or low-quality links both to and from your site may lead to a manual action.

An explanation for unnatural links to your site:

An explanation for unnatural links from your site:

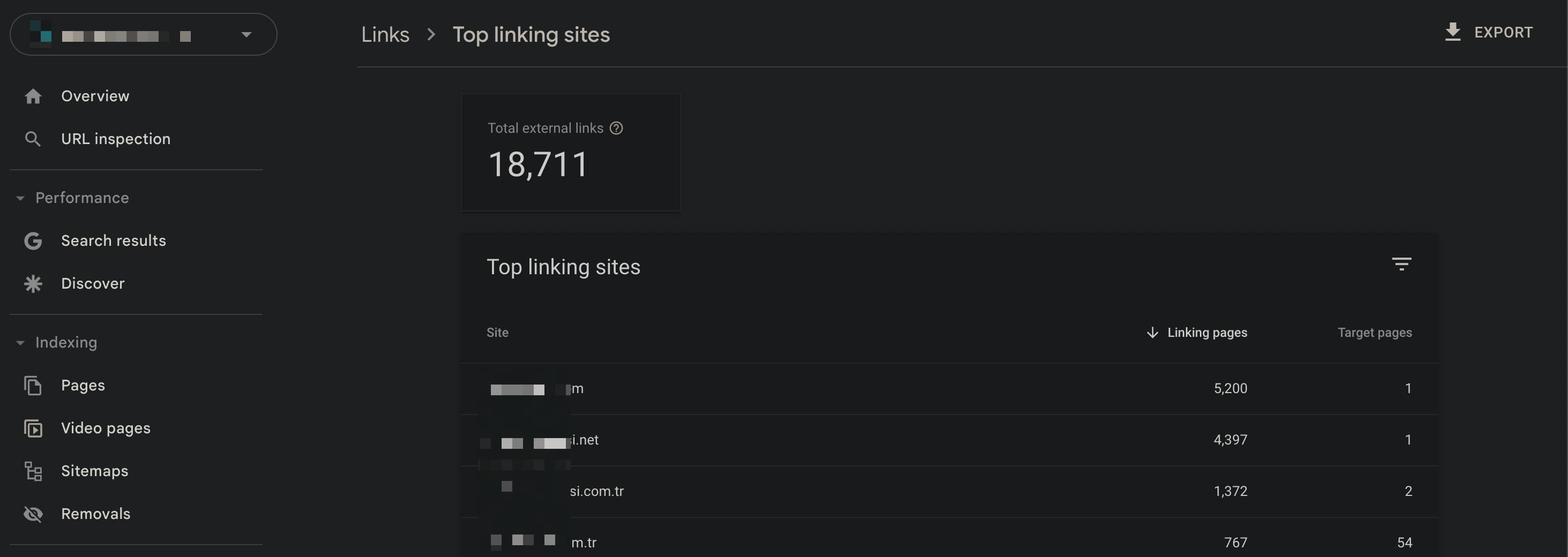

You can see the backlinks to your site in Search Console: open the Links report and click "Top linking sites" to see all the data:

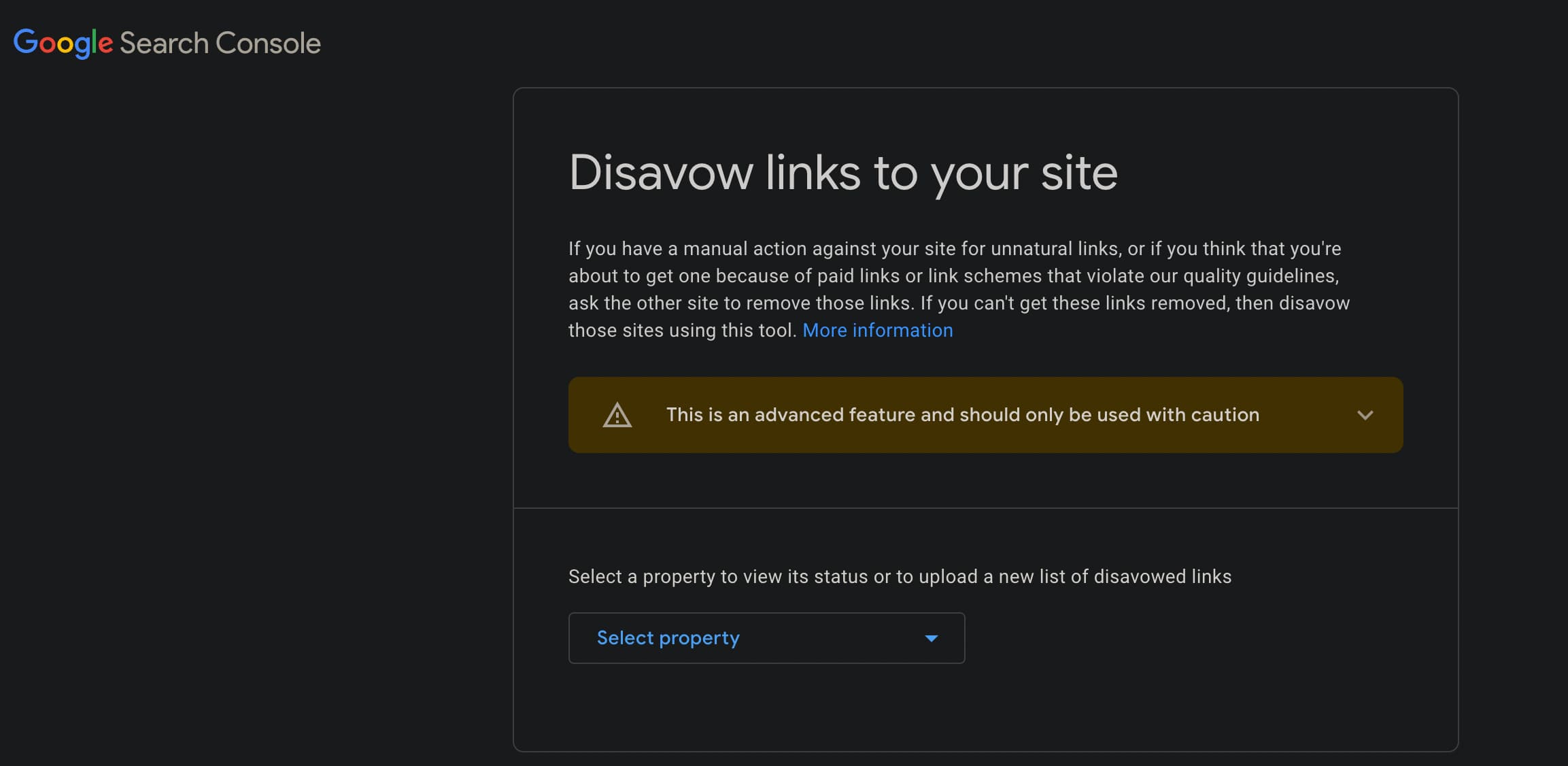

You can use Google's disavow links tool to disavow unnatural backlinks to your site. If unnatural backlinks are the reason for the manual action against your site, I recommend explaining the details in your reconsideration request:

Disavowed backlinks will still appear in Search Console; Google applies the disavow only after it recrawls the relevant pages.
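The disavow file itself is a plain text file with one entry per line: a full URL, or `domain:` followed by a domain to disavow every link from it, with `#` marking comment lines. Below is a minimal sketch that assembles such a file from lists you have already reviewed by hand; `buildDisavowFile` is a hypothetical helper, and the domains and URLs are placeholders.

```javascript
// Minimal sketch: build the text of a disavow file.
// Only include links you have reviewed and are confident are unnatural.
function buildDisavowFile({ domains = [], urls = [], note = "" }) {
  const lines = [];
  if (note) lines.push(`# ${note}`);
  // De-duplicate domains after trimming and lowercasing them.
  for (const d of new Set(domains.map((x) => x.trim().toLowerCase()))) {
    lines.push(`domain:${d}`);
  }
  for (const u of new Set(urls.map((x) => x.trim()))) lines.push(u);
  return lines.join("\n") + "\n";
}

console.log(
  buildDisavowFile({
    note: "Paid links found during link audit",
    domains: ["spammy-directory.example", "SPAMMY-directory.example"],
    urls: ["https://blog.example/paid-post.html"],
  })
);
```

Disavowing a whole domain is the blunter option; use individual URLs when only some links from a site are problematic.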

Structured Data Spam

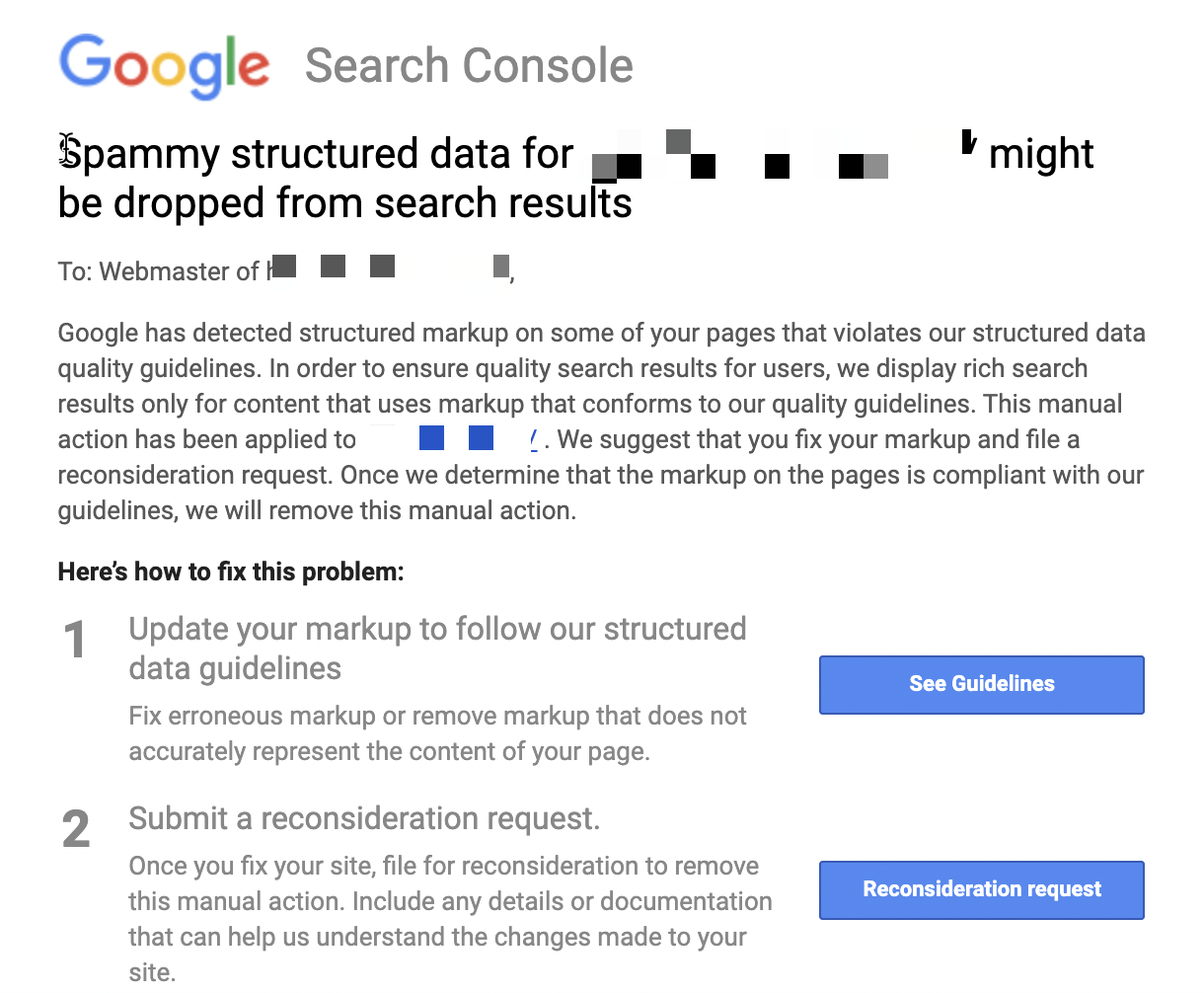

Structured data on your site can lead to a manual action, whether or not you violated the guidelines knowingly. Such a manual action removes only your rich results from search. For instance, pages that use Product markup may no longer be shown in the SERPs with review stars or prices. If you violate the guidelines, you are likely to receive a manual action e-mail like the one below:

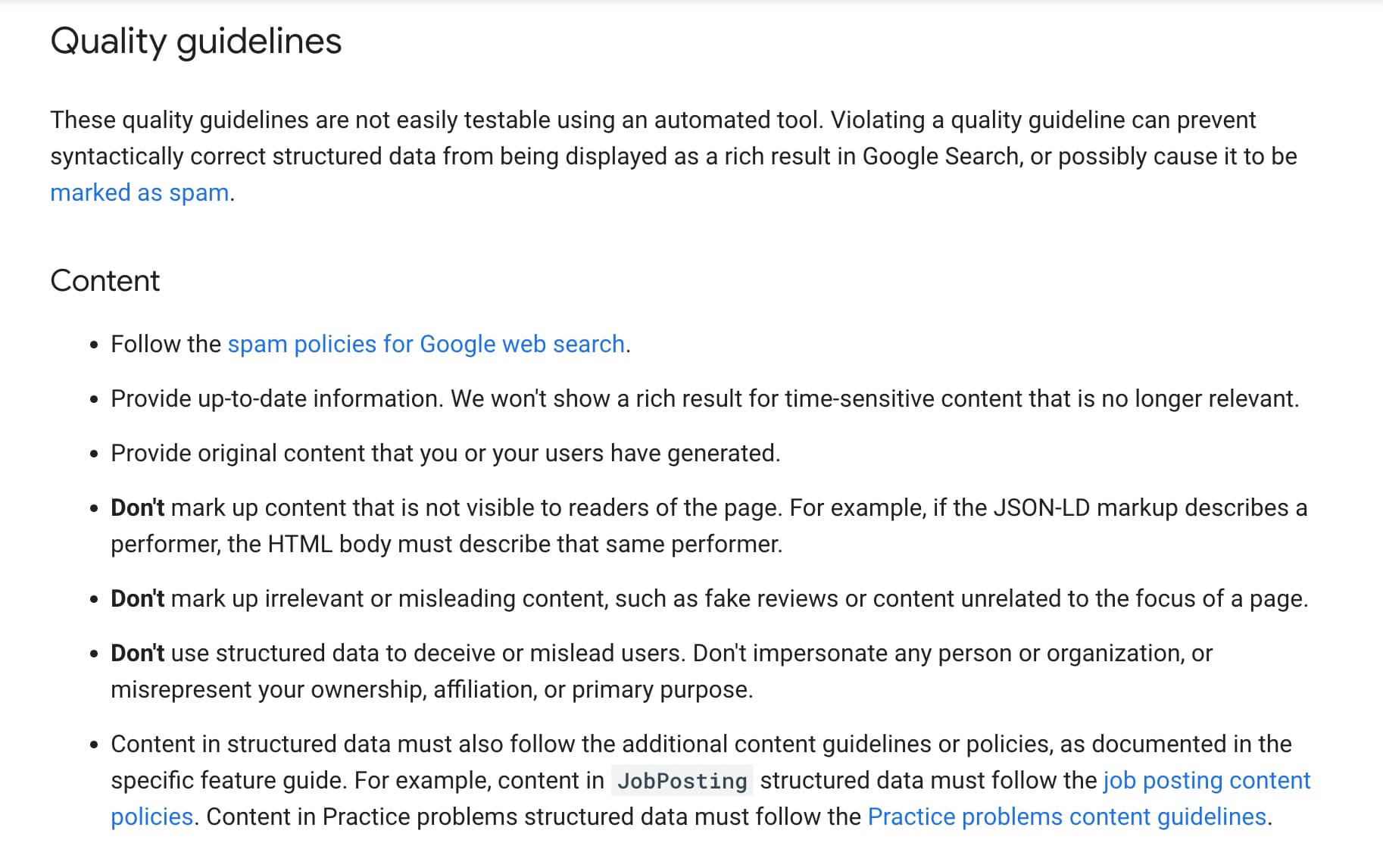

Read the structured data guidelines and check your markup against both the general guidelines and the specific guidelines for each structured data type. For example, the specific guidelines for fact check markup are as follows:

If you receive a manual action for spammy structured data, all you need to do is fix the errors on the relevant pages and submit a reconsideration request.
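As an illustration of checking markup against type-specific requirements: Google's Product documentation has listed `name` as required, plus at least one of `review`, `aggregateRating`, or `offers`. Below is a minimal sketch of that single check on a parsed JSON-LD object. The requirements change over time, so verify them against the current documentation and use the Rich Results Test for real validation; `checkProduct` is a hypothetical name. Passing it does not make the markup compliant: for example, marked-up ratings must still match reviews that users can actually see on the page.

```javascript
// Minimal sketch: check a parsed Product JSON-LD object for the properties
// Google's Product documentation has required. Not a substitute for the Rich Results Test.
function checkProduct(item) {
  const problems = [];
  if (item["@type"] !== "Product") problems.push('@type is not "Product"');
  if (!item.name) problems.push("missing name");
  if (!item.review && !item.aggregateRating && !item.offers) {
    problems.push("needs one of review, aggregateRating, or offers");
  }
  return problems;
}

const markup = JSON.parse('{"@context":"https://schema.org","@type":"Product","name":"Handmade coffee table"}');
console.log(checkProduct(markup)); // [ 'needs one of review, aggregateRating, or offers' ]
```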

Thin Content with Little or No Added Value

If you publish pages or content with little value, part or all of your site may receive a manual action.

Examples of content with little added value:

- Automatically generated content,

- Doorway pages,

- Illegible pages full of punctuation and spelling errors,

- Content with no useful details and value,

- Illegible texts because of poor design,

- Scraped content,

- Insufficient unique content.

You can watch Google's video on this subject here:

Check your site for duplicate content so that you serve more unique, high-quality content. If you haven't read it yet, I recommend reading the blog post on how to create high-quality content.
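For exact duplicates, one simple approach is to normalize each page's main text and group URLs whose normalized text is identical. Below is a minimal sketch, assuming you already have a map from URL to extracted text (crawling and text extraction are not shown); `findDuplicates` is a hypothetical name. Near-duplicates need a similarity measure instead.

```javascript
// Minimal sketch: group URLs whose normalized text is identical.
// `pages` maps URL -> extracted main text (extraction not shown).
function findDuplicates(pages) {
  const groups = new Map();
  for (const [url, text] of Object.entries(pages)) {
    // Normalize case, punctuation, and whitespace so trivial differences don't hide duplicates.
    const key = text.toLowerCase().replace(/[^a-z0-9]+/g, " ").trim();
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(url);
  }
  return [...groups.values()].filter((urls) => urls.length > 1);
}

console.log(
  findDuplicates({
    "/tables/oak": "Handmade oak table. Free delivery!",
    "/tables/oak?ref=1": "Handmade  oak table - free delivery",
    "/tables/pine": "Solid pine table with drawers.",
  })
);
```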

Sloppy content won't bring you sufficient organic traffic, and an abundance of it may lead to a manual action. Remember that you are responsible for all the content on your site, and consider updating old content that no longer adds value.

Pure Spam

Pure spam is one of the harshest manual actions applied by Google against a website. Sites using aggressive spam methods may get pure spam penalty.

Repeated manual actions may also lead to a pure spam penalty. For instance, if your site is penalized over and over for thin content or unnatural links, you are likely to see a pure spam notification in Search Console. Keyword stuffing and other aggressive spam techniques also fall under this category.

When you get a pure spam penalty, Google removes your pages from its index, typically within 12 to 24 hours of the manual action notification. If you get traffic from platforms such as Google News, your pages may be removed from the news index as well.

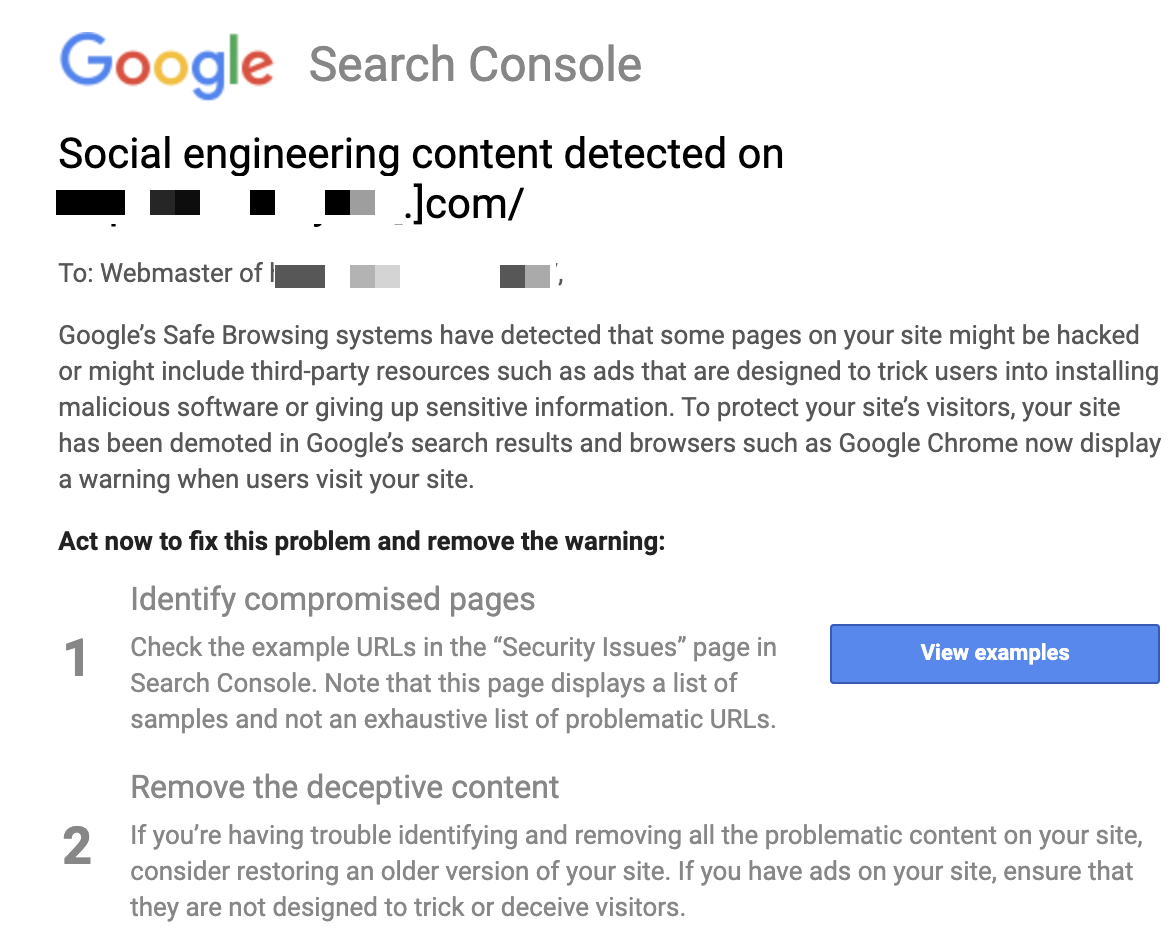

Security Issues

Security issues are different from manual actions. To protect users and inform website owners, Google may warn visitors that a site, or some of its pages, has been hacked. One of the main differences is that manual actions are issued after a human reviews the spam, whereas security issues are usually detected automatically.

You can read the article on sites with malicious behavior. If you have problems such as content injection, you will have to fix them.

You may also encounter a social engineering content issue. If you receive such a notification, follow the steps explained by Google and remove the relevant pages if required:

You should also fix the pages listed under malware or unwanted software. In particular, if your website hosts infected files, you must remove them:

Google News & Discover Manual Actions

Your site may receive a manual action when it violates Google News policies; the reason for the action and the affected pages are shown in Search Console. Your site may also receive a manual action for violating Google Discover guidelines. These types of manual actions have been public since February 8, 2021.

URLs that cause such a violation will be removed from Google News or Discover results. These manual actions concern only the News and Discover policies; they won't affect Google Search results.

In addition, the content of the AMP version should have the same topic as that of the canonical web page. The content does not have to be identical but the users should be reaching the same information on both the AMP and the canonical page. If a violation is detected at this point, your site can get manual action for "AMP content mismatch".

Reconsideration Requests

To have any of the manual actions mentioned in this article removed, fix the problems and then send Google a reconsideration request. The request form is only available when your site has a manual action.

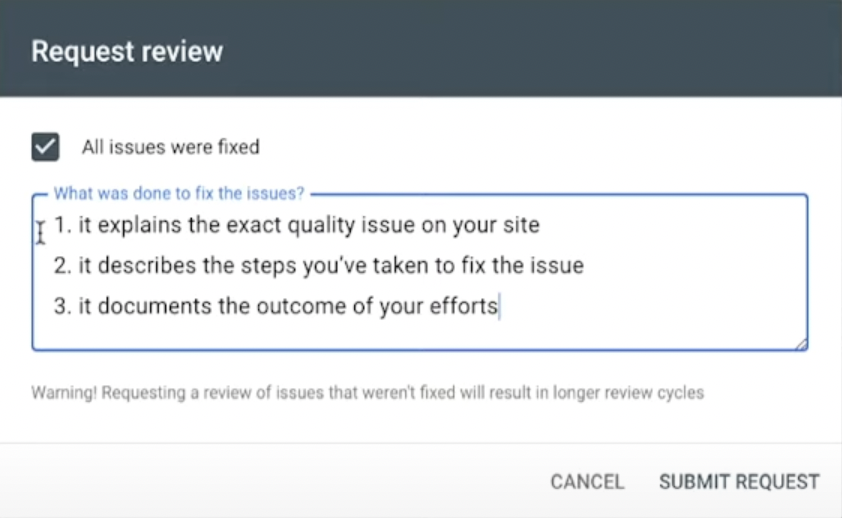

In the form, explain in detail how you fixed the issues and provide evidence. Then wait for the reviewers' decision. Reconsideration requests may take from a few days to a week to be processed, and in the end your request will be either accepted or denied. An example of how to use the form:

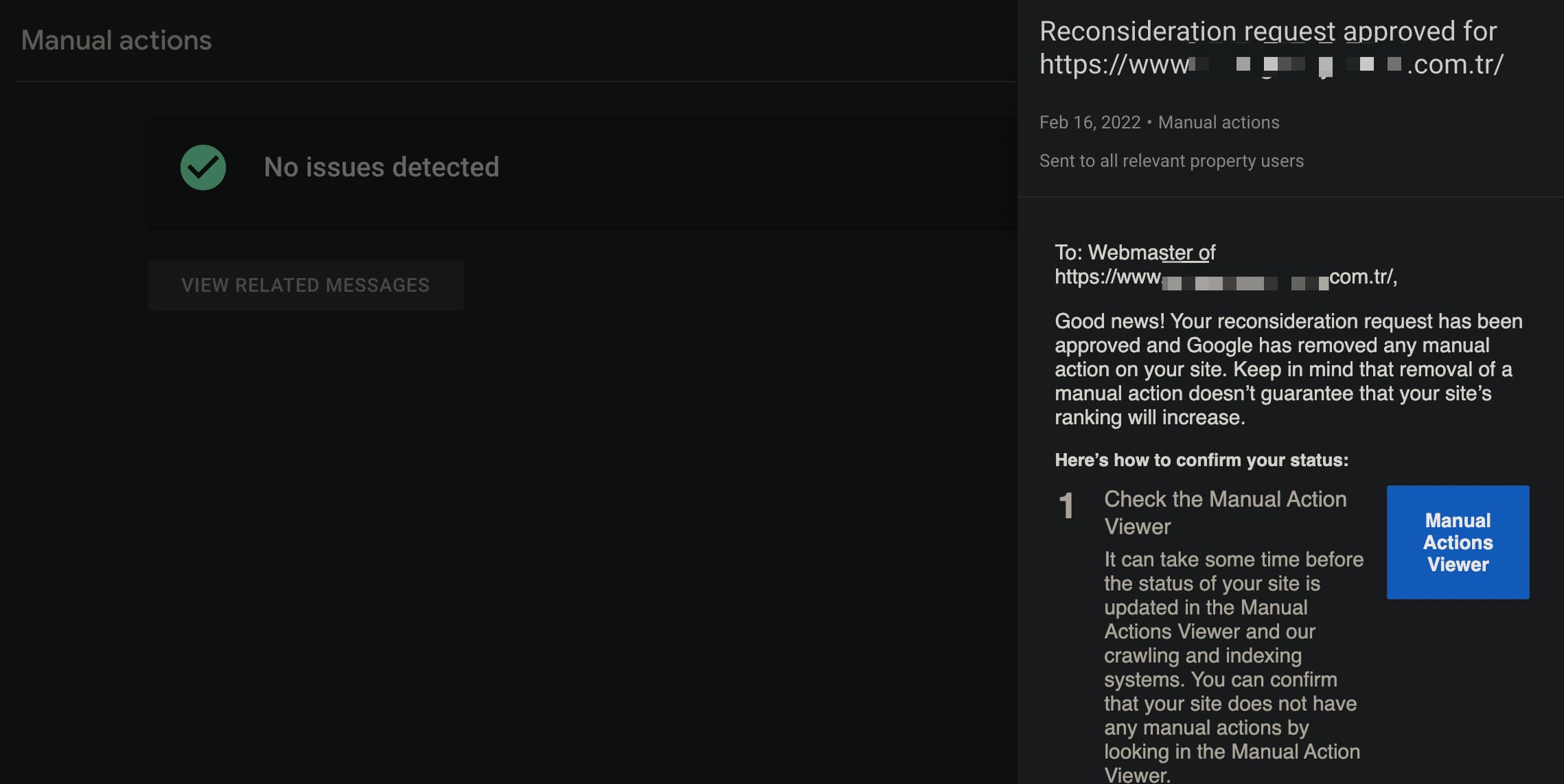

If your request is denied, do not resubmit it until you have fixed all the issues. Also, do not resubmit before you receive the result e-mail, since your site may still be under review. If your request is accepted, you'll probably receive a notification like this one:

Occasionally, reviewers may include notes to help you fix your problems, such as a request to "provide additional evidence".

I would like to answer some frequently asked questions regarding manual actions:

-The domain/site I purchased is banned. What should I do?

In such cases, consider explaining the situation in your reconsideration request.

-Can I get multiple manual actions?

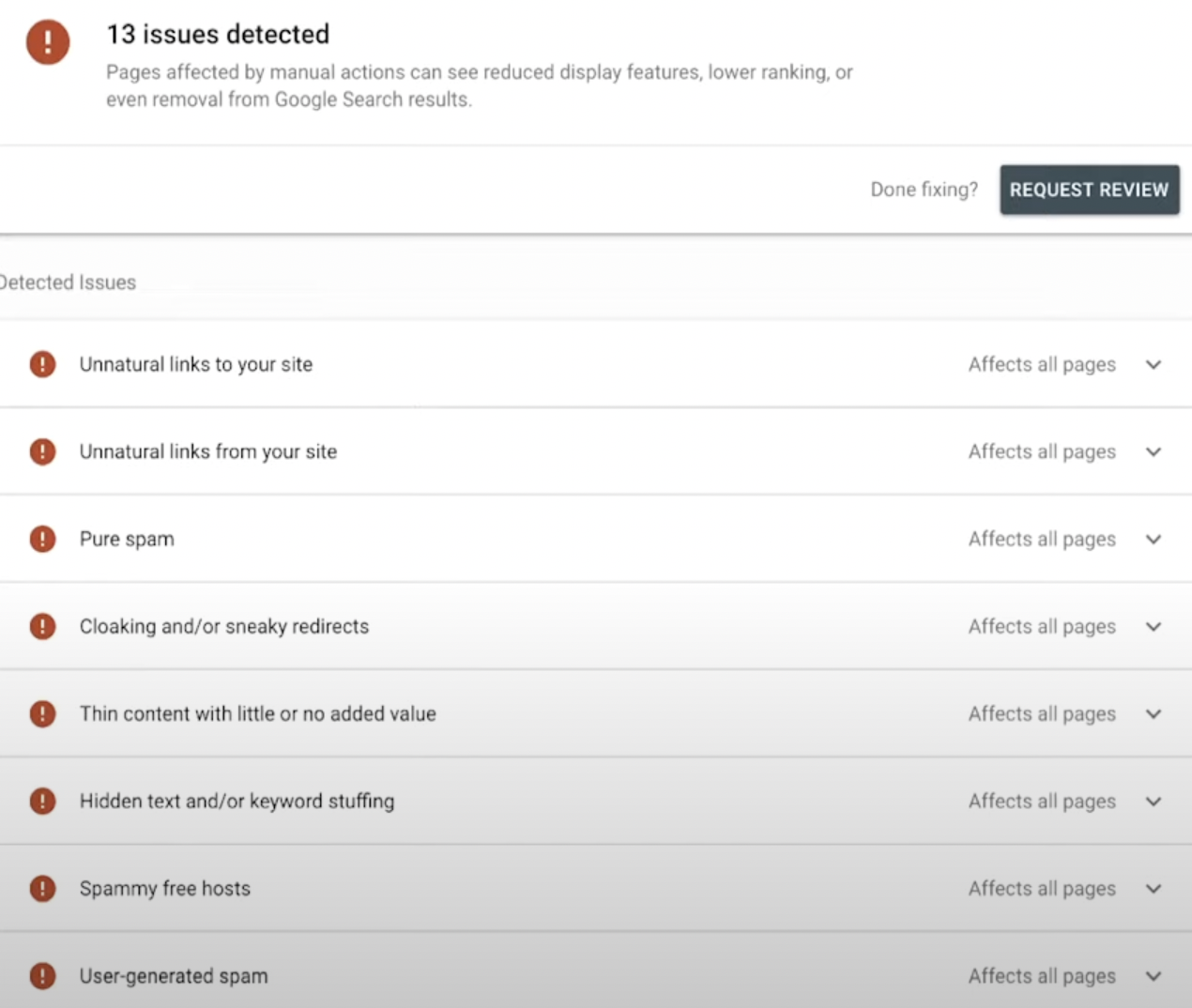

Yes, your site may get more than one manual action. You can see an example of it, which I took from a video:

-How do I get my traffic back after recovering from a manual action?

If part of your traffic came from spam, it is quite normal not to get all of it back, since you removed or edited the relevant pages to recover from the manual action.

-X website has spammy behavior but does not get penalized. Why?

Google is not perfect, of course. Moreover, simply visiting a site won't tell you whether it has a manual action. For instance, website X may have received a manual action for thin content that applies only to certain pages.

-My manual action has been removed but I still have problems with indexing. What do I do?

When you recover from penalties such as pure spam, it is possible to see fluctuations in indexing for a couple of days. You should check for problems on your site that could hinder indexing and fix them.

While writing this article on Google penalties and manual actions, I tried to be as thorough as possible and to rely on evidence and specialists' explanations. Each manual action deserves an article of its own, but I wanted to present them all together. I hope it has been useful for our dear ZEO visitors who took the time to read this article to the end.

That's it. I wish you all a Search Console account that says "No issues detected" :)