AI Crawlers and SEO: Optimization Strategies for Websites

As artificial intelligence (AI) technologies spread, websites need to adapt their SEO strategies. Traditional search engines are no longer the only crawlers that matter. AI crawlers such as OpenAI's GPTBot and Anthropic's ClaudeBot now generate a growing share of web traffic, so websites should also be optimized for these next-generation crawlers.

Let's take a closer look at the technical features of AI crawlers and the most effective optimization strategies for websites.

Technical Specifications of AI Crawlers

Traffic Volume and Distribution

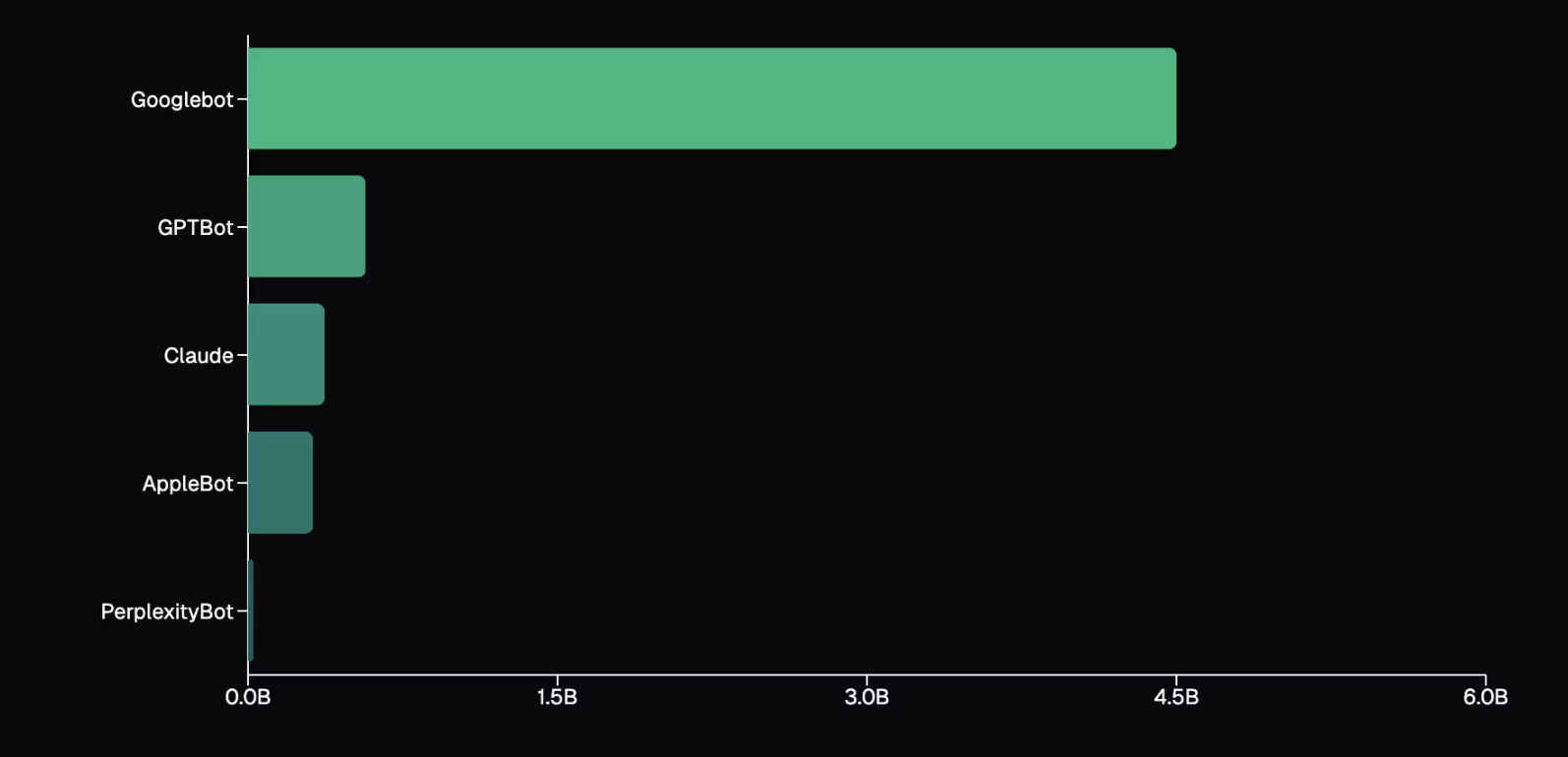

AI crawlers now account for a substantial volume of web traffic. Across Vercel's network, OpenAI's GPTBot made 569 million requests in a single month, while Anthropic's ClaudeBot made 370 million. Together, these two crawlers generated 939 million requests.

PerplexityBot also made a notable 24.4 million requests. To put these figures in perspective, Googlebot made 4.5 billion requests over the same period, so GPTBot and ClaudeBot together amount to roughly 21% of Googlebot's volume. When other AI crawlers such as AppleBot are included, the combined total reaches roughly 28% of Googlebot's requests.
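To make the comparison explicit, here is a small Python sketch that recomputes these shares from the monthly figures quoted in this section. It only includes the three AI crawlers named above, so it lands near 21% rather than the higher total that also counts other AI crawlers. The dictionary and function names are illustrative.

```python
# Monthly request counts (in millions) quoted in this section.
MONTHLY_REQUESTS_M = {
    "GPTBot": 569.0,
    "ClaudeBot": 370.0,
    "PerplexityBot": 24.4,
}
GOOGLEBOT_M = 4500.0  # Googlebot: 4.5 billion requests per month


def share_of_googlebot(bots: list[str]) -> float:
    """Return the combined requests of `bots` as a percentage of Googlebot's volume."""
    total = sum(MONTHLY_REQUESTS_M[name] for name in bots)
    return 100.0 * total / GOOGLEBOT_M


if __name__ == "__main__":
    # GPTBot + ClaudeBot: 939 million requests -> about 20.9%
    print(f"GPTBot + ClaudeBot: {share_of_googlebot(['GPTBot', 'ClaudeBot']):.1f}%")
    # Adding PerplexityBot: 963.4 million requests -> about 21.4%
    print(f"With PerplexityBot: {share_of_googlebot(list(MONTHLY_REQUESTS_M)):.1f}%")
```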

Infrastructure and Access Features

AI crawlers differ from traditional search engines in how they fetch and process website content. The most important difference is JavaScript: OpenAI's GPTBot and Anthropic's ClaudeBot download JavaScript files but do not execute them, so content that is rendered only in the browser is invisible to them. Server-side rendering (SSR) of important content ensures that these bots receive it directly in the HTML.
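One way to check what a non-executing crawler can read is to extract the text from a page's raw HTML while ignoring everything inside `<script>` tags. The Python sketch below does this with the standard library's `html.parser`; it is only a rough approximation of a crawler's view, and the page snippets and the "Trail Shoe X" product are hypothetical examples of a client-side-rendered shell versus a server-side-rendered page.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect text from raw HTML, skipping <script> and <style> contents.

    This roughly approximates what a crawler sees when it fetches HTML
    but never executes JavaScript.
    """

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_without_js(raw_html: str, phrase: str) -> bool:
    """Return True if `phrase` appears in the text of the unexecuted HTML."""
    parser = VisibleTextExtractor()
    parser.feed(raw_html)
    parser.close()
    return phrase in " ".join(parser.chunks)


# Hypothetical client-side-rendered shell: app.js would inject the content.
CSR_PAGE = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'
# Hypothetical server-side-rendered page: the content is already in the HTML.
SSR_PAGE = (
    '<html><body><div id="root"><h1>Trail Shoe X</h1>'
    "<p>Waterproof trail running shoe.</p></div>"
    '<script src="/app.js"></script></body></html>'
)

if __name__ == "__main__":
    print(visible_without_js(CSR_PAGE, "Waterproof"))  # False
    print(visible_without_js(SSR_PAGE, "Waterproof"))  # True
```

If a key phrase only shows up after JavaScript runs, crawlers such as GPTBot and ClaudeBot will most likely miss it.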

Gemini is an exception among AI crawlers: it crawls through Google's infrastructure, the same one Googlebot uses, so it can fully render JavaScript. This gives Gemini a significant advantage in crawling modern web applications and evaluating their dynamic content.

AppleBot uses a browser-based crawler that processes JavaScript and other resources to render full pages. This allows AppleBot to crawl the content of websites more comprehensively than crawlers that only read the raw HTML.

Content Processing Features

AI crawlers also differ in which types of content they prioritize when fetching and processing websites.

OpenAI's ChatGPT focuses heavily on HTML, which accounts for 57.70% of its fetch requests. This shows how much AI crawlers rely on text-based content, and therefore how important well-structured HTML is for SEO.

Images are another important category for AI crawlers. ClaudeBot, for example, devotes 35.17% of its fetch requests to images. This suggests that images play a significant role in how websites are discovered by AI tools, which are increasingly able to analyze visual data.
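The percentages above are each crawler's fetches broken down by content type. As a sketch of how you might compute a similar breakdown from your own server logs, the Python code below assumes a hypothetical, already-parsed log of `(user agent token, content type)` pairs. The sample records are invented for illustration and do not reproduce the source's numbers.

```python
from collections import Counter, defaultdict

# Hypothetical, simplified access-log records: (user_agent_token, content_type).
SAMPLE_LOG = [
    ("GPTBot", "text/html"),
    ("GPTBot", "text/html"),
    ("GPTBot", "application/javascript"),
    ("ClaudeBot", "image/jpeg"),
    ("ClaudeBot", "text/html"),
    ("ClaudeBot", "image/png"),
    ("ClaudeBot", "application/javascript"),
]


def category(content_type: str) -> str:
    """Group MIME types into the broad buckets discussed in this section."""
    if content_type.startswith("image/"):
        return "image"
    if content_type == "text/html":
        return "html"
    if content_type in ("application/javascript", "text/javascript"):
        return "javascript"
    return "other"


def fetch_shares(log):
    """Return {crawler: {category: percentage of that crawler's fetches}}."""
    counts = defaultdict(Counter)
    for agent, content_type in log:
        counts[agent][category(content_type)] += 1
    return {
        agent: {cat: 100.0 * n / sum(c.values()) for cat, n in c.items()}
        for agent, c in counts.items()
    }


if __name__ == "__main__":
    for agent, shares in fetch_shares(SAMPLE_LOG).items():
        print(agent, {cat: f"{pct:.1f}%" for cat, pct in shares.items()})
```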

Because crawlers such as GPTBot and ClaudeBot download JavaScript files but do not execute them, JavaScript remains a significant limitation in how they process content. Web developers should therefore deliver important content through server-side rendering (SSR) so that these crawlers can read it.

Optimization Recommendations for AI Crawlers

Server-Side Rendering Strategies

Server-side rendering (SSR) is critical for both website performance and accessibility to AI crawlers. Rendering dynamic content on the server improves the user experience and makes the content easier for search engines to index, so choosing SSR for dynamic content increases a website's visibility.

In addition to SSR, the following methods are also recommended:

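- Incremental Static Regeneration (ISR): Regenerates individual pre-rendered pages after deployment, so their content can be updated without rebuilding the entire site.

- Static Site Generation (SSG): Pre-renders pages at build time so that static content can be delivered quickly.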

These methods not only improve the performance of websites but also enable AI crawlers to crawl content more effectively.
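To illustrate how ISR builds on SSG, here is a minimal, framework-free Python sketch. The page is rendered once up front (as SSG would do at build time). Once the revalidation window has passed, the next request still receives the cached page, and the page is regenerated so that later requests get the fresh version. Frameworks that implement ISR do the regeneration in the background; this sketch runs it inline for simplicity, and the class and parameter names are hypothetical.

```python
import time
from typing import Callable


class IncrementalStaticPage:
    """A minimal sketch of Incremental Static Regeneration (ISR).

    Every request is answered from the cached HTML. When the cached copy is
    older than `revalidate_seconds`, it is regenerated after the current
    request's response has been chosen, so the next request sees the update.
    """

    def __init__(self, render: Callable[[], str], revalidate_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render
        self._revalidate = revalidate_seconds
        self._clock = clock
        self._html = render()          # pre-rendered, like a static build
        self._generated_at = clock()

    def get(self) -> str:
        html = self._html              # always answer from the cached copy
        if self._clock() - self._generated_at >= self._revalidate:
            # Stale: regenerate for subsequent requests (inline, not background).
            self._html = self._render()
            self._generated_at = self._clock()
        return html
```

Because crawlers always receive fully rendered HTML from the cache, this approach keeps pages readable for bots that do not execute JavaScript while still letting content change over time.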

Optimizing meta information and navigation structure also matters. Meta titles and descriptions are important signals for search engines and help users understand what a page is about before they open it. A clear, well-organized navigation structure improves the user experience and helps AI crawlers discover and crawl your pages more effectively.
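Meta titles and descriptions only help crawlers that do not run JavaScript if they are present in the HTML the server sends. A minimal Python sketch of rendering them on the server is shown below, with the values HTML-escaped so that quotes or ampersands in a title cannot break the markup. The `render_head` helper is hypothetical; a real site would typically produce this through its templating engine or framework.

```python
from html import escape


def render_head(title: str, description: str) -> str:
    """Build <head> metadata on the server so it is present in the raw HTML."""
    return (
        "<head>\n"
        f"  <title>{escape(title)}</title>\n"
        f'  <meta name="description" content="{escape(description, quote=True)}">\n'
        "</head>"
    )


if __name__ == "__main__":
    print(render_head('Q3 "Results" & Outlook', "Quarterly results & guidance."))
```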

Although AI crawlers are expected to become better at processing JavaScript in the future, it is unclear how quickly that will happen, so critical content and resources should still be delivered with SSR in the meantime.

Crawler Control and Security

As AI-based crawlers become more common, website security and content protection matter even more, so crawler control and security strategies should be a priority for webmasters.

The `robots.txt` file lets you specify which parts of your website search engines and AI bots may crawl. Well-behaved crawlers, including GPTBot and ClaudeBot, follow these rules, but compliance is voluntary and the file itself is publicly readable, so `robots.txt` is a way to steer crawlers rather than a way to protect sensitive content. A typical use case: suppose your company publishes quarterly financial reports. Older reports quickly become outdated, so you might disallow AI bots from crawling the report archive. This makes it less likely that AI systems collect and repeat stale figures, and helps keep the current, official data as the version they see.
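As a sketch of the financial-reports example, the Python code below defines a `robots.txt` that disallows GPTBot and ClaudeBot from a report archive while leaving everything else open, then checks the rules with the standard library's `urllib.robotparser`. The `/reports/archive/` path and `example.com` URLs are hypothetical. The parser only reports what the rules say; whether a crawler actually obeys them is up to the crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep AI crawlers out of an archive of old reports
# while leaving the rest of the site (and other crawlers) unaffected.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /reports/archive/

User-agent: ClaudeBot
Disallow: /reports/archive/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    archived = "https://example.com/reports/archive/2021-q3.html"
    for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
        # Prints False for GPTBot and ClaudeBot, True for Googlebot.
        print(agent, parser.can_fetch(agent, archived))
```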

Web application firewalls (WAFs) are an effective tool for controlling access by unwanted AI bots and other malicious traffic. With a WAF, you can block specific crawlers or filter traffic based on rules you define, which is especially useful for websites that receive heavy unwanted bot traffic. Besides improving security, filtering this traffic before it reaches your servers can reduce server load and improve your site's performance.
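The kind of rule a WAF applies can be sketched in a few lines of Python. The example below blocks requests whose `User-Agent` header contains one of a hypothetical list of crawler tokens, but only on a hypothetical protected path prefix, and lets everything else through. Real WAFs express such rules in their own configuration languages. Because the `User-Agent` header is self-reported and easy to spoof, identity checks in practice often also rely on IP ranges or reverse-DNS verification published by crawler operators.

```python
# Hypothetical blocklist and protected path; adjust to your own policy.
BLOCKED_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")
PROTECTED_PREFIX = "/reports/"


def waf_decision(user_agent: str, path: str) -> int:
    """Return 403 to block a request or 200 to let it through.

    Matches crawler tokens case-insensitively inside the User-Agent header.
    The header can be spoofed, so treat this as a first-pass filter rather
    than proof of a crawler's identity.
    """
    ua = user_agent.lower()
    is_blocked_bot = any(token.lower() in ua for token in BLOCKED_CRAWLERS)
    if is_blocked_bot and path.startswith(PROTECTED_PREFIX):
        return 403
    return 200


if __name__ == "__main__":
    bot_ua = "Mozilla/5.0 (compatible; GPTBot/1.0)"  # illustrative UA string
    print(waf_decision(bot_ua, "/reports/2021-q3.html"))  # 403
    print(waf_decision(bot_ua, "/blog/ai-crawlers"))      # 200
```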

Protecting sensitive content is a critical element of website security. This content can include important information such as user data, financial information, or private documents. Access control and encryption methods should be used to protect such content.
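Unlike `robots.txt`, access control is enforced by the server, so it applies to every client: browsers, compliant crawlers, and scrapers alike. The Python sketch below shows the idea with a bearer-token check on hypothetical sensitive path prefixes, using `hmac.compare_digest` so that the comparison time does not reveal how much of a guessed token matched. The token handling is simplified for illustration; a production system would use a proper authentication framework and load secrets from secure storage.

```python
import hmac
import secrets
from typing import Optional

# Hypothetical secret and protected paths; load real secrets from secure storage.
API_TOKEN = secrets.token_urlsafe(32)
SENSITIVE_PREFIXES = ("/internal/", "/reports/drafts/")


def authorize(path: str, authorization_header: Optional[str]) -> int:
    """Return 200 if the request may proceed, 401 if credentials are missing or wrong."""
    if not path.startswith(SENSITIVE_PREFIXES):
        return 200  # public content: no credentials required
    if authorization_header is None:
        return 401
    expected = f"Bearer {API_TOKEN}"
    # Compare as bytes so non-ASCII input cannot raise, in constant time.
    if hmac.compare_digest(authorization_header.encode(), expected.encode()):
        return 200
    return 401
```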

Caching and Content Timeliness

Caching strategies play an important role in content optimization. Caching makes pages load faster, but cache lifetimes must match how often the content changes: frequently updated content should be purged or revalidated regularly so that users and crawlers receive the most up-to-date information. The goal is to balance content timeliness against website performance.
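One common way to express that balance is through the HTTP `Cache-Control` header. The Python sketch below builds header values from a freshness lifetime (`max-age`) and an optional `stale-while-revalidate` window, during which a cache may keep serving the old copy while it fetches a new one. The content categories and the specific lifetimes in `POLICIES` are hypothetical and should be tuned to how often your own content actually changes.

```python
def cache_control(max_age: int, stale_while_revalidate: int = 0, shared: bool = True) -> str:
    """Build a Cache-Control header value.

    max_age: seconds a cached copy counts as fresh.
    stale_while_revalidate: extra seconds a cache may serve a stale copy
        while it fetches a fresh one in the background.
    shared: if True, CDNs and proxies may cache it ("public"); else "private".
    """
    if max_age <= 0:
        # Caches may store the response but must revalidate before reusing it.
        return "no-cache"
    parts = ["public" if shared else "private", f"max-age={max_age}"]
    if stale_while_revalidate > 0:
        parts.append(f"stale-while-revalidate={stale_while_revalidate}")
    return ", ".join(parts)


# Hypothetical policy: fast-changing pages get short lifetimes, stable ones long.
POLICIES = {
    "news_article": cache_control(60, stale_while_revalidate=300),
    "product_page": cache_control(3600, stale_while_revalidate=86400),
    "static_asset": cache_control(31536000),
    "live_prices": cache_control(0),
}
```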

Future Trends and Preparation

Web technologies and AI-based crawlers are evolving quickly, and crawlers' JavaScript rendering capabilities are expected to improve further. This would let AI crawlers understand dynamic content more effectively and analyze website content more easily. Meanwhile, wider adoption of approaches such as SSR and SSG will make content more accessible and crawlable.

These expected improvements in crawler efficiency are also likely to have a major impact on SEO strategies. AI-based crawlers are expected to crawl content faster and more accurately using more advanced algorithms, which could simplify content optimization for webmasters. This should lead to better indexing of websites and, in turn, a better user experience.

The increase in the capacity of artificial intelligence (AI) models to understand content also stands out as an important trend. In the future, these models are expected to understand, analyze, and interpret more complex and detailed content. This will require web developers to carefully consider the way they present their content.

Rapid developments in AI technologies keep reshaping web standards and search engine algorithms, so webmasters and SEO professionals need to keep learning and optimizing. As AI's ability to understand and interpret content grows, a proactive and adaptive approach will matter even more for website success. Web developers and SEO experts who adapt to these changes and keep learning will be best placed to stay ahead of the competition.

Source: https://vercel.com/blog/the-rise-of-the-ai-crawler