Automating Crawl Reports in Looker Studio

In this article, we will look at how to make the crawl reports we generate with Screaming Frog easier to understand and to track over time.

To do this, we will use three main tools: Screaming Frog, Google Sheets and Google Looker Studio.

Screaming Frog is an SEO crawler that has improved considerably in recent years. Here, we will use it to send a scheduled crawl to our Google Drive account. We will then use a Looker Studio template to view the crawl reports on a single screen.

The goal is to see clearly how sites change over time, especially sites where we periodically do intensive technical work. It also lets us provide more meaningful data in client reports.

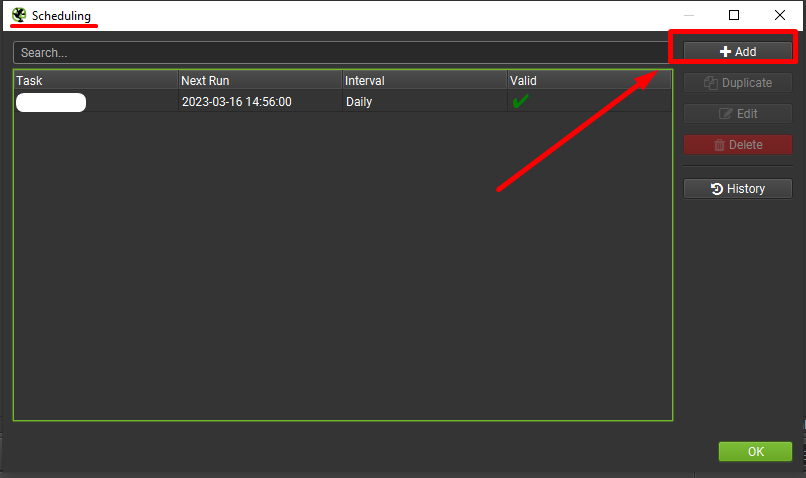

Crawl Scheduling

A Looker Studio-compatible export is only available through the crawl scheduling feature, found under File > Scheduling in the top menu.

To create a scheduled crawl, click the "Add" button shown in the image.

Note: If you want to set this up for multiple domains, you can do it faster with the "Duplicate" button once the first configuration is complete.

If you duplicate a task, remember to update the source URL in the copy.
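The "duplicate, then change the source URL" workflow can be planned ahead with a short script before you click through the dialog. This is a minimal sketch. The dictionary keys are hypothetical and only mirror the fields in the scheduling dialog; they are not a Screaming Frog file format. The config file name is also a placeholder.

```python
from copy import deepcopy

# Hypothetical representation of one scheduled task. The keys mirror the
# scheduling dialog fields but are NOT a Screaming Frog file format.
BASE_TASK = {
    "task_name": "Weekly crawl",
    "project_name": "Client reports",
    "mode": "spider",
    "source_url": "https://example.com/",
    "config_file": "project.seospiderconfig",  # placeholder path
    "interval": "weekly",
}


def duplicate_for_domains(base: dict, domains: list[str]) -> list[dict]:
    """Copy the base task once per domain, updating the source URL and task name."""
    tasks = []
    for domain in domains:
        task = deepcopy(base)  # leave the base task untouched
        task["source_url"] = f"https://{domain}/"
        # A unique task name per domain avoids confusing similar tasks.
        task["task_name"] = f"{base['task_name']} - {domain}"
        tasks.append(task)
    return tasks


for task in duplicate_for_domains(BASE_TASK, ["example.org", "example.net"]):
    print(task["task_name"], task["source_url"])
```

The checklist this prints makes it easy to confirm that every duplicated task got a new source URL and a distinct name.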

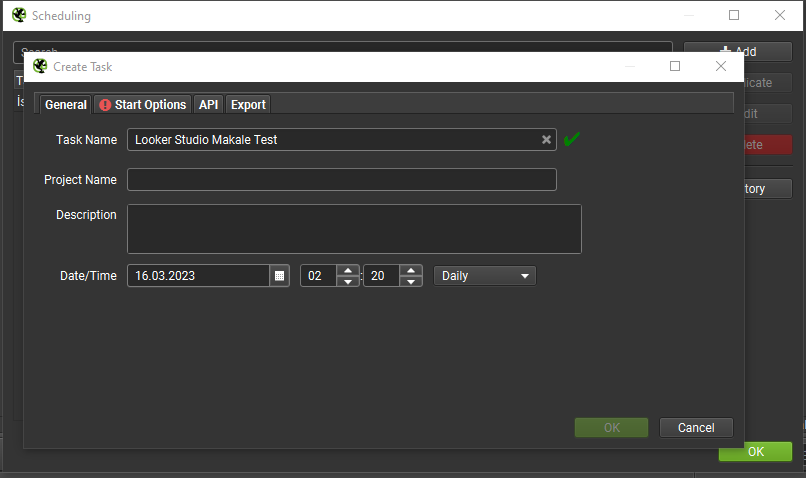

Creating Basic Configuration Settings

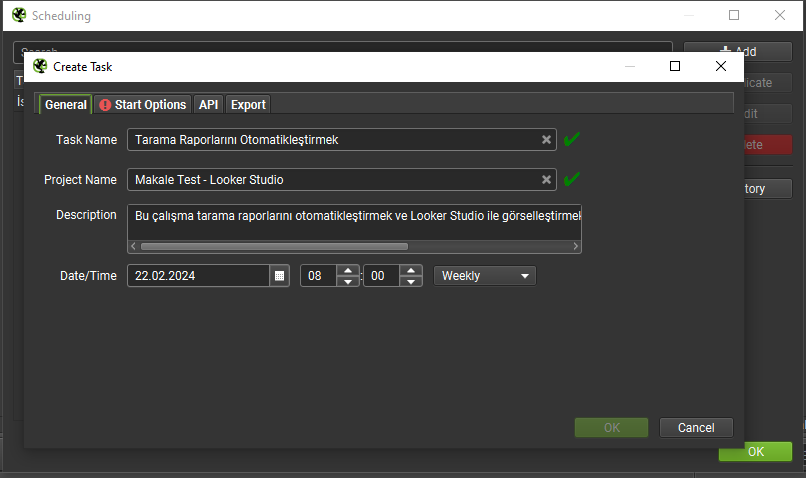

After clicking "Add", you will see the screen shown in the image.

Here, fill in the fields to suit your needs. It is a good idea to give the scheduled task a unique Task Name. To avoid confusing it with similar tasks, I also recommend specifying a Project Name.

You also need to set when the first crawl will run and how often it should repeat after that.
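To see how a start time and an interval translate into actual run dates, here is a small sketch. It assumes a fixed interval such as one week. The start date is arbitrary, and a monthly schedule would need calendar arithmetic rather than a fixed `timedelta`.

```python
from datetime import datetime, timedelta


def upcoming_runs(first_run: datetime, interval: timedelta, count: int) -> list[datetime]:
    """Return the first `count` run times: the initial run plus repeats at `interval`."""
    return [first_run + i * interval for i in range(count)]


# Example: first crawl at 03:00, repeating weekly (arbitrary illustrative date).
for run in upcoming_runs(datetime(2024, 1, 8, 3, 0), timedelta(weeks=1), 4):
    print(run.isoformat(sep=" "))
```

Scheduling crawls at a quiet hour, as in this example, also keeps them from competing with daytime work on the same machine.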

Configuring Start Options

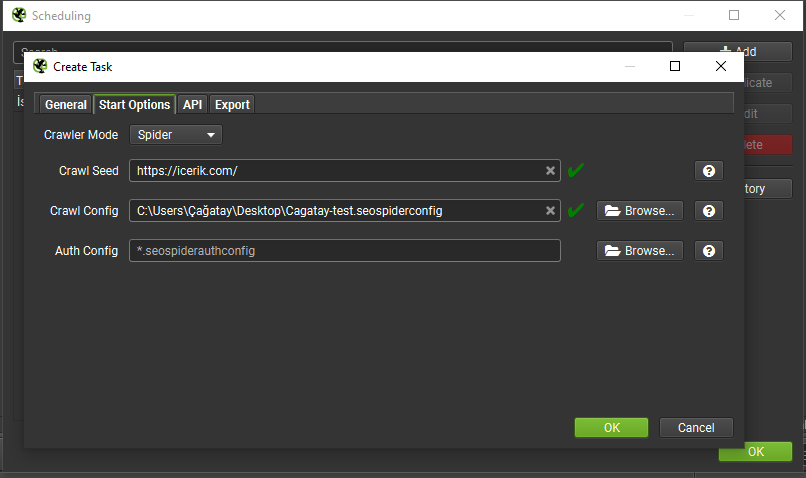

Once the basic settings are in place, configure the start options by clicking "Start Options" in the side panel.

The tool offers two modes here: crawling in Spider mode or in List mode.

I prefer Spider mode, which lets me enter my details quickly.

In the Crawl Config field, we can load a Spider configuration file that we saved previously.

For example, I created and saved a configuration file for this project and will use it here. The crawl then runs with the settings defined in that file.

You can find detailed information about this topic here.

Note that I have also enabled the PageSpeed Insights API in the API section.

Configuring Export Settings

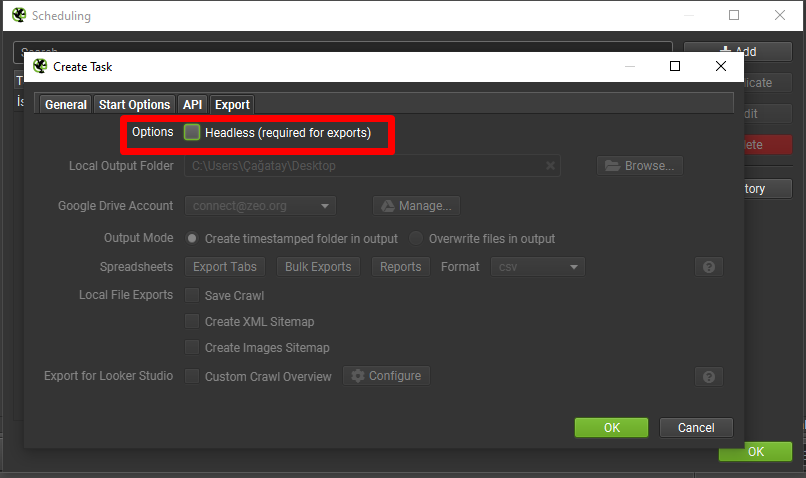

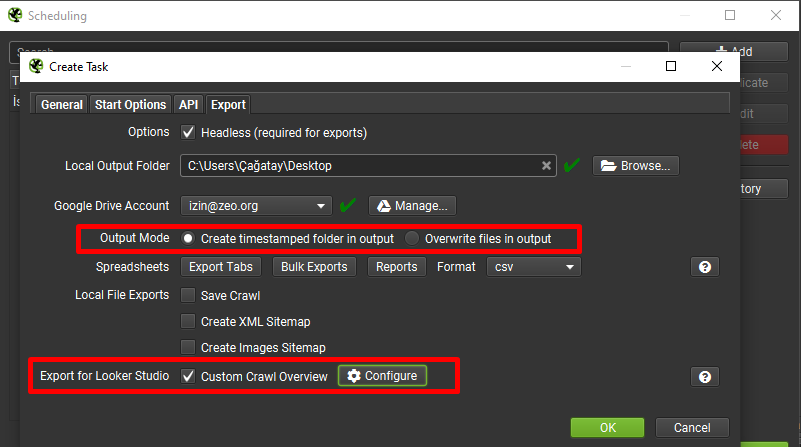

For Looker Studio-compatible exports to work, the crawl must run in headless mode, so make sure this option is enabled.
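Headless mode means the SEO Spider runs without its graphical interface. The SEO Spider also has a command-line interface, and the sketch below only assembles such a headless command without executing it. The executable name assumes a Linux install and differs on Windows and macOS. Verify the flags used here against `--help` for your installed version. The config path and output folder are placeholders.

```python
import shlex


def build_sf_command(
    url: str,
    config_path: str,
    output_folder: str,
    executable: str = "screamingfrogseospider",  # assumed Linux binary name
) -> list[str]:
    """Assemble a headless Screaming Frog crawl command (verify flags with --help)."""
    return [
        executable,
        "--crawl", url,            # start a Spider-mode crawl from this URL
        "--headless",              # run without the GUI
        "--config", config_path,   # saved crawl configuration file
        "--use-pagespeed",         # use the PageSpeed Insights API set up under API access
        "--output-folder", output_folder,
        "--save-crawl",            # keep the crawl file alongside any exports
    ]


cmd = build_sf_command("https://example.com/", "project.seospiderconfig", "/tmp/sf-exports")
print(shlex.join(cmd))  # inspect before running, e.g. subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems when paths contain spaces.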

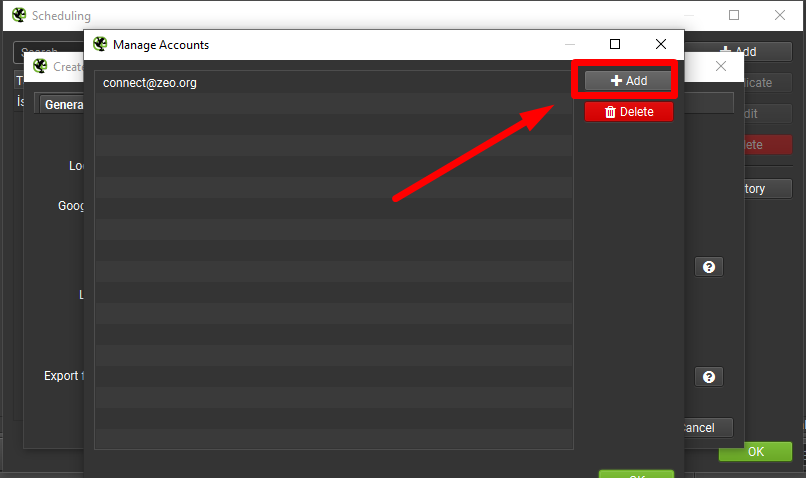

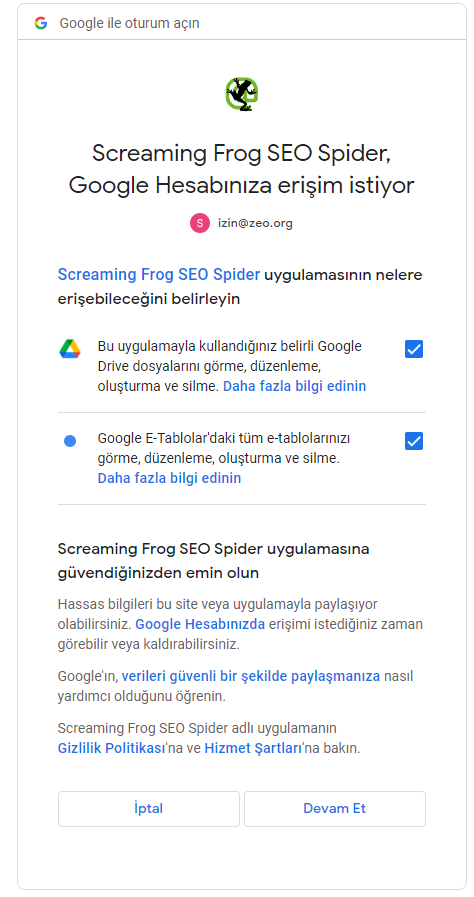

Next, I add my Google account by clicking "Manage" in the Google Drive Account section.

I then complete the connection by granting the requested permissions, as shown in the images.

Once the SEO Spider has been authorized, I adjust the settings as follows.

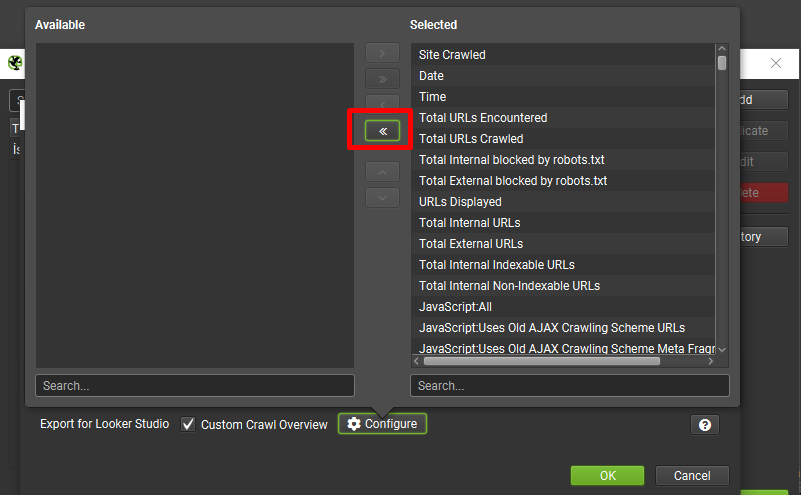

After selecting the Custom Crawl Overview report, I choose which data to export in the Configure section.

Since I wanted to export every available field, I clicked the ">>" button to move them all across at once.

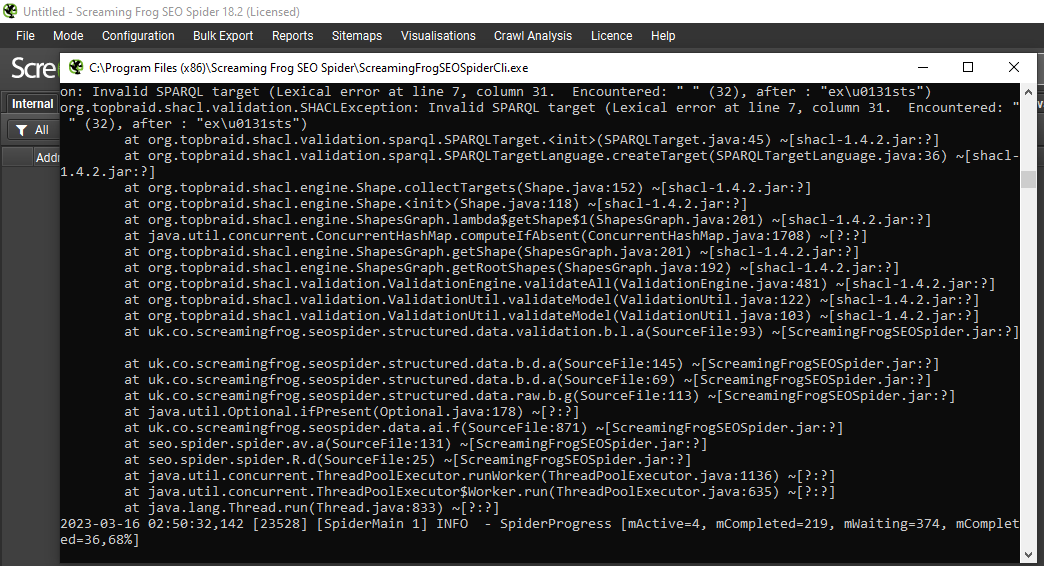

After making all the adjustments and clicking OK, the crawl runs, as shown in the image.

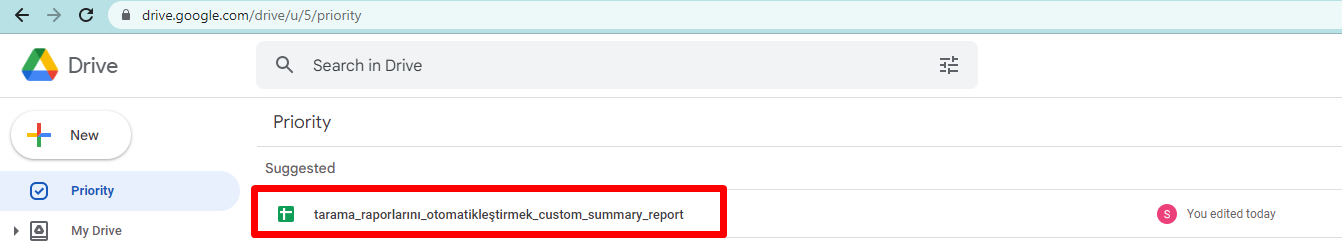

When the crawl has finished, check the Google Drive account we connected.

There we can see the exported file:

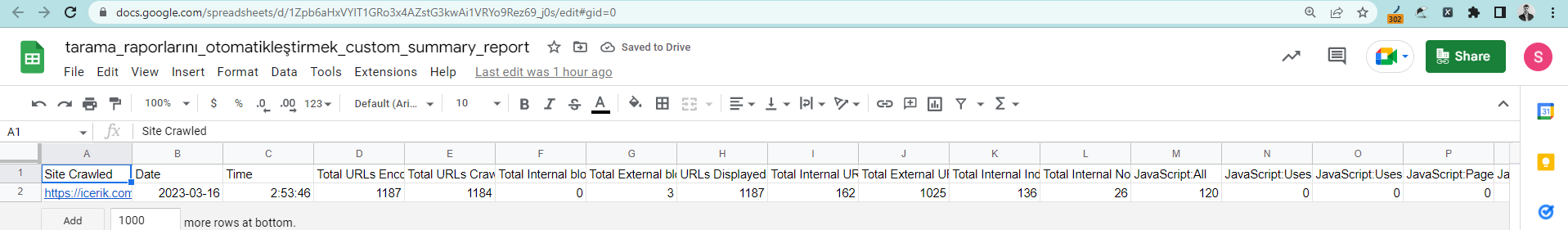

As the image shows, the data is exported as a single row.

The same file will be overwritten each time the weekly scheduled crawl runs, rather than a new file being created.
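If you download the sheet as CSV to check it outside Looker Studio, the header-plus-one-row layout is easy to read programmatically. This is a minimal sketch. The sample column names and values are hypothetical; your real columns come from the fields selected under Configure.

```python
import csv
import io

# Hypothetical single-row export: one header line and one data line.
SAMPLE_EXPORT = """Crawl Timestamp,Internal HTML,Response Codes: Client Error (4xx),Indexable
2024-01-08 03:00:00,1520,12,1387
"""


def read_single_row(text: str) -> dict[str, str]:
    """Parse a header row plus exactly one data row into a field -> value mapping."""
    rows = list(csv.DictReader(io.StringIO(text)))
    if len(rows) != 1:
        raise ValueError(f"expected one data row, found {len(rows)}")
    return rows[0]


row = read_single_row(SAMPLE_EXPORT)
print(row["Internal HTML"])
```

Raising an error when the row count is not exactly one makes an unexpected export layout obvious instead of silently reading the wrong line.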

Connecting to Google Looker Studio

At this point, most of the technical work is done.

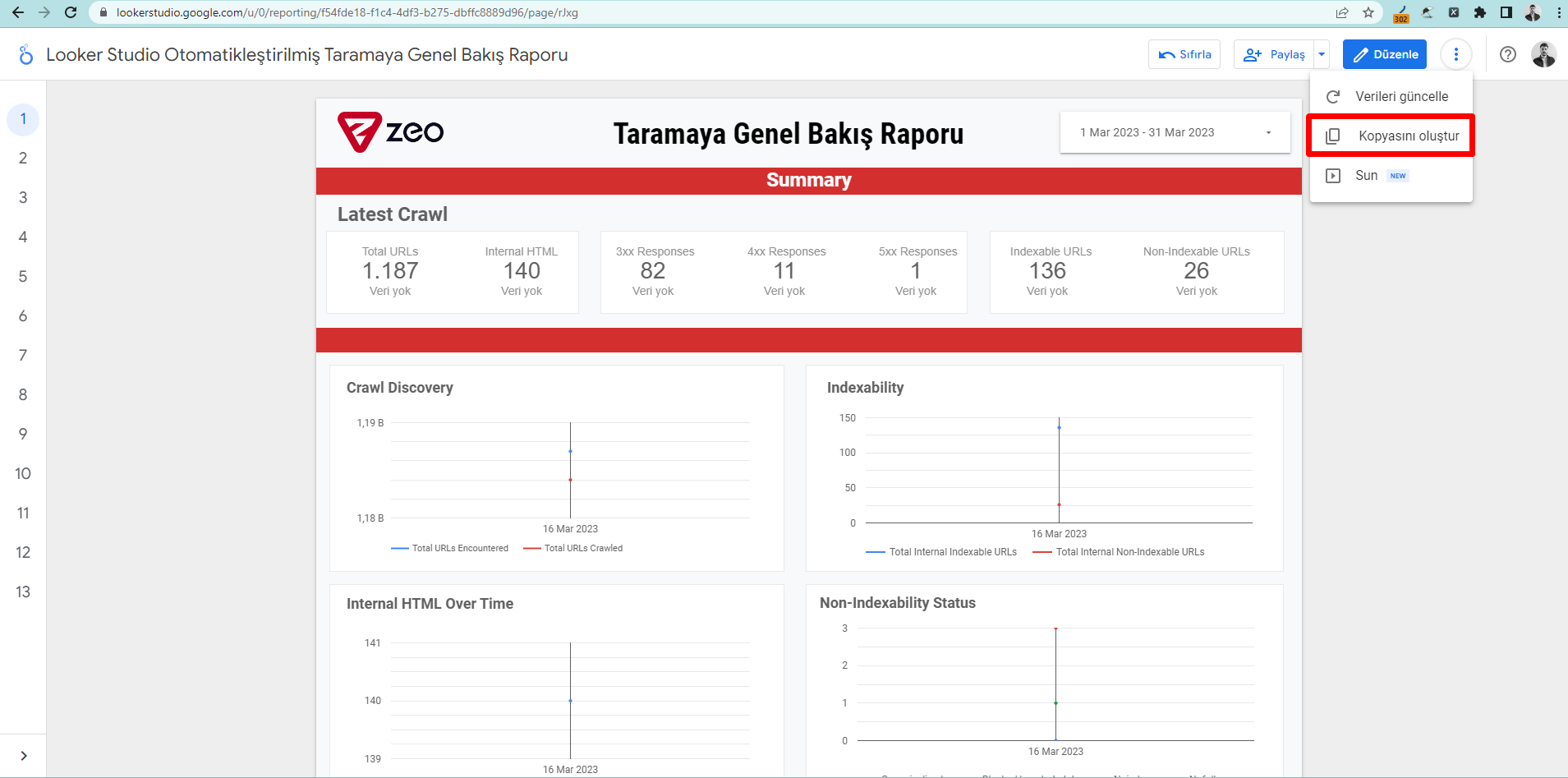

From here, you can either build your own Looker Studio report from scratch or make a copy of the report we have created here and point it at your own data.

To copy the report, click the three-dot menu at the top right and then click "Create Copy".

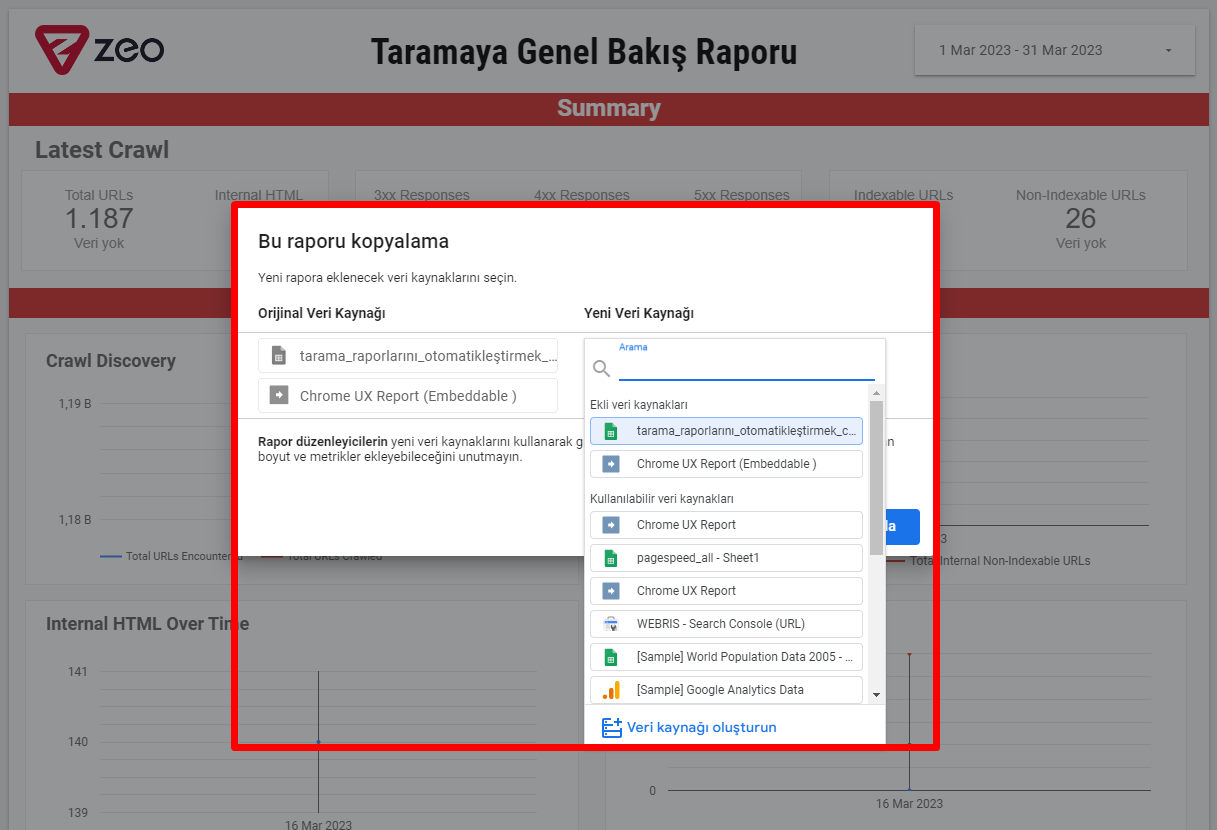

Next, for each original data source, select your own file as the new data source, as shown in the image.

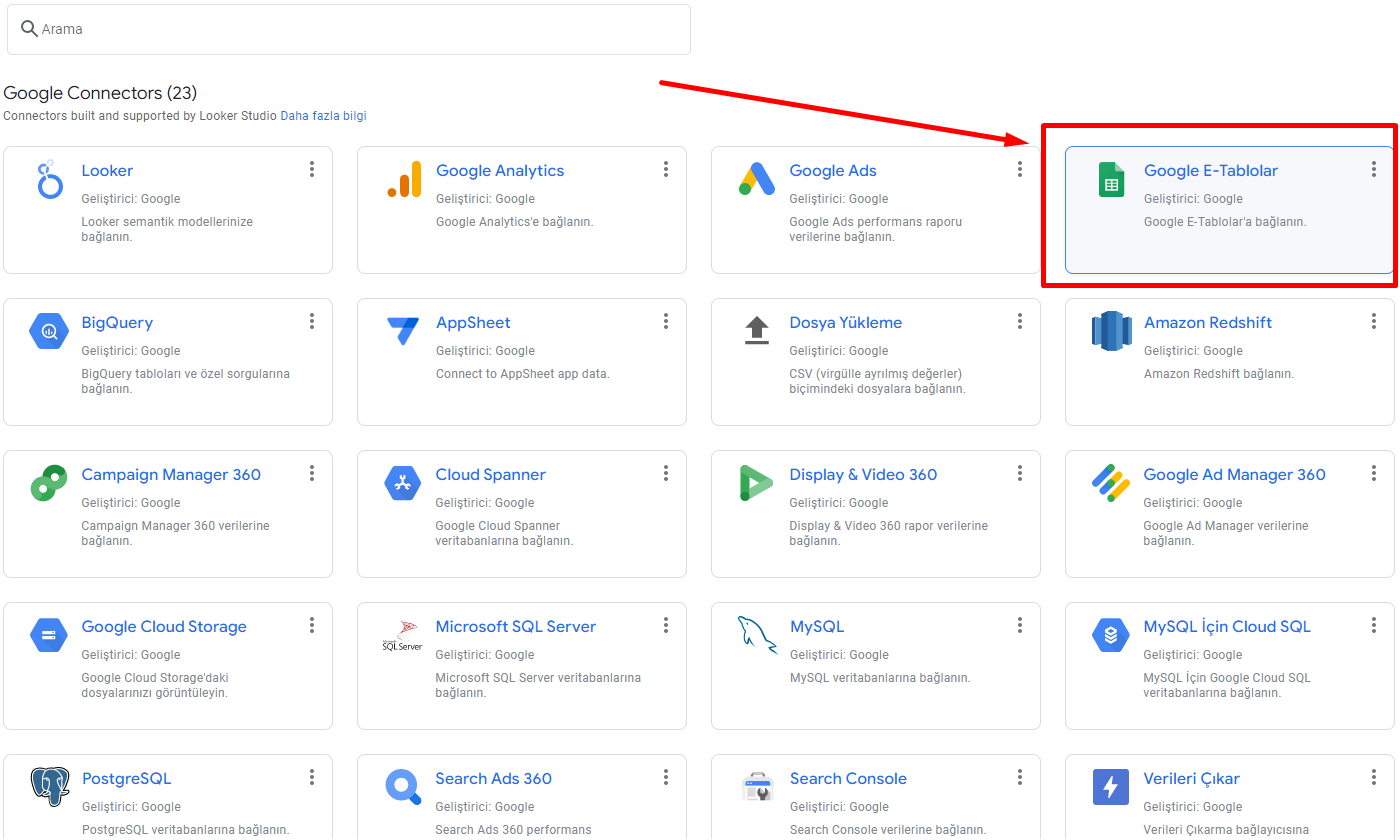

When choosing the new data source, follow the steps in the image to select your spreadsheet.

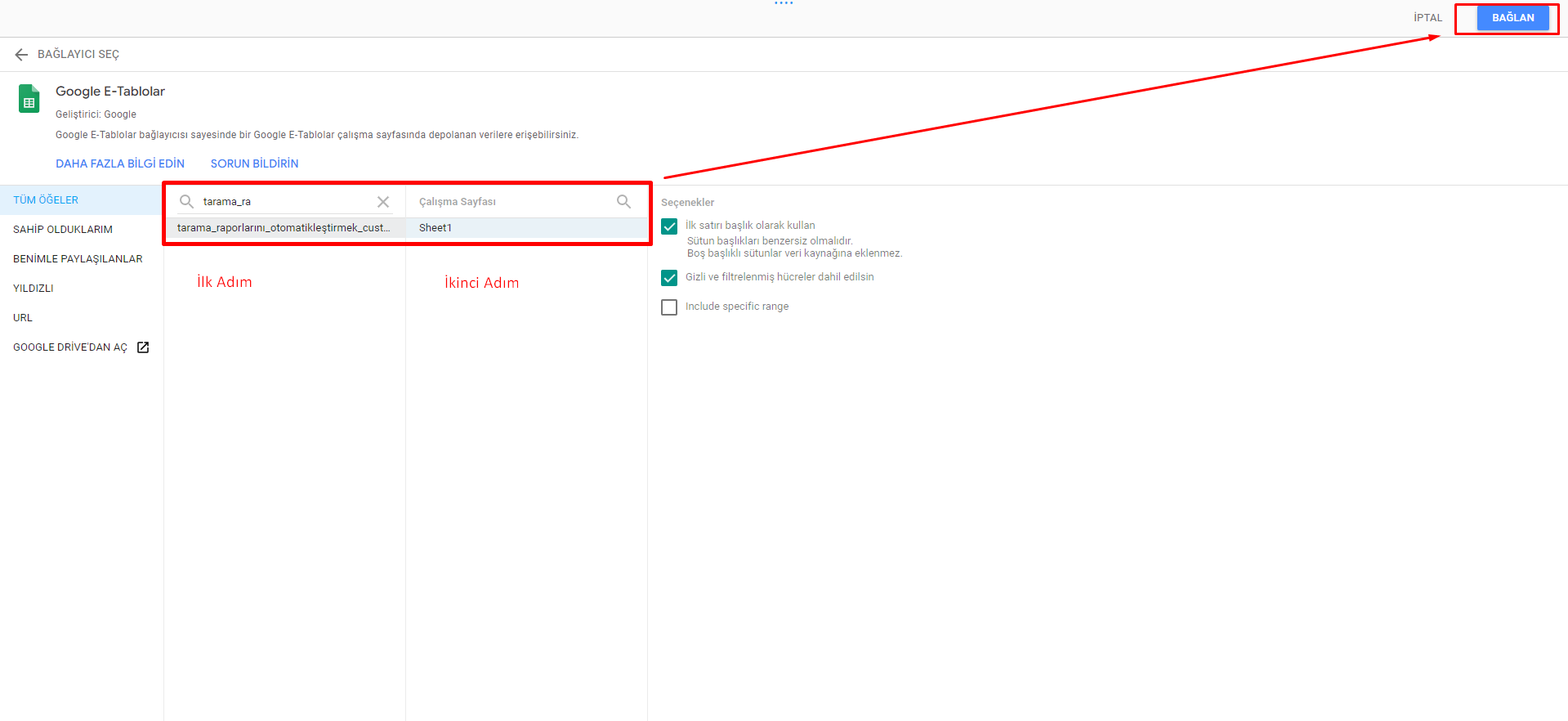

After selecting Google Sheets as the new data source, follow the steps shown in the image.

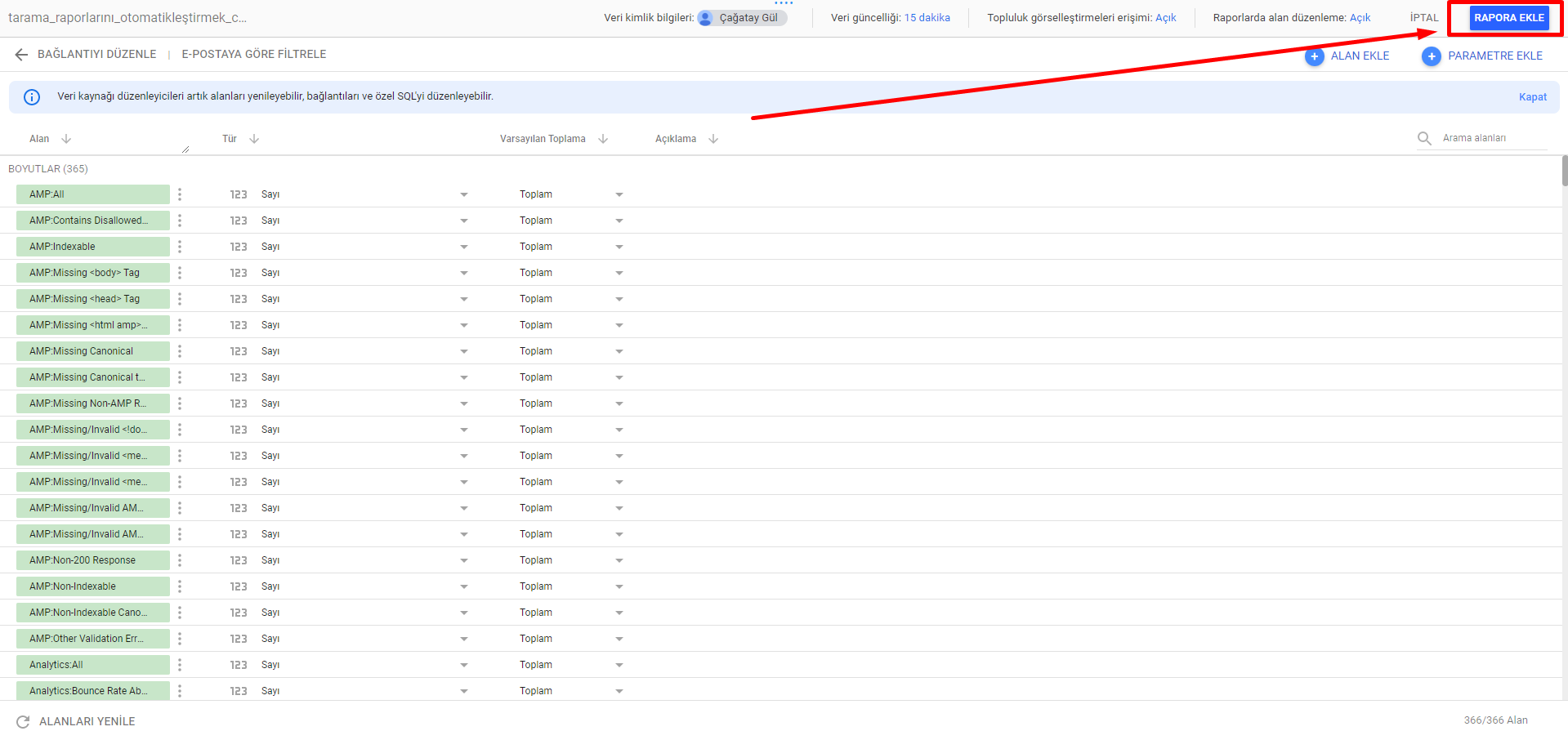

After you click "Connect", the next screen appears. Complete this step by clicking "Add to report".

After the data is added to the report, some charts may show errors, or Looker Studio may detect some fields as Date rather than Number. You can correct these field types manually.
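Before correcting field types by hand, it can help to list which exported values are actually numeric, since those are the fields that should be set to Number in the data source editor. This is a rough heuristic sketch. The sample field names and values are hypothetical. It assumes commas are only thousands separators, which would not hold in locales that use a decimal comma.

```python
def numeric_fields(row: dict[str, str]) -> list[str]:
    """Return the names of fields whose values parse as numbers."""
    names = []
    for name, value in row.items():
        try:
            float(value.replace(",", ""))  # tolerate thousands separators like "1,387"
        except ValueError:
            continue  # not numeric, e.g. a timestamp or a domain name
        names.append(name)
    return names


# Hypothetical exported row.
row = {
    "Crawl Timestamp": "2024-01-08 03:00:00",
    "Internal HTML": "1520",
    "Indexable": "1,387",
    "Site": "example.com",
}
print(numeric_fields(row))
```

Any field this lists that Looker Studio shows as Date is a candidate for switching back to Number.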

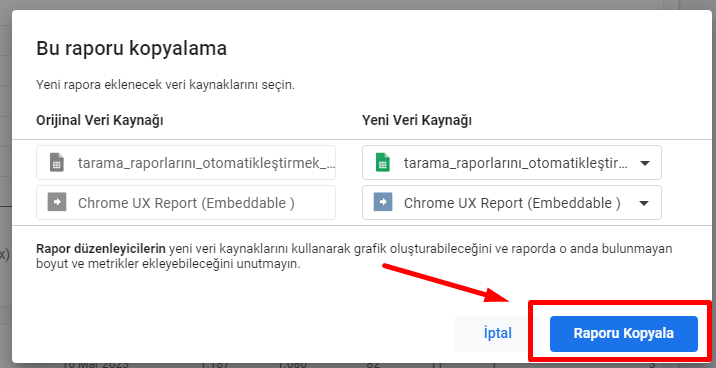

After clicking "Add to report", finish by clicking "Copy Report", as shown in the image.

Once copied, the Looker Studio report will refresh every time the scheduled crawl runs, so you can analyze the data visually each week.

This template was created as an example and covers general site health. We plan to expand it over time.

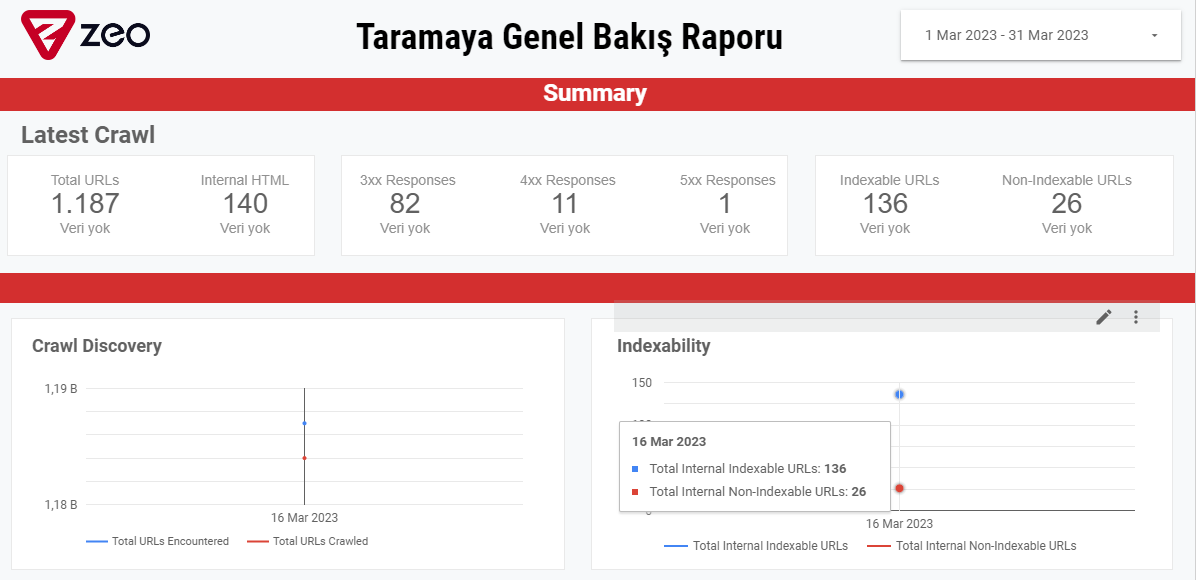

Here are some screenshots from our report:

Overview

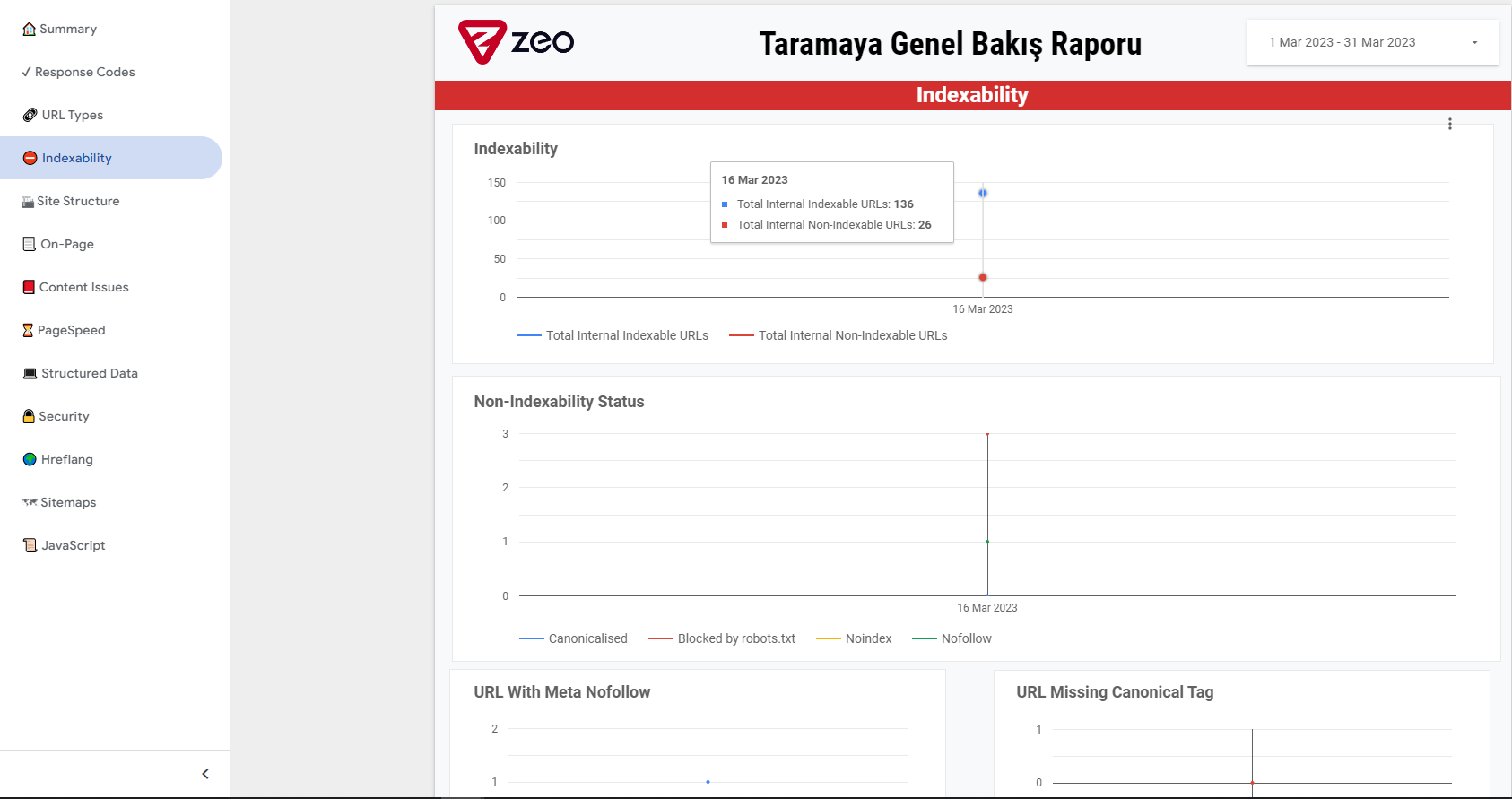

Indexability

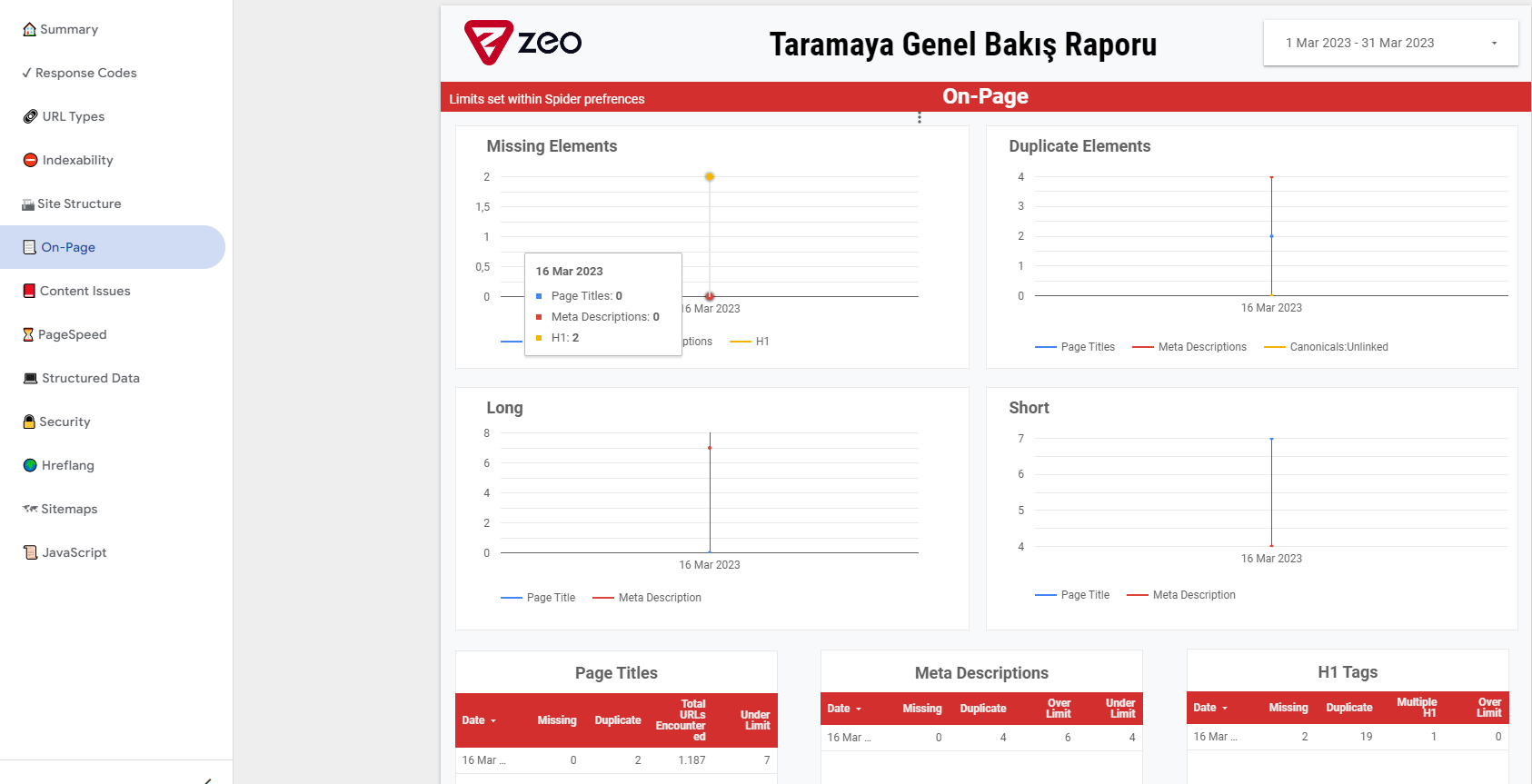

On-Page

All the elements on these pages are designed to alert you quickly to site problems and important changes.

The template includes the following pages:

- Summary - Provides an overview of the items below.

- Response Codes - Shows page response codes and information about blocked pages.

- URL Types - Lets you regularly review data on internal HTML pages, images, JavaScript files and similar resources.

- Indexability - Lets you check site-wide indexability status.

- Site Structure - Lets you monitor changes in internal linking.

- On-Page - Lets you track changes to on-page elements.

- Content Issues - Lets you track issues such as duplicate and low-quality content.

- Page Speed - Lets you systematically track Core Web Vitals (CWV) performance and the impact of your optimization work.

- Structured Data - Lets you monitor the status of structured data across the site.

- Security - Lets you check security issues and HTTP headers on a per-page basis across the site.

- Hreflang - Lets you validate hreflang, which matters most for multilingual sites.

- Sitemaps - Lets you check your XML sitemaps.

- JavaScript - Lets you quickly track JavaScript-related optimization items.
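For the Page Speed page, one way to turn raw CWV values into the familiar good / needs improvement / poor buckets is to apply Google's published thresholds. This is a sketch. The metric keys are hypothetical labels, not Screaming Frog column names. Google applies these thresholds to 75th-percentile field data, so check which values your export actually contains.

```python
# Google's published Core Web Vitals thresholds:
# (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP_ms": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP_ms": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),      # Cumulative Layout Shift, unitless
}


def rate(metric: str, value: float) -> str:
    """Bucket a CWV value as 'good', 'needs improvement' or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


print(rate("LCP_ms", 3100), rate("CLS", 0.05), rate("INP_ms", 650))
```

Tracking the bucket rather than the raw value can make week-over-week changes easier to explain in client reports.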

In a future update, the template will also include data from Google's URL Inspection API.

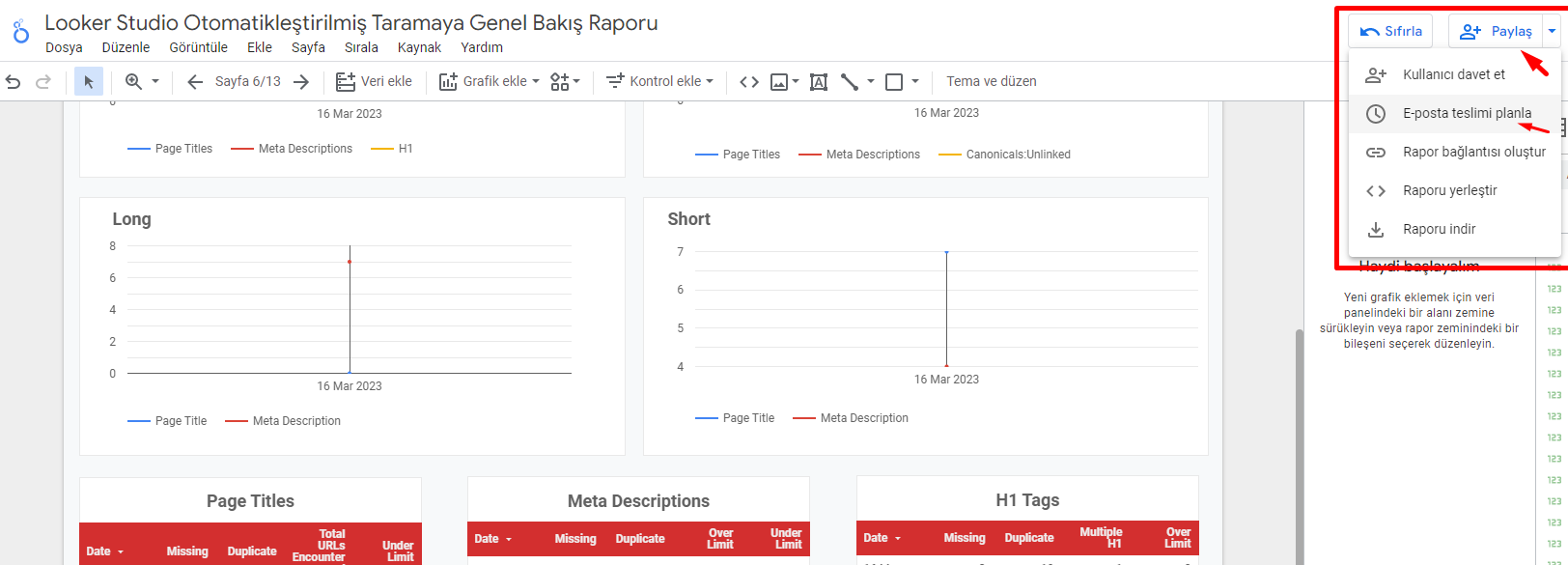

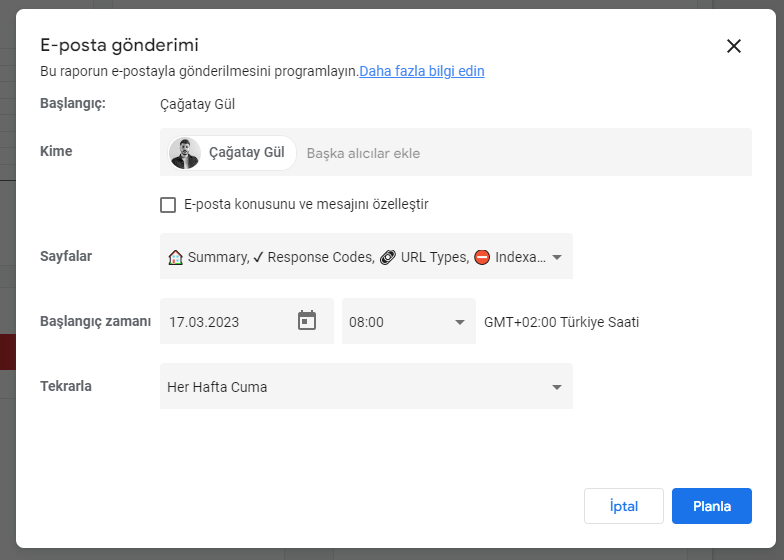

If you like, you can set up scheduled email delivery in Looker Studio so the report is sent daily, weekly or monthly to the addresses you choose.

This makes it easier to keep brand managers informed each time the report is updated.

To do this, apply the settings shown in the image.

That's all there is to it. I hope this approach gives you more flexibility in your reports, both visually and in how you communicate your work to brands.