10 Ways to Enhance Video Content with AI

In the digital age, video is one of the most engaging and effective ways to communicate. Content creators, marketers, and business owners rely on it to reach and mobilize their audiences.

In this article, I will explain how you can use artificial intelligence to create more engaging video content. From personalized recommendations to real-time translation, here are 10 ways AI can help you enrich your videos and mobilize your audience.

Personalized Recommendations

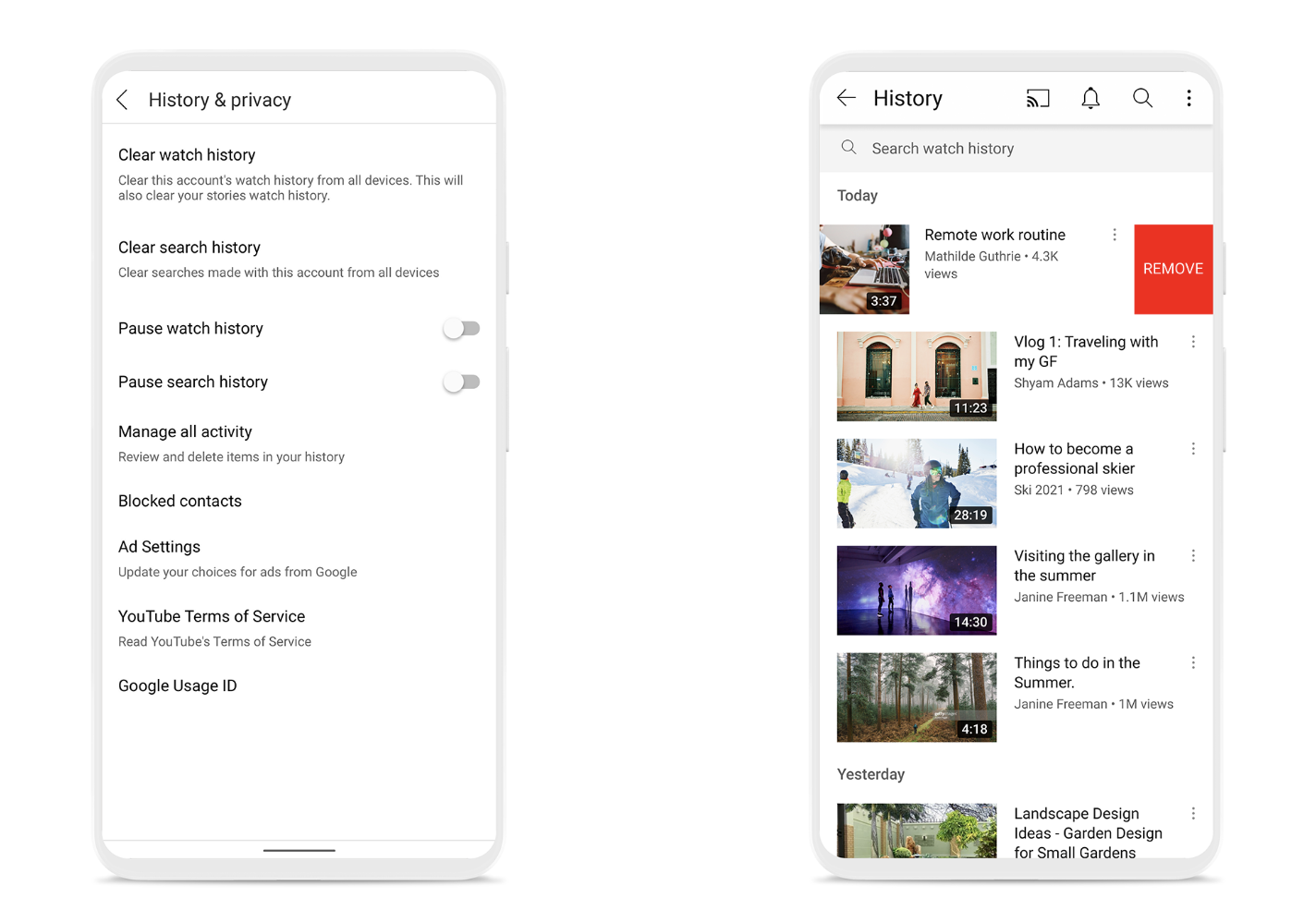

AI algorithms recommend videos to each viewer based on their viewing history and preferences. Personalized recommendations keep viewers interested, and you can use them to improve engagement, watch time, and the overall user experience.

YouTube's recommendation algorithm is a well-known example. It analyzes a user's viewing history and searches to suggest other videos they may be interested in, which keeps them on the platform watching more content. If you need help optimizing your videos for YouTube, check out our content on YouTube optimization!

Source: YouTube Official Blog

You can use YouTube's API for personalized recommendations. To build your own recommender, you can use Python libraries such as Surprise or LightFM.
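The sketch below makes the idea concrete with item-based collaborative filtering, a common technique behind "viewers who watched this also watched" recommendations. It uses plain Python rather than Surprise or LightFM. It treats watch histories as simple sets, which is an assumption: real systems also weigh watch time, ratings, and searches. The user names and video IDs are hypothetical.

```python
from collections import defaultdict
from math import sqrt

def recommend(histories, user, k=3):
    """Recommend up to k unseen videos for `user` using item-based
    collaborative filtering over (hypothetical) binary watch histories."""
    # Invert the histories: which users watched each video?
    viewers = defaultdict(set)
    for u, videos in histories.items():
        for v in videos:
            viewers[v].add(u)

    def similarity(a, b):
        # Cosine similarity between two videos' sets of viewers.
        overlap = len(viewers[a] & viewers[b])
        if overlap == 0:
            return 0.0
        return overlap / sqrt(len(viewers[a]) * len(viewers[b]))

    seen = histories.get(user, set())
    scores = {}
    for candidate in viewers:
        if candidate in seen:
            continue
        # Score = total similarity to everything the user already watched.
        scores[candidate] = sum(similarity(candidate, s) for s in seen)
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [video for video, score in ranked if score > 0][:k]

histories = {
    "ana": {"intro-to-python", "pandas-basics", "numpy-tricks"},
    "ben": {"intro-to-python", "pandas-basics"},
    "cara": {"pandas-basics", "plotting-101"},
    "dev": {"cooking-pasta"},
}
print(recommend(histories, "ben"))  # ['numpy-tricks', 'plotting-101']
```

"numpy-tricks" ranks first because it shares a viewer with both videos Ben watched. "cooking-pasta" is never suggested because nobody who watched Ben's videos also watched it.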

Automatic Video Editing

Video editing is time-consuming. Automatic video editing uses AI tools and algorithms to streamline post-production: they can trim, cut, and edit footage automatically, add effects, and improve video quality.

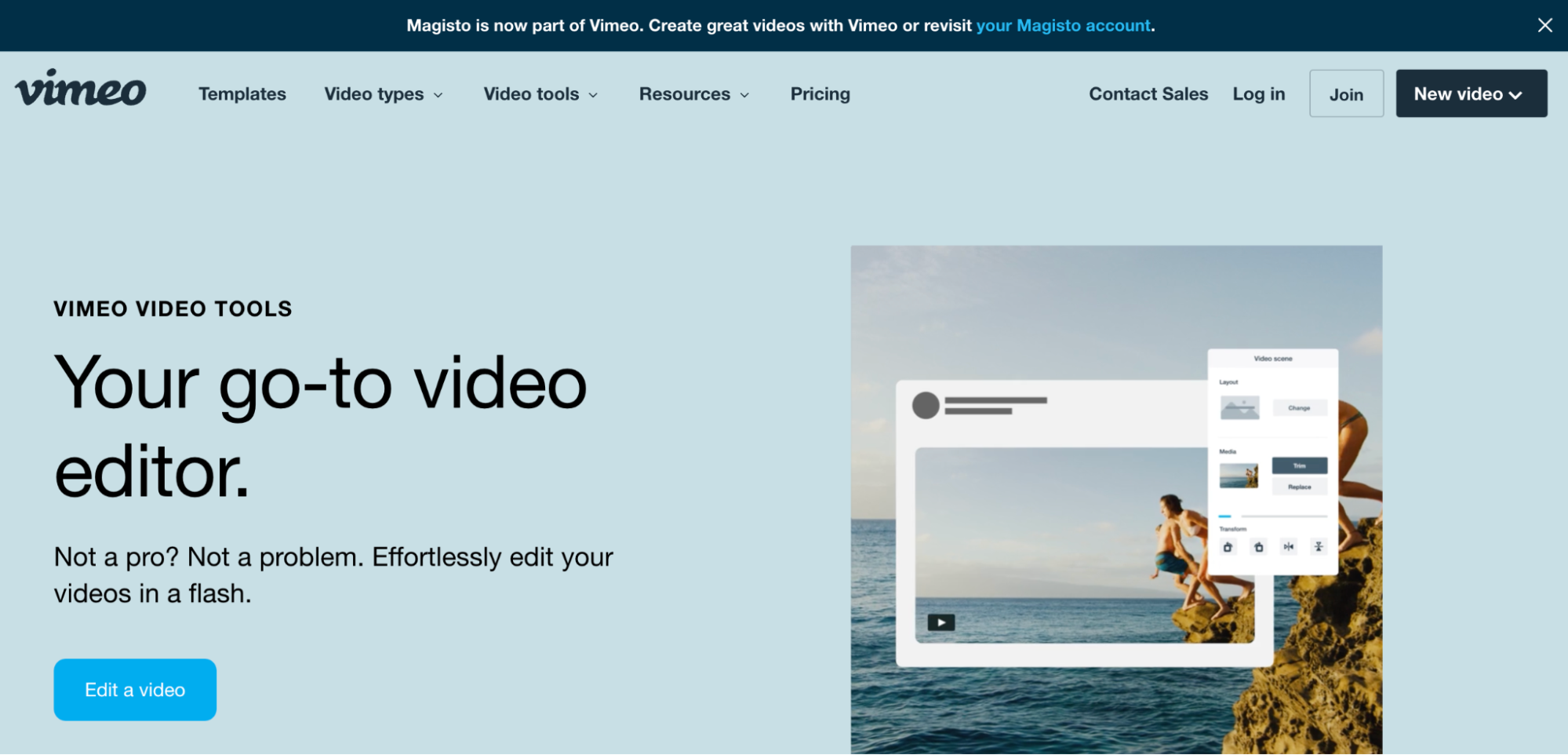

For example, Magisto, an AI-powered video editing tool owned by Vimeo, uses machine learning to edit videos. It can analyze long footage, select the best moments, add transitions between them, and choose music that fits the content. Adobe Premiere Pro is another example: its AI-powered Auto Reframe feature adjusts a video's composition for different aspect ratios.
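One way to picture the "select the best moments" step is as a ranking problem. This sketch assumes some model has already given every second of footage an interest score; the scores here are made up. It then greedily keeps the highest-scoring non-overlapping clips. It illustrates the idea and is not how Magisto actually works.

```python
def select_highlights(scores, clip_len, max_clips):
    """Greedily pick up to max_clips non-overlapping windows of clip_len
    seconds with the highest total score; return (start, end) pairs in
    timeline order, with end exclusive."""
    # Total score of every window starting at second i.
    windows = [
        (sum(scores[i:i + clip_len]), i)
        for i in range(len(scores) - clip_len + 1)
    ]
    # Best windows first; ties go to the earlier window.
    windows.sort(key=lambda w: (-w[0], w[1]))
    chosen = []
    for total, start in windows:
        if len(chosen) == max_clips:
            break
        # Keep the window only if it does not overlap one already chosen.
        if all(start + clip_len <= s or s + clip_len <= start for s in chosen):
            chosen.append(start)
    return [(s, s + clip_len) for s in sorted(chosen)]

# Hypothetical per-second interest scores for a 10-second clip.
scores = [0, 1, 5, 5, 1, 0, 0, 4, 4, 0]
print(select_highlights(scores, clip_len=2, max_clips=2))  # [(2, 4), (7, 9)]
```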

AI-supported editing tools save time and let you produce content quickly. Some can also apply layouts that match your brand identity, and they make editing easier for people with little video editing experience.

Dynamic Content Generation

Dynamic content generation with AI means creating video content based on factors such as audience interaction, real-time data, and personal information. This makes videos more engaging and personalized.

Lumen5 is an AI-powered platform that turns articles, blog posts, and other text into engaging videos. After you enter the text you want to convert, its AI identifies the key points, finds relevant visuals, assembles them into clips, and selects suitable music.

For dynamic content generation, you can build your own models with machine learning frameworks such as TensorFlow or PyTorch. These models can be trained to analyze user data and tailor video content accordingly.
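A full TensorFlow or PyTorch model is beyond the scope of this article. The result of dynamic generation, though, is often a video assembled from pre-made parts. This minimal sketch uses simple rules as a stand-in for a trained model. It picks an intro in the viewer's language, adds clips that match their interests, and personalizes an on-screen greeting. The clip file names and the `Viewer` fields are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Viewer:
    name: str
    language: str
    interests: list = field(default_factory=list)

# Hypothetical library of pre-rendered clips, keyed by tag.
SEGMENTS = {
    "intro:en": "intro_en.mp4",
    "intro:de": "intro_de.mp4",
    "topic:running": "running_shoes.mp4",
    "topic:hiking": "hiking_boots.mp4",
    "outro": "outro_cta.mp4",
}

def build_video_plan(viewer, max_topics=2):
    """Return the clip sequence and greeting overlay for one viewer."""
    # Use an intro in the viewer's language if one exists, else English.
    intro = SEGMENTS.get(f"intro:{viewer.language}", SEGMENTS["intro:en"])
    # Keep only interests we actually have footage for.
    topics = [SEGMENTS[f"topic:{i}"] for i in viewer.interests
              if f"topic:{i}" in SEGMENTS][:max_topics]
    return {
        "clips": [intro, *topics, SEGMENTS["outro"]],
        "overlay": f"Hi {viewer.name}!",
    }

plan = build_video_plan(Viewer("Lena", "de", ["hiking", "chess"]))
print(plan["clips"])  # ['intro_de.mp4', 'hiking_boots.mp4', 'outro_cta.mp4']
```

In a model-driven version, the rules inside `build_video_plan` would be replaced by predictions, for example which topic clip a viewer is most likely to watch to the end.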

Dynamic content generation gives viewers a personalized experience with more relevant and engaging content, and real-time interaction within videos keeps their interest high. Generating content with AI also saves time by reducing manual work.

Real-Time Language Translation

Real-time language translation in video content is the use of AI-powered tools to translate spoken words or subtitles from one language to another in a live video stream.

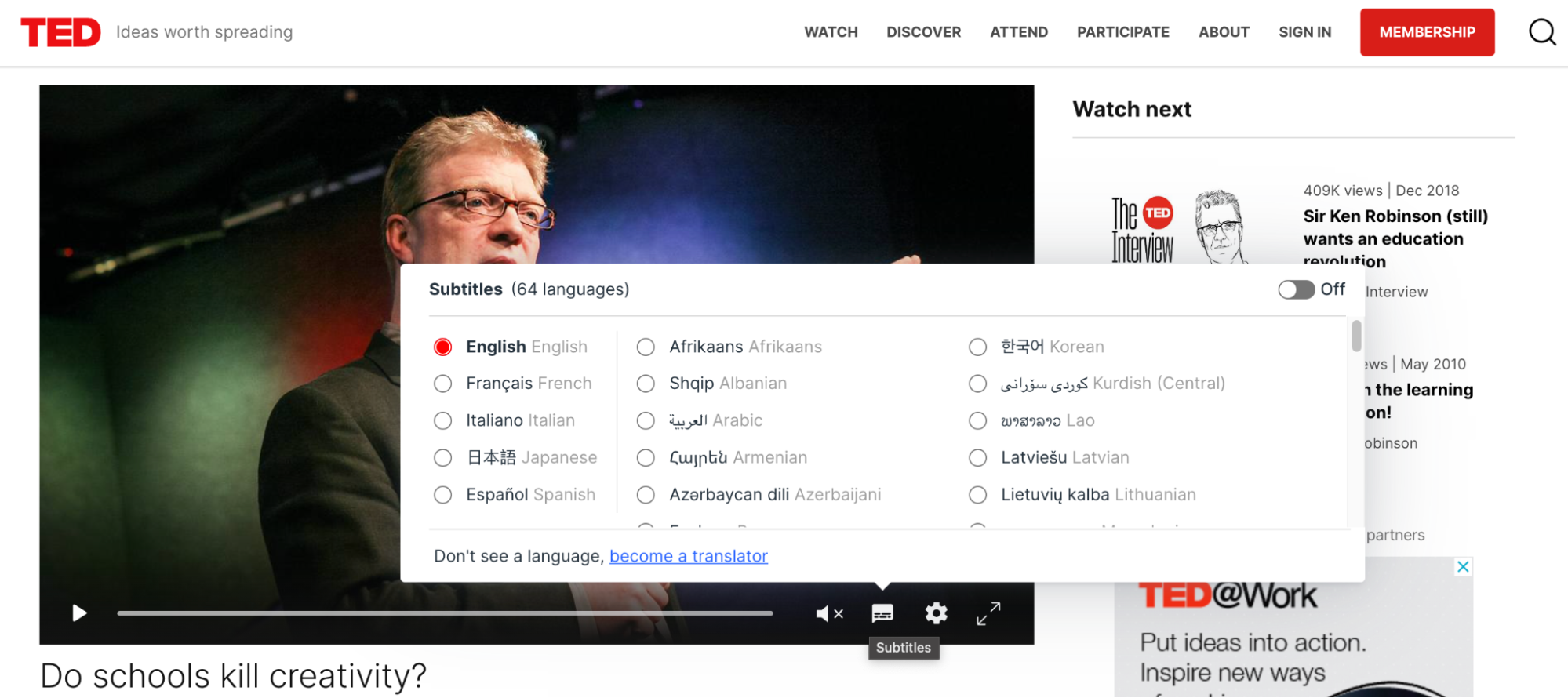

For example, TED, known for its educational and inspirational talks, brings its content to a global audience through subtitles in many languages. Viewers can open the settings of a TED Talk video, choose a subtitle language, and watch the talk in the language of their choice. Many of these subtitles have been produced by TED's community of volunteer translators. AI-powered translation can automate this kind of multilingual subtitling, including for live streams.

For real-time translation, you can combine a speech recognition service such as Google Cloud Speech-to-Text, which turns speech into text, with a translation service such as IBM Watson Language Translator.
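Live translation is usually built as a pipeline: audio is transcribed chunk by chunk, and each transcript is passed to a translation step. The sketch below shows that structure, with the two services passed in as plain functions. `fake_transcribe` and `fake_translate` are hypothetical stand-ins for real speech-to-text and translation API calls, not those services' actual client code.

```python
from typing import Callable, Iterable, Iterator

def live_subtitles(
    audio_chunks: Iterable[bytes],
    transcribe: Callable[[bytes], str],
    translate: Callable[[str, str], str],
    target_lang: str,
) -> Iterator[str]:
    """Yield a translated subtitle line for each audio chunk as it arrives."""
    for chunk in audio_chunks:
        text = transcribe(chunk)                # speech-to-text step
        if text.strip():                        # skip chunks with no speech
            yield translate(text, target_lang)  # machine-translation step

# Hypothetical stand-ins for real speech-to-text and translation API calls.
def fake_transcribe(chunk: bytes) -> str:
    return {b"chunk-1": "hello everyone", b"chunk-2": "", b"chunk-3": "thank you"}[chunk]

def fake_translate(text: str, lang: str) -> str:
    return {("hello everyone", "es"): "hola a todos", ("thank you", "es"): "gracias"}[(text, lang)]

stream = [b"chunk-1", b"chunk-2", b"chunk-3"]
for line in live_subtitles(stream, fake_transcribe, fake_translate, "es"):
    print(line)  # prints "hola a todos", then "gracias"
```

Writing the pipeline as a generator means each subtitle is emitted as soon as its chunk is processed, rather than after the whole stream ends, which is what keeps latency low in a live setting.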

By using AI for real-time translation, you can reach a global audience and increase engagement with your content.

Interactive Elements

Interactive elements such as clickable hotspots, annotations, and overlays let viewers interact directly with a video. This increases viewer interaction and engagement.

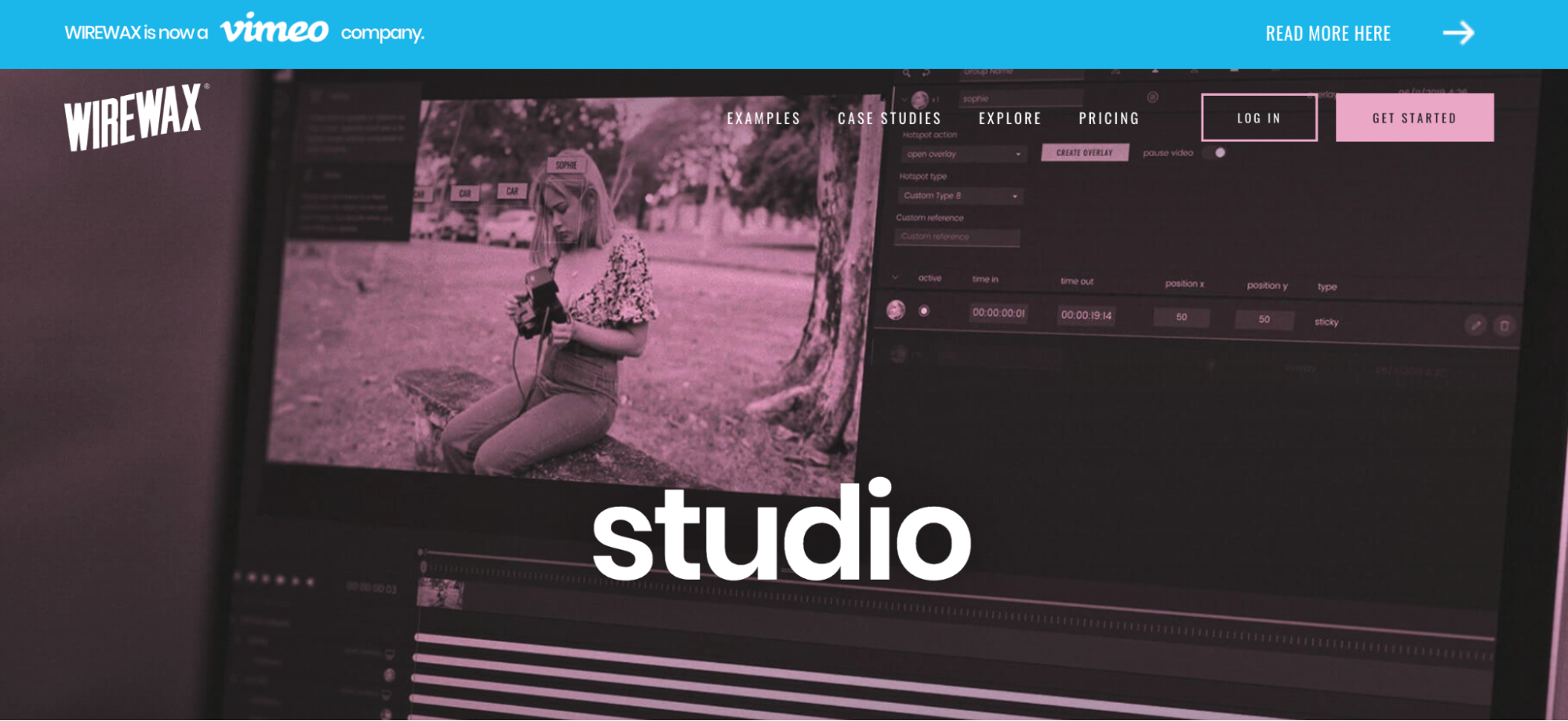

For example, Honda wanted to show that the Civic is both a great family car and a great getaway car. Its interactive video "The Other Side", produced with WIREWAX, lets viewers experience two different realities at the same time: pressing and holding the "R" key while the video plays switches to the alternate reality. The "R" was a reference to Honda's new Civic Type R, which had just been released. After the video was published, viewers spent about 3 minutes with it, and traffic to the Honda Civic website doubled.

Besides YouTube's built-in options such as cards and end screens, platforms such as WIREWAX (now part of Vimeo) can be used to add interactive elements to videos.

Interactive elements make viewers more interested in the content, and their interactions provide data on viewer feedback and preferences.
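Under the hood, a clickable overlay is essentially a region of the frame that is active for a time range. This minimal sketch checks whether a click lands on an active hotspot and logs each hit, which is the kind of interaction data that can later be analyzed. The `Hotspot` and `InteractiveVideo` fields and the example coordinates are assumptions for illustration, not WIREWAX's or YouTube's data model.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Hotspot:
    label: str
    start: float  # second when the hotspot appears
    end: float    # second when it disappears (exclusive)
    x: float      # left edge, as a fraction of frame width (0-1)
    y: float      # top edge, as a fraction of frame height (0-1)
    w: float      # width, as a fraction of frame width
    h: float      # height, as a fraction of frame height

@dataclass
class InteractiveVideo:
    hotspots: list
    clicks: list = field(default_factory=list)  # (time, label) log of hits

    def click(self, t: float, cx: float, cy: float) -> Optional[str]:
        """Return the label of the hotspot hit at time t, logging the hit."""
        for hs in self.hotspots:
            active = hs.start <= t < hs.end
            inside = hs.x <= cx <= hs.x + hs.w and hs.y <= cy <= hs.y + hs.h
            if active and inside:
                self.clicks.append((t, hs.label))
                return hs.label
        return None

video = InteractiveVideo([Hotspot("car-details", 10, 20, 0.6, 0.5, 0.3, 0.3)])
print(video.click(12.0, 0.7, 0.6))  # car-details
print(video.click(25.0, 0.7, 0.6))  # None (hotspot no longer visible)
```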

Automatic Transcription and Subtitling

Automatic transcription uses AI-powered tools to convert the speech and sounds in a video into written text, such as subtitles or captions. This makes video content more accessible and SEO-friendly.

Coursera, an online education platform, uses Automatic Speech Recognition (ASR) technology to add captions to its video lessons. This makes course content accessible to non-native English speakers and to deaf and hard-of-hearing learners.

You can use tools such as Rev.com, Sonix, or Google Speech-to-Text for automatic transcription. HiChatbot, an AI-powered chatbot, answers questions about YouTube videos and can also give you a summary, keywords, and more for any YouTube video.
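Whichever tool you use, a transcript usually ends up as timed text segments. This sketch converts `(start, end, text)` segments into SRT, a widely supported subtitle format. The segment list is a made-up example of what a speech-to-text service might return, not any specific service's output format.

```python
def to_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

def to_srt(segments):
    """Convert (start, end, text) transcript segments into SRT captions."""
    blocks = []
    for i, (start, end, text) in enumerate(segments, start=1):
        # Each SRT cue: index, time range, caption text, then a blank line.
        blocks.append(f"{i}\n{to_timestamp(start)} --> {to_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)

segments = [(0.0, 2.5, "Welcome to the course."), (2.5, 5.0, "Let's begin.")]
print(to_srt(segments))
```

Saving the output as a `.srt` file lets most video players and platforms display the captions. The same text can also be indexed for search.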

Automatic transcription makes videos accessible to more viewers, gives search engines text to index (which improves SEO), and enhances the overall viewing experience.

Emotion Analysis

Emotion analysis in video content (often called sentiment analysis) uses artificial intelligence to detect and interpret facial expressions and tone of voice in order to measure viewers' emotional reactions. It reveals which emotions a video evokes in viewers, so you can adapt the content accordingly.

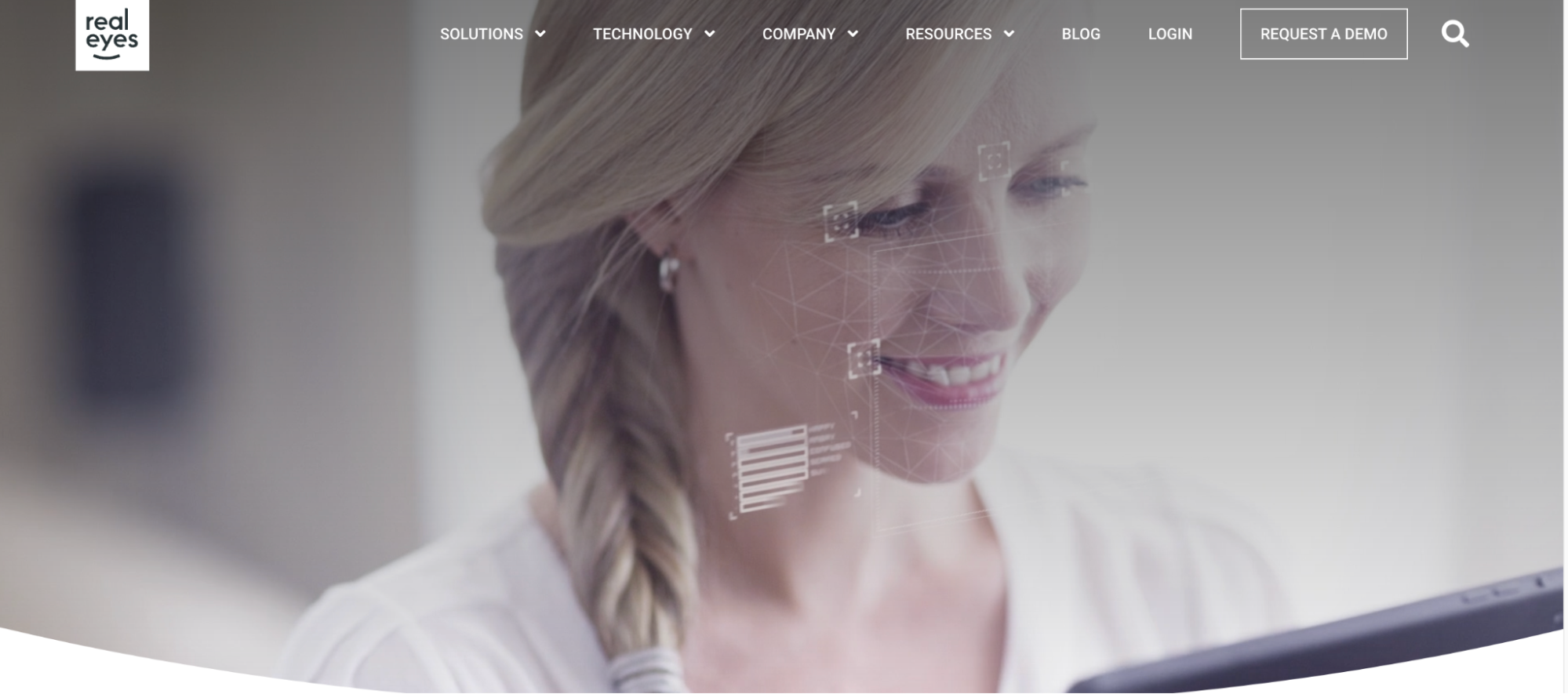

For example, Realeyes is a company specializing in emotion analysis for the advertising industry. It uses artificial intelligence and facial recognition technology to measure viewers' emotional reactions to ads. Realeyes collects video data, including recordings of viewers watching content, through webcams, mobile devices, or in-person studies. Its algorithm locates facial features such as the eyes, eyebrows, mouth, and nose in these recordings and uses them to identify facial expressions and emotions.

AI-powered tools such as Affectiva can detect emotions in videos so you can adapt your content accordingly. Some services often mentioned for this have since been retired, including Microsoft Azure's emotion recognition (formerly the Emotion API) and IBM Watson Tone Analyzer. Tone Analyzer also analyzed text rather than video.
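Emotion-analysis tools typically produce a score per emotion for each analyzed frame. This sketch assumes that output already exists and rolls it up into a per-second timeline showing the dominant emotion. You could use such a timeline to spot where a video engages or loses its audience. The per-frame dictionaries are hypothetical and do not follow the output format of Affectiva or Realeyes.

```python
from collections import defaultdict

def emotion_timeline(frame_scores, fps, window_s=1.0):
    """Summarize per-frame emotion scores into fixed time windows.

    frame_scores: one non-empty dict per frame, e.g. {"joy": 0.7, "surprise": 0.2},
    as a (hypothetical) facial-expression model might output.
    Returns (window_start_seconds, dominant_emotion, mean_score) tuples.
    """
    frames_per_window = max(1, round(fps * window_s))
    timeline = []
    for start in range(0, len(frame_scores), frames_per_window):
        window = frame_scores[start:start + frames_per_window]
        totals = defaultdict(float)
        for scores in window:
            for emotion, score in scores.items():
                totals[emotion] += score
        # The emotion with the highest summed score dominates this window.
        dominant = max(totals, key=totals.get)
        timeline.append((start / fps, dominant, totals[dominant] / len(window)))
    return timeline

frames = [
    {"joy": 0.8, "surprise": 0.2},
    {"joy": 0.6, "surprise": 0.4},
    {"joy": 0.1, "surprise": 0.9},
    {"joy": 0.3, "surprise": 0.7},
]
for t, emotion, score in emotion_timeline(frames, fps=2):
    print(f"{t:.0f}s: {emotion} ({score:.2f})")  # 0s: joy (0.70), then 1s: surprise (0.80)
```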

Automatic Thumbnail Generation

Automatic thumbnail generation uses artificial intelligence to analyze video content and create relevant thumbnails. A thumbnail serves as the video's cover image and is meant to entice viewers to click and watch.

Netflix uses AI to personalize the thumbnails (artwork) it shows for each title. The platform analyzes viewing history, genre preferences, and interactions. Then, for each movie, series, or TV show, it selects from several artwork variants the one most relevant to the user. The chosen image may highlight specific actors, scenes, or elements that match the user's interests.

Netflix describes this process in detail on its technology blog.
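Netflix has described artwork selection as a contextual bandit problem: keep showing the image that earns the most plays, while still occasionally trying the alternatives. The sketch below is a deliberately simplified, non-contextual epsilon-greedy version of that idea, not Netflix's system. The variant names and the click signal are hypothetical.

```python
import random

class ArtworkSelector:
    """Epsilon-greedy bandit that learns which thumbnail variant gets clicked."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.variants = list(variants)
        self.epsilon = epsilon          # share of impressions used to explore
        self.rng = random.Random(seed)
        self.shows = {v: 0 for v in self.variants}
        self.clicks = {v: 0 for v in self.variants}

    def click_rate(self, variant):
        shows = self.shows[variant]
        return self.clicks[variant] / shows if shows else 0.0

    def choose(self):
        # Explore a random variant with probability epsilon ...
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)
        # ... otherwise exploit the best click rate seen so far.
        return max(self.variants, key=self.click_rate)

    def record(self, variant, clicked):
        self.shows[variant] += 1
        self.clicks[variant] += int(clicked)

selector = ArtworkSelector(["action-shot", "romance-shot", "ensemble-shot"], seed=42)
thumbnail = selector.choose()
selector.record(thumbnail, clicked=True)
```

To personalize, you could keep one selector per audience segment (for example, per favorite genre). A contextual bandit goes further and feeds user features directly into the choice.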

AI-powered design tools such as Canva, and Adobe products built on Adobe Sensei, offer automatic thumbnail generation. These tools analyze the video and suggest or create thumbnails that are engaging and relevant to its content.
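A simple way to see what "analyzing video content" for thumbnails can involve is to score candidate frames. This sketch uses a classic non-AI heuristic: it rejects frames that are too dark and prefers high-contrast ones. It does not reproduce how Adobe or Canva pick thumbnails. Frames are shown as tiny grayscale pixel grids for readability; a real pipeline would decode frames with a video library first, and the brightness threshold is an assumed value.

```python
from statistics import mean, pstdev

def score_frame(frame, min_brightness=40):
    """Heuristic thumbnail score for a grayscale frame (rows of 0-255 values):
    contrast measured as pixel standard deviation, or 0 if the frame is too dark."""
    pixels = [p for row in frame for p in row]
    if mean(pixels) < min_brightness:
        return 0.0
    return pstdev(pixels)

def pick_thumbnail(frames):
    """Return the index of the best-scoring candidate frame."""
    return max(range(len(frames)), key=lambda i: score_frame(frames[i]))

dark = [[5, 10], [10, 5]]             # too dark to use
flat = [[120, 120], [120, 120]]       # bright but featureless
contrasty = [[30, 220], [220, 30]]    # strong contrast
print(pick_thumbnail([dark, flat, contrasty]))  # 2
```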

Personalized, automatically generated thumbnails make content more engaging, improve the user experience, and help users discover content that matches their preferences.

Video Performance Analysis

AI tools for video performance analysis assess how well video content performs on audience engagement, retention, and other metrics.

Twitch, a live-streaming platform focused on gaming, gives broadcasters an analytics tool that uses machine learning to monitor the performance of their live streams and videos. Its analytics dashboard includes metrics such as concurrent viewers, chat activity, follower growth, and viewer demographics. It also shows each stream's peak viewership, average duration, and engagement levels.

Twitch's help documentation covers its analytics in more detail.
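Metrics like peak concurrent viewers and average watch time are straightforward to compute once you have viewer sessions. This sketch assumes each session is a hypothetical `(join_minute, leave_minute)` pair. It uses a sweep over join and leave events to find the peak, which is one standard way to count overlapping intervals, not necessarily how Twitch computes it.

```python
def stream_stats(sessions):
    """Peak concurrent viewers and average watch time (minutes) from
    (join_minute, leave_minute) viewer sessions of one stream."""
    if not sessions:
        return {"peak_concurrent": 0, "avg_watch_minutes": 0.0}
    events = []
    for join, leave in sessions:
        events.append((join, 1))    # a viewer arrives
        events.append((leave, -1))  # a viewer leaves
    # At equal times, leaves (-1) sort before joins (+1), so back-to-back
    # sessions are not counted as overlapping.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    avg_watch = sum(leave - join for join, leave in sessions) / len(sessions)
    return {"peak_concurrent": peak, "avg_watch_minutes": avg_watch}

sessions = [(0, 30), (10, 40), (20, 25), (30, 60)]
print(stream_stats(sessions))  # {'peak_concurrent': 3, 'avg_watch_minutes': 23.75}
```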

You can use AI-powered tools such as vidIQ and Tubular Labs to measure the performance of your videos. The insights they provide help you optimize your content and personalize and improve the viewer experience. You can also boost your content's performance by following the steps in our "Video Marketing at 9 Steps" content!

Improved Video Quality and Visual Effects

AI can improve the quality of video content through color correction, resolution enhancement, noise reduction, and visual effects.

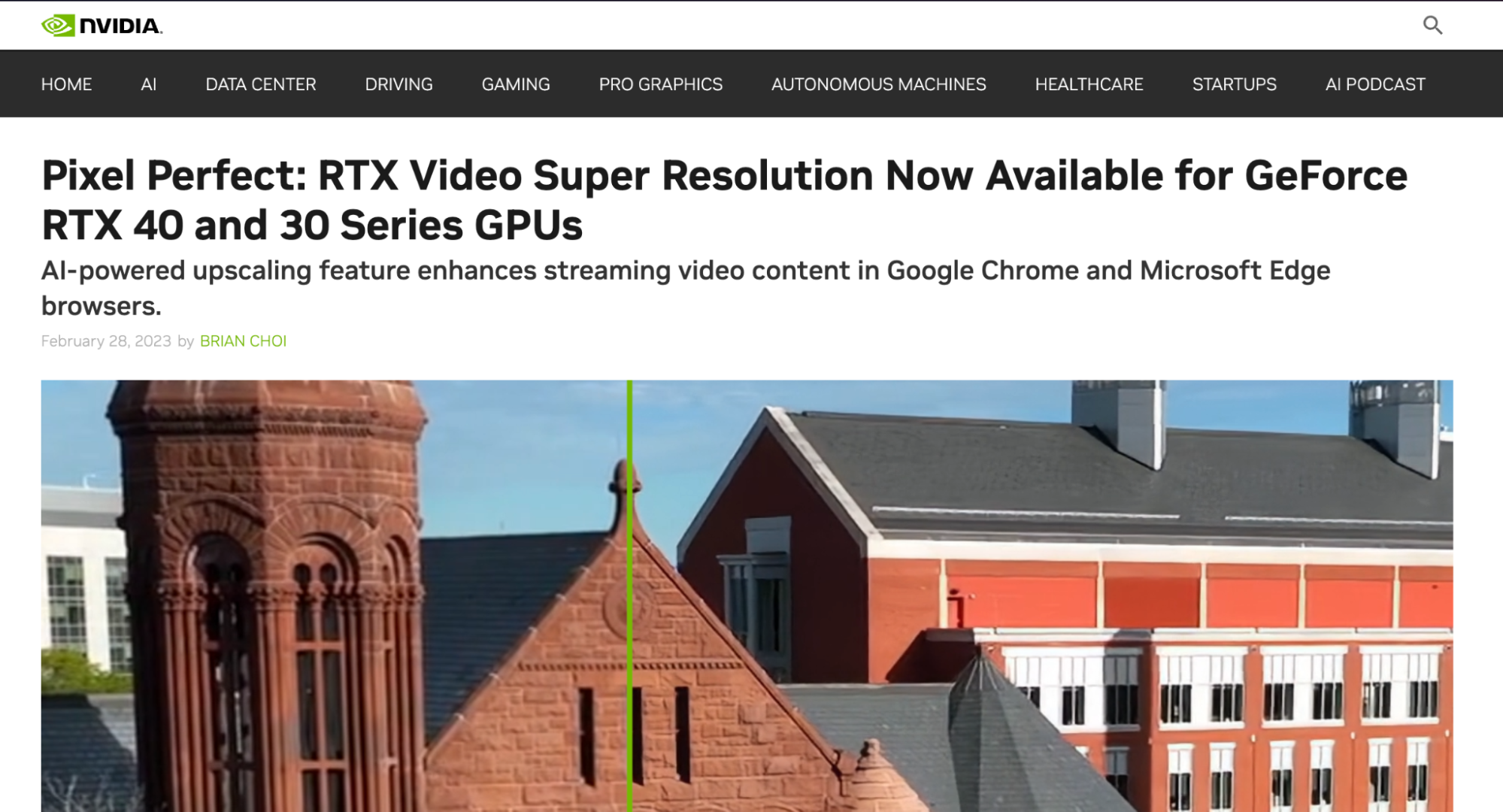

NVIDIA has developed AI-powered video upscaling technology that uses deep learning to upscale low-resolution video, for example from 720p to 4K. The AI analyzes the video and makes the necessary adjustments to sharpness and clarity.
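A quick calculation shows why upscaling from 720p to 4K is a hard job for any algorithm. Assuming 720p means 1280×720 and 4K means consumer UHD at 3840×2160 (cinema 4K is slightly wider), the output has nine times as many pixels as the source:

$$
\frac{3840 \times 2160}{1280 \times 720} = \frac{8\,294\,400}{921\,600} = 9
$$

So roughly 8 of every 9 output pixels do not exist in the original frame and have to be inferred. Traditional interpolation fills them by blending neighboring pixels, while a deep learning model predicts plausible detail learned from training data.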

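To improve video content and add visual effects, you can use Adobe After Effects, which includes AI-assisted features such as Content-Aware Fill and Roto Brush. AI filters such as DeepDream produce stylized, dreamlike visuals rather than improving image quality.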

Using AI to enhance video quality and add visual effects improves visual appeal, cuts costs by reducing manual editing, and frees you to produce more creative work.

Final Words

As technology develops rapidly, artificial intelligence is increasingly used in video production, benefiting both viewers and creators. Many brands already use AI in their video content, and this use is likely to grow even further. In this article, I shared several ways you can improve your video content with the help of artificial intelligence; I hope you found it helpful! Follow our blog to learn more about where and how artificial intelligence is used in every field!

This content was created by Nursena Küçüksoy, Marketing Specialist at Zeo.