JavaScript SEO: What Is Dynamic Rendering and What Are the Testing Stages?

This guide covers the use of JavaScript in SEO, with a focus on Dynamic Rendering. It explains what to pay attention to and which tests to run so that the transition to JavaScript rendering goes smoothly.

As a brief update on JavaScript in SEO: Google stated that in previous years Googlebot crawled sites with a renderer based on Chrome 41. As web technologies developed, that version had serious problems understanding and crawling modern sites. Googlebot has since been updated to "evergreen rendering", which follows the latest Chromium version (announced in 2019).

With this change, Google's site-testing tools also started to provide more accurate analysis.

What is Dynamic Rendering?

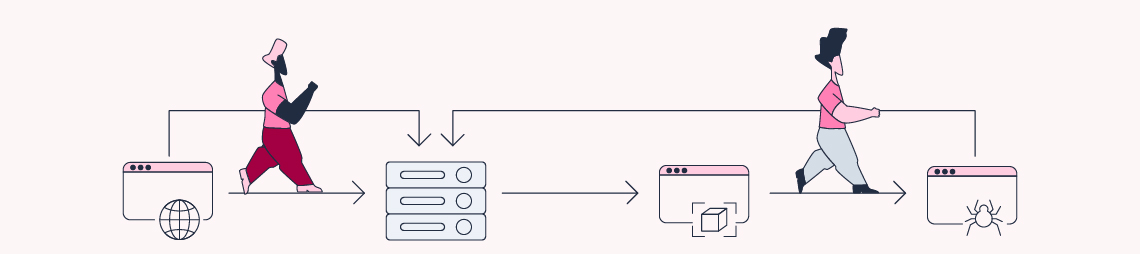

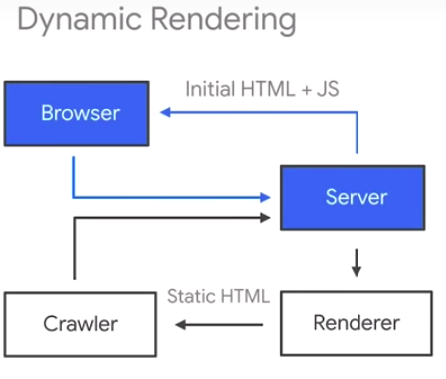

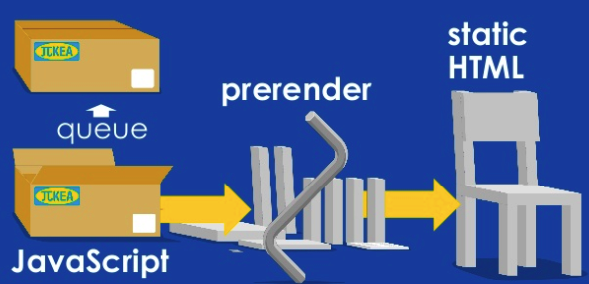

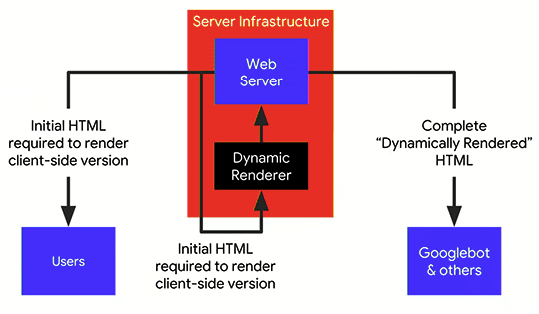

Dynamic Rendering is an intermediate solution for JavaScript-based, client-side rendered (CSR) websites: bots visiting the site are served a server-side rendered (SSR) version, which makes crawling easier, while users continue to receive the client-side version.

Note: Dynamic rendering does not improve the user experience (UX); it is only an intermediate workaround for SEO. Google's John Mueller stated in hangouts that he "predicts that Google won't need dynamic rendering in a few years".

In addition to Google, Bing also recommends using Dynamic Rendering.

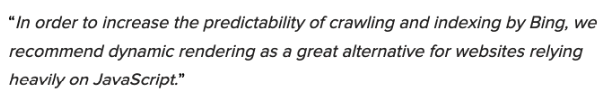

The accompanying image, based on the study conducted by Bartosz Góralewicz, shows which JavaScript frameworks each search engine supports.

Some commonly used JS frameworks and libraries:

- AngularJS

- React

- Vue.js

- jQuery

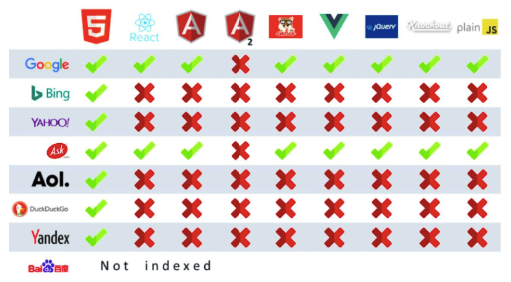

According to wappalyzer.com data, React appears to be the most widely used JS framework.

JavaScript is more difficult for Google to render than plain HTML, and rendering can take a long time. Dynamic Rendering is proposed as a workaround for this delayed rendering problem.


Dynamic Renderers

There are 3 Google-recommended renderers for converting content to static HTML:

- Prerender.io

- Rendertron

- Puppeteer

During the testing phase, Rendertron lets you see step by step how content created with JS looks to Google. For this, you can copy the example found at this address. Prerender.io, which is used by many sites, is another option.

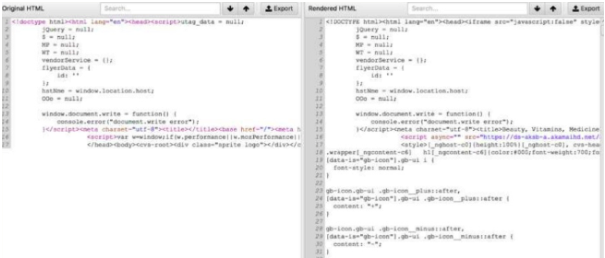

A dynamic renderer executes the JS code in the original HTML file and serves the resulting rendered HTML to bots, as shown below.

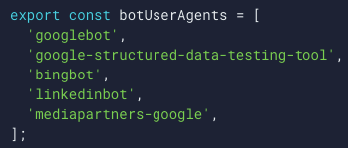

User Agents

You need to specify which user agents should receive the static HTML. Rendertron provides a sample user-agent list that you can use as a starting point.
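The decision itself is simple to sketch. The Python example below is a minimal illustration, not Rendertron's implementation: the token list `BOT_UA_TOKENS`, the extension list, and the function names are all assumptions chosen for this example, and a production list should come from your renderer's documentation.

```python
# Illustrative bot tokens only; this is NOT Rendertron's actual list.
BOT_UA_TOKENS = ("googlebot", "bingbot", "yandex", "baiduspider", "duckduckbot")

# Static assets never need pre-rendering (hypothetical list for this sketch).
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".svg", ".ico", ".woff2")


def is_bot(user_agent: str) -> bool:
    """Return True if the User-Agent header contains a known bot token."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_UA_TOKENS)


def choose_response(user_agent: str, path: str) -> str:
    """Decide which variant to serve: 'prerendered' HTML or the 'client' app."""
    if path.lower().endswith(STATIC_EXTENSIONS):
        return "client"
    return "prerendered" if is_bot(user_agent) else "client"
```

A substring match on the User-Agent header is easy to spoof, so it only decides which variant to serve; it should not be treated as proof that a request really comes from a search engine.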

Pre-Rendering / Verify Googlebot

In some cases, pre-rendering can overwhelm the server and consume resources. Consider using a caching layer to prevent this. You can also check whether requests coming to the site really belong to Googlebot and reject the rest. This measure blocks spam and malicious requests that pose as Googlebot, and it saves server resources. To verify a request, run a reverse DNS lookup on the requesting IP address and then a forward lookup on the returned host name:

> host 66.249.66.1
1.66.249.66.in-addr.arpa domain name pointer crawl-66-249-66-1.googlebot.com.

> host crawl-66-249-66-1.googlebot.com
crawl-66-249-66-1.googlebot.com has address 66.249.66.1
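The same reverse-then-forward lookup can be automated. The sketch below is one possible way to do it with Python's standard `socket` module. The function name and the injectable lookup parameters are assumptions made for this example (they make the check testable without network access), and the accepted domain suffixes should be confirmed against Google's current documentation.

```python
import socket
from typing import Callable

# Suffixes accepted as Google crawler host names (confirm against Google's docs).
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def verify_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = lambda addr: socket.gethostbyaddr(addr)[0],
    forward_lookup: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Reverse-resolve the IP, check the domain, then forward-resolve it back."""
    try:
        hostname = reverse_lookup(ip).rstrip(".")
        if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
            return False
        # The forward lookup must return the original IP (IPv4 only here).
        return forward_lookup(hostname) == ip
    except OSError:
        # Covers socket.herror and socket.gaierror: lookup failed.
        return False
```

Because DNS lookups are slow, the result for each IP address is usually cached rather than recomputed on every request.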

If there is content on the page that will take a very long time to load, you should ensure that Googlebot does not time out.

User Agent: Mobile or Desktop?

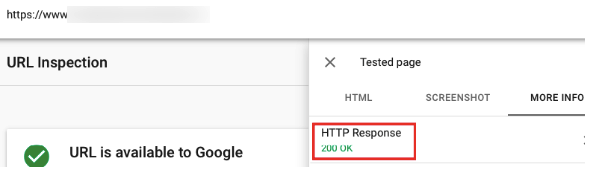

For the user agents mentioned above, you can specify whether the mobile or the desktop version of the content should be served. You can use Dynamic Serving to deliver the appropriate version. Finally, test that the resulting URL returns a 200 status code. Sometimes Googlebot receives a 503 while users can view the page without any problem. Such situations should be detected and handled during the testing phase, with preliminary checks to avoid indexing problems.
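A status-code comparison like this can be scripted. The following sketch requests the same URL with several User-Agent headers and reports any variant that does not return 200. The User-Agent strings are shortened examples and the function names are assumptions for this illustration. If the server verifies Googlebot by IP address, a spoofed header sent from your own machine will not reproduce what the real crawler sees.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, List

# Example User-Agent strings (assumptions; check current official values).
USER_AGENTS = {
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0.0.0",
    "googlebot_desktop": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "googlebot_mobile": "Mozilla/5.0 (Linux; Android 6.0.1) Mobile (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def fetch_status(url: str, user_agent: str) -> int:
    """Return the HTTP status code for one request with the given User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses are raised as exceptions; keep the code.
        return error.code


def compare_statuses(url: str, fetch: Callable[[str, str], int] = fetch_status) -> Dict[str, int]:
    """Fetch the URL once per user agent and collect the status codes."""
    return {name: fetch(url, ua) for name, ua in USER_AGENTS.items()}


def failing_variants(statuses: Dict[str, int]) -> List[str]:
    """Names of the user agents that did not receive a 200 response."""
    return sorted(name for name, code in statuses.items() if code != 200)
```

Calling `failing_variants(compare_statuses("https://example.com/"))` would list the variants to investigate; the URL is a placeholder.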

Why Is Dynamic Rendering Not a Long-Term SEO Solution?

- We need to remember that Dynamic Rendering is a workaround. The problems created by Dynamic Rendering are more difficult to detect and analyze. Dynamic rendering can significantly slow down your server. A large number of pre-render requests can cause Googlebot to receive server errors when crawling the site.

- With Dynamic Rendering, you create two versions of your site. This means you need to verify separately that the site is well optimized for users and for bots. Managing such sites requires strong SEO and developer teams.
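The server load described in the first point is commonly reduced by caching rendered HTML, so that repeated bot requests for the same URL do not trigger a new render. The sketch below is a minimal in-memory cache with a time-to-live. The class name, the `render` callback, and the one-hour default are assumptions for illustration; a real deployment would more likely rely on the cache built into the chosen renderer or on a shared store.

```python
import time
from typing import Callable, Dict, Tuple


class RenderCache:
    """Keep rendered HTML per URL and re-render only after the TTL expires."""

    def __init__(
        self,
        render: Callable[[str], str],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still fresh: skip the expensive render
        html = self._render(url)
        self._entries[url] = (now, html)
        return html
```

A shorter TTL keeps bots closer to the live content, while a longer one reduces render load; the right value depends on how often the pages change.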

Dynamic Rendering Test Procedures

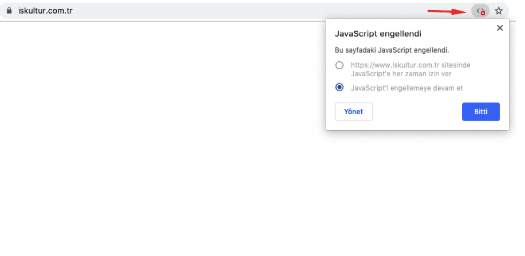

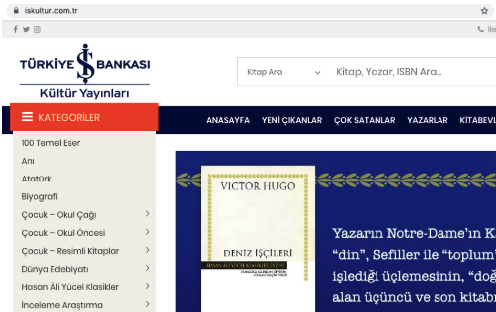

- If you don't plan to use Dynamic Rendering on the site, make sure to test how your page looks with JS turned off. If the page cannot render at all, identify the resources that prevent it from rendering and make sure the content can be read even with JS turned off.

The same site with JS turned on:

Whether JS is turned on or off in your browser, do not assume that Googlebot sees the site the way you see it.

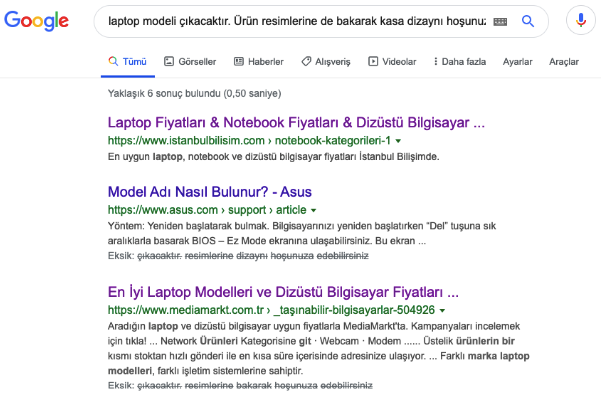

"Show More" Statuses

It is also important not to hide part of the content behind a button like "show more". Even if Google sees this content in the source code, it may not index it. When we search Google for a random piece of text that appears on the example site only after clicking "show more", that content does not show up at all:

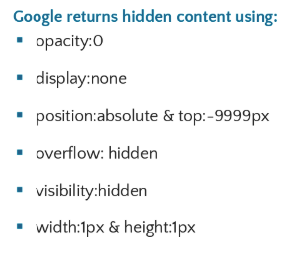

Bastian Grimm, one of the Digitalzone 2019 speakers, explained this situation with various examples. He also covered important CSS cases: content hidden with rules such as "hidden" was not in the index even though it appeared in the HTML.

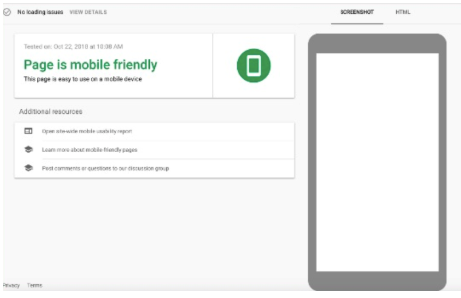

*The Mobile-Friendly Test tool could previously be used to test whether the content appears on mobile; however, this tool has since been removed.

In the example, the checks in the Mobile-Friendly Test tool showed that the page was completely blank (i.e. the content could not be read). Such problems can also disrupt the crawling and indexing process.

Google Chrome

You can test for JS SEO issues using the latest version of Google Chrome.

Google Search Console & JavaScript

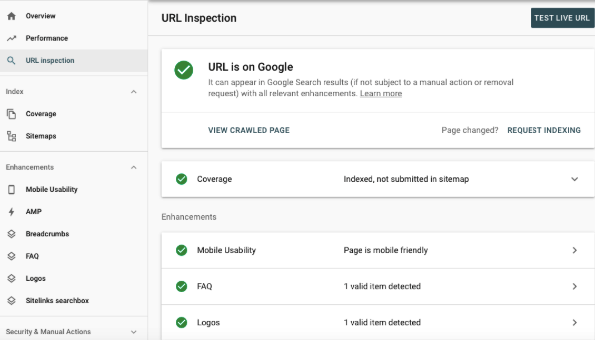

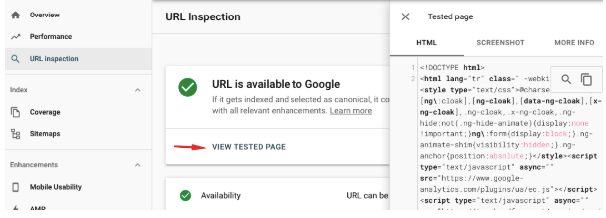

- Use the URL Inspection tool in Google Search Console to check how the page is rendered and whether Googlebot can read the content generated by JS.

Search for the content in the relevant section. If it does not appear, the JS rendering process did not complete successfully.
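If you save the rendered HTML from such a tool, the search can be repeated for many snippets at once. The sketch below uses Python's standard `html.parser` to extract the text outside `<script>`, `<style>`, and `<noscript>` and reports which expected snippets are missing. The class and function names are assumptions for this example, and it does not evaluate CSS, so text hidden by styling still counts as present.

```python
from html.parser import HTMLParser
from typing import Iterable, List


class VisibleTextExtractor(HTMLParser):
    """Collect text nodes, skipping script, style, and noscript content."""

    SKIPPED = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the page text with whitespace collapsed to single spaces."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())


def missing_snippets(rendered_html: str, expected: Iterable[str]) -> List[str]:
    """Return the expected snippets that do not occur in the page text."""
    text = visible_text(rendered_html).lower()
    return [s for s in expected if " ".join(s.lower().split()) not in text]
```

Running the same check against the raw (unrendered) HTML shows which content exists only after JS execution.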

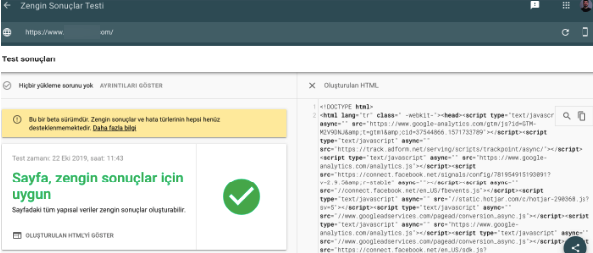

- If you use structured data such as breadcrumbs or articles on your pages, use the Rich Results Test tool to check that it is also generated.

You can also test whether there is a difference between desktop & smartphone by selecting the user agent.

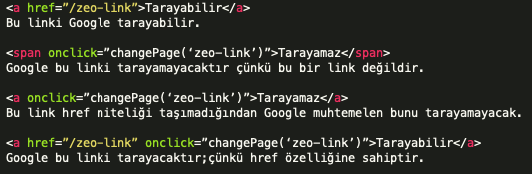

If the content is generated in all of these checks and there is no difference in content between mobile and desktop, the site is ready for Mobile First Indexing (MFI). Differences in content may prolong the transition to MFI, and the site may not pass. At the source code level, elements such as hreflang, canonical, and title must be present. Links should be given with "a href" so that Googlebot can follow them.
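These source-level checks can be run on saved HTML, for both the raw and the rendered version. The sketch below counts `<title>` tags, canonical links, hreflang alternates, and `<a>` tags with and without `href`, using only Python's standard library. The class name, function name, and the exact rules (exactly one title and one canonical) are assumptions for this example; a count above one also reveals duplicated elements.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class SeoElementAudit(HTMLParser):
    """Count title, canonical, hreflang, and anchor elements in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.titles = 0
        self.canonicals: List[Optional[str]] = []
        self.hreflangs: List[str] = []
        self.links: List[str] = []
        self.anchors_without_href = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.titles += 1
        elif tag == "link":
            rel = (attributes.get("rel") or "").lower().split()
            if "canonical" in rel:
                self.canonicals.append(attributes.get("href"))
            if "alternate" in rel and attributes.get("hreflang"):
                self.hreflangs.append(attributes["hreflang"])
        elif tag == "a":
            if attributes.get("href"):
                self.links.append(attributes["href"])
            else:
                self.anchors_without_href += 1


def audit(html: str) -> Dict[str, object]:
    """Parse the HTML and list deviations from the assumed rules."""
    parser = SeoElementAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if parser.titles != 1:
        issues.append(f"expected 1 <title>, found {parser.titles}")
    if len(parser.canonicals) != 1:
        issues.append(f"expected 1 canonical link, found {len(parser.canonicals)}")
    if parser.anchors_without_href:
        issues.append(f"{parser.anchors_without_href} <a> tag(s) without href")
    return {"issues": issues, "hreflangs": parser.hreflangs, "links": parser.links}
```

Hreflang is only reported, not enforced, because single-language sites do not need it.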

- In addition, meta elements created with JavaScript should not duplicate those already present in the HTML. In the image below, you can see the change between the raw HTML and the HTML after rendering.

To completely solve the problem of content not being visible in JS, dynamic rendering should be applied to ensure that Googlebot can see the entire content.

Cloaking & Dynamic Rendering

Before asking whether Google could treat Dynamic Rendering as cloaking, let's look at what cloaking is according to Google: presenting different content or URLs to human users and to search engines.

Dynamic Rendering does not fall under the scope of cloaking as long as you present the same or similar content to users and bots. If different content is presented to the bot during the rendering process, this can be seen as a violation. For example, presenting content about a "lentil soup recipe" to the visitor while presenting content about an "ezogelin soup recipe" to Googlebot is a violation. In other cases, Google will not treat the rendering process as a violation. You should keep the difference between the content presented to the user and to Googlebot as small as possible.
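One way to keep an eye on that difference is to compare the text served to users with the text served to bots. The sketch below does this with `difflib.SequenceMatcher` from Python's standard library, working on already extracted page text. The function names and the 0.9 threshold are assumptions for illustration only; Google does not publish a similarity threshold, so the score is useful as a regression signal rather than as a compliance test.

```python
from difflib import SequenceMatcher


def content_similarity(user_text: str, bot_text: str) -> float:
    """Word-level similarity between two page texts, from 0.0 to 1.0."""
    user_words = user_text.lower().split()
    bot_words = bot_text.lower().split()
    # autojunk=False keeps frequent words from being ignored on long pages.
    return SequenceMatcher(None, user_words, bot_words, autojunk=False).ratio()


def looks_consistent(user_text: str, bot_text: str, threshold: float = 0.9) -> bool:
    """True if both versions are similar enough (threshold is hypothetical)."""
    return content_similarity(user_text, bot_text) >= threshold
```

A sudden drop in the score for a template after a release is a hint that the pre-rendered version has drifted from what users see.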

Robots.txt & JS

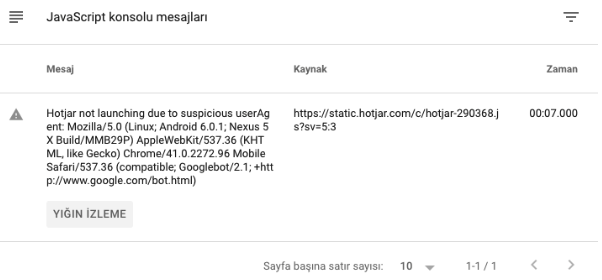

If files with .js extension are blocked with Robots.txt, Googlebot cannot run and read JS. Therefore, CSS or Javascript files should not be blocked with Robots.txt. You can use the Mobile-Friendly Test Tool to test how a URL appears in Google tools and whether it is blocked. After a sample crawl, it is important to look at the JS console messages section and fix any existing JS errors if they are not 3rd party related.
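A quick local check for blocked resources is possible with Python's standard `urllib.robotparser`. The sketch below takes the robots.txt content and a list of JS or CSS URLs and returns those a given user agent may not fetch. The function name and the example URLs are assumptions. The standard-library parser does not implement Google's wildcard matching (`*` and `$` in paths), so results for such rules can differ from Googlebot's behaviour.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_resources(
    robots_txt: str,
    resource_urls: Iterable[str],
    user_agent: str = "Googlebot",
) -> List[str]:
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]
```

For example, with the rules `User-agent: *` and `Disallow: /assets/js/`, a script under `/assets/js/` is reported as blocked while a stylesheet under `/assets/css/` is not.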

As for 3rd party warnings, additional scripts such as Google Analytics, Hotjar, or Facebook Pixel can cause them (for example, because of Robots.txt blocking). If JS cannot be rendered, this will also be visible in the console messages section.

In this article, we tried to explain what needs to be done during the transition to Dynamic Rendering. We hope it has been a useful article about JavaScript SEO.

If you have different experiences or questions about the transition process, you can share them in the comments.