Step-by-Step DOM Optimization in Technical SEO

"DOM SEO optimization" is a task that anyone who has worked on site speed will have heard of, and when implemented correctly it can pay off well. In this article I want to show how warnings such as "Reduce the DOM size" can actually be resolved. As I explained in my article on using limited resources in SEO efforts, setting priorities is very valuable in these tasks too. Let's start with a brief definition:

What is the DOM?

The DOM (Document Object Model) is essentially a tree-like model of a webpage's HTML structure as interpreted by the browser. Google and other search engines rely on this structure when crawling your site and understanding its content.

An overly large or deeply nested DOM can make it harder for Googlebot to render and crawl the site and can noticeably slow down your page load speed. When these issues combine with other problems, your rankings can suffer, and you may experience a loss of organic traffic.

1. How to Reduce DOM Size and Complexity?

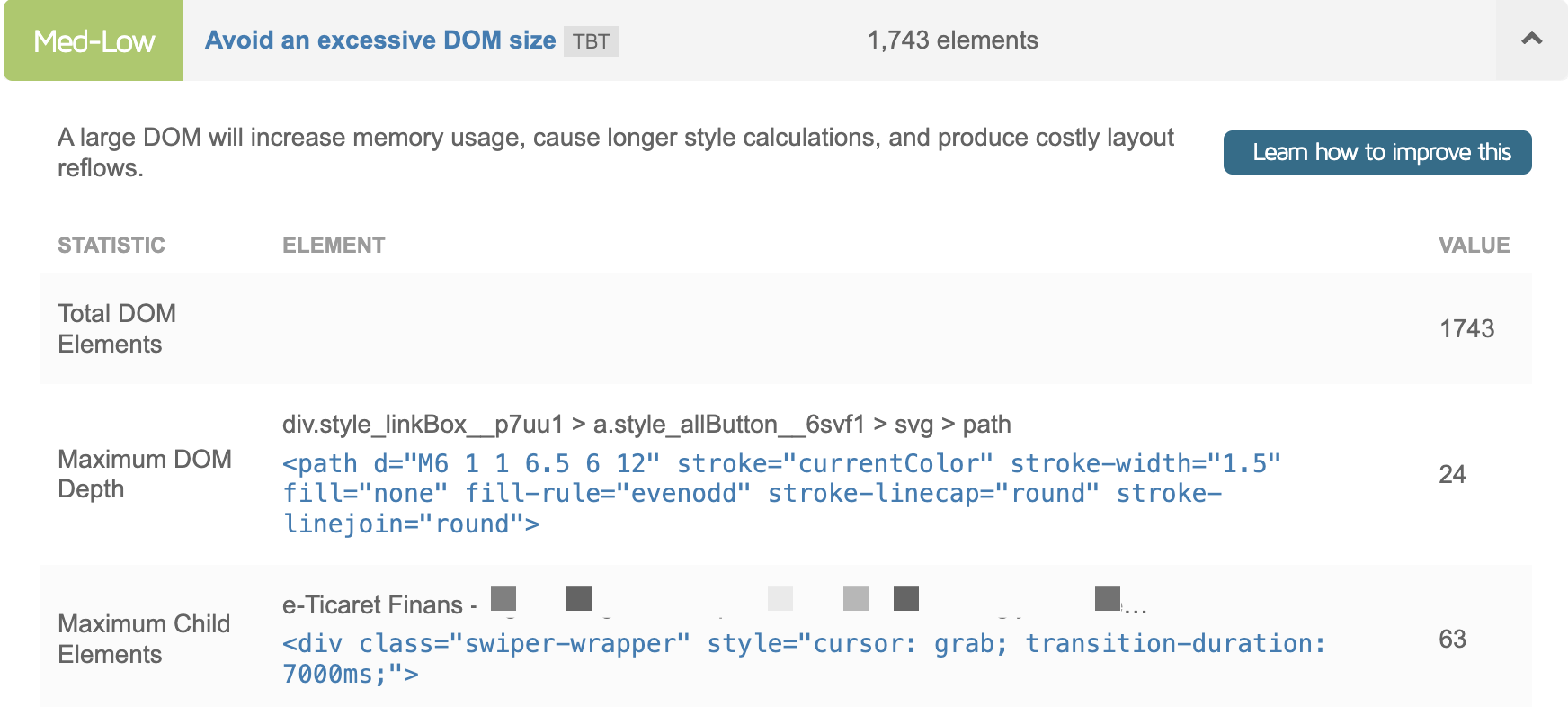

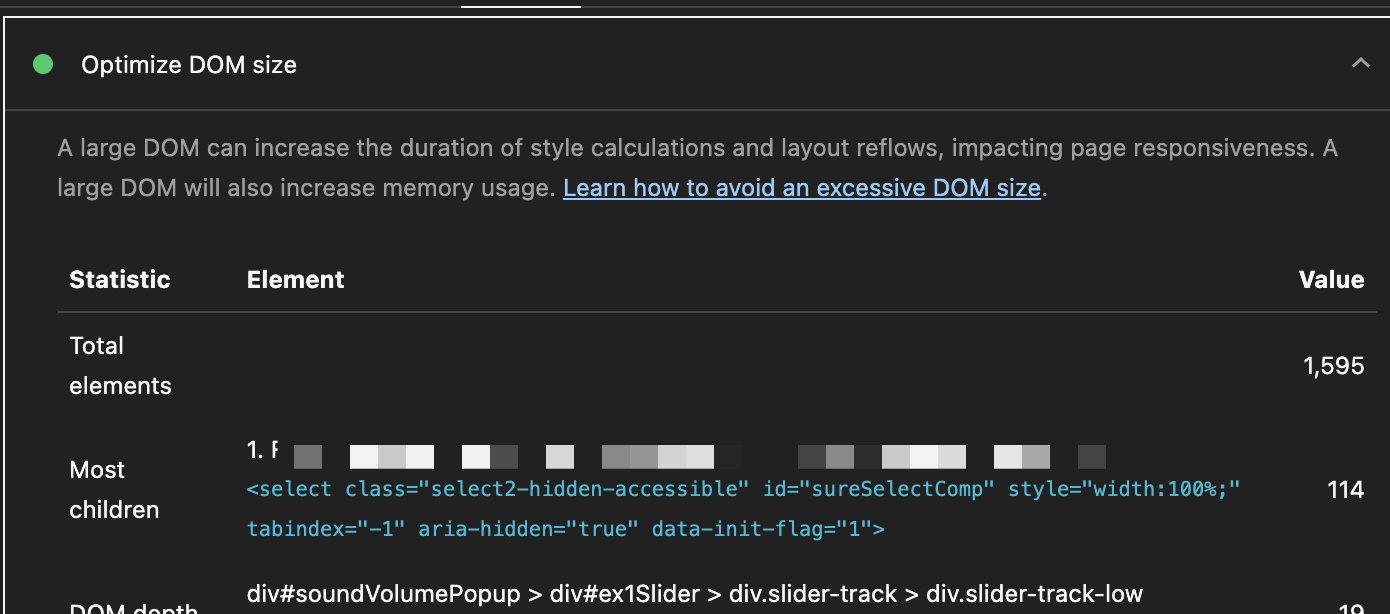

The number of HTML elements on a page and how deeply they are nested directly affects the page load time. This is the main reason we see the "Avoid an excessive DOM size" warning in tools like Google Lighthouse or GTmetrix during audits. We should always present a simple and clean structure for browsers and Googlebot.

Sometimes several unnecessary <div> wrappers are created around a single element, which bloats the DOM. Below is a bad example, in which the profil-karti element sits four levels deep:

<div class="zeo-makale"><div class="zeo-icerik-alani"><div class="zeo-profil-karti-wrapper"><div class="profil-karti"><img src="profil.jpg" alt="Samet Özsüleyman"><h3>Samet Özsüleyman</h3></div></div></div></div>

You can see a good and simple example below:

<section class="zeo-makale">

<div class="profil-karti">

<img src="profil.jpg" alt="Samet Özsüleyman">

<h3>Samet Özsüleyman</h3>

</div>

</section>

When you reduce the DOM size, you can pass this audit in your Lighthouse results.
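To compare the two snippets above in numbers, here is a minimal sketch that counts elements, nesting depth, and the widest parent. It assumes a tree whose nodes expose a children collection. The domStats and el names are my own illustrative helpers, and plain objects stand in for real elements so that the sketch also runs outside a browser:

```javascript
// Walks any tree whose nodes expose a `children` collection.
// In a browser console you could pass document.body instead of plain objects.
function domStats(root) {
  let totalElements = 0;
  let maxDepth = 0;
  let maxChildren = 0;
  const stack = [{ node: root, depth: 1 }];

  while (stack.length > 0) {
    const { node, depth } = stack.pop();
    totalElements += 1;
    maxDepth = Math.max(maxDepth, depth);

    // Array.from also accepts an HTMLCollection, so real elements work too.
    const children = Array.from(node.children || []);
    maxChildren = Math.max(maxChildren, children.length);
    for (const child of children) {
      stack.push({ node: child, depth: depth + 1 });
    }
  }
  return { totalElements, maxDepth, maxChildren };
}

// Hypothetical helper that builds plain-object stand-ins for elements.
const el = (tag, ...children) => ({ tag, children });

// Mirrors the bad example: three wrapper divs around profil-karti.
const nested = el("div", el("div", el("div", el("div", el("img"), el("h3")))));
// Mirrors the good example: section > div > (img, h3).
const flat = el("section", el("div", el("img"), el("h3")));

console.log(domStats(nested)); // { totalElements: 6, maxDepth: 5, maxChildren: 2 }
console.log(domStats(flat));   // { totalElements: 4, maxDepth: 3, maxChildren: 2 }
```

The difference looks small for one profile card, but the same wrapper pattern repeated across a listing page adds up quickly.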

2. JavaScript and CSS Optimization

JavaScript and CSS optimization are topics we encounter everywhere. So how should we optimize them? More accurately, we should think about how to guide developer teams to optimize them.

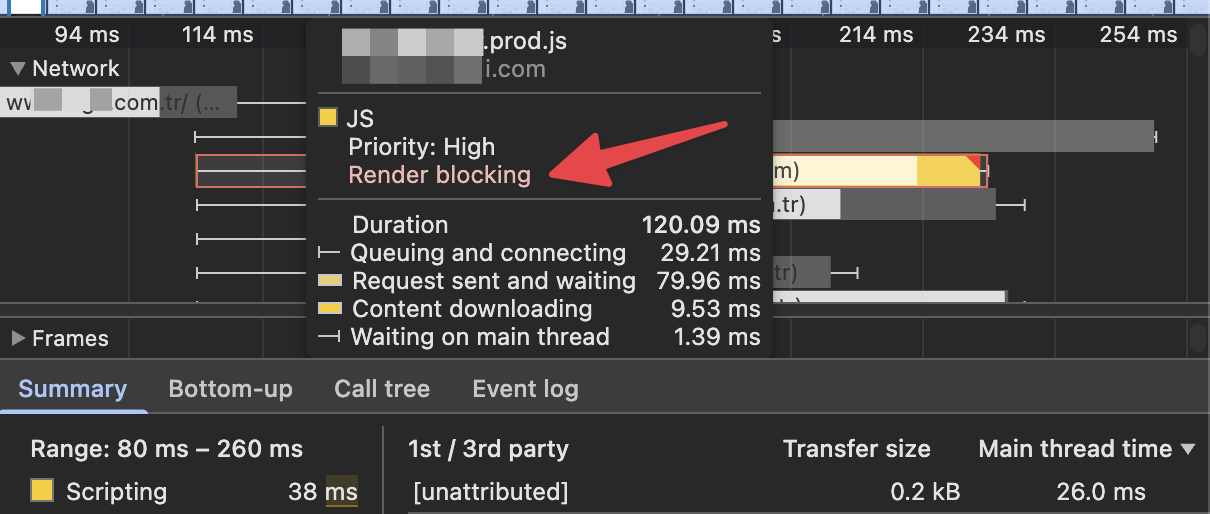

When a browser parses an HTML file and encounters a stylesheet or a script without defer or async, it has to wait: a synchronous script pauses DOM construction until it has been downloaded and executed, and a stylesheet holds back rendering until it has been downloaded and parsed. This waiting is called render-blocking, and it delays the moment your page becomes visible to the user.

You can make your page load faster by deferring JavaScript files that are not critical for the initial page load (for example, a chat bubble or an analytics script). You can see a bad example below:

<head>

<title>Page Title</title>

<link rel="stylesheet" href="style.css">

<script src="analytics.js"></script>

</head>

<body>

...

</body>

We can improve the code above by adding the defer attribute. By doing this, we are telling the browser, "Download this script in the background, but don't run it until you've finished parsing the HTML." This simple change can make a noticeable difference in page load speed:

<head>

<title>Page Title</title>

<link rel="stylesheet" href="style.css">

</head>

<body>

...

<script src="analytics.js" defer></script>

</body>
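If you want to spot such scripts across many templates, a rough check like the one below can help. It is only a sketch: findBlockingScripts is a name I made up, and it uses regular expressions rather than a real HTML parser, so treat the result as a hint and not as a full audit:

```javascript
// Rough audit: list external scripts in <head> that lack defer/async.
// Regex-based on purpose to stay dependency-free; not a full HTML parser.
function findBlockingScripts(html) {
  const head = html.match(/<head\b[^>]*>([\s\S]*?)<\/head>/i);
  if (!head) return [];

  const blocking = [];
  const scriptTag = /<script\b([^>]*)>/gi;
  let match;
  while ((match = scriptTag.exec(head[1])) !== null) {
    const attrs = match[1];
    const src = attrs.match(/\bsrc\s*=\s*["']([^"']+)["']/i);
    // Drop quoted values so a file named "defer.js" is not mistaken for the attribute.
    const attrNames = attrs.replace(/=\s*("[^"]*"|'[^']*')/g, "");
    const isDeferred = /\b(defer|async)\b/i.test(attrNames);
    // Module scripts are deferred by default.
    const isModule = /\btype\s*=\s*["']module["']/i.test(attrs);
    if (src && !isDeferred && !isModule) blocking.push(src[1]);
  }
  return blocking;
}

// The bad example from above, condensed.
const before = `<head><title>Page Title</title>
<link rel="stylesheet" href="style.css">
<script src="analytics.js"></script></head><body>...</body>`;

// The improved example from above, condensed.
const after = `<head><title>Page Title</title>
<link rel="stylesheet" href="style.css"></head>
<body>...<script src="analytics.js" defer></script></body>`;

console.log(findBlockingScripts(before)); // [ 'analytics.js' ]
console.log(findBlockingScripts(after));  // []
```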

3. Rendering Strategy

On sites using modern JavaScript frameworks (like React, Vue, and Angular), how the content is rendered is of vital importance in SEO efforts. Although Googlebot can execute JavaScript, rendering is an additional step, and sometimes Googlebot may not see all of the content. In such cases, solutions like Dynamic Rendering come into play, although Google now describes dynamic rendering as a workaround rather than a long-term solution.

If a site is developed without Google in mind and uses Client-Side Rendering (CSR), for example, the initial HTML from the server is often nearly empty. When Googlebot first fetches the page, it may see something like the following. To see the content, it has to download and execute the app.bundle.js file, which can slow down or even block indexing:

<!DOCTYPE html>

<html>

<head>

<title>Zeo Example Page</title>

</head>

<body>

<div id="root"></div> <script src="app.bundle.js"></script>

</body>

</html>

If SSR (Server-Side Rendering) is used, the server sends the content as fully prepared HTML. What Googlebot sees looks like the following, and as you can see, all important content is present in the initial HTML response. This structure is the ideal scenario for search engines:

<!DOCTYPE html>

<html>

<head>

<title>Zeo Example Page</title>

</head>

<body>

<div id="root">

<main>

<h1>DOM SEO Optimization</h1>

<p>This article explains the importance of DOM optimization...</p>

<img src="seo.jpg" alt="SEO example chart">

</main>

</div>

</body>

</html>
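A quick way to tell the two cases apart is to check whether your key phrases already appear in the raw HTML response, before any JavaScript runs. The sketch below assumes you already have the HTML as a string (for example, copied from "View source"). The textOf and missingPhrases names are hypothetical, and the tag stripping is deliberately crude:

```javascript
// Reduce raw HTML to its text (including the <title>), ignoring scripts and styles.
function textOf(html) {
  return html
    .replace(/<(script|style)\b[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the phrases that are NOT present in the initial HTML.
function missingPhrases(html, phrases) {
  const text = textOf(html).toLowerCase();
  return phrases.filter((phrase) => !text.includes(phrase.toLowerCase()));
}

// Condensed versions of the CSR and SSR responses shown above.
const csrHtml = `<html><head><title>Zeo Example Page</title></head>
<body><div id="root"></div> <script src="app.bundle.js"></script></body></html>`;

const ssrHtml = `<html><head><title>Zeo Example Page</title></head>
<body><div id="root"><main><h1>DOM SEO Optimization</h1>
<p>This article explains the importance of DOM optimization...</p></main></div></body></html>`;

console.log(missingPhrases(csrHtml, ["DOM SEO Optimization"])); // [ 'DOM SEO Optimization' ]
console.log(missingPhrases(ssrHtml, ["DOM SEO Optimization"])); // []
```

If a phrase is reported as missing, that content only exists after JavaScript execution, which is exactly the situation described for CSR.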

4. Usage of HTML5

Semantic tags (<article>, <nav>, <header>, etc.) give valuable clues to search engines about the content and structure of your page. Instead of putting every detail into a <div>, structuring the content with tags appropriate to its meaning helps Google understand your page layout. An example of non-semantic div usage:

<div class="ust-bolum">

<div class="logo">...</div>

<div class="menu">...</div>

</div>

<div class="ana-icerik">

<div class="yazi">

<h1>Title</h1>

<p>Paragraph...</p>

</div>

</div>

<div class="alt-bolum">All rights reserved.</div>

A correct example using semantic HTML5 tags is shown below. If you have pages such as news detail pages, you can plan to use tags like <article> on them. In this example, we clearly tell Googlebot which section is the header, which is the main content, and which is the footer:

<header>

<div class="logo">...</div>

<nav>...</nav>

</header>

<main>

<article>

<h1>Title</h1>

<p>Paragraph details.</p>

</article>

</main>

<footer>All rights reserved.</footer>
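To get a feel for how "div-heavy" a template is, you can count its semantic tags against its divs. This is only a sketch with names I chose myself (SEMANTIC_TAGS, tagCounts), and it works on an HTML string with a simple regular expression:

```javascript
// Tags treated as "semantic" for this check; extend the list as needed.
const SEMANTIC_TAGS = ["header", "nav", "main", "article", "section", "aside", "footer"];

// Count opening tags for div and for each semantic tag in an HTML string.
function tagCounts(html) {
  const counts = { div: 0 };
  for (const tag of SEMANTIC_TAGS) counts[tag] = 0;

  const openTag = /<([a-z][a-z0-9]*)\b[^>]*>/gi;
  let match;
  while ((match = openTag.exec(html)) !== null) {
    const name = match[1].toLowerCase();
    if (Object.prototype.hasOwnProperty.call(counts, name)) counts[name] += 1;
  }
  return counts;
}

// Condensed versions of the two layouts shown above.
const divLayout = `<div class="ust-bolum"><div class="logo">...</div><div class="menu">...</div></div>
<div class="ana-icerik"><div class="yazi"><h1>Title</h1><p>Paragraph...</p></div></div>
<div class="alt-bolum">All rights reserved.</div>`;

const semanticLayout = `<header><div class="logo">...</div><nav>...</nav></header>
<main><article><h1>Title</h1><p>Paragraph details.</p></article></main>
<footer>All rights reserved.</footer>`;

console.log(tagCounts(divLayout).div);      // 6
console.log(tagCounts(semanticLayout).div); // 1
console.log(tagCounts(semanticLayout).main); // 1
```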

5. Using Too Many CSS Selectors

Very specific and long CSS selectors make the browser's style calculation more expensive. Especially on pages with thousands of DOM elements, they lengthen the browser's style calculation time. The example below shows a bad CSS rule with excessive specificity:

div#main-container section.content-area ul li a.active {

color: red;

}

Instead, it would be much better to assign a simple class directly to the target element:

<a class="menu-link is-active">Link</a>

.menu-link.is-active {

color: red;

}
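To find long selectors in an existing stylesheet, you can count how many compound parts each selector has. The sketch below uses a name I made up (compoundCount) and a plain split on combinators, so it will miscount selectors that contain spaces inside attribute values or inside functions like :not():

```javascript
// Count the compound selectors in a selector by splitting on combinators
// (descendant whitespace, >, +, ~). Simplified on purpose.
function compoundCount(selector) {
  return selector
    .trim()
    .split(/\s*[>+~]\s*|\s+/)
    .filter(Boolean).length;
}

console.log(compoundCount("div#main-container section.content-area ul li a.active")); // 5
console.log(compoundCount(".menu-link.is-active")); // 1
console.log(compoundCount("ul > li")); // 2
```

The browser may have to check several ancestors for the first selector, while the second one can be decided by looking at the element itself.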

Additionally, you should check for elements hidden with display: none, because hidden elements are still part of the DOM. Sometimes an FAQ structure appears twice in the HTML, with one copy hidden by display: none, which bloats the DOM.

6. data-* Attributes

The data-* attributes are extremely useful for passing data to JavaScript. However, sometimes these attributes can contain a JSON object. If there are too many of these data attributes, they increase the HTML file size unnoticed and slow down DOM parsing. Let me clarify this situation with an example. On a product listing page of an e-commerce site, the data-product attribute of each product stores a large JSON text containing all details of that product (description, all variations, stock status, etc.):

<div class="product-card"

data-product='{"id": 123, "name": "Awesome Product", "description": "a long description of the product", "variants": [{"sku": "A1", "price": 199}, {"sku": "A2", "price": 205}], "stock": 50, ...}'>

<h3>Awesome Product</h3>

<img src="product.jpg" alt="Awesome Product">

</div>

If, for example, 30 products are listed on the page, this approach seriously increases the HTML file size and the DOM's memory usage. Instead, store only the product's identifier in the DOM. Keep the detailed data in a JavaScript object or fetch it via an API when the user interacts with the product:

<div class="product-card" data-product-id="123">

<h3>Awesome Product</h3>

<img src="product.jpg" alt="Awesome Product">

</div>

<script>

// Store the data in a separate JavaScript object

const productData = {

"123": {"id": 123, "name": "Awesome Product", "description": "...", "variants": [/* ... */]}

};

document.querySelector('[data-product-id="123"]').addEventListener('click', function() {

const productId = this.dataset.productId;

const details = productData[productId];

// Show the details...

});

</script>

This way, the HTML file size is reduced and DOM parsing is accelerated. Because there are fewer bytes to download and parse, the page can start rendering sooner, which can help metrics such as First Contentful Paint (FCP), especially for visitors with slow network connections. Do not forget that there are many more methods besides the optimization techniques I've explained under these subheadings.
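To see how much weight data-* attributes add to a page, you can sum their size in the HTML source. The sketch below is an assumption-laden helper of my own (dataAttributeBytes) that scans an HTML string with a regular expression and only handles quoted attribute values:

```javascript
// Sum the UTF-8 byte size of all quoted data-* attributes in an HTML string.
function dataAttributeBytes(html) {
  const dataAttr = /\sdata-[\w-]+\s*=\s*("[^"]*"|'[^']*')/g;
  const encoder = new TextEncoder();
  let bytes = 0;
  for (const match of html.matchAll(dataAttr)) {
    bytes += encoder.encode(match[0].trim()).length;
  }
  return bytes;
}

// Shortened versions of the two product cards shown above.
const heavy = `<div class="product-card" data-product='{"id": 123, "name": "Awesome Product"}'></div>`;
const light = `<div class="product-card" data-product-id="123"></div>`;

console.log(dataAttributeBytes(light)); // 21
console.log(dataAttributeBytes(heavy) > dataAttributeBytes(light)); // true
```

Multiply the difference by the number of product cards on a listing page to estimate how much HTML the change saves.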

By implementing the points listed above, what have we fixed?

- We have improved our Core Web Vitals metrics, especially our LCP metric, and taken a step toward rising in the rankings.

- We have optimized the crawl budget by taking steps that enable Google to crawl more of your pages.

- Since the pages load faster, we have contributed to the Google Ads Quality Score.

- Most importantly, we have created faster-loading pages by taking the user experience to a higher level. In doing so, we have also taken actions to increase your revenue.

Thank you to everyone who has read this far, and I hope you create lightning-fast landing pages :)