Controlling Site Quality with the "Crawled - currently not indexed" Error

Google Search Console reports many kinds of indexing errors, and one of the most significant is "Crawled - currently not indexed". Unlike many other errors, this one has no direct technical fix. For instance, if it were a "noindex" error, you could remove the "noindex" tag from the page and expect the count to drop. Instead, this report lets us identify which pages on our site fall below Google's quality threshold. Keep in mind that Search Console exports are limited to 1,000 rows when you perform this check.

While a website's overall quality is related to E-E-A-T, Page Quality (PQ) is assessed individually for each page. Therefore, make sure every page offers a high-quality user experience.

What is the "Crawled - currently not indexed" error?

The "Crawled - currently not indexed" error indicates that Googlebot has crawled your page but decided not to index it, usually because it did not consider the page valuable enough. Pages that were already indexed can also end up here: Google may drop older pages from its index and report them under this status.

Why Does Google Crawl Pages but Not Index Them?

In my experience, the main reasons Google crawls a page but does not index it are:

- Pages with very few words or low value.

- Content that provides a poor user experience due to spelling errors.

- Outdated content that hasn't been updated in a long time.

- Lack of internal links.

- Content lacking unique value.

- Other detectable spam techniques.

- Content automatically generated by artificial intelligence without adding any value.

Pages in these categories may be crawled but not indexed. Google often crawls them despite their low quality because the site owner has not guided Googlebot properly; such low-quality pages should be blocked via robots.txt so that Google stops crawling them.
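If you go the robots.txt route, it is worth checking that your Disallow rules block exactly the URLs you intend and nothing else. Below is a minimal sketch using Python's standard `urllib.robotparser`; the rules and URLs are hypothetical examples. Note that this parser only does simple prefix matching and does not understand Google's `*` and `$` path wildcards, so verify wildcard rules with Google's own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /search
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules would block for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    candidates = [
        "https://example.com/tag/seo/",
        "https://example.com/search?q=visa",
        "https://example.com/blog/thailand-visa/",
    ]
    # The tag and search URLs should be blocked; the article should stay crawlable.
    print(blocked_urls(ROBOTS_TXT, candidates))
```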

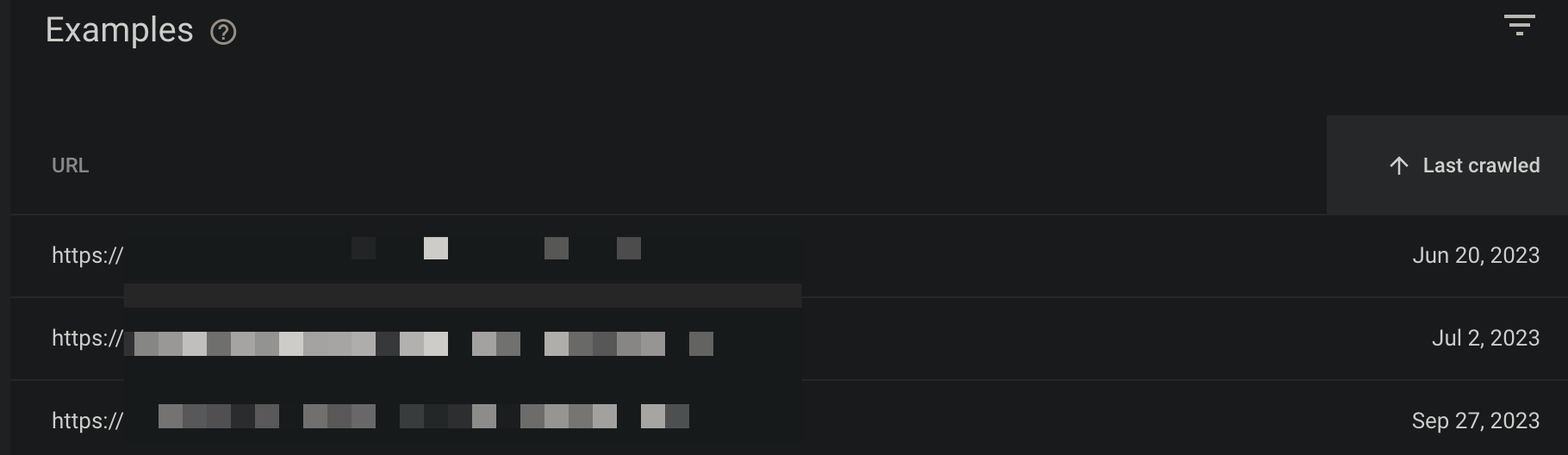

To reduce the number of affected pages, start with a bulk review: identify which page types or specific pages are affected, then note down any elements that could be considered low quality. Also look at the last crawled dates. If they are very old, your crawl budget may not be well optimized, and the report may be showing an outdated picture of those pages.
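For the bulk review, it helps to group the exported URLs by page type. Here is a minimal Python sketch, assuming the export is a CSV with `URL` and `Last crawled` columns and ISO-formatted dates (YYYY-MM-DD); check the column names and date format in your own export and adjust. Treating the first path segment as the "page type" and the 180-day staleness threshold are also assumptions.

```python
import csv
import io
from collections import Counter
from datetime import date
from urllib.parse import urlparse


def page_type(url: str) -> str:
    """Use the first path segment as a rough page type, e.g. /blog/ or /tag/."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return f"/{segments[0]}/" if segments else "/"


def summarize_export(csv_text: str, today: date, stale_days: int = 180):
    """Count affected URLs per page type and collect URLs whose last crawl is old.

    Assumes "URL" and "Last crawled" columns with YYYY-MM-DD dates; adjust
    both to match your actual export.
    """
    by_type = Counter()
    stale = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        url = row["URL"]
        by_type[page_type(url)] += 1
        last_crawled = date.fromisoformat(row["Last crawled"])
        if (today - last_crawled).days > stale_days:
            stale.append(url)
    return by_type, stale


if __name__ == "__main__":
    sample = (
        "URL,Last crawled\n"
        "https://example.com/tag/seo/,2024-01-10\n"
        "https://example.com/tag/ai/,2024-06-01\n"
        "https://example.com/blog/thailand-visa/,2023-03-15\n"
    )
    by_type, stale = summarize_export(sample, today=date(2024, 7, 1))
    print(by_type.most_common())
    print(stale)
```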

How Can the "Crawled - currently not indexed" Error Be Fixed?

Even if your content looks comprehensive on paper, evaluate how well it actually meets user needs. Measure the value it provides to your target audience, and aim to answer users' questions concisely, without unnecessary jargon. Google might initially value your content and show it in the SERPs, but if it doesn't receive sufficient user signals, Google may decide that the page isn't of high enough quality for indexing:

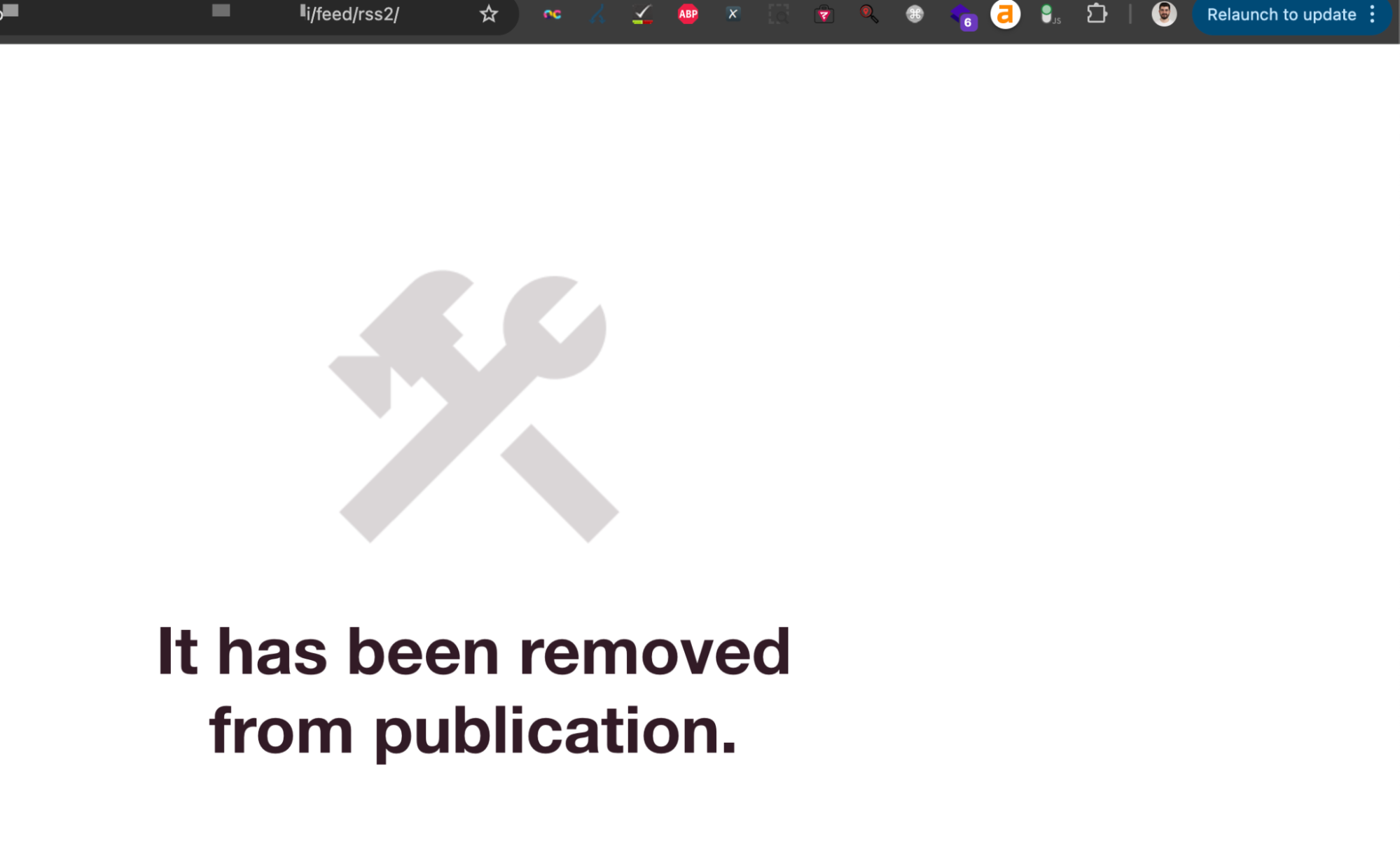

Therefore, take action to improve the content of the affected pages. Don't focus only on the text: broken images can also be a problem, so fix them and use new, original images whenever possible.

Always make these pages part of your internal linking strategy. Link to them from all relevant pages using appropriate anchor text. This increases the number of links pointing to each page and can improve its internal PageRank. Remember, however, that a page that isn't high quality may remain in this report even with hundreds of internal links.
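To see which pages receive little internal link equity, you can run the classic PageRank formula over your own internal link graph, for example the (source, target) pairs exported from your crawler. This is a simplified textbook model with the usual 0.85 damping factor, not how Google actually weighs links today; treat the scores only as a relative comparison between your own pages.

```python
from collections import defaultdict


def internal_pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank over an internal link graph given as (source, target) pairs."""
    pages = sorted({page for edge in links for page in edge})
    if not pages:
        return {}
    n = len(pages)
    grouped = defaultdict(set)
    for source, target in links:
        if source != target:  # ignore self-links
            grouped[source].add(target)
    outlinks = dict(grouped)  # plain dict so lookups below don't create empty entries

    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Rank held by pages with no outgoing links is spread evenly over all pages.
        dangling = sum(rank[page] for page in pages if page not in outlinks)
        new_rank = {page: (1 - damping) / n + damping * dangling / n for page in pages}
        for source, targets in outlinks.items():
            share = damping * rank[source] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    links = [
        ("/", "/blog/"),
        ("/", "/about/"),
        ("/blog/", "/about/"),
        ("/blog/", "/blog/thailand-visa/"),
    ]
    # Lowest-scoring pages first: these are candidates for more internal links.
    for page, score in sorted(internal_pagerank(links).items(), key=lambda kv: kv[1]):
        print(f"{score:.3f}  {page}")
```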

Work on acquiring backlinks. Links from high-quality sources can increase the likelihood of indexing, because a page referenced by popular and reliable sources appears more valuable:

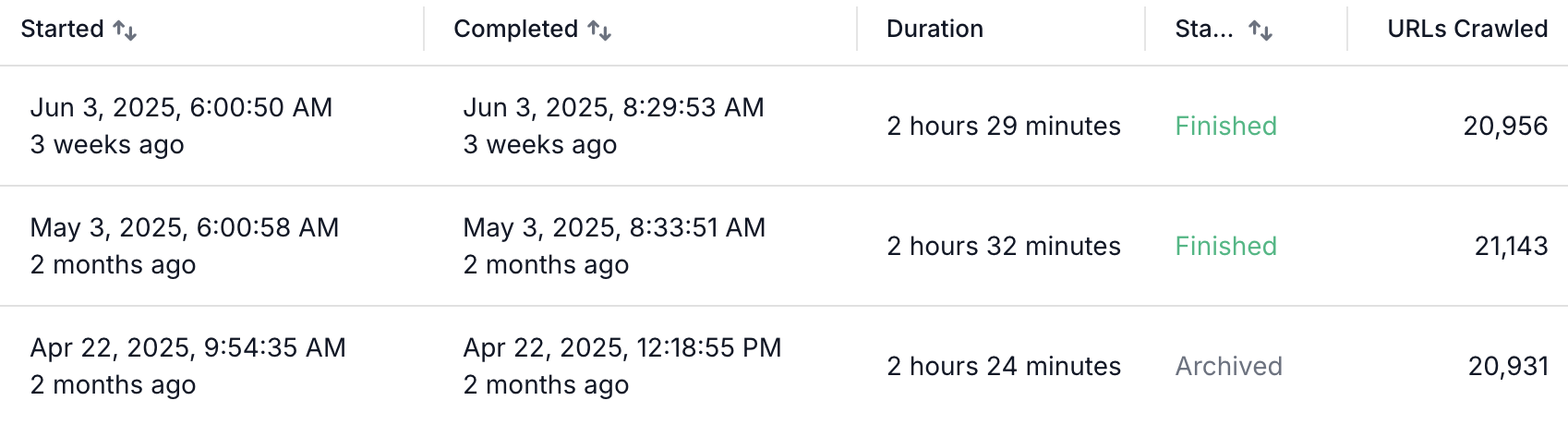

To check for technical SEO issues, re-crawl your site and identify the errors. You may already know about some of them or have them in your work plan, while others, such as newly created parameterized URLs, may be new. Resolving these issues also helps you find problems that affect your crawl budget.
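To spot newly parameterized URLs in a crawl export, a quick sketch like the following groups URLs by their base path and lists the query parameters seen on each. The input list is hypothetical; feed it the URL column from your own crawler.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def parameter_report(urls):
    """For URLs with a query string, map each base URL to (variant count, parameter names)."""
    names_by_base = defaultdict(set)
    variants = defaultdict(int)
    for url in urls:
        parts = urlsplit(url)
        if not parts.query:
            continue  # clean URLs are not reported
        base = f"{parts.scheme}://{parts.netloc}{parts.path}"
        names_by_base[base].update(
            name for name, _ in parse_qsl(parts.query, keep_blank_values=True)
        )
        variants[base] += 1
    return {base: (variants[base], sorted(names)) for base, names in names_by_base.items()}


if __name__ == "__main__":
    crawled = [
        "https://example.com/shop/",
        "https://example.com/shop/?sort=price",
        "https://example.com/shop/?sort=price&page=2",
        "https://example.com/blog/?utm_source=newsletter",
    ]
    for base, (count, names) in parameter_report(crawled).items():
        print(f"{base}: {count} parameterized variant(s), parameters: {', '.join(names)}")
```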

You can also use this error report to find issues that your crawler might have missed and take action:

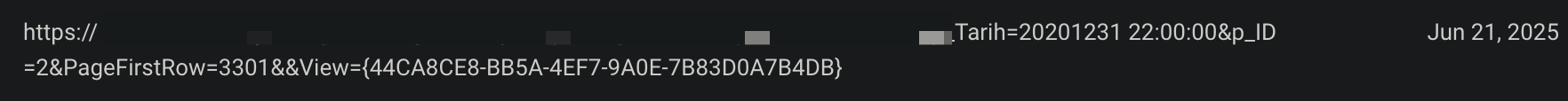

The report can also help you identify soft 404 pages: pages that return a 200 status code but are empty or should really return a 404. Fixing these gives you a clear path to reducing the number of errors.
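Google decides what counts as a soft 404 on its own, but you can pre-screen your crawl data with a rough heuristic. The sketch below flags 200-status pages that have very few words or an error-like title; the `CrawledPage` fields, the 50-word threshold and the phrase list are all assumptions to adapt to your site and crawler export.

```python
from dataclasses import dataclass


@dataclass
class CrawledPage:
    url: str
    status: int
    word_count: int
    title: str


# Hypothetical phrases that often appear on error pages served with a 200 status.
ERROR_PHRASES = ("not found", "404", "no results", "does not exist")


def likely_soft_404s(pages, min_words=50):
    """Return URLs of 200-status pages that are nearly empty or look like error pages."""
    flagged = []
    for page in pages:
        if page.status != 200:
            continue  # soft 404s, by definition, return a success status
        title = page.title.lower()
        if page.word_count < min_words or any(p in title for p in ERROR_PHRASES):
            flagged.append(page.url)
    return flagged
```

Pages this flags still need a manual look: a short page can be perfectly legitimate, as with the press releases mentioned later in this post.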

Tired of reading?

You can also listen to this blog post as a podcast we created with Google NotebookLM on Spotify.

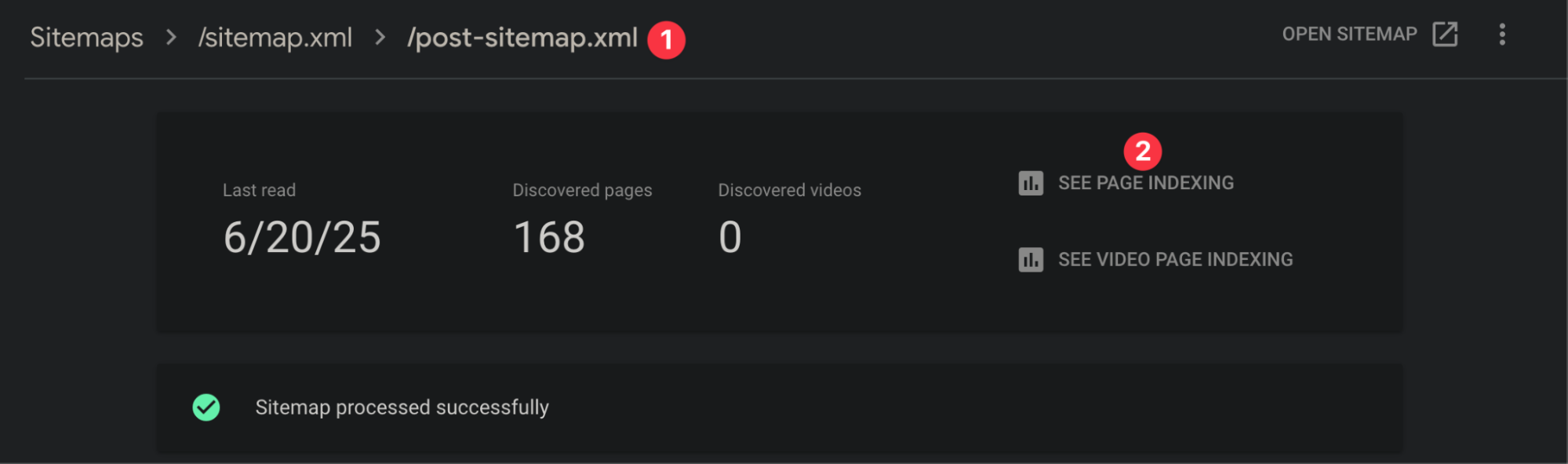

Check your sitemaps to ensure that your crawlable pages are included. If your sitemaps are well-categorized, you can even determine which page types or topics have more errors:

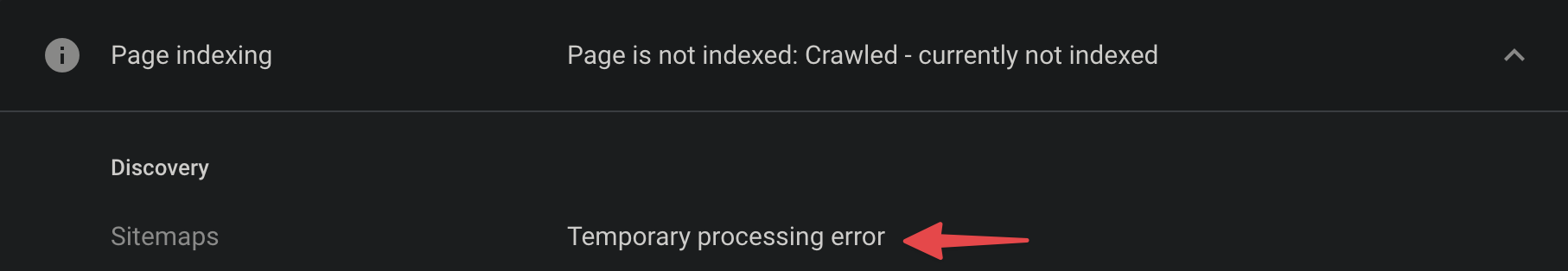
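If you keep separate sitemaps per page type, you can count how many affected URLs fall into each one and find affected URLs that are not in any sitemap. A minimal sketch using the standard library's `xml.etree.ElementTree`; it assumes standard `<urlset>` sitemaps in the sitemaps.org namespace, and the sitemap labels and URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs listed in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def affected_by_sitemap(sitemaps, affected_urls):
    """Count affected URLs per sitemap and list affected URLs found in no sitemap.

    `sitemaps` maps a label (e.g. "blog") to that sitemap's XML text.
    """
    affected = set(affected_urls)
    counts, covered = {}, set()
    for label, xml_text in sitemaps.items():
        hits = affected & sitemap_urls(xml_text)
        counts[label] = len(hits)
        covered |= hits
    return counts, sorted(affected - covered)
```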

Occasionally, there may be a temporary processing error, such as a problem accessing your sitemaps. You can test the sitemap manually:

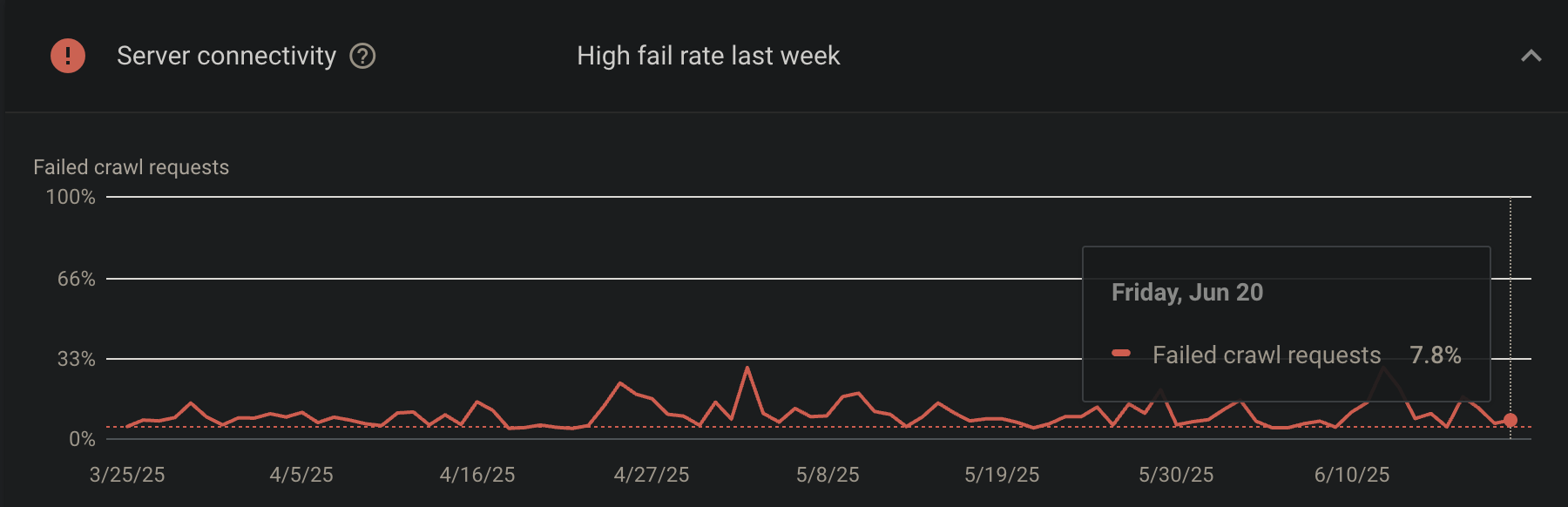
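Besides testing the sitemap manually, you can run a few basic checks locally. This sketch works on the raw bytes of a sitemap you have already downloaded and checks that the XML parses, that the root element is a `urlset` or `sitemapindex`, that the file stays within the sitemaps.org limits of 50,000 URLs and 50 MB uncompressed, and that every `<loc>` is an absolute URL. It is a quick sanity check, not a full validator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB uncompressed, per the sitemaps.org protocol


def check_sitemap(xml_bytes: bytes) -> list[str]:
    """Return a list of problems found in a sitemap or sitemap index (empty if none)."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file is larger than 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return problems + [f"XML does not parse: {exc}"]
    if root.tag not in (NS + "urlset", NS + "sitemapindex"):
        problems.append(f"unexpected root element {root.tag}")
        return problems
    locs = [el.text.strip() if el.text else "" for el in root.iter(NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} entries exceed the {MAX_URLS} limit")
    for loc in locs:
        parts = urlsplit(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
    return problems
```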

Server errors can delay indexing, so keep them to a minimum and improve your TTFB (Time To First Byte) values.

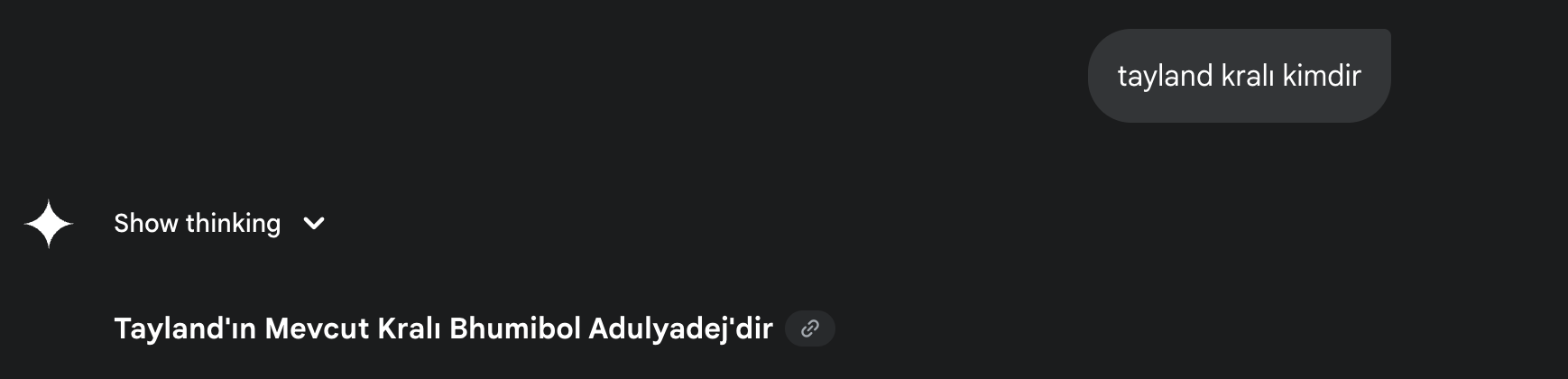
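For a quick, rough TTFB spot check from your own machine, the sketch below times a single GET request with Python's `http.client` until the status line and headers arrive. The timing includes DNS, connection and TLS setup, and it is measured from your location rather than Googlebot's, so use it to spot slow URLs and 5xx responses, not as a replacement for proper monitoring.

```python
import http.client
import time
from urllib.parse import urlsplit


def measure_ttfb(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Return (status code, seconds until the response headers arrived) for one GET.

    Includes DNS lookup and connection setup, so it approximates TTFB as seen
    from this machine.
    """
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path, headers={"User-Agent": "ttfb-check"})
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed = time.perf_counter() - start
        return response.status, elapsed
    finally:
        conn.close()
```

For example, `measure_ttfb("https://example.com/")` (a placeholder URL) returns a `(status, seconds)` tuple. Running it a few times per URL and flagging any status of 500 or above gives you a short list of pages to investigate.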

Using AI tools is perfectly fine, of course. However, some of the unindexed pages in the report may be there because of AI hallucinations, incorrect or missing information, or a poor user experience caused by spelling errors. If your content contains incorrect information, for example, verify and correct the answers it gives:

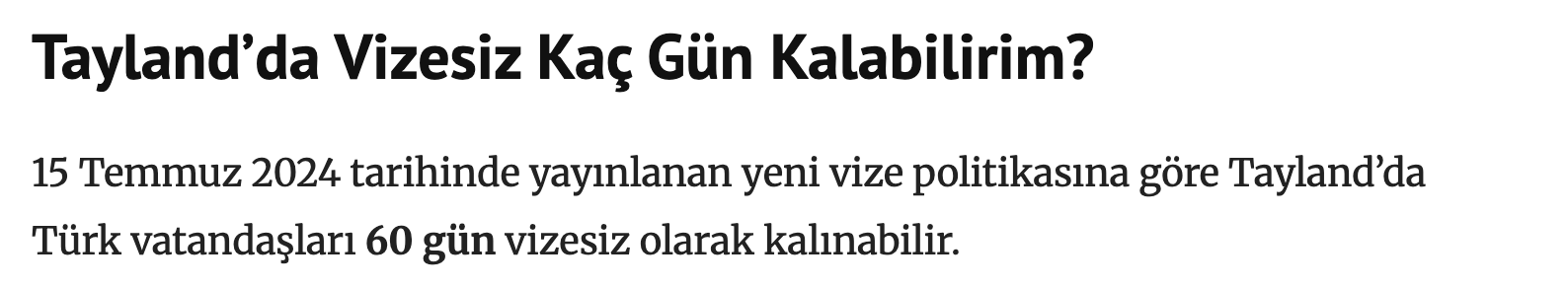

Prioritize content updates based on each page's revenue or contribution. For example, visa policies change frequently: if a page about Thailand visas isn't updated, it gives users incorrect information and may not appear in current search results. Therefore, always strive to be as current as possible, and don't hesitate to remove outdated information from an article. Even small details matter; a SERP screenshot from five years ago, for instance, is outdated, so replace such details carefully too. Finally, make sure external links still work to provide the best user experience:

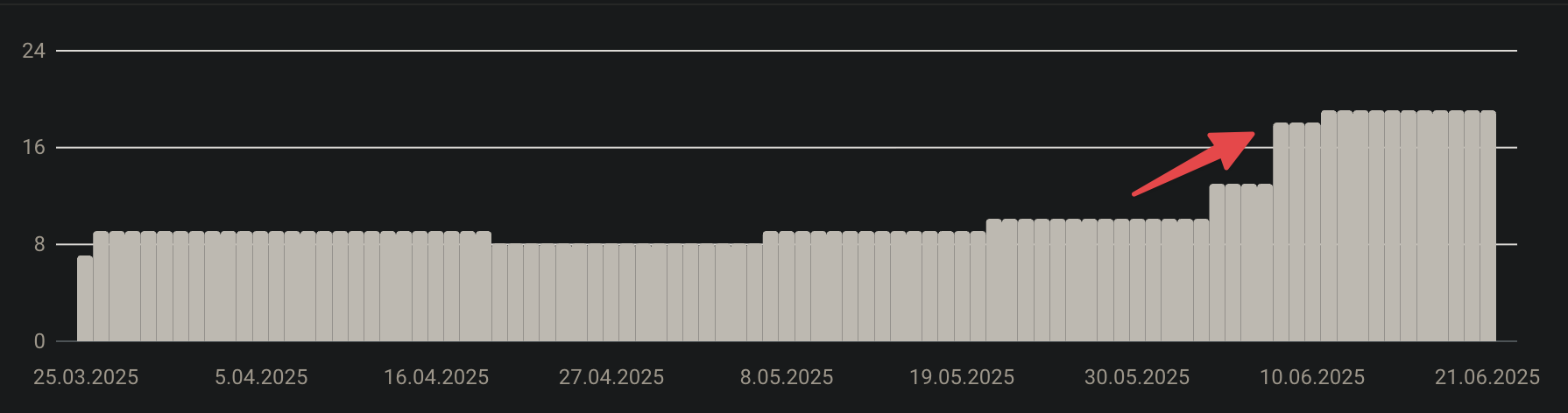
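To turn "prioritize by revenue/contribution" into a concrete to-do list, you can filter the pages that haven't been updated within an allowed period and sort them by contribution. In this sketch, the `revenue` field stands for whatever contribution metric you track, and the per-page `max_age_days` (shorter for fast-changing topics such as visa rules) is a hypothetical threshold.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    revenue: float           # hypothetical contribution metric (revenue, leads, conversions...)
    last_updated: date
    max_age_days: int = 365  # shorter for fast-changing topics such as visa rules


def update_queue(pages, today):
    """Pages older than their allowed age, highest contribution first."""
    stale = [p for p in pages if (today - p.last_updated).days > p.max_age_days]
    return sorted(stale, key=lambda p: p.revenue, reverse=True)


if __name__ == "__main__":
    pages = [
        Page("/thailand-visa/", 500.0, date(2024, 1, 1), max_age_days=90),
        Page("/old-guide/", 100.0, date(2023, 1, 1)),
        Page("/fresh-post/", 1000.0, date(2024, 6, 1)),
    ]
    for page in update_queue(pages, today=date(2024, 7, 1)):
        print(page.url)
```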

Finally, delete your low-quality content entirely or merge very similar pieces. Over the years, I've unpublished or deleted dozens of articles on my blog that I didn't have time to update. Much of their information was outdated, and they contained screenshots of the old Search Console. As a result, you can see the number of URLs in my 404 report increasing.
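To find "very similar content" candidates for merging, one common technique is to compare pages by the Jaccard similarity of their word shingles (overlapping word sequences). A minimal sketch; the 3-word shingle size and the 0.5 threshold are assumptions, and because it compares every pair of pages, it is only practical for a few thousand pages.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences from lowercased text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(docs: dict[str, str], threshold: float = 0.5, k: int = 3):
    """Return (url_a, url_b, similarity) pairs at or above `threshold`, most similar first."""
    sets = {url: shingles(text, k) for url, text in docs.items()}
    pairs = []
    for (url_a, set_a), (url_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((url_a, url_b, score))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)
```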

Sometimes, especially on news sites, there are news articles with very little text. Press releases from institutions, for example, may have a very low word count, and since their titles are often just "press release," Google may exclude them from the index. You can consider these normal and move on to the next URLs.

Once you have taken the actions listed above, Google will reprocess your site at a pace that depends on its size and overall quality. Repeatedly submitting the same URLs for indexing without changing them will not help. Focus on continuous improvement and monitor your Search Console reports periodically. Treat this report not as an error but as an opportunity for improvement. Almost every site has these pages; everyone is battling the same problem.
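For periodic monitoring, you can compare successive exports of the report and see which URLs left it and which are new. A minimal sketch follows; the `URL` column name is an assumption about the export format. Keep the 1,000-row export limit in mind: on a large site, a URL disappearing from the export does not necessarily mean it was indexed.

```python
import csv
from pathlib import Path


def load_export(path):
    """Read the URL column from a CSV export (column name "URL" is an assumption)."""
    with Path(path).open(newline="", encoding="utf-8") as f:
        return {row["URL"] for row in csv.DictReader(f)}


def compare_exports(previous, current):
    """Compare two exports of the same report, each given as an iterable of URLs."""
    before, after = set(previous), set(current)
    return {
        # Gone from the report: indexed, removed, moved to another status, or cut by the export limit.
        "left_report": sorted(before - after),
        "newly_listed": sorted(after - before),
        "still_listed": sorted(before & after),
    }
```

For example, `compare_exports(load_export("june.csv"), load_export("july.csv"))`, with hypothetical file names, shows how the report changed between two checks.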

I've Taken All Actions, But It Hasn't Improved

Even if you implement every possible fix and produce 100% original content, there is no guarantee that the error will go away. Because of quality signals that Google knows and we don't, pages sometimes wait weeks to be indexed, and repeatedly requesting indexing may have no effect. Once you have implemented the corrective actions, though, the number should decrease over time:

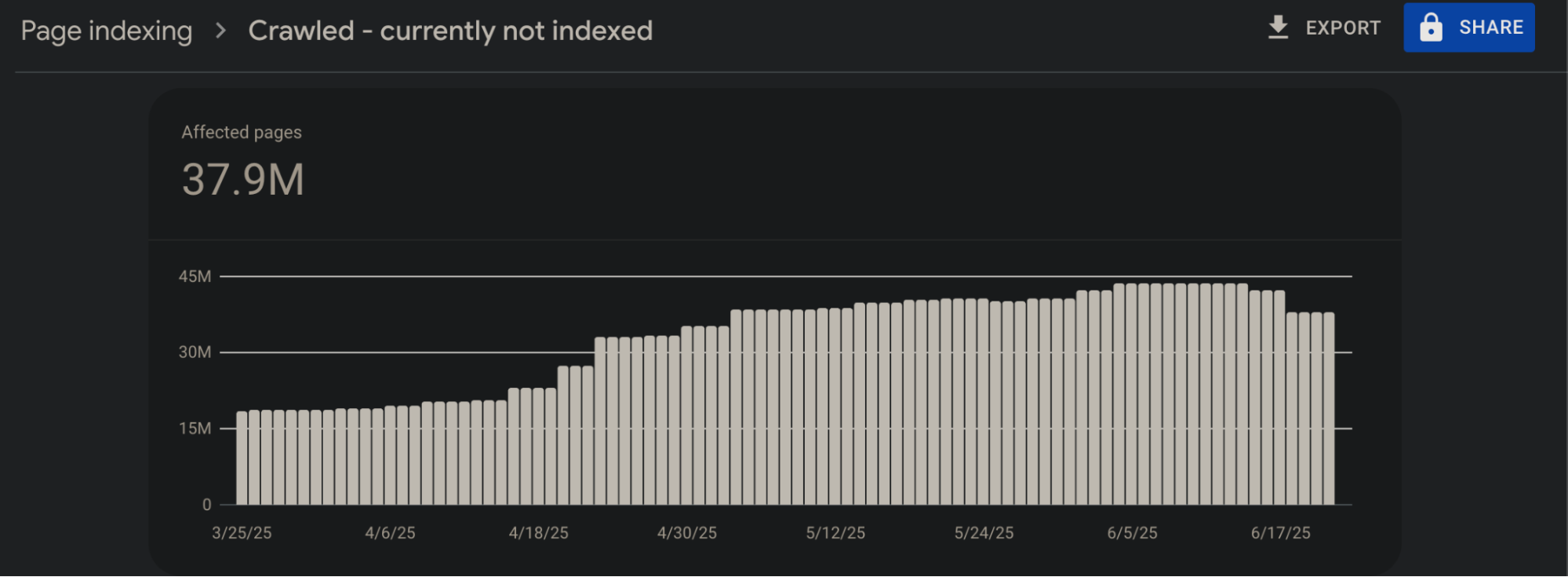

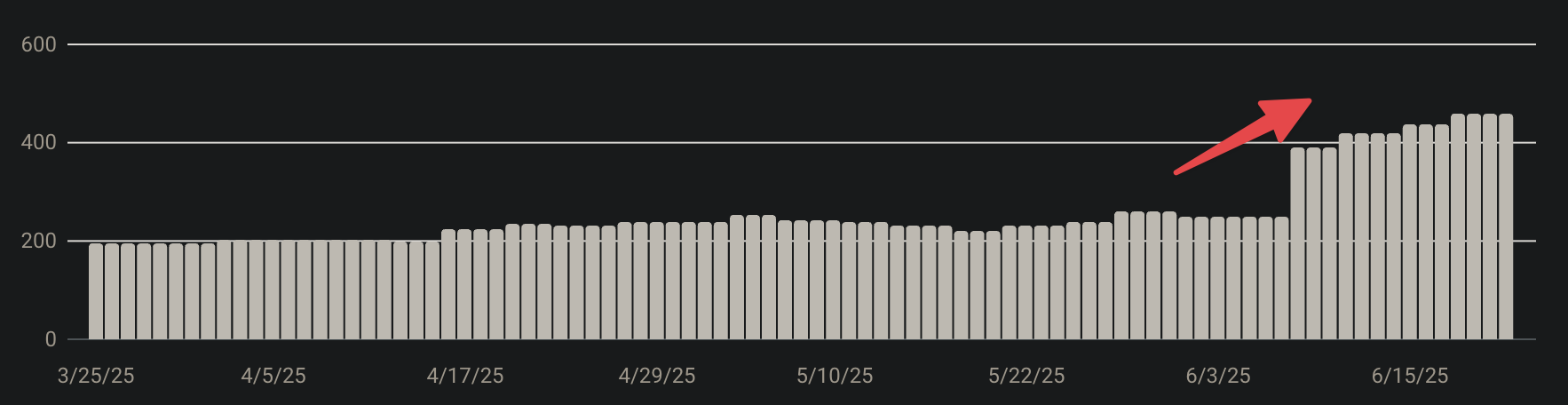

This report has recently become a major topic among SEO professionals:

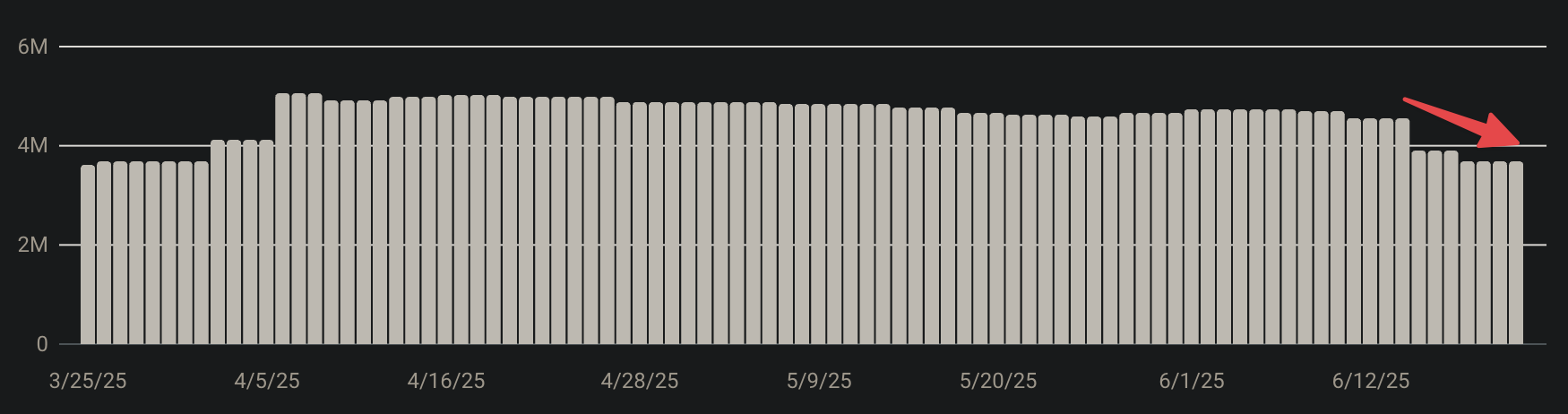

Although Google doesn't fully acknowledge it (treating it as routine index maintenance), since early June it has been dropping URLs from the index on some sites, even URLs that had good traffic and no technical issues that would explain their removal, and this is still ongoing. Perhaps this is an index cleanup; who knows :) Either way, being indexed by Google seems set to become more valuable than ever before.