React SEO: The Best Optimization Methods for React Websites

In this article, you can find examples of how React can be made compatible with SEO practices and how Google crawls this type of website. You can follow the recommendations in this article when changing your infrastructure or creating a new website.

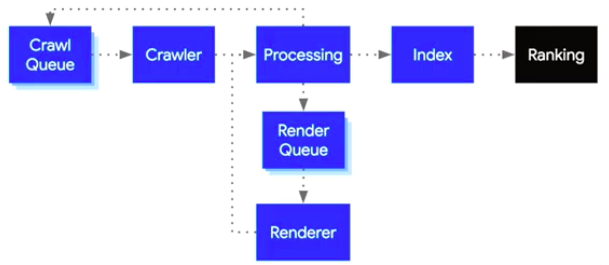

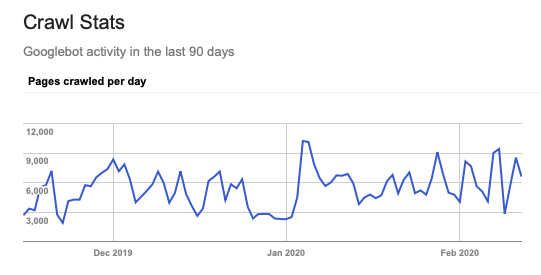

Before moving on to SEO, the image below shows how Google crawls JavaScript and which stages a page goes through before it is indexed. Even considering how many pages Google crawls every day, as long as Googlebot doesn't run into any problems, it can crawl the content completely, index the page, and rank it.

Because Googlebot is now evergreen, it can crawl and index JavaScript websites better than its old version, which was based on Chrome 41. We can start the optimization right after a little background on React.

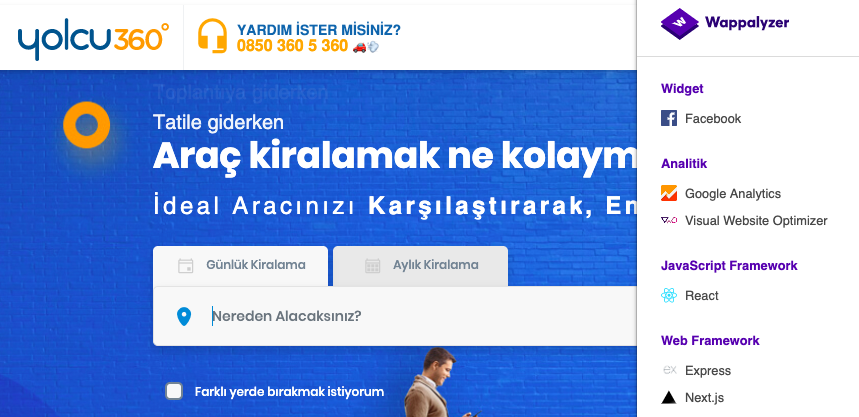

Below you can see an example of a website built with React:

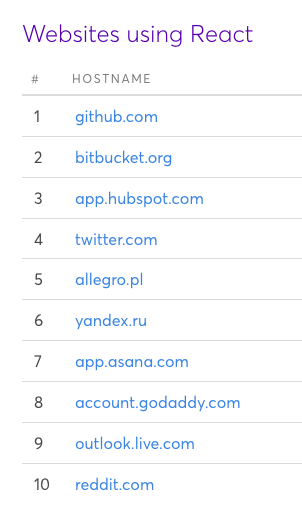

And below you can find 10 of the most important websites built with React:

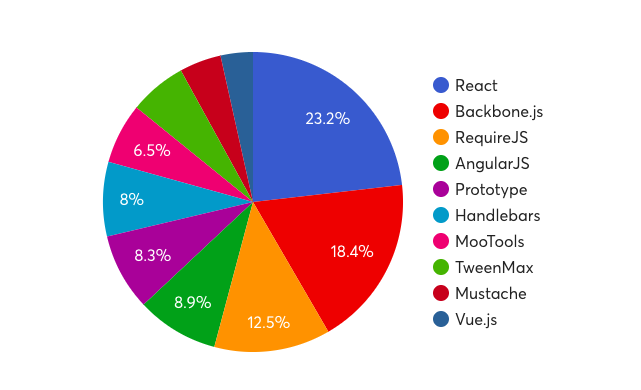

The following image shows which JS frameworks the market leaders use. React ranks first:

Google can render React as well as other JavaScript frameworks. As we will discuss later in the article, the pages only need to include the basic SEO elements.

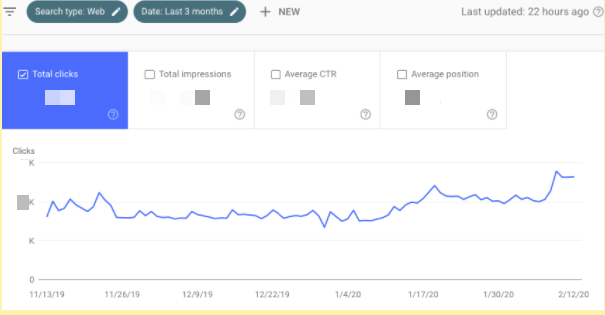

The graph below shows the number of Search Console clicks for a website that uses React. If Googlebot couldn't crawl JS, the website's traffic wouldn't have gone up and the site would have had indexing problems. When React is served to Googlebot in line with the rules, Google doesn't experience any problems in the render stage.

When we examine the crawl statistics, we don't see anything in the last 90 days that could hinder or slow down crawling. So, according to the crawl data as well, Googlebot can read React.

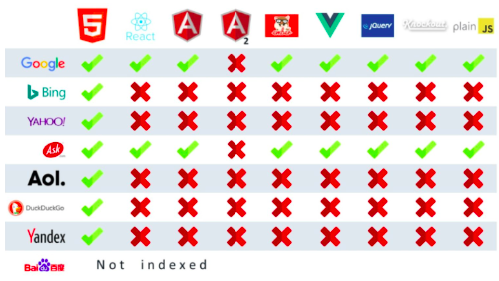

Among the major search engines today, Google supports React, while other search engines do not fully support Angular or other JS frameworks.


React Router Usage

React is typically used to build a SPA (single-page application); however, the SPA model can still work well for SEO as long as we properly apply certain rules and SEO elements on our pages.

For us, it is crucial that every page opens at its own URL without a # (hash). Google has stated that it can't read the hash part of a URL, so it may not index URLs of this kind created with React. That's why each URL should be built so that it opens a separate page.

Example of a not recommended URL that includes a hash: www.nameofwebsite.com/#zeo-react
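To make the difference concrete, here is a minimal sketch that rewrites the hash URL above into a path-based URL. The helper name `hashRouteToPath` is hypothetical and it only handles the simple form shown above; in a real React app, you would get the same result by using a history-based router (for example React Router's `BrowserRouter`) instead of a hash-based one.

```typescript
// Hypothetical helper: turns "host/#route" into "host/route".
// Only the simple form shown above is handled; query strings are not considered.
function hashRouteToPath(url: string): string {
  const hashIndex = url.indexOf("#");
  if (hashIndex === -1) {
    return url; // already a clean, crawlable URL
  }
  // Drop trailing slashes before the hash and leading slashes after it.
  const base = url.slice(0, hashIndex).replace(/\/+$/, "");
  const route = url.slice(hashIndex + 1).replace(/^\/+/, "");
  return route === "" ? base : `${base}/${route}`;
}

const crawlable = hashRouteToPath("www.nameofwebsite.com/#zeo-react");
// crawlable is "www.nameofwebsite.com/zeo-react"
```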

We should use React Router to build the URLs. Below, you can find a sample:

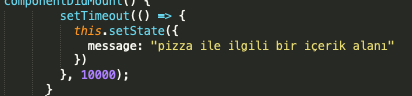

When creating content, we suggest that you avoid using setTimeout to display content after a set number of seconds. In such cases, Googlebot may not wait on the page and might leave the website without being able to read the content.

Case in URL

Google treats URLs as case-sensitive, so the same path written in lowercase and in uppercase is considered to be separate pages.

Example:

/zeo

/Zeo

Google will consider the two example URLs above to be separate pages. Writing all URLs in lowercase avoids duplicate pages.
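One possible way to enforce lowercase URLs is a permanent redirect on the server. This is an assumption on our part rather than something the examples above prescribe. The sketch below only shows the decision logic; the name `lowercaseRedirect` and the `CaseRedirect` shape are hypothetical.

```typescript
// Hypothetical result shape: whether to redirect, and where to.
interface CaseRedirect {
  redirect: boolean;
  location: string;
}

// Decides whether a requested URL needs a redirect to its lowercase form.
// The query string is left untouched because its values can be case-sensitive.
function lowercaseRedirect(requestUrl: string): CaseRedirect {
  const queryIndex = requestUrl.indexOf("?");
  const path = queryIndex === -1 ? requestUrl : requestUrl.slice(0, queryIndex);
  const query = queryIndex === -1 ? "" : requestUrl.slice(queryIndex);
  const lowered = path.toLowerCase();
  return { redirect: lowered !== path, location: lowered + query };
}

const upper = lowercaseRedirect("/Zeo"); // redirect: true, location: "/zeo"
const lower = lowercaseRedirect("/zeo"); // redirect: false, location: "/zeo"
```

A server would answer with a 301 status and the `location` value whenever `redirect` is true, so that only the lowercase URL stays indexable.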

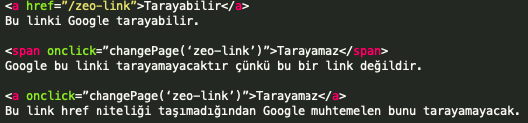

"a href" Usage in Links

Links should always be written with "a href"; unfortunately, Googlebot can't follow links that are only triggered by onclick. Using a href is necessary for Googlebot to find the other relevant pages from the page it is on and visit them.
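As a rough illustration of why this matters, the sketch below collects only the links that appear as an href on an a tag, which approximates what a crawler can discover. The function name `discoverableLinks` and the sample markup are hypothetical, and the regular expression is a simplification rather than a full HTML parser.

```typescript
// Rough approximation of link discovery: collect the href of every <a> tag.
// A regular expression is enough for this illustration, but it is not an HTML parser.
function discoverableLinks(html: string): string[] {
  const anchorHref = /<a\s[^>]*?href\s*=\s*"([^"]*)"/gi;
  const links: string[] = [];
  let match = anchorHref.exec(html);
  while (match !== null) {
    links.push(match[1]);
    match = anchorHref.exec(html);
  }
  return links;
}

// Hypothetical markup: one real link and one element that navigates via onclick.
const markup =
  '<a href="/react-seo">React SEO</a>' +
  '<span onclick="goTo(\'/hidden-page\')">Hidden page</span>';

const found = discoverableLinks(markup);
// found contains only "/react-seo"; the onclick target is never discovered
```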

React Helmet

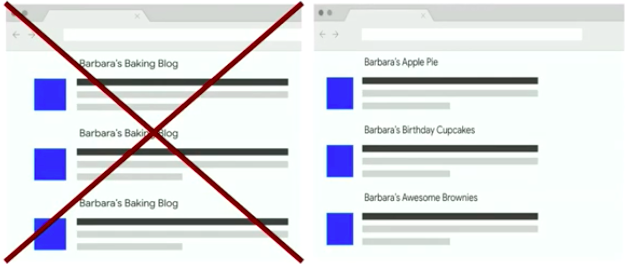

Metadata, one of the building blocks of SEO, should always appear in the source code, even when React is used. Using the same description and title on every page does not help with CTR or other SEO metrics:

Image: Martin Splitt, React Developers

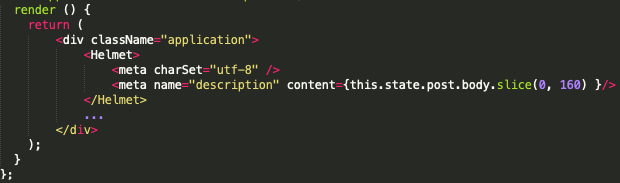

React Helmet helps with this. Below is a sample code structure that includes metadata:

If the description element doesn't work, or a description can't be provided either automatically or specifically for that page, you can fill in the description with the first 160 characters of the page content.
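The fallback described above can be sketched as a small function. This is a minimal illustration, not part of React Helmet's API; the name `metaDescription` and its parameters are hypothetical.

```typescript
// Hypothetical fallback: use the given description when present, otherwise
// take the first 160 characters of the page content with whitespace collapsed.
function metaDescription(
  description: string | undefined,
  pageContent: string,
  maxLength: number = 160
): string {
  const explicit = (description ?? "").trim();
  if (explicit !== "") {
    return explicit;
  }
  const text = pageContent.replace(/\s+/g, " ").trim();
  return text.slice(0, maxLength).trim();
}

const longContent = "React can be made compatible with SEO practices. ".repeat(10);
const description = metaDescription("", longContent);
// description holds at most 160 characters taken from the start of longContent
```

The resulting string can then be passed to whatever renders the description meta tag for the page.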

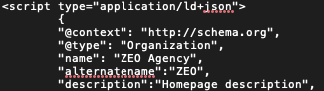

Besides metadata, structured data items (Product schema, Organization schema, etc.) should also be in the source code.
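As a minimal sketch of what such structured data can look like in the source code, the function below builds a JSON-LD script tag for a Product. The `ProductInfo` type, the function name `productJsonLd`, and the sample values are hypothetical, and only two Product properties are included for brevity.

```typescript
// Hypothetical input type with just two schema.org Product properties.
interface ProductInfo {
  name: string;
  description: string;
}

// Builds a JSON-LD <script> tag that can be placed in the page source.
function productJsonLd(product: ProductInfo): string {
  const data = {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    description: product.description,
  };
  // Escape "<" so that product text can never close the script tag early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

const productTag = productJsonLd({
  name: "Sample product",
  description: "A hypothetical product used for illustration",
});
```

When the tag is rendered on the server, it appears in both the raw and the rendered HTML.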

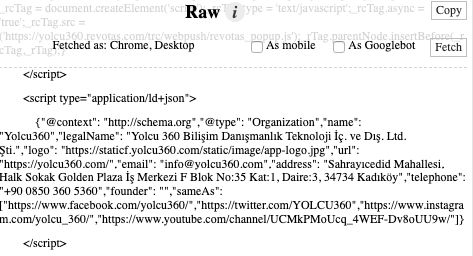

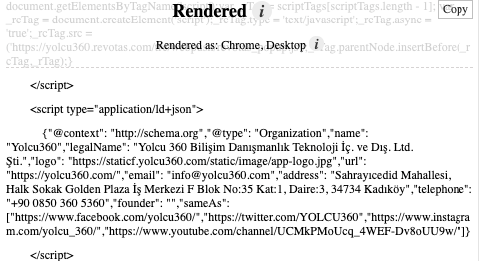

As an example, you can see the raw and rendered versions of yolcu360.com below. The structured data appears intact in both the raw and the rendered version. There is no difference between them.

Raw;

Rendered;

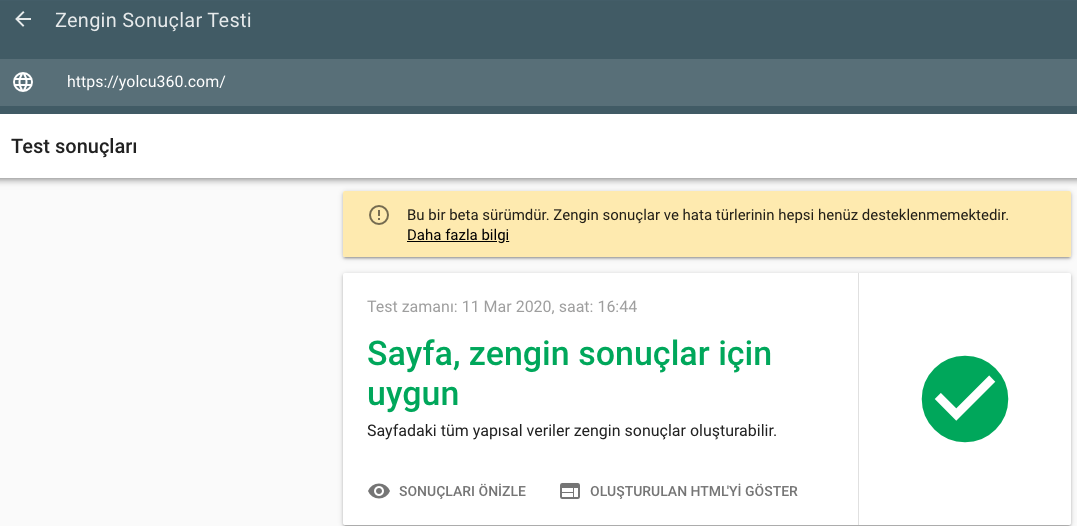

When we test the page with the Rich Results Test tool, Googlebot can see the structured data on the page.

By using Server-Side Rendering and Helmet together, you can show Google the metadata in <head> as well as the content and other elements in <body>. Remember that the content should be present in the DOM. We also suggest that you take a look at Dynamic Rendering.

Any errors or gaps in the metadata may have a negative impact on all metrics in search results.

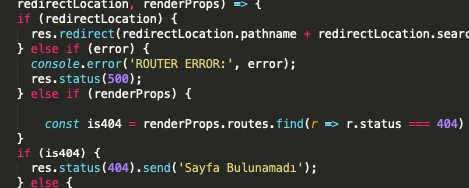

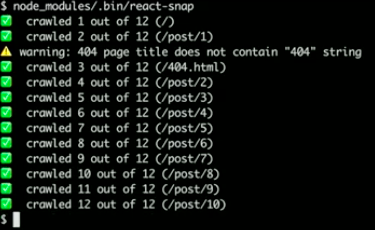

404 Error Code

If a page is broken or does not exist, it should return a 404 status code. Remember that files such as route.js and server.js should be set up for this.
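The important point is that the server answers with a real 404 status for unknown URLs instead of a 200 response that merely displays a "not found" message. Below is a minimal sketch of that decision, assuming a hypothetical route list `knownRoutes` and a hypothetical helper `statusFor`; a real setup would take its routes from the router configuration.

```typescript
// Hypothetical route table; a real app would derive it from its router configuration.
const knownRoutes: string[] = ["/", "/blog", "/contact"];

// Returns the HTTP status code the server should send for a requested path.
function statusFor(path: string, routes: string[]): number {
  // Ignore trailing slashes so "/blog/" and "/blog" resolve to the same route.
  const normalized = path.length > 1 ? path.replace(/\/+$/, "") : path;
  return routes.indexOf(normalized) !== -1 ? 200 : 404;
}

const existing = statusFor("/blog", knownRoutes); // 200
const missing = statusFor("/no-such-page", knownRoutes); // 404
```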

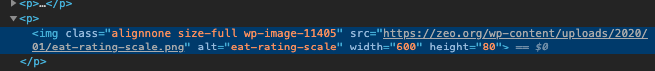

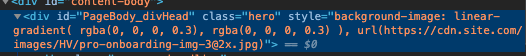

A Reminder for Images

On-page images should always be included with "img src"; Google has stated that images loaded in other ways may show up in its tools without any problems, but they still can't be indexed.

Proper use: an <img> element with a src attribute.

Using CSS backgrounds and similar techniques with React will lead to problems in indexing images.

Wrong use: an image that is only set as a CSS background on an element.

React.lazy

Using lazy loading both lets users explore the website faster and has a positive effect on our page speed score in Google. For more details, you can check the page Using React with lazy load.

You can use react-snap to improve website speed.

Compared to other JS frameworks such as Angular or Vue, React can produce smaller files and doesn't load unnecessary code, which has a positive effect on page speed. For instance, you can split a 2 MB JS file into files of 60-70 KB and load them separately.

We can say that the most important topics in React in terms of SEO are React Router, react-snap, and React Helmet.

When using JavaScript, don't forget that Google analyzes and crawls HTML websites better than JavaScript ones; however, as mentioned above, this doesn't mean it can't crawl JS-based websites. Websites that use JavaScript simply need to be a bit more careful.

See you in other articles :)